Data is the fuel that powers modern businesses, and data warehouses are the engines that make sense of it all. As the world becomes more data-driven, organizations need robust infrastructure to collect, process, and analyze the massive volumes of data at their disposal. Snowflake and BigQuery are two of the leading cloud data warehouses on the market today, offering solid scalability, ease of use, and advanced analytical capabilities. But which one is right for your needs?

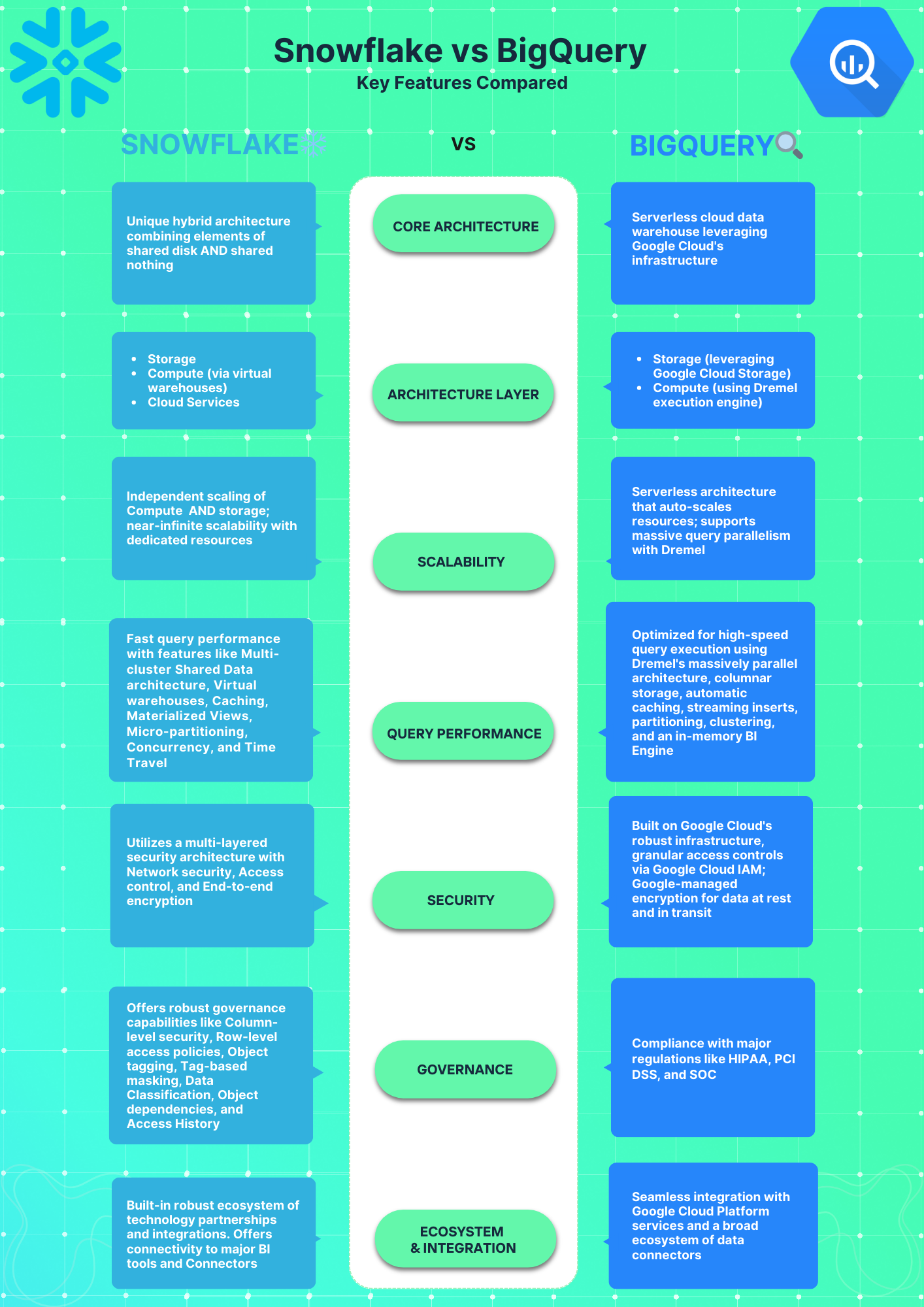

In this article, we'll compare Snowflake vs BigQuery ( ❄️ vs 🔍 ) in 7 essential areas: architecture, scalability, performance, security, pricing models, use cases, and integration ecosystem. We'll also evaluate the key benefits and drawbacks of each platform and help you decide which one is best suited to your needs.

Let's dive right in!

Snowflake vs BigQuery — Clash of Data Warehouse Titans

Need a fast rundown on Snowflake vs BigQuery? This brief overview highlights the main distinctions between the two.

What is BigQuery?

Google BigQuery is a fully-managed and serverless data warehouse offered as part of Google Cloud Platform. It enables organizations to perform analytics on large datasets by leveraging Google's infrastructure. BigQuery is based on Dremel, Google's distributed query engine. It supports ANSI SQL queries and can handle petabyte-scale datasets.

Key features of BigQuery are:

- Serverless architecture: No infrastructure to manage. BigQuery automatically handles provisioning and management.

- Columnar storage: Data is stored in a proprietary columnar format called Capacitor, which is designed for efficient compression and scanning.

- Dremel query engine: Executes SQL queries using distributed and hierarchical trees of operations.

- Automated optimization: Handles query optimization, execution planning, and resource management.

- Autoscaling: BigQuery elastically scales to handle query loads without user intervention.

- Streaming analytics: Supports streaming inserts for real-time analytics.

- Machine learning: BigQuery ML allows creating ML models using SQL without manual coding.

- Integrated robust analytics: Seamlessly combines data visualization, monitoring, and orchestration on GCP.

What is Snowflake?

Snowflake is a cloud-native data warehouse delivered as software-as-a-service (SaaS). It uses a unique architecture that separates storage from compute. This enables independent scaling of resources.

Key capabilities and components of Snowflake include:

- Shared Architecture: Snowflake uses a shared data architecture where storage is separate from compute, enabling independent scaling.

- Virtual Warehouses: Compute resources are provided via auto-scaling virtual warehouses that can be spun up or down on demand.

- Micro-partitioned Storage: Data is stored in small micro-partitions for faster queries and scalability.

- Time Travel & Fail-safe: Snowflake provides data protection and recovery capabilities like time travel and fail-safe features.

- Secure Data Sharing: Snowflake allows securely sharing data across accounts without data movement or duplication.

- Diverse Workloads: Snowflake supports diverse analytical workloads including analytics, data science, machine learning and application development.

- Multi-Cloud: Snowflake runs on all major cloud platforms - AWS, Azure and GCP.

- Auto-scaling & Auto-suspend: Snowflake automatically scales up and down virtual warehouses and suspends unused resources to optimize costs.

- Zero Ops: Snowflake requires zero management of hardware or software.

- Semi-structured Data: Snowflake supports semi-structured data through VARIANT data types.

- Security: Snowflake provides comprehensive security features like network policies, access controls, and authentication.

To fully understand Snowflake's capabilities, architecture, security, and features, read this in-depth article, which covers everything you need to know about Snowflake.

7 Critical Factors for Comparison—Snowflake vs BigQuery

Now that we've provided an overview of Snowflake and BigQuery, let's do a deep-dive comparison across 7 key factors:

- Architecture

- Scalability

- Performance

- Security

- Pricing Models

- Use Cases

- Integrations

Analyzing how Snowflake and BigQuery stack up across these key areas will help you understand their strengths, weaknesses, and ideal use cases.

1. Snowflake vs BigQuery — Architecture Comparison

The underlying architecture has significant implications on performance, scaling, security, and other capabilities.

Let's examine the architectural approaches of Snowflake and BigQuery in detail.

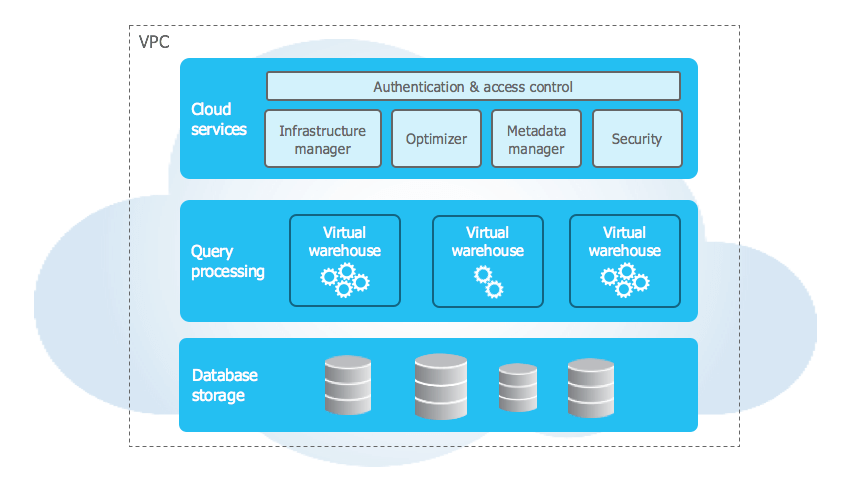

Snowflake Architecture

Snowflake utilizes a unique hybrid architecture that combines elements of shared disk and shared nothing architectures for optimal performance, scalability, and flexibility. The storage layer uses centralized cloud storage, like a shared disk architecture. All data loaded into Snowflake is stored in cloud storage buckets and is accessible to all compute resources, which provides a single source of truth for data. Snowflake optimizes storage using micro-partitioning, compression, and columnar storage formats. The compute layer leverages independent virtual warehouses, like a shared nothing architecture. Virtual warehouses provide on-demand compute clusters for executing queries in parallel. Their independence ensures fast, scalable query processing.

Snowflake's architecture layers:

- Storage layer: Manages structured, semi-structured and unstructured data storage and optimization. Fully managed by Snowflake.

- Compute layer: Scalable virtual warehouses execute queries in parallel as independent MPP compute clusters.

- Cloud services layer: Handles all services like authentication, metadata, optimization, access control. Runs on instances managed by Snowflake.

To learn more in-depth about Snowflake's capabilities and architecture, check out this detailed article.

Google BigQuery architecture

BigQuery is Google's fully managed, petabyte-scale, low-cost enterprise data warehouse designed for business intelligence. It enables fast SQL analytics over massive datasets in Google Cloud. BigQuery's serverless architecture fully separates compute and storage, allowing each to scale independently. This gives customers the flexibility to use as much or as little of each resource as their workloads need. But before we discuss its architecture, let's look at where BigQuery fits in the data lifecycle.

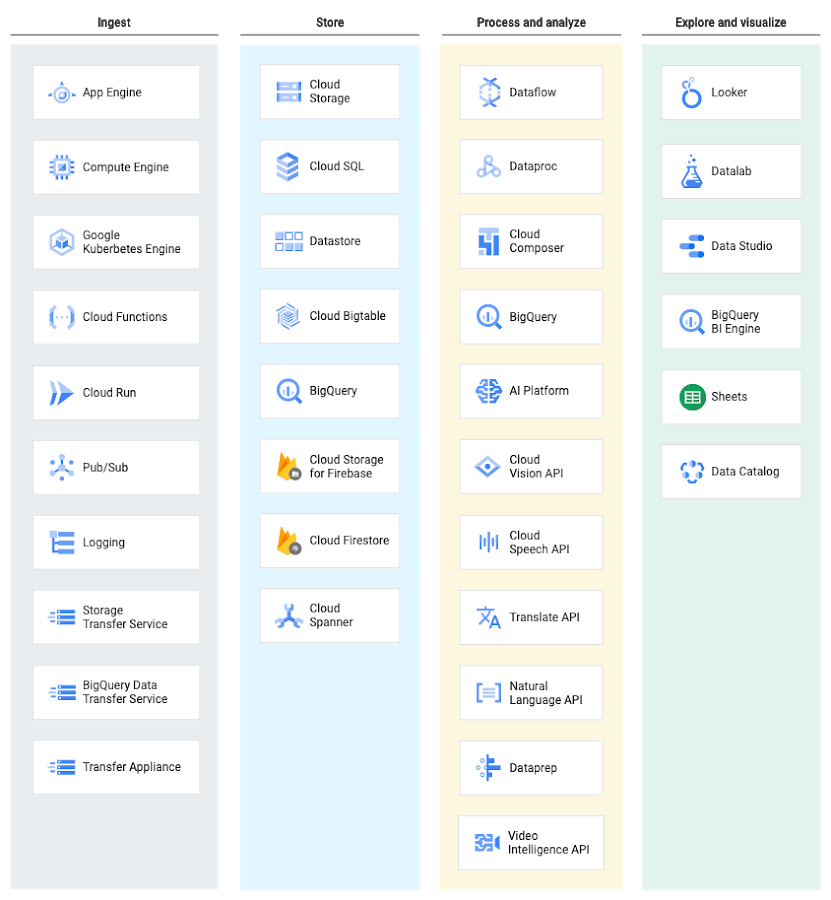

How Google BigQuery Fits Into the Data Lifecycle

BigQuery is a core component of Google Cloud's end-to-end data analytics platform. It covers stages across the data lifecycle, including:

- Ingestion — Loading data into BigQuery from batch or streaming sources

- Processing — Transforming, cleansing, and preparing data for analysis

- Storage — Storing large datasets cost-effectively

- Analysis — Enabling interactive SQL, BI, and machine learning

- Collaboration — Sharing datasets, dashboards, and insights

BigQuery integrates tightly with other Google Cloud data services like Cloud Storage, Pub/Sub, Dataflow, and Looker. This allows users to assemble an optimized serverless data warehouse architecture on GCP.

At each phase of the life cycle, Google Cloud provides multiple services to fit specific data needs and workflows. BigQuery delivers a fast, scalable, and fully-managed data warehouse at the core of this ecosystem.

Now, let’s discuss its architecture.

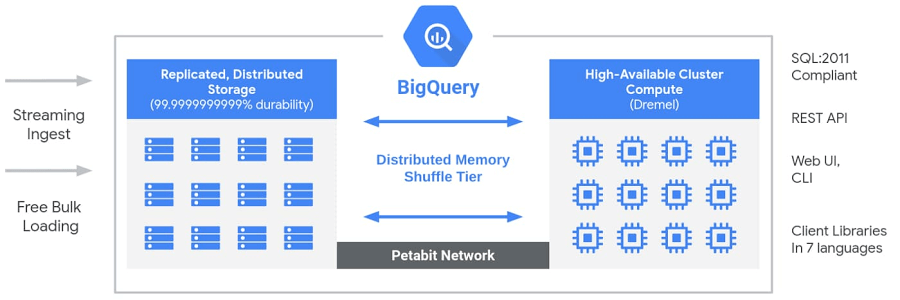

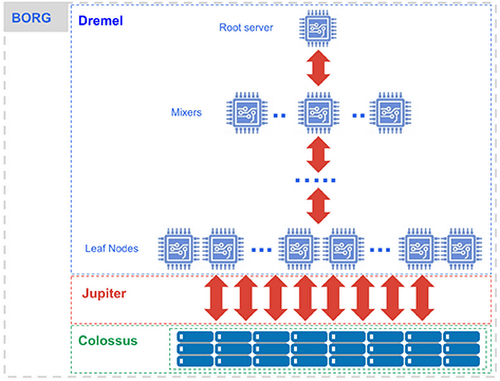

Storage

At its core, BigQuery relies on a distributed storage system called Colossus. Colossus is Google's distributed file system, optimized for sequential access to huge datasets. It handles replication, recovery, and management of storage clusters spread across data centers globally. Because storage is decoupled from compute, Colossus lets BigQuery scale to petabytes of stored data without provisioning additional compute resources, unlike traditional data warehouses that scale the two together. The data itself is stored in a columnar format with advanced compression, which suits the large-scale aggregations and analytics BigQuery is designed for. A columnar engine reads only the columns a query references instead of full rows, which reduces I/O and significantly improves performance.
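To make the benefit of columnar storage concrete, here is a minimal Python sketch that compares how many cells a row-oriented scan and a column-oriented scan touch for the same aggregation. The table, the column names, and the "cells read" metric are illustrative assumptions, not a description of BigQuery's internal format.

```python
# Toy comparison of row-oriented vs column-oriented scans.
# The table, column names, and "cells read" metric are illustrative
# assumptions, not BigQuery internals.

rows = [
    {"user_id": 1, "country": "US", "revenue": 120.0},
    {"user_id": 2, "country": "DE", "revenue": 80.0},
    {"user_id": 3, "country": "US", "revenue": 45.5},
]

# Column-oriented layout: one list per column.
columns = {name: [row[name] for row in rows] for name in rows[0]}


def sum_revenue_row_store(table):
    """Scan every row; reading a row touches all of its cells."""
    cells_read = 0
    total = 0.0
    for row in table:
        cells_read += len(row)
        total += row["revenue"]
    return total, cells_read


def sum_revenue_column_store(cols):
    """Read only the single column the query needs."""
    values = cols["revenue"]
    return sum(values), len(values)


row_total, row_cells = sum_revenue_row_store(rows)
col_total, col_cells = sum_revenue_column_store(columns)
print(f"row store:    total={row_total}, cells read={row_cells}")
print(f"column store: total={col_total}, cells read={col_cells}")
```

Both scans return the same total, but the column-oriented one touches a third of the cells in this three-column table. The gap widens as tables get wider.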

Compute

For processing, BigQuery leverages Dremel, Google's scalable, multi-tenant distributed SQL query engine. Dremel breaks each incoming query into smaller units of work and runs them on slots, BigQuery's units of compute capacity, spread across servers in the Dremel cluster for parallel processing. The number of slots allocated scales dynamically based on the current query workload and available resources, which allows BigQuery to use thousands of CPUs concurrently for a single query when needed.

Dremel compiles each SQL query into an execution tree. Leaf nodes process data in parallel, and intermediate nodes combine (mix) their partial results at each level before passing them up toward the root. This tree structure allows Dremel to handle complex SQL operations like joins, aggregations, and subqueries. The engine is fully managed internally, so users simply interact with it through the familiar SQL interface.
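The tree-shaped execution described above can be illustrated with a small Python sketch. It is a toy model only: the shard contents, the fan-in, and the function names are hypothetical and are not part of Dremel or any BigQuery API.

```python
# Toy tree aggregation: leaves compute partial results over data shards,
# and intermediate "mixer" nodes merge them on the way up to the root.
# Shard contents, fan-in, and function names are illustrative assumptions.

def leaf_partial(shard):
    """Each leaf scans its own shard and returns a partial (sum, count)."""
    return sum(shard), len(shard)


def mix(partials):
    """A mixer merges the partial results produced by its children."""
    total = sum(p[0] for p in partials)
    count = sum(p[1] for p in partials)
    return total, count


def tree_aggregate(shards, fan_in=2):
    """Combine leaf partials level by level until a single result remains."""
    if fan_in < 2:
        raise ValueError("fan_in must be at least 2")
    if not shards:
        raise ValueError("at least one shard is required")
    level = [leaf_partial(s) for s in shards]
    while len(level) > 1:
        level = [mix(level[i:i + fan_in]) for i in range(0, len(level), fan_in)]
    return level[0]


shards = [[3, 5], [10], [2, 2, 2], [7, 1]]
total, count = tree_aggregate(shards)
average = total / count
print(f"sum={total}, count={count}, avg={average}")
```

Because each mixer only sees small partial results rather than raw rows, the amount of data moving up the tree shrinks at every level, which is what makes this pattern a good fit for aggregations over very large tables.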

Networking

The Jupiter network connects the distributed Colossus storage and Dremel compute clusters inside Google's data centers. Google has described Jupiter as delivering on the order of a petabit per second of bisection bandwidth, which allows massive datasets to move rapidly between storage and compute. This shared infrastructure moves vast amounts of data transparently, under the hood, whenever you query BigQuery.

Orchestration

Tying all the pieces together is Borg—Google's cluster manager and orchestration system, the predecessor to Kubernetes. Borg handles resource allocation and scheduling across the fleet of machines running Dremel and Colossus. It provides redundancy, makes efficient use of hardware, and enables BigQuery to easily scale resources up and down on demand.

Serverless usage

The combination of the technologies described above enables the serverless nature of BigQuery's architecture. Users interact with a unified SQL interface without having to manage infrastructure or clusters. Storage and compute can autoscale independently based on usage. Google handles all the underlying data warehousing operations, such as provisioning, scaling, tuning, high availability, and backups.

BigQuery is well-suited for analytics workloads like dashboarding, aggregations, ETL, and machine learning. It can ingest data from databases, log files, streams, applications, and more.

TL;DR: BigQuery's combination of Dremel, Colossus, Jupiter, and Borg, all running on Google's cloud infrastructure, makes it a performant, serverless, and cost-effective modern data warehouse. The decoupled storage and compute architecture provides flexibility and easy scalability for analyzing massive datasets using standard SQL.

2. Snowflake vs BigQuery — Scalability

The ability to elastically scale storage and compute is crucial for modern data platforms. Let's see how Snowflake and BigQuery scale.

Snowflake's Scalability

Snowflake is built for elastic scalability to support growing data volumes and user demands. Its unique architecture separates storage and compute, allowing each to scale independently.

For storage, Snowflake scales transparently. Data lives in the underlying cloud provider's object storage, so capacity grows with the data and users never provision or manage storage nodes. Snowflake also handles optimization tasks like micro-partitioning, compression, and clustering data for performance, freeing users from storage tuning and management.

On the compute side, Snowflake uses virtual warehouses to execute queries and tasks in parallel. These auto-scaling compute clusters provide elastic scalability to query processing. You can instantly spin up more virtual warehouses to handle peak loads and scale back down during slower periods, paying only for the compute time used.

Some key aspects of Snowflake's scalability:

- Automatic elastic scaling of storage and compute clusters

- Support for clustered tables spanning multiple micro-partitions

- Workload management for prioritizing queries and resources

- Caching of query results to optimize performance

- Seamless scalability across cloud platforms and regions

- Ability to clone databases for scaling specific workloads

Limitations

There are some limitations to be aware of:

- Snowflake relies on the cloud provider's infrastructure so issues there can impact Snowflake

- Users can't customize infrastructure sizing at a granular level

- Movement of large datasets out of Snowflake can be challenging

To learn more in-depth about how Snowflake leverages its architecture for almost unlimited scalability, check out this detailed article.

BigQuery's Scalability

BigQuery is built as a serverless cloud data warehouse, which allows it to scale compute and storage resources automatically without user management.

On the storage side, BigQuery relies on Colossus, Google's distributed file system. The data is stored in a columnar format optimized for fast queries. BigQuery handles all the behind-the-scenes work of scaling storage capacity as data grows.

On the compute side, BigQuery leverages its Dremel massively parallel query execution engine. It dynamically allocates resources to run queries in parallel across thousands of CPUs as needed, providing elastic scalability for compute power to match query complexity and workload demands.

Here are some key aspects of BigQuery scalability:

- Serverless architecture automatically scales resources up and down.

- Support for massive query parallelism with Dremel execution engine.

- Columnar storage format optimized for fast scans and queries.

- Table partitioning and clustering options to optimize query performance.

- Streaming insert API for ingesting real-time data at scale.

- Seamless scaling from terabytes to petabytes without downtime.

Limitations

There are some limitations:

- BigQuery relies on Google's infrastructure, so issues there affect BigQuery.

- Concurrency is governed by quotas. Historically, the default has been around 100 concurrent interactive queries per project, with additional queries queued or rejected.

TL;DR: As a serverless system, BigQuery removes most of the guesswork of capacity planning. It can scale to petabytes of data without downtime, although concurrency remains subject to quotas.

3. Snowflake vs BigQuery — Performance

Blazing fast query performance is essential for interactive analytics. How do Snowflake and BigQuery compare?

Snowflake's Performance

Snowflake is engineered for blazing fast query performance to enable interactive analytics on large datasets. Several key architectural factors give Snowflake its speed advantage:

- Multi-cluster shared data architecture: Snowflake's unique separation of storage from compute allows flexible scaling of resources. Queries leverage multiple compute clusters working in parallel to execute queries rapidly across partitions of data.

- Virtual warehouses: On-demand virtual warehouses provide dedicated compute resources, so queries don't compete for resources. Virtual warehouses can be scaled up and down to match workload demands.

- Caching and materialized views: Frequently repeated queries can be cached in memory or precomputed as materialized views to avoid recomputation. This significantly boosts performance for common queries.

- Micro-partitioning: Snowflake automatically divides tables into small micro-partitions and keeps metadata about the values stored in each one. This enables efficient pruning of partitions that are irrelevant to a query.

- Concurrency: Snowflake architecture is built to handle high numbers of concurrent queries without throttling.

- Time travel: Snowflake provides quick access to historical versions of table data, enabling fast query performance without costly table reconstruction.
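The partition pruning mentioned in the list above can be sketched in a few lines of Python. This is a simplified model that assumes each micro-partition records the minimum and maximum value of one column. The `MicroPartition` class, the field names, and the sample data are hypothetical and only illustrate the idea.

```python
from dataclasses import dataclass


@dataclass
class MicroPartition:
    # Per-partition metadata: value range of the order_date column.
    # ISO-formatted dates compare correctly as plain strings.
    min_date: str
    max_date: str
    rows: list


def prune(partitions, start, end):
    """Keep only partitions whose [min, max] range overlaps [start, end]."""
    return [p for p in partitions if p.max_date >= start and p.min_date <= end]


def query(partitions, start, end):
    """Scan only the surviving partitions, then filter rows exactly."""
    scanned = prune(partitions, start, end)
    matches = [
        row
        for p in scanned
        for row in p.rows
        if start <= row["order_date"] <= end
    ]
    return matches, len(scanned)


partitions = [
    MicroPartition("2024-01-01", "2024-01-31",
                   [{"order_date": "2024-01-15", "amount": 10}]),
    MicroPartition("2024-02-01", "2024-02-29",
                   [{"order_date": "2024-02-12", "amount": 20},
                    {"order_date": "2024-02-25", "amount": 30}]),
    MicroPartition("2024-03-01", "2024-03-31",
                   [{"order_date": "2024-03-03", "amount": 40}]),
]

matches, partitions_scanned = query(partitions, "2024-02-10", "2024-02-20")
print(f"scanned {partitions_scanned} of {len(partitions)} partitions: {matches}")
```

Only the metadata is consulted to rule out the January and March partitions, so their rows are never read. The exact row filter still runs on whatever partitions survive pruning.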

Because its architecture avoids compute resource bottlenecks, Snowflake often performs strongly on complex workloads, though benchmark results vary with the data, the query mix, and how each warehouse is configured.

To learn more in-depth about how Snowflake leverages its unique architecture to deliver industry-leading query performance, check out this detailed article on Snowflake's performance.

BigQuery Performance

As a serverless data warehouse, BigQuery is also optimized for high-speed query execution and strong out-of-the-box performance. Here are several key architectural factors that contribute to its speed:

- Dremel columnar architecture: BigQuery uses Dremel's massively parallel trees of execution units to run queries on thousands of machines simultaneously. This parallelism boosts BigQuery performance for big data analytics.

- Columnar storage: Columnar compression reduces I/O and the amount of data scanned, which is crucial for fast queries on large datasets.

- Automatic caching and optimization: BigQuery caches the results of frequently executed queries and reuses them for repeat queries.

- Streaming inserts: BigQuery is optimized for ingesting and querying real-time streamed data with low latency.

- Partitioning and clustering: Partitioned tables let queries skip irrelevant partitions, and clustering organizes data by chosen columns so scans can be pruned further.

- In-memory BI Engine: BigQuery's BI Engine caches aggregated data in memory for fast interactive dashboards.

While other warehouses like Snowflake have advantages in some areas, BigQuery's architecture delivers excellent performance at scale for most analytics workloads. Its speed and scalability make BigQuery a top choice for high-performance analytics.

4. Snowflake vs BigQuery — Security, Compliance and Governance

Enterprise-grade security is non-negotiable for any data platform today. Let's explore Snowflake vs BigQuery security capabilities.

Snowflake—Security, Compliance and Governance

Snowflake provides robust security capabilities to safeguard data and meet compliance requirements. It uses a multi-layered security architecture consisting of network security, access control, and end-to-end encryption.

Snowflake's security features are built on its cloud architecture. By default, data in Snowflake is encrypted at rest using industry-standard AES-256 encryption and in transit using TLS. Even if someone gained unauthorized access to the underlying storage, they would not be able to read the data without the encryption keys.

Snowflake's granular access control features allow organizations to restrict access to data based on user roles, virtual warehouses, and views. Organizations can create different roles for different users, with each role having different permissions. For example, an organization might create a role for data analysts that allows them to read and write data, but not create or delete tables. Snowflake also offers masking policies, which obscure sensitive fields such as social security numbers or credit card numbers so that they are not visible to unauthorized users.
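As a rough illustration of how role-based masking behaves, the Python sketch below returns the full value to a privileged role and a partially masked value to everyone else. The role names, the `mask_ssn` helper, and the sample row are hypothetical. In Snowflake itself, masking policies are defined in SQL rather than in application code like this.

```python
# Toy model of a role-based masking policy.
# Role names, function names, and sample data are illustrative assumptions.

PRIVILEGED_ROLES = frozenset({"COMPLIANCE_OFFICER"})


def mask_ssn(value, role):
    """Return the raw value for privileged roles, a masked value otherwise."""
    if role in PRIVILEGED_ROLES:
        return value
    return "***-**-" + value[-4:]


def read_row(row, role):
    """Apply the masking rule to the sensitive column when a row is read."""
    visible = dict(row)
    visible["ssn"] = mask_ssn(row["ssn"], role)
    return visible


customer = {"name": "Ada", "ssn": "123-45-6789"}
print(read_row(customer, "ANALYST"))
print(read_row(customer, "COMPLIANCE_OFFICER"))
```

The stored data is never changed. The mask is applied at read time based on who is asking, so the same table can serve both audiences.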

On top of its security features, Snowflake supports compliance with a number of major regulations and standards, including HIPAA, PCI DSS, GDPR, and SOC 2, and has been independently audited against the standards that offer formal attestation.

Snowflake offers robust governance capabilities through features like column-level security, row-level access policies, object tagging, tag-based masking, data classification, object dependencies, and access history. These built-in controls help secure sensitive data, track usage, simplify compliance, and provide visibility into user activities.

To learn more about implementing strong data governance with Snowflake, check out this article.

BigQuery—Security, Compliance and Governance

BigQuery security is built on Google Cloud's infrastructure and access controls. Google Cloud IAM enables granular access control through customized roles and permissions, so organizations can create roles with different levels of data access for different users. BigQuery also uses Google-managed encryption by default, encrypting data at rest and in transit.

BigQuery is also certified for a number of major regulations, including HIPAA, PCI DSS, and SOC.

- HIPAA is the Health Insurance Portability and Accountability Act, a set of regulations that protect the privacy and security of medical information. BigQuery supports HIPAA compliance and offers additional security controls to help organizations meet their HIPAA requirements.

- PCI DSS is the Payment Card Industry Data Security Standard, a set of security controls designed to protect cardholder data. BigQuery is PCI DSS compliant, which means that BigQuery has met the requirements of this standard.

- SOC stands for System and Organization Controls (formerly Service Organization Control), a set of auditing standards that assess the security, availability, processing integrity, confidentiality, and privacy of an organization's information systems. BigQuery has been audited by independent auditors for SOC 1, SOC 2, and SOC 3 reports. These audits confirm that BigQuery has implemented appropriate security controls to protect customer data.

To learn more, check out Google BigQuery's full range of compliance offerings.

Overall, both Snowflake and BigQuery offer strong security, compliance, and governance features. The best choice for an organization will depend on its specific needs and requirements.

5. Snowflake vs BigQuery — Pricing Models

Cloud data warehouses promise easier cost management. How do the pricing models of Snowflake and BigQuery compare?

Snowflake's Pricing Model

Snowflake uses a unique credit-based pricing model for compute resources. The key aspects are:

- Snowflake charges separately for storage and compute. This allows you to scale storage and compute independently.

- Compute resources are called "virtual warehouses". These are clusters of compute power that can be scaled up and down on demand.

- Virtual warehouses come in different sizes - XS, S, M, L, XL etc. Larger sizes have more compute power.

- Each virtual warehouse size uses a certain number of compute credits per hour. For example, an XS warehouse uses 1 credit per hour, while a 6XL uses 512 credits per hour.

- The price per credit depends on your plan (Standard, Enterprise, and above).

- The hourly cost for a virtual warehouse is therefore the number of credits per hour multiplied by the price per credit.

- You only pay for the virtual warehouses while they are running. You can start, stop, scale up or down warehouses on demand.

- Multi-cluster warehouses can add or remove clusters automatically based on load, so you don't have to guess capacity needs.

- Snowflake also offers pre-purchased credits at discounted rates for larger workloads.
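The credit arithmetic above can be worked through with a short Python sketch. The credits-per-hour table assumes each size doubles the previous one, which matches the XS and 6XL figures quoted above. The price per credit and the usage pattern are made-up inputs for illustration, and the sketch ignores billing details such as minimum charges on resume.

```python
# Estimate Snowflake compute cost as: credits/hour x hours running x price/credit.
# Assumption: each warehouse size uses twice the credits of the previous size.
CREDITS_PER_HOUR = {
    "XS": 1, "S": 2, "M": 4, "L": 8, "XL": 16,
    "2XL": 32, "3XL": 64, "4XL": 128, "5XL": 256, "6XL": 512,
}


def warehouse_cost(size, hours_running, price_per_credit):
    """Return the compute cost for one warehouse over a period."""
    credits_used = CREDITS_PER_HOUR[size] * hours_running
    return credits_used * price_per_credit


# Hypothetical inputs: a Medium warehouse running 6 hours a day for
# 22 working days, at an assumed price of $3.00 per credit.
hours = 6 * 22
monthly_cost = warehouse_cost("M", hours, price_per_credit=3.00)
print(f"{hours} hours on M = ${monthly_cost:,.2f}")
```

Since the warehouse only accrues credits while it is running, suspending it for idle hours directly reduces the `hours_running` term.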

This pricing model is very flexible and optimized for the cloud. Some key benefits are:

- Pay per use: You only pay for the compute power while a virtual warehouse is running. No need to provision resources in advance.

- Independent scaling: Scale storage and compute independently based on needs.

- Elasticity: Snowflake auto-scales compute power based on load. No more guessing capacity.

- Cost optimization: Snowflake provides tools to monitor and optimize virtual warehouse usage.

- Agility: Virtual warehouses can be started, stopped and resized instantly. So you can match usage closely.

- Pre-purchase discounts: For predictable workloads, pre-purchased credits offer discounts.

The major downside is complexity. It takes some work to right-size and manage virtual warehouses efficiently. Cost optimization does not happen automatically. But overall, Snowflake's pricing aligns well with cloud economics.

To learn more about Snowflake's pricing model, check out this article.

BigQuery Pricing Model

BigQuery also uses a consumption-based model with two main components:

- Storage pricing: This covers the cost of storing data in BigQuery. It is charged per GB of data stored.

- Compute pricing: This covers the cost of processing queries. It is charged based on the volume of data processed.

Storage pricing is relatively straightforward. Here are some key aspects:

- Data storage is priced per GB per month. Current rates are around $0.02/GB for active storage and $0.01/GB for long-term storage.

- Network egress for exporting data is free within the same region. Cross-region egress is charged per GB.

- The first 10GB of storage per month is free.

- Tables not modified for 90 days convert to lower-cost long-term storage.
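Here is a rough Python sketch of the storage arithmetic, using the approximate rates listed above. How the free 10 GB is split between active and long-term storage is an assumption made for this example (applied to active storage first), and the data volumes are hypothetical.

```python
# Estimate monthly BigQuery storage cost from the approximate rates above.
ACTIVE_USD_PER_GB = 0.02
LONG_TERM_USD_PER_GB = 0.01
FREE_GB_PER_MONTH = 10


def monthly_storage_cost(active_gb, long_term_gb):
    """Return the estimated monthly storage bill in USD."""
    # Assumption: the free allowance is consumed by active storage first,
    # and any remainder is applied to long-term storage.
    free_left = FREE_GB_PER_MONTH
    billable_active = max(active_gb - free_left, 0)
    free_left = max(free_left - active_gb, 0)
    billable_long_term = max(long_term_gb - free_left, 0)
    cost = (billable_active * ACTIVE_USD_PER_GB
            + billable_long_term * LONG_TERM_USD_PER_GB)
    return round(cost, 2)


# Hypothetical dataset: 500 GB of recently modified tables and
# 2,000 GB of tables untouched for more than 90 days.
estimate = monthly_storage_cost(active_gb=500, long_term_gb=2000)
print(f"estimated storage cost: ${estimate:.2f}/month")
```

In this example the long-term data is four times larger than the active data but costs only about twice as much, which shows why leaving cold tables unmodified can pay off.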

For compute pricing, BigQuery offers 2 options:

1) On-demand pricing (the default)

- Queries are charged per TB of data processed, at a rate of around $6.25/TB.

- The first 1 TB of processing per month is free.

- Provides access to a shared pool of thousands of slots (virtual CPUs).

- Suitable for sporadic, unpredictable workloads.

2) Flat-rate pricing

- Purchase slots (virtual CPUs) via reservations, at a fixed monthly rate. 100 slots cost ~$2125/month.

- Queries then use your reserved slots. No data processing charges apply.

- Slot reservations are dedicated to your project.

- Suitable for large, consistent workloads. Reduces costs at scale.
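To see where the two compute options cross over, the Python sketch below computes the monthly on-demand bill and the data volume at which a 100-slot reservation becomes cheaper. It uses the approximate rates quoted above and only compares price. Whether 100 slots are actually enough capacity for a given workload is a separate question that this sketch does not model.

```python
# Compare BigQuery on-demand and flat-rate compute cost for one month,
# using the approximate rates quoted above.
ON_DEMAND_USD_PER_TB = 6.25
FREE_TB_PER_MONTH = 1
FLAT_RATE_USD_PER_100_SLOTS = 2125.0


def on_demand_cost(tb_processed):
    """Monthly on-demand cost after the free tier."""
    return max(tb_processed - FREE_TB_PER_MONTH, 0) * ON_DEMAND_USD_PER_TB


def flat_rate_cost(slot_blocks=1):
    """Monthly cost of reserving slots in blocks of 100."""
    return slot_blocks * FLAT_RATE_USD_PER_100_SLOTS


def break_even_tb(slot_blocks=1):
    """TB processed per month at which both options cost the same."""
    return FREE_TB_PER_MONTH + flat_rate_cost(slot_blocks) / ON_DEMAND_USD_PER_TB


def cheaper_option(tb_processed, slot_blocks=1):
    """Pick the cheaper pricing option on price alone."""
    if on_demand_cost(tb_processed) <= flat_rate_cost(slot_blocks):
        return "on-demand"
    return "flat-rate"


print(f"break-even: {break_even_tb():.0f} TB/month")
for tb in (50, 500):
    print(f"{tb} TB -> on-demand ${on_demand_cost(tb):,.2f}, "
          f"cheaper: {cheaper_option(tb)}")
```

With these numbers, a team scanning well under a few hundred terabytes a month is likely better off on-demand, while sustained heavy scanning favors a reservation.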

The compute pricing model is tailored to BigQuery's serverless architecture. The key aspects are:

- Consumption-based: Pay for exact resources used. No need to provision excess capacity.

- Scales automatically: BigQuery scales compute resources based on demand.

- Cost-effective: Large flat-rate discounts available via reservations.

- Workload optimization: On-demand + flat-rate options allow workload-based cost optimization.

- Free tier: 1 TB free per month allows exploration at no cost.

To learn more details about Google BigQuery pricing, refer to: BigQuery pricing

6. Snowflake vs BigQuery — Use Cases

Understanding the ideal use cases is important in determining which solution better fits your requirements.

Snowflake Use Cases

Snowflake is optimized for analytic workloads and provides a wide range of capabilities that make it suitable for the following key use cases:

Enterprise Data Warehouse and Business Intelligence

As an enterprise data warehouse, Snowflake offers a full-featured SQL data warehouse suitable for developing business intelligence solutions including reporting, dashboards, and analytics. It supports structured, semi-structured, and unstructured data, along with broad ANSI-compliant SQL capabilities and enterprise-grade security features. Snowflake also integrates with major BI tools like Tableau, Looker, and Power BI, and offers mature ETL and data ingestion options through its partners. The separation of storage and compute in Snowflake's architecture allows workloads to be isolated and scaled independently, making it a great choice as a central data platform for large enterprises.

Cloud Data Lake

Snowflake excels as a cloud data lake by providing secure, governed access to large volumes of raw, semi-structured data. Its VARIANT data type handles formats like JSON, Avro, Parquet, ORC, and XML. Snowflake enables secure data sharing with fine-grained access controls, and governs access to data through masking and filtering. It also has robust metadata management capabilities, which makes it a strong choice for building governed data lakes in the cloud, where the data lake acts as a single source of truth for downstream analytics systems.

Data Science and Advanced Analytics

For advanced analytics use cases like data science, Snowflake provides data scientists and analysts with a self-service analytics environment with support for programming languages like Python, Java, and Scala. Snowflake can run UDFs written in these languages and can be accessed from notebooks through its client libraries and drivers, which R users can also connect through. It also integrates with data science platforms like Databricks and machine learning cloud services like AWS SageMaker and Google Cloud's AI services. These capabilities make Snowflake a compelling central data platform for data science teams.

Hybrid and Multi-Cloud Deployments

With availability across major cloud platforms like AWS, Azure, and GCP, Snowflake is also ideal for hybrid and multi-cloud data analytics use cases. It provides unified SQL access to data stored anywhere, along with platform-specific integrations, tools, and the ability to copy data between cloud platforms.

High Performance Analytics

Snowflake provides a high performance SQL analytics engine capable of processing massive data volumes. Key capabilities include:

- Decoupled storage and compute for scaling

- Distributed query processing for performance

- Caching, clustering, partitioning for faster queries

- Support for loading terabytes of data per hour

- Performance optimization

These features make Snowflake suitable for large-scale, high-concurrency workloads across industries like retail, advertising, and gaming.

BigQuery Use Cases

BigQuery is optimized as a serverless data warehouse for analytics on massive datasets. Key BigQuery use cases where it shines include:

Ad-hoc Analytics

As a serverless data warehouse, BigQuery is optimized for ad-hoc analytics on massive datasets leveraging its auto scaling architecture. The serverless nature of BigQuery allows for quick, ad-hoc access to analyze large datasets without capacity planning, making it ideal for business users who need to analyze terabytes of data on demand.

Data Warehousing and BI

BigQuery provides ANSI-compliant SQL capabilities suitable for enterprise data warehousing needs, along with integrated BI tools like Looker, Tableau, and Power BI. BigQuery can automatically scale compute resources to handle data ingestion and query workloads, enabling near real-time analytics.

Large-Scale Batch Processing

BigQuery excels at large-scale batch processing workloads like ETL across petabytes of data through its high throughput capabilities. It can handle heavy ETL jobs through partitioning, clustering for performance, and integrations with data processing frameworks like Cloud Dataflow and Dataproc.

Machine Learning and AI

For machine learning and AI use cases, BigQuery provides integrated in-database model training and prediction capabilities leveraging Google Cloud's AI services. BigQuery ML allows training ML models directly in BigQuery, while prebuilt prediction functions enable model deployment. BigQuery also has integrations with Vertex AI services like AutoML and provides public datasets to enrich models. These machine learning features make it suitable for end-to-end ML workflows.

Real-time and Streaming Analytics

A key advantage of BigQuery is its support for real-time and streaming analytics through fast data ingestion capabilities. BigQuery enables continuous insertion of streaming data and integrations with streaming pipelines via Pub/Sub. Combined with its scalable architecture, BigQuery can handle real-time dashboards, reports, and alerts on rapidly changing data streams.

Hybrid and Multi-Cloud with BigQuery Omni

BigQuery Omni extends BigQuery analytics to data stored in other clouds:

- Available across AWS, Azure and GCP, querying data in place in Amazon S3 and Azure Blob Storage

- Unified SQL endpoint for querying data anywhere

- Data replication and synchronization

- Availability of platform-specific integrations

Omni enables BigQuery to be used as a multi-cloud data analytics platform.

BigQuery excels at interactive analytics, real-time analysis, and ML-enhanced workflows for enterprises invested in Google Cloud.

7. Snowflake vs BigQuery—Integrations and Ecosystem

The ecosystem of integrations around a data platform determines what you can build on top of it. Let's explore the partner and tooling ecosystems of Snowflake and BigQuery.

Snowflake's Ecosystem

Snowflake has built an extensive ecosystem of technology partners and platform integrations that allow customers to build end-to-end data solutions.

For data integration, Snowflake connects with leading ETL/ELT platforms (like Fivetran, Matillion, Talend, Informatica, Hevo, and Stitch). These tools load data from hundreds of sources into Snowflake and handle transformation at scale. Snowflake also works with data replication platforms like HVR and Qlik to enable near real-time data sync.

Snowflake also provides more than 100 first-party data connectors to sync data from diverse sources, including cloud platforms, relational and NoSQL databases, SaaS applications, ad tech, social, mobile, blockchain, and IoT. Its partner ecosystem extends this connector library considerably.

For business intelligence and reporting, Snowflake offers native direct-query integrations with leading BI tools like Tableau, Qlik, Power BI, and MicroStrategy, which let analysts build visualizations and dashboards on Snowflake data interactively. Snowflake also connects with Looker for embedded analytics and insight apps.

Snowflake also integrates with data science and machine learning platforms like Databricks and Dataiku, allowing data engineers and data scientists to use Snowflake data alongside these tools for tasks like data preparation, model training, and deployment. Clients for Python, R, Scala, and Java provide programmatic access to Snowflake.

On the monitoring and operations side, Snowflake provides visibility into usage, workloads, and costs through native integrations with platforms like Tableau and Looker. For identity and access management, SAML and OAuth 2.0 integrations secure access and authentication.

Snowflake is also cloud-agnostic, running natively on the major cloud platforms: AWS, Azure, and GCP. It integrates deeply with key storage services on these platforms, like Amazon S3, Azure Data Lake Storage, and Google Cloud Storage, for hybrid scenarios.

Overall, Snowflake's extensive and growing partner ecosystem lets customers put Snowflake data to work across a broad range of modern data workloads.

BigQuery's Ecosystem

As Google's data warehouse solution, BigQuery integrates natively with a wide range of Google Cloud services and is backed by a broad partner ecosystem.

For data ingestion and processing, BigQuery integrates seamlessly with Google Cloud data services. BigQuery loads data easily from Cloud Storage, Dataflow, Pub/Sub, and more.

BigQuery also has more than 100 partners and connectors to sync data from diverse sources like relational databases, apps, and IoT. Google's ecosystem expands this connector library with solutions like Alooma.

For business intelligence, BigQuery offers deep integrations with Google's cloud-native BI tools, Looker and Looker Studio (formerly Data Studio), for interactive dashboards over BigQuery data. It also integrates with leading third-party BI tools like Tableau, Qlik, and Power BI via standard SQL and visualization connectors.

BigQuery supports user-defined functions (UDFs) written in SQL or JavaScript, which run directly within queries. It also connects seamlessly with Google's fully managed AI/ML services, such as Vertex AI and AutoML, to train and deploy models.

On the monitoring side, native integrations with Google Cloud's operations suite (formerly Stackdriver) and Looker provide usage metrics and workload visibility. Role-based access control and identity management integrations enable governance.

Being a Google Cloud service, BigQuery is optimized to run on GCP infrastructure. It also integrates tightly with key GCP data services like Cloud Bigtable, Spanner, Dataflow, Dataproc and more for building end-to-end data solutions on Google Cloud.

More recently, the launch of BigQuery Omni has extended BigQuery's reach to AWS and Azure, providing some multi-cloud capabilities. But BigQuery's ecosystem remains most robust within Google Cloud.

TL;DR: BigQuery offers simplified data integration, processing, BI and AI capabilities for Google Cloud customers via deep native integrations with GCP services. While BigQuery's ecosystem is more limited outside of Google, it provides a powerful set of capabilities on GCP through a unified analytics platform.

Snowflake vs BigQuery — Pros and Cons

Snowflake Pros and Cons

Here are the main Snowflake pros and cons:

Snowflake Pros

- Separation of storage and compute — Snowflake uses a unique architecture that decouples storage from compute. This allows independent scaling of storage and compute resources based on workload needs.

- Virtual warehouses — Snowflake provides access to scalable clusters of compute power called virtual warehouses. These can be spun up or down on demand to match workload capacity needs.

- Availability features - Snowflake offers robust data protection and recovery capabilities through Time Travel, which retains historical versions of data, and Fail-safe, a seven-day window after Time Travel retention ends during which Snowflake can recover data.

- Secure data sharing — Snowflake allows secure data sharing across accounts and cloud platforms without having to move or duplicate data. This makes data access and governance efficient.

- Diverse workloads — Snowflake can handle a variety of analytic workloads ranging from enterprise BI to data science, machine learning and application development.

- Multi-cloud — Snowflake is cloud agnostic and can run natively on AWS, Azure and GCP, providing flexibility.
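
As a sketch of how Time Travel is used in practice, the helper below builds a query that reads a table as it existed some number of seconds ago, using Snowflake's `AT(OFFSET => ...)` clause. The helper and the `orders` table are hypothetical, and the offset must fall within the account's Time Travel retention period.

```python
def time_travel_sql(table: str, seconds_ago: int) -> str:
    """Build a SELECT that reads `table` as of `seconds_ago` seconds in the past."""
    if seconds_ago <= 0:
        raise ValueError("seconds_ago must be a positive number of seconds")
    # Snowflake expresses "N seconds before now" as a negative OFFSET.
    return f"SELECT * FROM {table} AT(OFFSET => -{seconds_ago})"


# Hypothetical table name: read `orders` as it looked one hour ago.
sql = time_travel_sql("orders", 60 * 60)
print(sql)
```

The generated statement can be run through any Snowflake client, for example to compare current rows against an earlier state after an accidental update.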

Snowflake Cons

- Cost — Snowflake's usage-based pricing can become expensive at scale since customers pay for storage and compute consumed. Costs need active monitoring and optimization.

- Limited data versioning - Time Travel retention is limited: one day by default, and up to 90 days on Enterprise Edition and above. Longer-term versioning requires separate data lifecycle management.

- Complexity - Snowflake's architecture requires more administrative overhead for setup and management, such as sizing and suspending warehouses, than serverless solutions do.

- Machine learning support — While Snowflake has growing machine learning capabilities, it is still more optimized for analytics over ML workloads.
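
To make the cost point concrete, here is a back-of-the-envelope estimator for warehouse compute spend. It assumes Snowflake's standard credits-per-hour doubling by warehouse size and per-second billing with a 60-second minimum each time a warehouse starts. The price per credit is a placeholder, since the real rate depends on edition, region, and contract.

```python
# Credits consumed per hour by a standard warehouse; each size doubles the last.
CREDITS_PER_HOUR = {"XSMALL": 1, "SMALL": 2, "MEDIUM": 4, "LARGE": 8, "XLARGE": 16}

MIN_BILLED_SECONDS = 60  # assumed minimum charge each time a warehouse starts


def estimate_compute_cost(size: str, seconds_running: float,
                          price_per_credit: float) -> float:
    """Estimate the cost of one continuous run of a single-cluster warehouse."""
    if seconds_running <= 0:
        return 0.0
    billed_seconds = max(seconds_running, MIN_BILLED_SECONDS)
    credits = CREDITS_PER_HOUR[size] * billed_seconds / 3600
    return credits * price_per_credit


PRICE_PER_CREDIT = 3.00  # hypothetical rate in USD, not a quoted price

one_hour_medium = estimate_compute_cost("MEDIUM", 3600, PRICE_PER_CREDIT)
ten_seconds_xsmall = estimate_compute_cost("XSMALL", 10, PRICE_PER_CREDIT)
print(f"MEDIUM for 1 hour: ${one_hour_medium:.2f}")
print(f"XSMALL for 10 seconds: ${ten_seconds_xsmall:.2f}")
```

Because each size step doubles the hourly credits, a larger warehouse only pays off when it finishes the work proportionally faster, which is why auto-suspend settings and right-sizing matter.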

BigQuery Pros and Cons

Here are the main BigQuery pros and cons:

BigQuery Pros

- Machine learning capabilities — BigQuery comes with integrated machine learning capabilities like BigQuery ML, prediction functions and AutoML integrations.

- Unified analytics - BigQuery allows interactive SQL, Python/R, and pipeline-based ETL processing in one platform, simplifying workflows.

- Elastic resources — BigQuery leverages Google's serverless architecture to autoscale compute resources, optimizing costs.

- Collaboration - BigQuery makes it easy to share datasets, saved queries, and notebooks for effective collaboration between data teams.

- Multi-cloud data — BigQuery integrations like BigQuery Omni allow unified analytics across data stored on AWS, Azure and GCP.

- Data lifecycle management - BigQuery supports schema evolution (such as adding columns to existing tables), table snapshots, and time travel over a window of up to seven days.

BigQuery Cons

- Limited infrastructure control - Because BigQuery is serverless, users have few options for tuning the underlying compute; capacity is governed by slots and reservations rather than user-sized clusters.

- Limited workloads - BigQuery is tailored to analytical queries over large datasets and is a poor fit for transactional, row-level workloads.

- Scalability limits - BigQuery enforces quotas and limits (for example, on the slots available to on-demand queries) that can constrain very large workloads, whereas Snowflake lets you add or resize warehouses on demand.

- Data governance - BigQuery's security, access control, and governance depend on Google Cloud IAM and related GCP services, which can be harder to align with policies that span multiple clouds.

- Data silos - BigQuery's data sharing is centered on Google Cloud, so sharing with partners on other clouds is less direct than with Snowflake data sharing.

- Portability - BigQuery runs primarily on Google Cloud, which makes workloads less portable across cloud platforms than they are on Snowflake.

Conclusion

For today's data-driven organizations, cloud data warehousing is a strategic imperative. Snowflake and BigQuery both simplify extracting insights from vast data volumes. But which platform is right for your business?

These modern platforms eliminate the headaches of managing infrastructure and offer crucial capabilities like elastic scalability, high performance, and accessible SQL. However, architectural differences give each distinct strengths. In this article, we compared Snowflake vs BigQuery across 7 essential areas, including architecture, scalability, performance, security, pricing models, use cases, and integration ecosystem.

Snowflake shines with its multi-cloud portability, independent resource scaling, and support for diverse analytic workloads. It provides the flexibility many enterprises need in hybrid environments. BigQuery is purpose-built for Google Cloud and delivers strong performance within the GCP ecosystem. It excels at ad-hoc analysis and ML-enhanced workflows.

So if you're heavily invested in Google Cloud, BigQuery may offer faster time-to-value given its seamless integrations with GCP's data services. But other enterprises may benefit more from the multi-cloud capabilities and workload breadth of Snowflake.

FAQs

Is Snowflake or BigQuery better for the cloud?

Snowflake may be better for multi-cloud and hybrid environments given its flexibility and support across AWS, Azure, and GCP. BigQuery is optimized for GCP-centric organizations.

Which offers higher query performance — Snowflake vs BigQuery?

Some benchmarks favor Snowflake for query performance and concurrency, but results depend heavily on workload and configuration. Both can deliver fast query speeds at scale.

What is the main difference between Snowflake and BigQuery architecture?

Both platforms separate storage from compute. Snowflake exposes compute as user-sized virtual warehouses that scale independently of storage, while BigQuery is serverless and allocates compute automatically.

Which solution is easier to use — Snowflake vs BigQuery?

Both platforms are easy to use with SQL support, useful UI and integrations. But BigQuery may have a slight edge with its fully managed serverless architecture.

Does Snowflake or BigQuery have better data sharing capabilities?

Snowflake offers native data sharing across accounts and clouds. BigQuery recently launched BigQuery Data Sharing for similar needs.

Which data warehouse scales better — Snowflake vs BigQuery?

Snowflake can scale compute and storage independently. BigQuery scales compute and storage automatically, subject to service quotas. Both can handle the scalability needs of most organizations.

Is Snowflake or BigQuery more cost effective?

It depends on usage patterns and needs. BigQuery can be more cost effective at smaller scales while Snowflake offers savings through optimizations at scale.

Which solution has a better ecosystem for analytics — Snowflake vs BigQuery?

Both platforms have strong ecosystems. Snowflake integrates across more tools given its cloud-agnostic nature. BigQuery has deeper GCP integration.

Which data warehouse is better for ad-hoc analysis — Snowflake vs BigQuery?

BigQuery's serverless architecture makes it ideal for ad-hoc analysis without capacity planning. But Snowflake also handles ad-hoc workloads well.

Can I run machine learning models easily in Snowflake vs BigQuery?

Yes, both platforms allow running ML models. BigQuery simplifies model building while Snowflake focuses on model deployment.

Is Snowflake or BigQuery better for data science?

BigQuery comes with more integrated data science capabilities. But Snowflake also works well for data science via its ecosystem integration and UDF support.

Which solution has a steeper learning curve — Snowflake vs BigQuery?

BigQuery's serverless architecture typically translates to an easier learning curve. Snowflake requires more expertise to optimize usage and costs.

Does Snowflake allow scaling storage and compute separately?

Yes, Snowflake decouples storage from compute, allowing each to scale independently. BigQuery also separates storage from compute but scales both automatically, subject to service quotas, rather than letting you size them yourself.

Can BigQuery handle data volumes as large as Snowflake?

Yes, both Snowflake and BigQuery can handle massive data volumes at petabyte scale for enterprise needs. But Snowflake offers greater configurability at the highest scales.