Snowflake Notebooks is an interactive development environment that launched in public preview at Snowflake Summit 2024. This development interface in Snowsight offers a cell-based programming environment where you can write SQL and Python code. Snowflake Notebooks let users perform data analysis, develop machine learning models, and carry out data engineering tasks, all within the Snowflake ecosystem.

In this article, we will cover everything you need to know about Snowflake Notebooks, including its key features, an overview of the Snowflake Notebook interface, tips and tricks for using Snowflake Notebooks effectively, and a step-by-step guide to setting up and using this powerful interactive development environment.

What Is a Notebook in Snowflake?

Snowflake Notebooks is an integrated development environment within Snowsight that supports Python, SQL, and Markdown. It is designed to help users to perform exploratory data analysis, develop machine learning models, and carry out other data science and data engineering tasks seamlessly—all within the Snowflake ecosystem.

Snowflake Notebooks is designed to integrate deeply with the Snowflake ecosystem, leveraging the power of Snowflake Snowpark, Streamlit, Snowflake Cortex, and Iceberg tables, allowing users to perform complex data operations and data visualizations, build interactive applications, and manage large-scale data workflows efficiently.

Potential of Snowflake Notebooks—Key Features and Capabilities

Snowflake Notebooks offer a ton of features that help users harness the full potential of data within the Snowflake ecosystem. Here are all the features that this interactive development environment offers:

1) Interactive, Cell-Based Interface

Snowflake Notebooks provide an interactive, cell-based programming environment where users can write and execute raw SQL, Python, and even Markdown code. This interactive environment supports cell-by-cell development, making it easy to iterate on code and visualize results and output in real-time.

2) Scalability, Security and Governance

As part of the Snowflake ecosystem, Snowflake Notebooks inherit the scalability, security, and governance features that Snowflake is renowned for. Users can leverage the power of Snowflake's distributed architecture to process and analyze large datasets efficiently, while also benefiting from the platform's robust security measures, such as role-based access control (RBAC) and data encryption.

On top of that, Snowflake Notebooks integrate seamlessly with Snowflake's data governance capabilities, ensuring that data access and usage adhere to organizational policies and compliance requirements.

3) AI/ML and Data Engineering Workflows

Snowflake Notebooks support extensive AI/ML and data engineering workflows. Users can explore, analyze, and visualize data using Python and SQL, leverage Snowflake Snowpark for scalable data processing, and utilize embedded Snowflake Streamlit visualizations for interactive data apps.

4) Collaboration and Efficiency

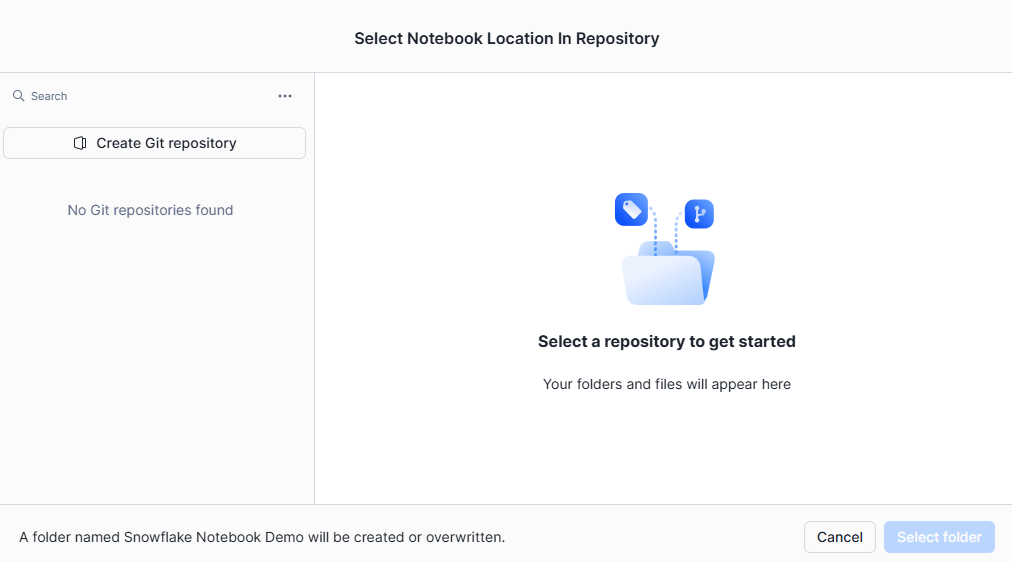

Collaboration is a key aspect of modern data workflows, and Snowflake Notebooks are designed to facilitate seamless collaboration among team members. With built-in Git integration, users can version control their notebooks and work together on the same codebase, whether it's hosted on GitHub, GitLab, BitBucket, or Azure DevOps.

This integration not only promotes code sharing and collaboration but also enhances efficiency by enabling users to track changes, revert to previous versions, and merge updates from multiple contributors.

5) Integrated Data Analysis

One of the major strengths of Snowflake Notebooks is their ability to handle entire data workflows within a single environment. Snowpark, Snowflake's scalable data processing engine, allows users to do complicated data transformations and analyses from within their notebooks, using the power of distributed computing without leaving the familiar notebook interface.

Snowflake Notebooks also facilitate the development and execution of Snowflake Streamlit applications, allowing users to create interactive data visualization and data exploration tools that can be readily shared and collaborated on within the notebook environment.

6) Data Pipeline and Management

Snowflake Notebooks simplify data engineering workflows by providing a unified environment for data profiling, ingestion, transformation, and orchestration. Users can use Python and SQL to create scripts and queries that automate many portions of their data pipelines, such as extracting data from external sources, running data quality checks, and executing complex transformations.

On top of that, Snowflake Notebooks easily integrate with Python APIs, Git Integration, and the Snowflake CLI, allowing users to automate data pipeline deployment and administration.

7) End-to-End ML Development

Snowflake Notebooks make it easier to build end-to-end machine learning pipelines without the need for additional infrastructure or tooling. From data preprocessing and feature engineering to model training, data visualization, and deployment, users can leverage the power of Python libraries and Snowflake's native SQL capabilities to build and deploy machine learning models directly within the notebook environment.

This streamlined method not only simplifies the ML development process, but it also encourages collaboration and reproducibility because the entire workflow can be captured and shared in a single notebook.

8) Scheduling Feature

Acknowledging the value of automation in data workflows, Snowflake Notebooks offer a scheduling feature that allows users to automate the execution of notebooks. Users can use the notebook interface to create schedules to run their notebooks at specific intervals, track the progress of scheduled tasks, and view the results of previous runs.

This scheduling feature allows users to link their notebooks into bigger data pipelines, guaranteeing that important data processing and analysis tasks are carried out consistently and reliably.

9) Snowflake Copilot Integration

Snowflake Notebooks are further enhanced by their integration with Snowflake Copilot, an advanced AI-powered coding assistant. Copilot leverages natural language processing and machine learning to understand the context and intent of a user's code, providing intelligent suggestions, code completions, and explanations to improve productivity and accelerate the development process.

With Copilot integrated into Snowflake Notebooks, you get intelligent code assistance that makes it easier to write efficient and maintainable code, whether you are working on SQL queries, Python scripts, or data analysis tasks.

Snowflake Notebooks Interface Walkthrough

To fully utilize Snowflake Notebooks, it's essential to understand its interface and basic operations. Here is an initial overview of the Snowflake Notebooks interface; we will explore it in greater detail in a later section:

Remember this: whenever you create a notebook for the first time, three example cells are displayed. You can modify those cells or add new ones.

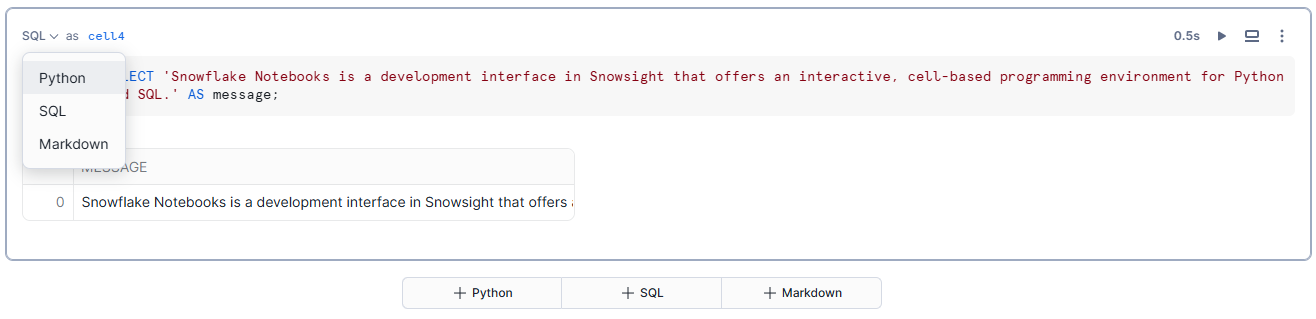

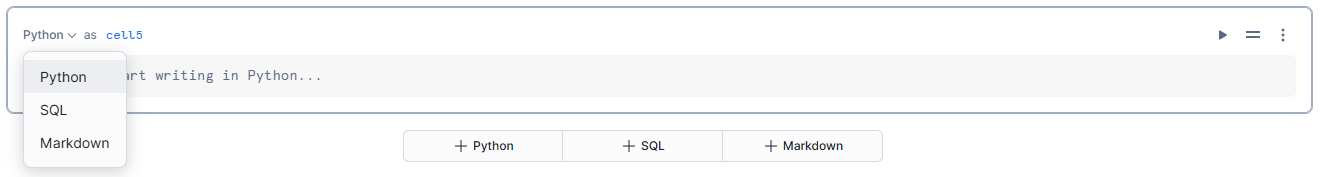

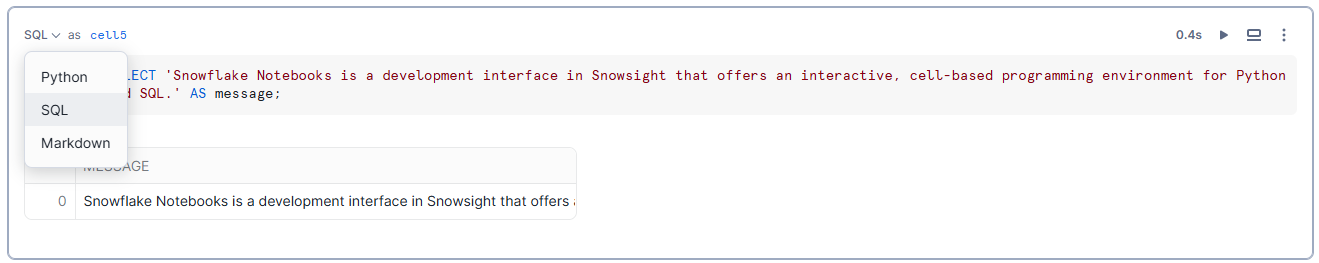

1) Creating New Cells in Snowflake Notebooks

To create a new cell, select the desired cell type button—SQL, Python, or Markdown. You can change the cell type anytime using the language dropdown menu.

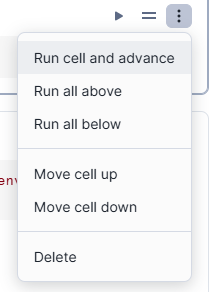

2) Moving Cells in Snowflake Notebooks

Cells can be moved by dragging and dropping or using the actions menu.

Option 1—Hover your mouse over the cell you want to move. Select the drag-and-drop icon and move the cell to its new location.

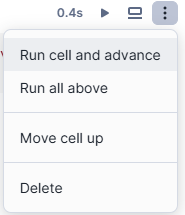

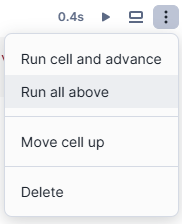

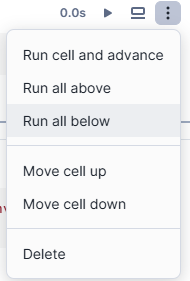

Option 2—Click the vertical ellipsis (more actions) menu for the cell. Select the appropriate action from the menu.

3) Running Cells in Snowflake Notebooks

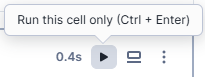

Cells can be run individually or in sequence.

1) Run a Single Cell

When making frequent code updates, you can run a single cell to see the results immediately. To do this, press CMD + Return on a Mac or CTRL + Enter on Windows. Alternatively, you can select "Run this cell only" from the cell's menu by clicking on the vertical ellipsis (more actions).

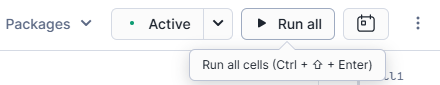

2) Run All Cells in a Notebook

Before presenting or sharing your Snowflake notebook, it's important to ensure that all cells are up-to-date. To run all cells sequentially, press CMD + Shift + Return on a Mac or CTRL + Shift + Enter on a Windows keyboard. You can also select "Run all" from the notebook menu to execute every cell in the notebook in order.

3) Run a Cell and Advance to the Next Cell

To run a cell and quickly move to the next one, press Shift + Return on a Mac or Shift + Enter on Windows. This option allows for a smooth workflow when you need to check the results of each cell consecutively. Also, you can select "Run cell and advance" from the cell's actions menu by clicking the vertical ellipsis.

4) Run All Above

If you need to run a cell that references the results of earlier cells, you can execute all preceding cells first. This is done by selecting "Run all above" from the cell's actions menu, ensuring all necessary previous computations are complete.

5) Run All Below

When running a cell that later cells depend on, you can execute the current cell and all subsequent cells by selecting "Run all below" from the cell's actions menu. This ensures that all dependent cells are processed in sequence.
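The run options above differ only in which cells they select. As a rough mental model (a sketch, not Snowflake's implementation; the function name `cells_to_run` and the mode strings are hypothetical), the selection can be written as index ranges over the notebook's cells:

```python
def cells_to_run(mode: str, current: int, total: int) -> list[int]:
    """Return the zero-based indexes of the cells a run option would execute.

    Hypothetical helper for illustration; mode names mirror the menu labels.
    """
    if not 0 <= current < total:
        raise IndexError("current cell index is out of range")
    if mode == "run_this_cell_only":
        return [current]
    if mode == "run_all":
        return list(range(total))
    if mode == "run_all_above":
        # Every cell before the current one, in order.
        return list(range(current))
    if mode == "run_all_below":
        # The current cell and everything after it, as described above.
        return list(range(current, total))
    raise ValueError(f"unknown mode: {mode}")


# Example: a five-cell notebook with the third cell (index 2) selected.
print(cells_to_run("run_all_above", 2, 5))  # [0, 1]
print(cells_to_run("run_all_below", 2, 5))  # [2, 3, 4]
```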

4) Deleting Cells in Snowflake Notebooks

Cells can be deleted using the actions menu or keyboard shortcuts. Select the vertical ellipsis, choose delete, and confirm the action.

5) Inspecting Cell Status in Snowflake Notebooks

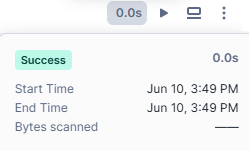

The status of a cell run is indicated by color codes and displayed in the cell's left wall and right navigation map.

- Blue dot: Indicates the cell has been modified but hasn't run yet.

- Red: An error has occurred.

- Green: The run was successful.

- Moving green: The cell is currently running.

- Blinking gray: The cell is waiting to be run, typically occurring when multiple cells are triggered to run.

Note: Markdown cells do not show any status indicators.

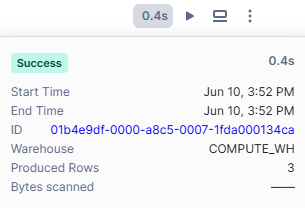

After a cell finishes running, the time it took to run is displayed at the top of the cell. You can select this text to view the run details window, which includes the execution start and end times and the total elapsed time.

SQL cells provide additional information, such as the warehouse used to run the query, the number of rows returned, and a hyperlink to the query ID page for further details.

6) Stopping a Running Cell in Snowflake Notebooks

To stop a running cell, select the stop button on the top right of the cell. This stops the current cell and any subsequent cells scheduled to run.

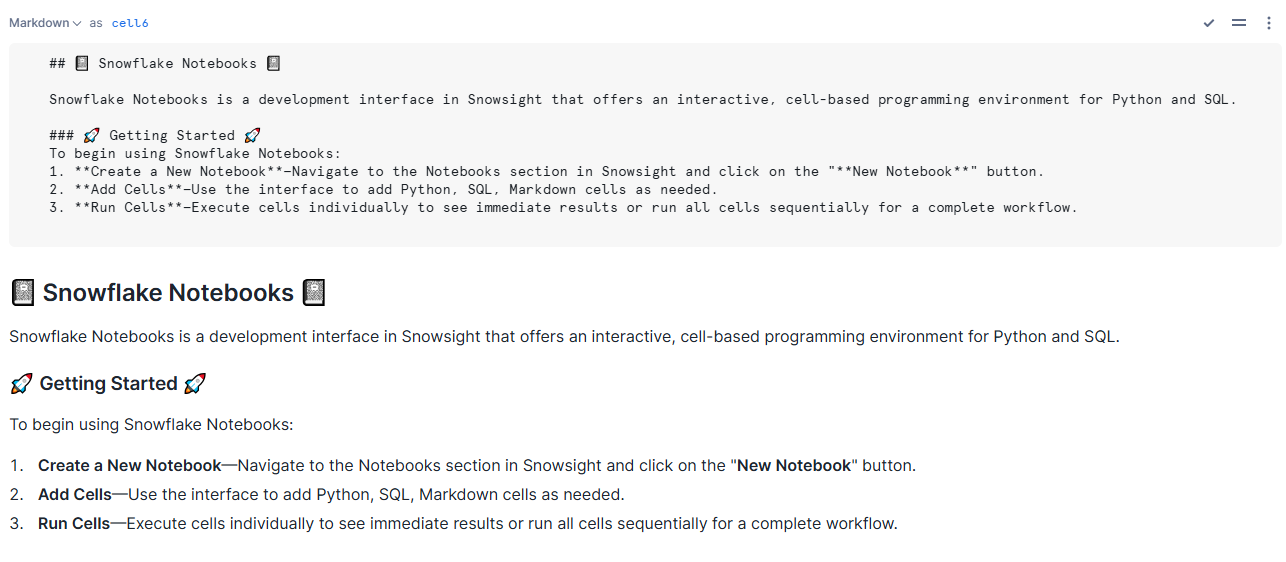

7) Formatting Text with Markdown in Snowflake Notebooks

Markdown cells allow you to format text within the notebook. Add a Markdown cell and type valid Markdown syntax to format your text. The formatted text appears below the Markdown syntax.

Step-by-Step Guide to Setting Up and Using Snowflake Notebooks

Follow these detailed steps to get started with Snowflake Notebooks and unlock their full potential for your data analysis and machine learning tasks.

Prerequisites:

- Snowflake Account: Make sure you have a Snowflake account. If you don't, sign up for a 30-day free trial.

- Snowsight Access: Access to the Snowsight interface within Snowflake.

- Required Privileges: Make sure you have the necessary privileges:

  - USAGE on the database containing the notebook

  - USAGE, CREATE TASK on the schema containing the notebook

  - EXECUTE TASK on the account

- Familiarity with Python and SQL: Familiarity with Python and SQL for effective use of Snowflake Notebooks.

- Git Integration: Access to GitHub, GitLab, BitBucket, or Azure DevOps if you plan to use version control.

- Required Python Packages: Knowledge of the Python packages needed for your data analysis or machine learning tasks.

- .ipynb File (Optional): If you plan to import an existing notebook, ensure you have the .ipynb file ready.
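Before importing an existing notebook, it can help to check what it contains. An `.ipynb` file is a JSON document with a top-level `cells` list, and each cell carries a `cell_type`. The sketch below counts cells by type using only the standard library; the sample notebook content and the function name `count_cell_types` are made up for illustration.

```python
import json
from collections import Counter


def count_cell_types(notebook_json: str) -> dict[str, int]:
    """Count cells by type in the text of an .ipynb file."""
    notebook = json.loads(notebook_json)
    return dict(Counter(cell["cell_type"] for cell in notebook.get("cells", [])))


# A tiny, made-up notebook in the Jupyter nbformat 4 layout.
sample = json.dumps({
    "nbformat": 4,
    "nbformat_minor": 5,
    "metadata": {},
    "cells": [
        {"cell_type": "markdown", "metadata": {}, "source": ["# Title"]},
        {"cell_type": "code", "metadata": {}, "source": ["print('hi')"],
         "outputs": [], "execution_count": None},
        {"cell_type": "code", "metadata": {}, "source": ["x = 1"],
         "outputs": [], "execution_count": None},
    ],
})

print(count_cell_types(sample))
```

For a real file, pass in the file's text instead of `sample`, for example by reading it with `pathlib.Path(...).read_text(encoding="utf-8")`.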

Step 1—Login to Snowflake

First, sign in to your Snowflake account using the Snowsight interface.

Step 2—Access Snowflake Notebooks

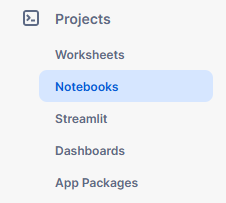

Once logged in, navigate to the "Projects" pane and select "Notebooks" within the Snowsight interface.

This will open the Snowflake Notebooks interface where you can create, manage, and run notebooks.

Step 3—Start a Notebook Session

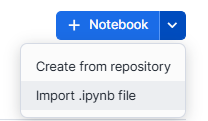

Create a new notebook or open an existing one to start your session. Click on the "+ Notebook" button located at the top right corner to create a new notebook.

Step 4—Select/Create a Dedicated Database or Schema

Choose an existing database and schema or create new ones where your notebook will be stored. Note that these cannot be changed after creation.

CREATE DATABASE snowflake_notebooks_db;

CREATE SCHEMA snowflake_notebooks;

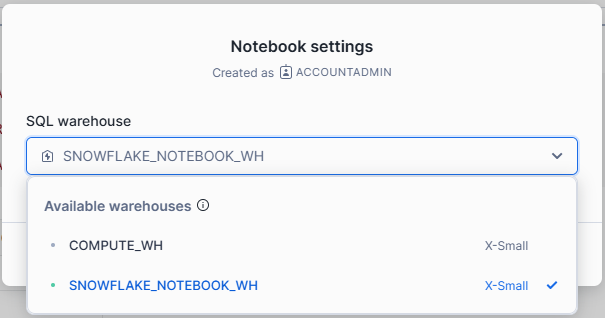

Step 5—Select a Warehouse for Running Snowflake Notebooks

Select an appropriate warehouse to run your notebook. The warehouse should have sufficient compute resources for your tasks.

CREATE WAREHOUSE snowflake_notebook_wh WITH

WAREHOUSE_SIZE = 'XSMALL'

AUTO_SUSPEND = 60

AUTO_RESUME = TRUE;

USE WAREHOUSE snowflake_notebook_wh;

Step 6—Changing the Notebook Warehouse

If needed, you can change the warehouse from the notebook settings to match the computational needs of your analysis.

Step 7—Assign Required Privileges

To manage who can create and modify notebooks, Snowflake provides a dedicated schema-level privilege. Each notebook is owned by the active role in your session at the time of creation, and any user with that role can open, run, and edit the notebooks owned by that role. Sharing notebooks with other roles is not allowed, so consider creating a dedicated role for notebook management.

To create a notebook, you must use a role that has the following privileges:

| Privilege | Object |
|---|---|
| USAGE | Database |
| USAGE or OWNERSHIP | Schema |
| CREATE NOTEBOOK | Schema |

Granting Required Privileges

Grant the necessary privileges on the database and schema intended to contain your notebooks to a custom role. Below is an example of how to grant these privileges for a database named snowflake_notebooks_db and a schema named snowflake_notebooks to a role named create_notebooks:

GRANT USAGE ON DATABASE snowflake_notebooks_db TO ROLE create_notebooks;

GRANT USAGE ON SCHEMA snowflake_notebooks TO ROLE create_notebooks;

GRANT CREATE NOTEBOOK ON SCHEMA snowflake_notebooks TO ROLE create_notebooks;

If you assign these privileges, you guarantee that the designated role has the necessary permissions to create and manage notebooks within the specified schema. This setup helps maintain a controlled and secure environment for notebook creation and modification.
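If you set up several notebook roles, typing the three grants by hand gets repetitive. The sketch below generates the same statements for any database, schema, and role. The helper name `notebook_grants` is hypothetical, it only builds SQL text (nothing is run against Snowflake), and it assumes simple unquoted identifiers.

```python
import re

_IDENTIFIER = re.compile(r"^[A-Za-z_][A-Za-z0-9_$]*$")


def notebook_grants(database: str, schema: str, role: str) -> list[str]:
    """Build the GRANT statements a role needs to create notebooks."""
    for name in (database, schema, role):
        # Reject anything that is not a plain unquoted identifier to avoid
        # pasting unexpected text into the SQL.
        if not _IDENTIFIER.match(name):
            raise ValueError(f"not a simple identifier: {name!r}")
    qualified_schema = f"{database}.{schema}"
    return [
        f"GRANT USAGE ON DATABASE {database} TO ROLE {role};",
        f"GRANT USAGE ON SCHEMA {qualified_schema} TO ROLE {role};",
        f"GRANT CREATE NOTEBOOK ON SCHEMA {qualified_schema} TO ROLE {role};",
    ]


for statement in notebook_grants(
    "snowflake_notebooks_db", "snowflake_notebooks", "create_notebooks"
):
    print(statement)
```

Qualifying the schema with its database means the statements do not depend on whichever database happens to be active in the session.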

Step 8—Executing Snowflake Notebooks

Let's dive into the first demo notebook in Snowflake and unravel its secrets.

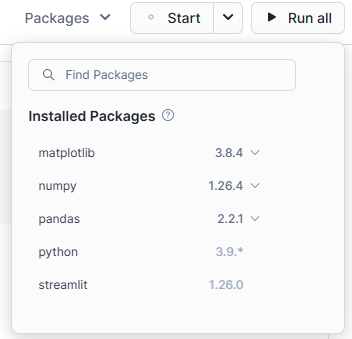

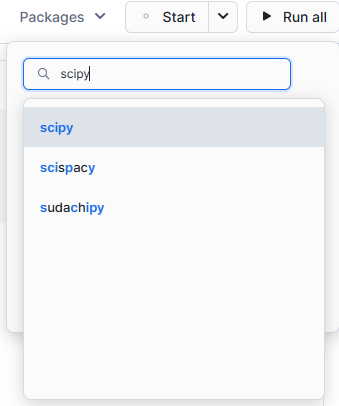

📓 Add Necessary Python Packages

Snowflake Notebooks come pre-installed with common Python libraries for data science and machine learning, such as numpy, pandas, matplotlib, and more. If you need other packages, you can add them via the Packages dropdown on the top right of the notebook interface.

Here’s how to add additional packages and use them in your notebook:

First—Click on the Packages dropdown at the top right of your notebook interface.

Second—Type the name of the package you need and add it to your notebook environment.

Third—Once added, you can import and use these packages just like any other Python library.

# Import Python packages used in this notebook

import streamlit as st

import altair as alt

# Pre-installed libraries that come with the notebook

import pandas as pd

import numpy as np

# Package that we just added

import matplotlib.pyplot as plt

import scipy

📓 Switching Between SQL and Python Cells

Switching between SQL and Python at various stages in your data analysis workflow can significantly enhance your productivity and flexibility.

When creating a new cell in Snowflake Notebooks, you have the option to choose between SQL, Python, and Markdown, allowing you to select the most appropriate language for each task—whether it's running SQL queries, executing Python scripts, or adding documentation and notes.

Each cell in the notebook has a dropdown menu located at the top left corner. This dropdown not only shows the type of cell (SQL, Python, or Markdown) but also displays the cell number, making it easier to navigate and organize your notebook efficiently.

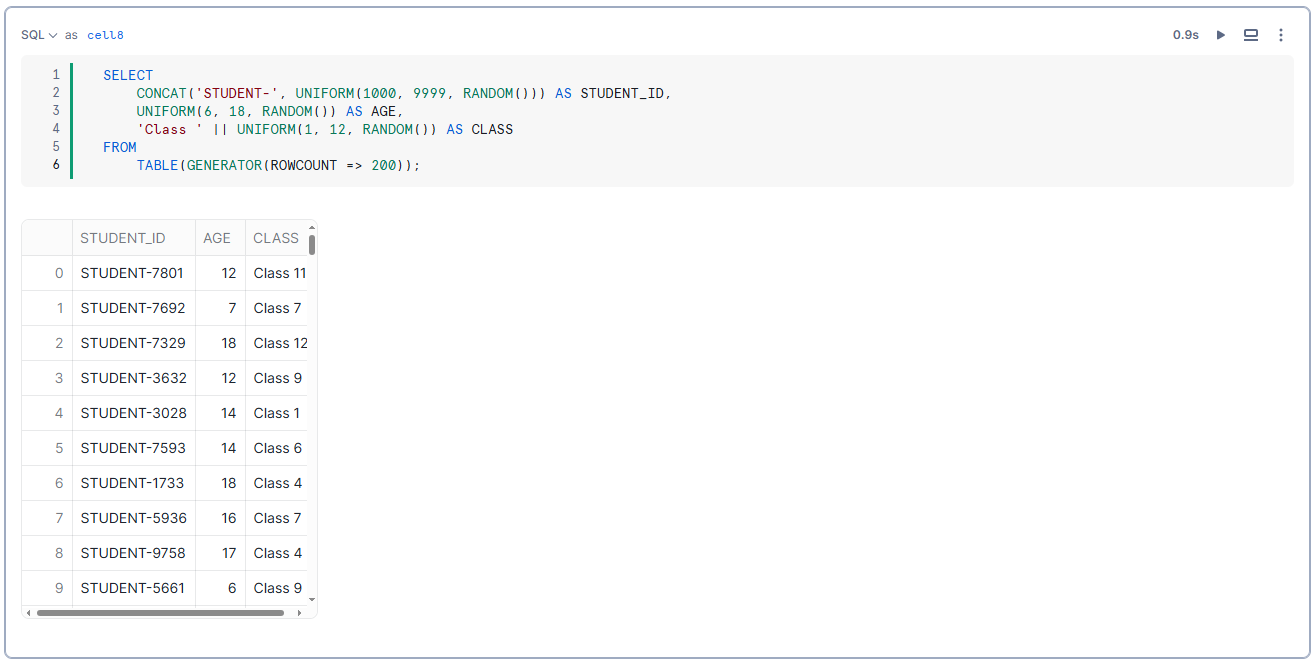

Let's write some simple SQL to generate sample student data to play with.

SELECT

CONCAT('STUDENT-', UNIFORM(1000, 9999, RANDOM())) AS STUDENT_ID,

UNIFORM(6, 18, RANDOM()) AS AGE,

'Class ' || UNIFORM(1, 10, RANDOM()) AS CLASS

FROM

TABLE(GENERATOR(ROWCOUNT => 200));

📓 Simple and Advanced Data Visualization

Visualizing data is a crucial part of data analysis. Snowflake Notebooks support a variety of Python libraries for creating data visualizations. Here, we'll focus on Altair, Matplotlib, and Plotly to demonstrate how to visualize data effectively in Snowflake Notebooks.

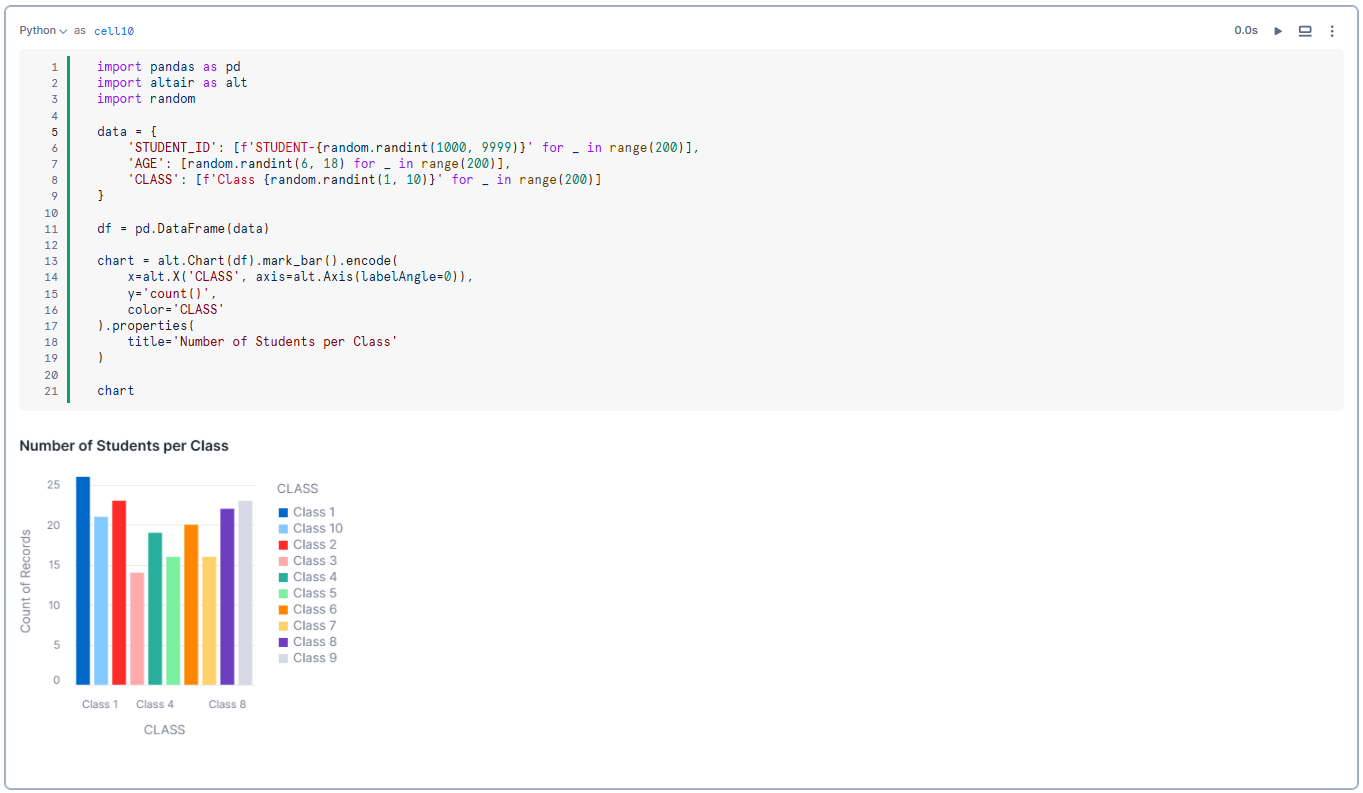

Example 1—Visualizing data by using Altair

Altair is a declarative statistical data visualization library for Python and is included by default in Snowflake Notebooks as part of Snowflake Streamlit. Currently, Snowflake Notebooks support Altair version 4.0.

Step 1—Install Altair

From the notebook, select Packages and confirm that the Altair library is available; add it if it is not listed.

Step 2—Import the Necessary Libraries:

import pandas as pd

import altair as alt

import random

Step 3—Generate Synthetic Data

data = {

'STUDENT_ID': [f'STUDENT-{random.randint(1000, 9999)}' for _ in range(200)],

'AGE': [random.randint(6, 18) for _ in range(200)],

'CLASS': [f'Class {random.randint(1, 10)}' for _ in range(200)]

}

Step 4—Create a DataFrame

df = pd.DataFrame(data)

Step 5—Create a Bar Chart to Visualize the Number of Students in Each Class

chart = alt.Chart(df).mark_bar().encode(

x=alt.X('CLASS', axis=alt.Axis(labelAngle=0)),

y='count()',

color='CLASS'

).properties(

title='Number of Students per Class'

)

Step 6—Display the Chart

chart

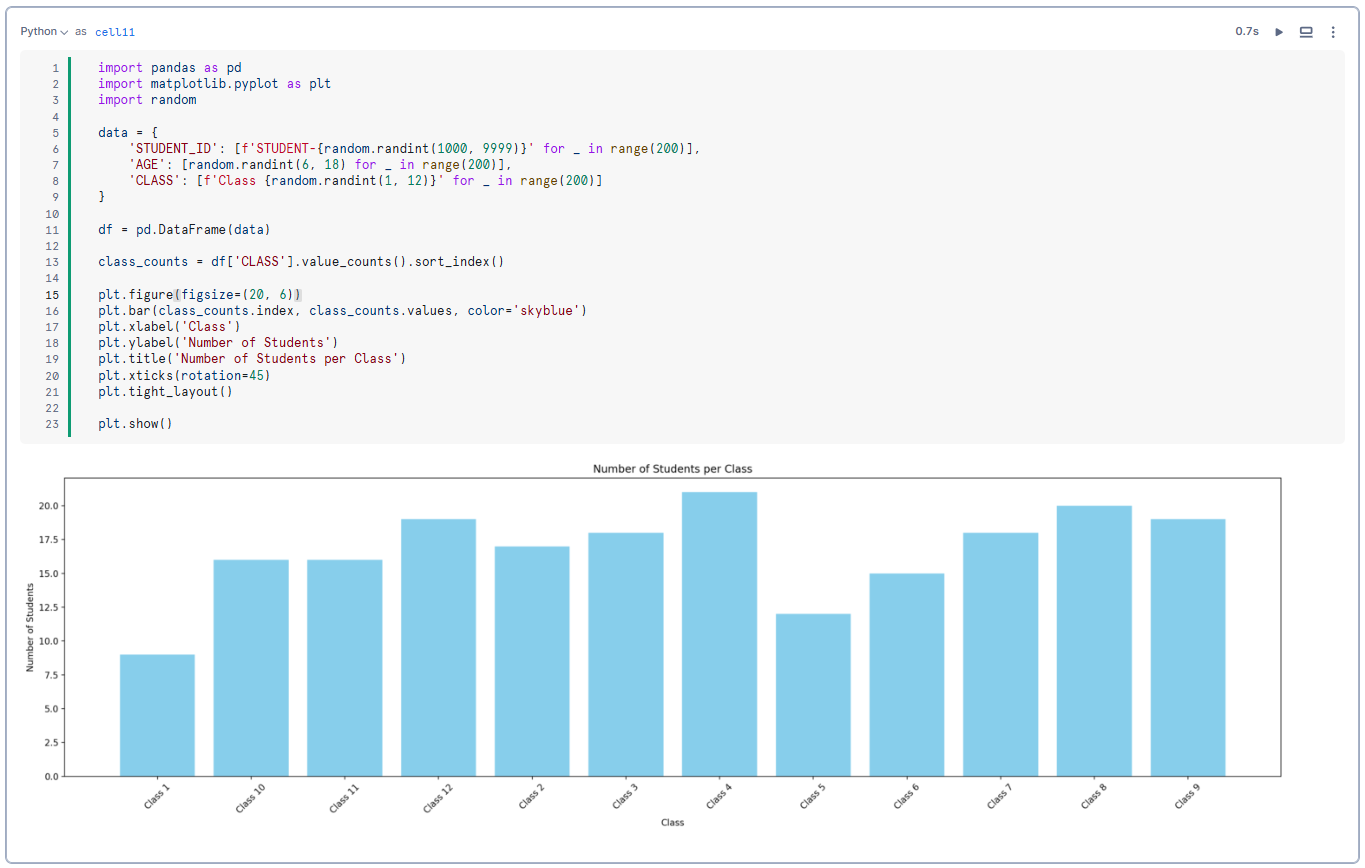

Example 2—Visualizing data by using Matplotlib

Matplotlib is a widely used plotting library in Python. To use Matplotlib in Snowflake Notebooks, you need to install the library first.

Step 1—Install Matplotlib

From the notebook, select Packages, and locate the Matplotlib library to install it.

Step 2—Import the Necessary Libraries

import pandas as pd

import matplotlib.pyplot as plt

import random

Step 3—Generate Synthetic Data

data = {

'STUDENT_ID': [f'STUDENT-{random.randint(1000, 9999)}' for _ in range(200)],

'AGE': [random.randint(6, 18) for _ in range(200)],

'CLASS': [f'Class {random.randint(1, 12)}' for _ in range(200)]

}

Step 4—Create a DataFrame

df = pd.DataFrame(data)

Step 5—Count the Number of Students in Each Class

class_counts = df['CLASS'].value_counts().sort_index()
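One thing to watch in this step: `sort_index()` sorts the labels as text, so `Class 10` lands before `Class 2`. If you want numeric order on the x-axis, sort on the number inside the label. The sketch below shows the idea with the standard library only (`Counter` stands in for `value_counts()`, and the helper `class_number` is hypothetical):

```python
import random
from collections import Counter


def class_number(label: str) -> int:
    """Extract the numeric part of a label such as 'Class 10'."""
    return int(label.split()[-1])


random.seed(0)  # fixed seed so the sample is repeatable
labels = [f"Class {random.randint(1, 12)}" for _ in range(200)]
counts = Counter(labels)

text_order = sorted(counts)                       # plain text sort
numeric_order = sorted(counts, key=class_number)  # sorted by the class number

print(text_order[:3])
print(numeric_order[:3])
```

With a reasonably recent pandas, the same key can likely be applied directly, for example `class_counts.sort_index(key=lambda idx: idx.map(class_number))`.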

Step 6—Create a Bar Chart to Visualize the Number of Students in Each Class

plt.figure(figsize=(20, 6))

plt.bar(class_counts.index, class_counts.values, color='skyblue')

plt.xlabel('Class')

plt.ylabel('Number of Students')

plt.title('Number of Students per Class')

plt.xticks(rotation=45)

plt.tight_layout()

Step 7—Display the Chart

plt.show()

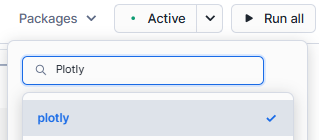

Example 3—Visualizing data by using Plotly

Plotly is an interactive graphing library for Python. To use Plotly in Snowflake Notebooks, you need to install the library first.

Step 1—Install Plotly

From the notebook, select Packages and locate the Plotly library to install it.

Step 2—Import the Necessary Libraries

import pandas as pd

import plotly.express as px

import random

Step 3—Generate Synthetic Data

data = {

'STUDENT_ID': [f'STUDENT-{random.randint(1000, 9999)}' for _ in range(200)],

'AGE': [random.randint(6, 18) for _ in range(200)],

'CLASS': [f'Class {random.randint(1, 12)}' for _ in range(200)]

}

Step 4—Create a DataFrame

df = pd.DataFrame(data)

Step 5—Count the Number of Students in Each Class

class_counts = df['CLASS'].value_counts().reset_index()

class_counts.columns = ['CLASS', 'COUNT']

Step 6—Create a Bar Chart to Visualize the Number of Students in Each Class:

fig = px.bar(class_counts, x='CLASS', y='COUNT', title='Number of Students per Class')

Step 7—Display the Chart

fig

Now, let's move on to explore how you can use Snowflake Snowpark API to process data.

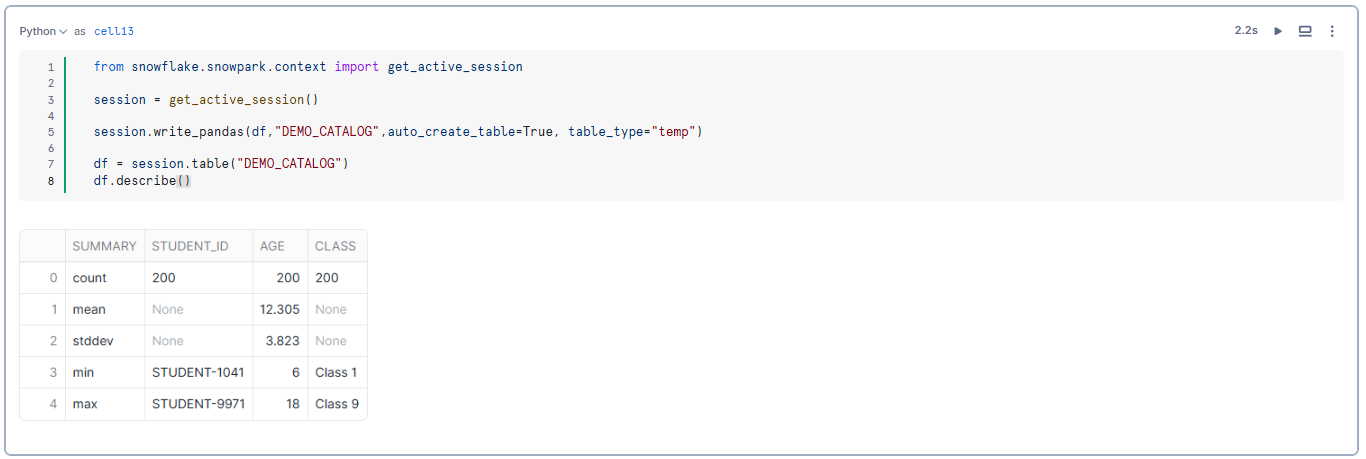

📓 Using Snowflake Snowpark Python API to Process Data

Snowflake Notebooks not only support your favorite Python data science libraries but also integrate seamlessly with the Snowflake Snowpark Python API, enabling scalable data processing within the notebook environment.

Snowpark Python API allows you to execute complex data transformations and operations directly on Snowflake tables using Python, providing a powerful toolset for data engineers and scientists.

To start using the Snowflake Snowpark Python API, you first need to get a session. The session serves as the primary interface to interact with Snowflake's Python API within the notebook.

from snowflake.snowpark.context import get_active_session

session = get_active_session()

The session variable is the entry point that gives you access to Snowflake's Python API.

Here, we use the Snowpark Python API to write a pandas DataFrame as a Snowpark table named DEMO_CATALOG.

session.write_pandas(df, "DEMO_CATALOG", auto_create_table=True, table_type="temp")

Now that we have created the DEMO_CATALOG table, we can reference the table and compute basic descriptive statistics with Snowpark.

df = session.table("DEMO_CATALOG")

df.describe()

As you can see, this streamlined integration helps you to leverage Snowflake's robust processing capabilities directly within your Python-based workflows.
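As a further sketch of the same pattern, the function below counts students per class with Snowpark instead of pandas, so the aggregation runs in Snowflake. It takes the session as an argument rather than importing Snowpark itself. The function name `students_per_class` is hypothetical, and it assumes the DEMO_CATALOG table created above, with its CLASS column.

```python
def students_per_class(session, table_name: str = "DEMO_CATALOG"):
    """Return a Snowpark DataFrame with one row per CLASS and its row count.

    `session` is expected to be the object returned by get_active_session().
    Nothing is executed in Snowflake until the result is shown or collected.
    """
    return (
        session.table(table_name)
        .group_by("CLASS")
        .count()
        .sort("CLASS")
    )
```

In a notebook cell you would call it with the active session, for example `students_per_class(session).show()`.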

📓 Referencing Cells and Variables in Snowflake Notebooks

Referencing cells and variables in Snowflake Notebooks allows for seamless integration of outputs across different cell types, which enhances workflow efficiency and dynamic data interaction.

Now, let's dive in and see how you can reference cells and variables in Snowflake Notebooks:

1) Referencing SQL output in Python cells

You can assign names to SQL cells and reference their outputs in subsequent Python cells. This feature is particularly useful for transforming SQL query results into Dataframes for further analysis using Snowflake Snowpark Python API or Pandas Dataframes.

Here is how you can convert SQL cell output to Snowpark or Pandas Dataframes:

To reference the output of a named SQL cell and convert it into a Snowpark Dataframe:

my_snowpark_df = cell8.to_df()

Similarly, to convert the SQL cell output into a Pandas Dataframe:

my_df = cell8.to_pandas()

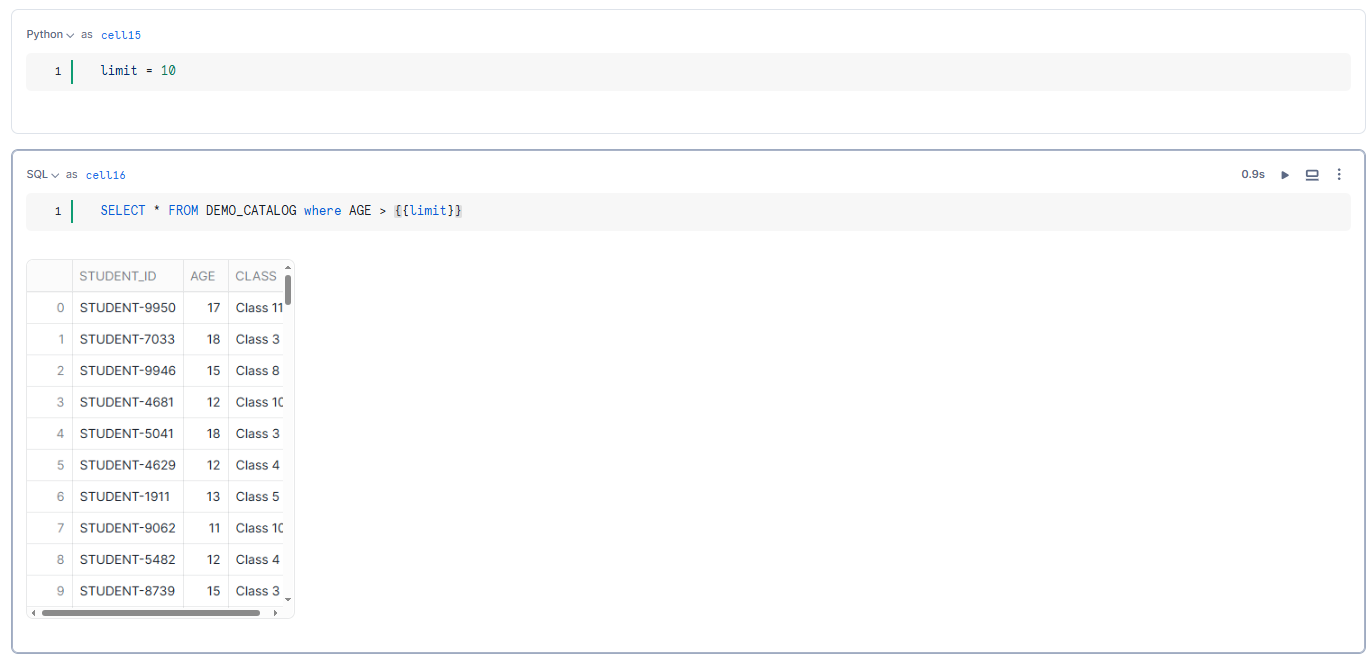

2) Referencing Python Variables in SQL cells

Snowflake Notebooks support the use of Jinja syntax {{...}} to dynamically reference Python variables within SQL cells. This allows for the creation of flexible and parameterized SQL queries.

limit = 10

SELECT * FROM DEMO_CATALOG WHERE AGE > {{limit}}

Likewise, you can also reference a Pandas Dataframe within your SQL statements, making it possible to use the Dataframe directly in SQL queries:

SELECT * FROM {{my_df}}

As you can see, this ability to reference outputs and variables across different cell types in Snowflake Notebooks significantly enhances data manipulation capabilities and allows for more interactive and dynamic data workflows.
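To make the substitution concrete, here is a rough stand-in for what happens to a `{{...}}` placeholder before the SQL runs. It uses a small regular expression rather than the real Jinja engine, so it illustrates the idea only and is not how Snowflake implements it; `render_sql` is a hypothetical name.

```python
import re

_PLACEHOLDER = re.compile(r"\{\{\s*(\w+)\s*\}\}")


def render_sql(template: str, variables: dict) -> str:
    """Replace each {{name}} with the text form of variables[name]."""
    def substitute(match: re.Match) -> str:
        name = match.group(1)
        if name not in variables:
            raise KeyError(f"no Python variable named {name!r}")
        return str(variables[name])

    return _PLACEHOLDER.sub(substitute, template)


limit = 10
sql = render_sql("SELECT * FROM DEMO_CATALOG WHERE AGE > {{limit}}", {"limit": limit})
print(sql)  # SELECT * FROM DEMO_CATALOG WHERE AGE > 10
```

Because the substitution is textual, a string value needs its own quotes in the SQL, for example `WHERE CLASS = '{{class_name}}'` (where `class_name` is a hypothetical Python variable).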

📓 Building an Interactive Data App with Snowflake Streamlit

Snowflake Notebooks seamlessly integrate with Snowflake Streamlit, enabling the creation of interactive data applications directly within your notebooks. Streamlit is a powerful tool that allows data scientists and analysts to build interactive and user-friendly web apps for data exploration and data visualization. This step-by-step guide will show you how to leverage Streamlit within Snowflake Notebooks to create an interactive data app.

Streamlit is already included in Snowflake Notebooks as part of the pre-installed libraries.

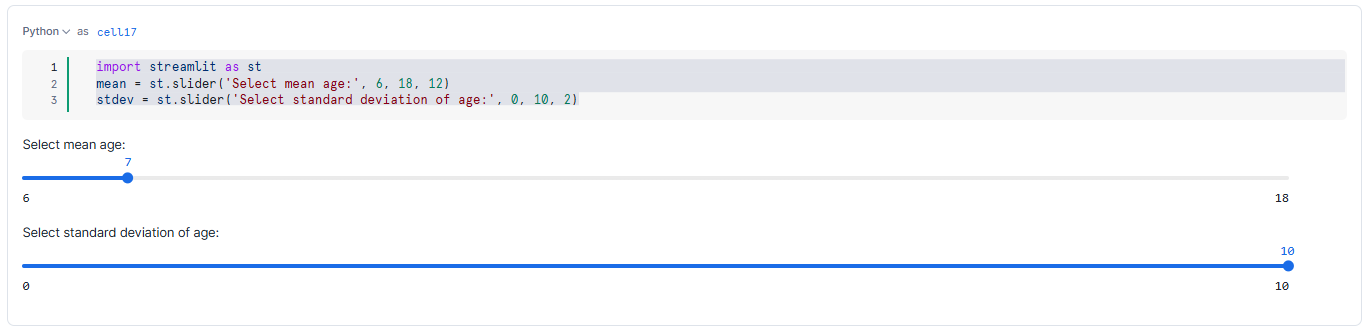

Step 1—Initialize Streamlit and Create Interactive Sliders

First, we'll create interactive sliders using Snowflake Streamlit to allow users to select the mean and standard deviation for generating data.

import streamlit as st

mean = st.slider('Select mean age:', 6, 18, 12)

stdev = st.slider('Select standard deviation of age:', 0, 10, 2)

Step 2—Initialize a Snowpark Session

Initialize a Snowpark session to interact with Snowflake's Python API within the notebook.

from snowflake.snowpark.context import get_active_session

session = get_active_session()

Step 3—Capture Slider Values and Generate Data

Capture the mean and standard deviation values from the sliders and use them to generate a distribution of values to populate a Snowflake table.

CREATE OR REPLACE TABLE STUDENT_DATA AS

SELECT CONCAT('STUDENT-', UNIFORM(1000, 9999, RANDOM())) AS STUDENT_ID,

NORMAL({{mean}}, {{stdev}}, RANDOM()) AS AGE,

'Class ' || UNIFORM(1, 12, RANDOM()) AS CLASS

FROM

TABLE(GENERATOR(ROWCOUNT => 200));

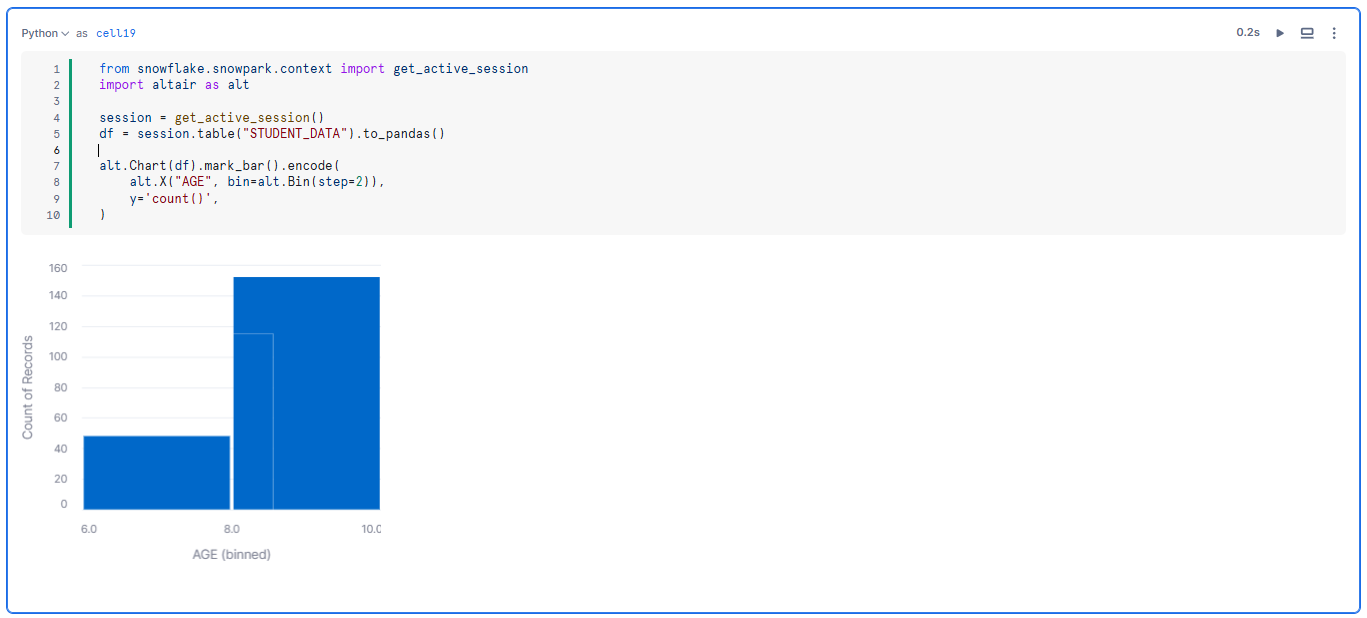

Step 4—Plot the Data Using Altair

Use Altair to plot a histogram of the generated data. This visualization will update automatically as the slider values are adjusted.

from snowflake.snowpark.context import get_active_session

import altair as alt

session = get_active_session()

df = session.table("STUDENT_DATA").to_pandas()

alt.Chart(df).mark_bar().encode(

alt.X("AGE", bin=alt.Bin(step=2)),

y='count()',

)

As you adjust the slider values, the dependent cells re-execute, and the histogram updates based on the new data. This dynamic interactivity makes it easy to explore different data distributions and gain insights in real time.

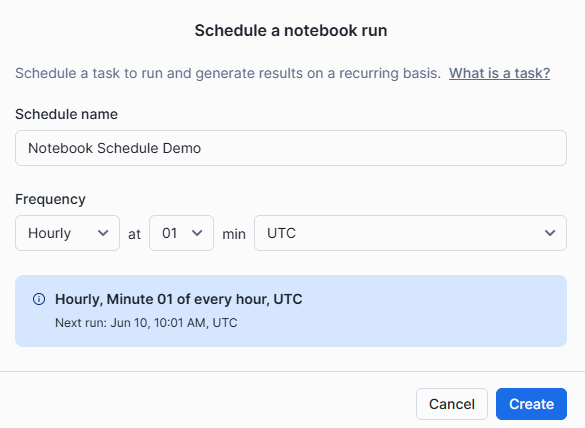

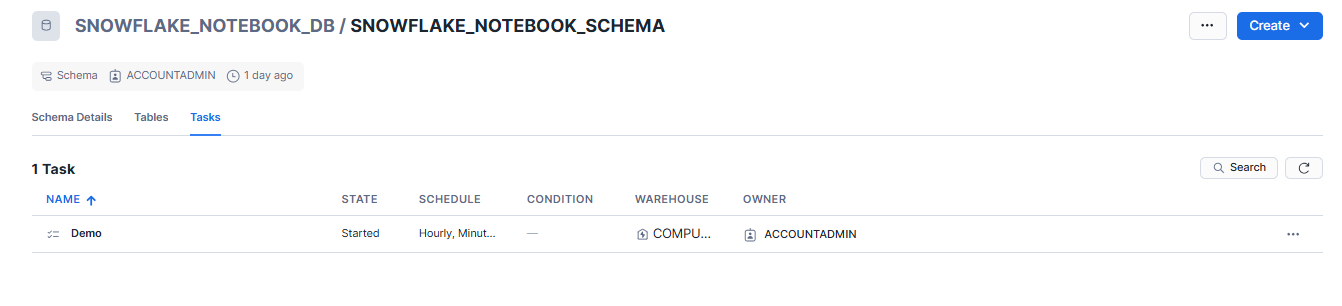

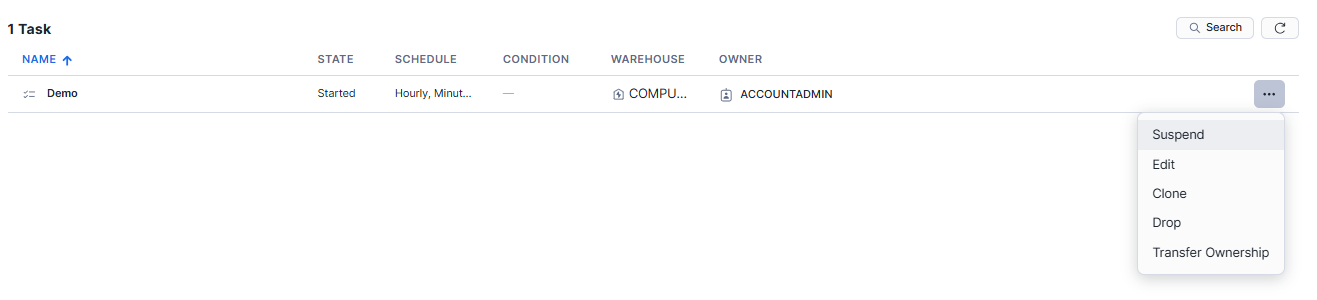

Step 9—Schedule your Snowflake Notebooks

Snowflake Notebooks offer the capability to schedule notebook runs, automating data workflows, and guaranteeing timely updates.

Whenever you create a schedule for your notebook, Snowsight creates a task that runs the notebook according to the specified schedule. The notebook runs in a non-interactive mode, executing each cell sequentially from top to bottom. The task is associated with the notebook owner's role and uses the designated warehouse for execution. If the task fails ten times, it automatically suspends to prevent further resource usage.

Each scheduled run resumes the warehouse and keeps it active until 15 minutes after the task is completed, which might affect billing.

To schedule a notebook, your role must have specific privileges. Make sure the following permissions are granted:

- EXECUTE TASK on the account

- USAGE on the database containing the notebook

- USAGE and CREATE TASK on the schema containing the notebook

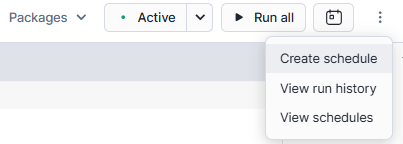

First—Create Snowflake Notebook Schedules

Follow these steps to schedule your notebook:

Step 1—Navigate to the Projects pane and select Notebooks.

Step 2—Find the notebook you want to schedule.

Step 3—In the Snowflake Notebook, select the schedule button and then click on "Create schedule".

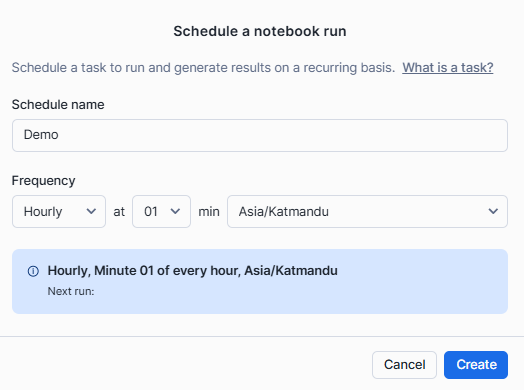

Step 4—The schedule dialog will appear. Provide the following details:

- Schedule Name: Enter a name for the notebook schedule.

- Frequency: Choose the frequency (e.g., Daily, Weekly).

- Scheduled Time: Adjust the time and other options as needed.

Step 5—Review the schedule details and click "Create" to establish the task.

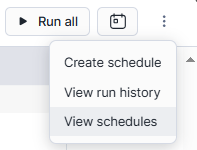
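Since the schedule is backed by a Snowflake task, it may help to see how a daily or weekly choice maps onto the cron-style schedule string that tasks accept (`USING CRON <expression> <time zone>`). The sketch below builds such strings. The helper name `cron_schedule` is hypothetical, and whether Snowsight generates exactly this text for a notebook schedule is an assumption.

```python
from typing import Optional

_DAYS = ("SUN", "MON", "TUE", "WED", "THU", "FRI", "SAT")


def cron_schedule(hour: int, minute: int = 0,
                  weekday: Optional[str] = None,
                  time_zone: str = "UTC") -> str:
    """Build a task schedule string for a daily or weekly run.

    weekday=None means every day; otherwise pass a three-letter day name.
    """
    if not (0 <= hour <= 23 and 0 <= minute <= 59):
        raise ValueError("hour must be 0-23 and minute 0-59")
    if weekday is None:
        day_field = "*"
    else:
        day_field = weekday.upper()
        if day_field not in _DAYS:
            raise ValueError(f"unknown weekday: {weekday!r}")
    # Cron field order: minute, hour, day of month, month, day of week.
    return f"USING CRON {minute} {hour} * * {day_field} {time_zone}"


print(cron_schedule(9))                      # USING CRON 0 9 * * * UTC
print(cron_schedule(18, 30, weekday="mon"))  # USING CRON 30 18 * * MON UTC
```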

Second—Manage Snowflake Notebook Schedules

After creating a schedule, you can manage it through the task list:

Step 1—Select the schedule button in the notebook and choose "View schedules". This displays all tasks in the schema.

Step 2—Use the vertical ellipsis (more actions) menu to edit the schedule, such as changing the time or frequency, or suspending/dropping the task.

For more on scheduling Notebook runs, see Schedule your Snowflake Notebook to run.

14 Tips for Running Snowflake Notebooks Effectively

Tip 1—Understanding Notebook Permissions

Permissions determine whether you and your team can create, modify, and run notebooks. Here are a few things to keep in mind:

- Make sure your role has the necessary privileges, such as CREATE TASK and EXECUTE TASK, which are required for scheduling and running notebooks.

- Use Snowflake’s role-based access controls (RBAC) to manage who can view, edit, and execute notebooks.

- Always verify that the role you are using has USAGE privileges on the database and schema where the notebook resides.

Tip 2—Managing Session Context

Managing the session context effectively guarantees that your Snowflake Notebooks execute in the correct environment:

- Set session variables at the beginning of your notebook to define the context.

- Use fully qualified names for tables and other database objects to avoid ambiguity and ensure correct data access.

- Use SQL commands like USE DATABASE and USE SCHEMA to explicitly set the context when necessary.
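A small helper can make fully qualified names the default in Python cells. This is a sketch under the assumption that your identifiers are simple unquoted names; `qualified_name` is a hypothetical helper, and the database and schema shown are the ones created earlier in this guide.

```python
def qualified_name(database: str, schema: str, obj: str) -> str:
    """Join database, schema, and object into database.schema.object."""
    parts = (database, schema, obj)
    for part in parts:
        # Keep the sketch simple: only plain unquoted identifiers are accepted.
        if not part.replace("_", "").isalnum() or part[0].isdigit():
            raise ValueError(f"not a simple identifier: {part!r}")
    return ".".join(parts)


table = qualified_name("snowflake_notebooks_db", "snowflake_notebooks", "STUDENT_DATA")
print(table)  # snowflake_notebooks_db.snowflake_notebooks.STUDENT_DATA
```

The resulting string can then be passed wherever a table name is expected, such as `session.table(table)`.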

Tip 3—Specifying the Appropriate Warehouse

Choosing the right warehouse is essential for optimizing performance and managing costs. The warehouse you select should be appropriately sized for your data operations.

For heavy data processing tasks, a larger warehouse might be necessary, while smaller operations can run on a smaller warehouse. Remember that warehouse costs are incurred while they are running, so it's important to manage and monitor their usage effectively.

Adjusting the compute resources based on the data size and complexity can significantly impact the efficiency and cost-effectiveness of your workflows.
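To make the size and cost trade-off concrete, here is a back-of-the-envelope estimate. It assumes the commonly published credit rates for standard warehouses (X-Small = 1 credit per hour, doubling with each size) and per-second billing with a 60-second minimum. Verify both against your own account's pricing before relying on the numbers.

```python
# Assumed credits per hour for standard warehouses; check your account's pricing.
CREDITS_PER_HOUR = {"XSMALL": 1, "SMALL": 2, "MEDIUM": 4, "LARGE": 8, "XLARGE": 16}


def estimate_credits(size, runtime_seconds):
    """Estimate the credits used by one continuous run of a warehouse."""
    # Assumption: at least 60 seconds are billed each time a warehouse starts.
    billed_seconds = max(runtime_seconds, 60)
    return CREDITS_PER_HOUR[size.upper()] * billed_seconds / 3600


# A 15-minute job on two different sizes.
print(estimate_credits("MEDIUM", 15 * 60))
print(estimate_credits("LARGE", 15 * 60))
```

The estimate ignores idle time before auto-suspend. Under these assumptions, a larger warehouse pays for itself only if it shortens the run by at least the same factor as its rate increase.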

Tip 4—Verify Required Python Packages

Snowflake Notebooks come pre-installed with several common Python packages. However, you may need additional packages for specific tasks. Use the package picker to verify and add the necessary Python packages before you begin your analysis.

If you encounter errors related to missing packages, check the compatibility and availability of those packages within the Snowflake environment.

Tip 5—Structure Your Notebook for Clarity

A well-structured notebook is easier to read, understand, and maintain:

- Organize your notebook into clear sections with descriptive titles and comments. Use Markdown cells to add explanations and context.

- Maintain consistent formatting for code and text to enhance readability.

- Include comments to document any assumptions or important details about the data and analysis.

Tip 6—Automatic Draft Saving

Snowflake Notebooks automatically save drafts every few seconds, which helps prevent data loss. Although automatic saving is useful, you should still regularly commit significant changes to your version control system to maintain a comprehensive history.

Tip 7—Integrating with Git

Integrating your Snowflake Notebooks with Git provides powerful version control and collaboration capabilities. Sync your notebooks with repositories in GitHub, GitLab, Bitbucket, or Azure DevOps.

This integration allows you to track changes, collaborate with team members, and maintain a history of notebook versions. Using Git also facilitates continuous integration and deployment (CI/CD) practices, which helps keep your notebooks up to date and consistent.

Tip 8—Maintaining Sequential Cell Execution

Make sure that your notebook cells are executed in the correct sequence.

Python cells do not support non-linear execution order, and there is no global referencing. If you rerun an earlier cell that declares a variable, you must also rerun all the cells below it so that they pick up the new value.

Tip 9—Executing Snowflake Scripting Blocks

Snowflake Notebooks do not support the BEGIN … END syntax for Snowflake scripting within SQL cells. Instead, use the session.sql().collect() method in a Python cell to execute scripting blocks. This approach allows you to run complex transactional logic and procedural code within your notebooks.
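A minimal sketch of this workaround follows. The scripting block and the `demo_log` table are hypothetical. The helper takes the session as a parameter so that the snippet can be read and checked outside Snowflake. Inside a notebook, you would pass the session returned by `get_active_session()`.

```python
# Hypothetical Snowflake Scripting block; demo_log is an illustrative table name.
SCRIPTING_BLOCK = """
BEGIN
    CREATE TABLE IF NOT EXISTS demo_log (msg STRING);
    INSERT INTO demo_log VALUES ('notebook run');
    RETURN 'done';
END;
"""


def run_scripting_block(session, block):
    """Send a scripting block through a Snowpark session and return the rows."""
    return session.sql(block).collect()


# Inside a Snowflake Notebook (not executed here):
# from snowflake.snowpark.context import get_active_session
# result = run_scripting_block(get_active_session(), SCRIPTING_BLOCK)
```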

Tip 10—Regularly Refresh the Notebook or Check Package Versions if Errors Occur

If you encounter errors or unexpected behavior, try refreshing your notebook and checking the versions of your Python packages. Compatibility issues can arise when packages are updated or when the notebook environment is refreshed. Regularly refreshing the notebook helps ensure that you are working with the most current environment and package versions.
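One way to check package versions from a Python cell is the standard library's `importlib.metadata`. This is a sketch that assumes the notebook environment exposes standard package metadata. The package names in the example call are only illustrative.

```python
from importlib.metadata import PackageNotFoundError, version


def report_versions(packages):
    """Map each package name to its installed version, or None if not installed."""
    report = {}
    for name in packages:
        try:
            report[name] = version(name)
        except PackageNotFoundError:
            report[name] = None
    return report


# Illustrative package names; replace them with the ones your notebook uses.
print(report_versions(["pandas", "snowflake-snowpark-python", "streamlit"]))
```

A `None` entry suggests that the package has not been added through the package picker yet.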

Tip 11—Leverage Snowflake’s Role-Based Access Controls (RBAC)

Snowflake’s RBAC features allow you to manage permissions and secure access to your notebooks and data. Use RBAC to control who can view, edit, and execute notebooks.

Tip 12—Utilizing Keyboard Shortcuts

Keyboard shortcuts can significantly enhance your productivity when working with Snowflake Notebooks. Familiarize yourself with the available shortcuts to navigate and edit your notebook more efficiently.

| Command | Shortcut |
| --- | --- |
| Run this cell and advance | SHIFT + ENTER |
| Run this cell only | CMD + ENTER |
| Run all cells | CMD + SHIFT + ENTER |
| Add cell BELOW | b |
| Add cell ABOVE | a |
| Delete this cell | d + d |

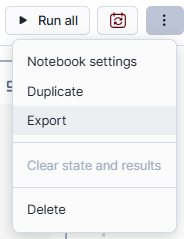

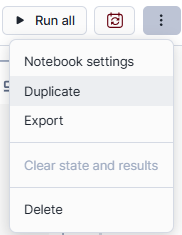

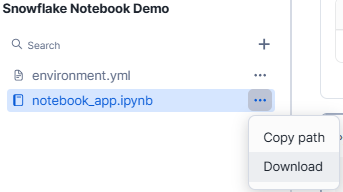

Tip 13—Exporting, Duplicating and Downloading Snowflake Notebooks

Snowflake Notebooks can be exported, duplicated, and downloaded for backup and sharing purposes. Exporting a notebook allows you to create a snapshot of your work that can be shared with others or stored for future reference. To do so, click on the action menu located at the top of the Snowflake Notebook and select “Export”.

Duplicating a notebook is useful when you want to experiment with changes without affecting the original version. To do so, click on the action menu and select “Duplicate”.

Downloading notebooks as .ipynb files allows you to work with them in other environments, such as Jupyter Notebooks. To do so, navigate to the left side panel, select the notebook you want to download, click on the menu option, and hit “Download”.

Tip 14—Importing Existing Projects

You can import existing .ipynb projects into Snowflake Notebooks to continue development and analysis within the Snowflake environment.

To import a project, navigate to the Notebooks section in Snowsight, select the import .ipynb file option, and upload your .ipynb file.
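Before uploading, it can help to confirm that the file is a well-formed notebook. An .ipynb file is a JSON document with a top-level `cells` list, so a quick local check might look like the sketch below. The sample notebook is built in memory purely for illustration. With a real file, you would read its text from disk first.

```python
import json


def summarize_ipynb(text):
    """Parse notebook JSON and count its cells by type."""
    notebook = json.loads(text)
    counts = {}
    for cell in notebook.get("cells", []):
        kind = cell.get("cell_type", "unknown")
        counts[kind] = counts.get(kind, 0) + 1
    return {"nbformat": notebook.get("nbformat"), "cells": counts}


# Tiny in-memory notebook used only for illustration.
sample = json.dumps({
    "nbformat": 4,
    "nbformat_minor": 5,
    "metadata": {},
    "cells": [
        {"cell_type": "markdown", "metadata": {}, "source": ["# Title"]},
        {"cell_type": "code", "metadata": {}, "source": ["print('hi')"],
         "outputs": [], "execution_count": None},
    ],
})
summary = summarize_ipynb(sample)
print(summary)
```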

That’s it! This is everything you need to know to get started with using Snowflake Notebooks.

Further Reading:

📖 Read:

- Snowflake Notebook Demos

- Develop and run code in Snowflake Notebooks

- Snowflake Notebooks, Interactive Environment for Data & AI Teams

- Experience Snowflake with notebooks

- A Getting Started Guide With Snowflake Notebooks

📺 Watch:

- Snowflake Notebooks 101: SQL, Python, Streamlit & Snowpark

- Getting Started With Your First Snowflake Notebook

- End-to-End Machine Learning with Snowflake Notebooks

- Using Snowflake Notebooks To Build Data Engineering Pipelines With Snowpark Python And SQL

Conclusion

And that’s a wrap! Snowflake Notebooks bring together data analysis, machine learning, and data engineering, all within the Snowflake ecosystem. With their interactive, cell-based interface, close integration with other Snowflake tools, and built-in scheduling and automation, Snowflake Notebooks have the potential to transform how data professionals interact with their data.

In this article, we have covered:

- What Is a Notebook in Snowflake?

- Potential of Snowflake Notebooks—Key Features and Capabilities

- Step-by-Step Guide to Setting Up and Using Snowflake Notebooks

- 14 Tips for Running Snowflake Notebooks Effectively

…and so much more!

FAQs

What is Snowflake Notebooks?

Snowflake Notebooks is an interactive development environment within Snowsight that supports Python, SQL, and Markdown. It enables users to perform data analysis, develop machine learning models, and carry out data engineering tasks within the Snowflake ecosystem.

Is Snowflake Notebooks a separate product or an integrated part of the Snowflake platform?

Snowflake Notebooks is an integrated part of the Snowflake platform, designed to work seamlessly with other Snowflake components and leverage the platform's scalability, security, and governance features.

What programming languages are supported in Snowflake Notebooks?

Snowflake Notebooks support Python, SQL, and Markdown cells, allowing users to write and execute code, as well as document and format their work using the Markdown syntax.

How do you create a new cell in Snowflake Notebooks?

Select the desired cell type button (SQL, Python, or Markdown) to create a new cell. You can change the cell type anytime using the language dropdown menu.

How do you move cells in Snowflake Notebooks?

Cells can be moved by either dragging and dropping or using the actions menu.

How do you delete cells in Snowflake Notebooks?

Select the vertical ellipsis (more actions), choose delete, and confirm the action.

How do you stop a running cell in Snowflake Notebooks?

Select the stop button on the top right of the cell to stop the current cell and any subsequent cells scheduled to run.

How do you format text with Markdown in Snowflake Notebooks?

Add a Markdown cell and type valid Markdown syntax to format your text. The formatted text appears below the Markdown syntax.

How do you install additional Python packages in Snowflake Notebooks?

Click on the Packages dropdown at the top right of the notebook interface, type the package name, and add it to your notebook environment.

How do you use the Snowpark API in Snowflake Notebooks?

Initialize a session using get_active_session() from the snowflake.snowpark.context module, then use the session object to interact with the Snowpark API.

How do you reference cells and variables in Snowflake Notebooks?

You can assign names to SQL cells and reference their outputs in Python cells using cell_name.to_df() or cell_name.to_pandas(). You can also reference Python variables in SQL cells using Jinja syntax {{variable_name}}.

Can I visualize my data within Snowflake Notebooks?

Yes, Snowflake Notebooks provide built-in support for data visualization using popular libraries such as Matplotlib, Plotly, and Altair. Also, users can leverage Streamlit to build interactive data applications directly within their notebooks.

How do Snowflake Notebooks integrate with Git for version control?

Snowflake Notebooks offer built-in integration with Git, allowing users to version control their notebooks and collaborate with team members effectively. Supported hosting services include GitHub, GitLab, Bitbucket, and Azure DevOps.

Can I schedule the execution of my Snowflake Notebooks?

Yes, Snowflake Notebooks provide a scheduling feature that allows users to automate the execution of their notebooks at specified intervals. Users can monitor the progress of scheduled tasks and inspect the results of past runs directly from the notebook interface.

How do you export, duplicate, or download Snowflake Notebooks?

To export or duplicate a notebook, click on the action menu at the top and select "Export" or "Duplicate". To download a notebook as an .ipynb file, navigate to the left side panel, select the notebook, click on the menu option, and select "Download".