Databricks' unified lakehouse architecture has revolutionized the data analytics and warehousing landscape, streamlining data management while enhancing performance and scalability for data processing operations. Core to this innovation is Databricks SQL—a powerful and intelligent data warehouse powered by DatabricksIQ, the Data Intelligence Engine that recognizes the uniqueness of your data. Databricks SQL democratizes analytics for both technical and business users. There are several distinct types of Databricks SQL warehouses available: Serverless, Pro, and Classic—each tailored to specific use cases and performance demands.

In this article, we will cover everything you need to know about different types of Databricks SQL warehouses, including Serverless, Pro, and Classic Databricks SQL warehouses.

What Is Databricks SQL?

Databricks SQL is an intelligent data warehouse solution that leverages the Databricks platform's capabilities. It's got a special engine, DatabricksIQ, that makes it happen. This tool is designed for two types of users: technical users like data engineers and scientists, and business users who need to get insights from data. It helps you do fast, scalable analytics by providing a unified platform for data management, analysis, and visualization.

Databricks SQL Warehouse - Databricks Serverless SQL

Key Features/Characteristics of Databricks SQL

1) Lakehouse Architecture

Databricks SQL combines data storage and analytics in a single platform, simplifying the management of data lakes and warehouses.

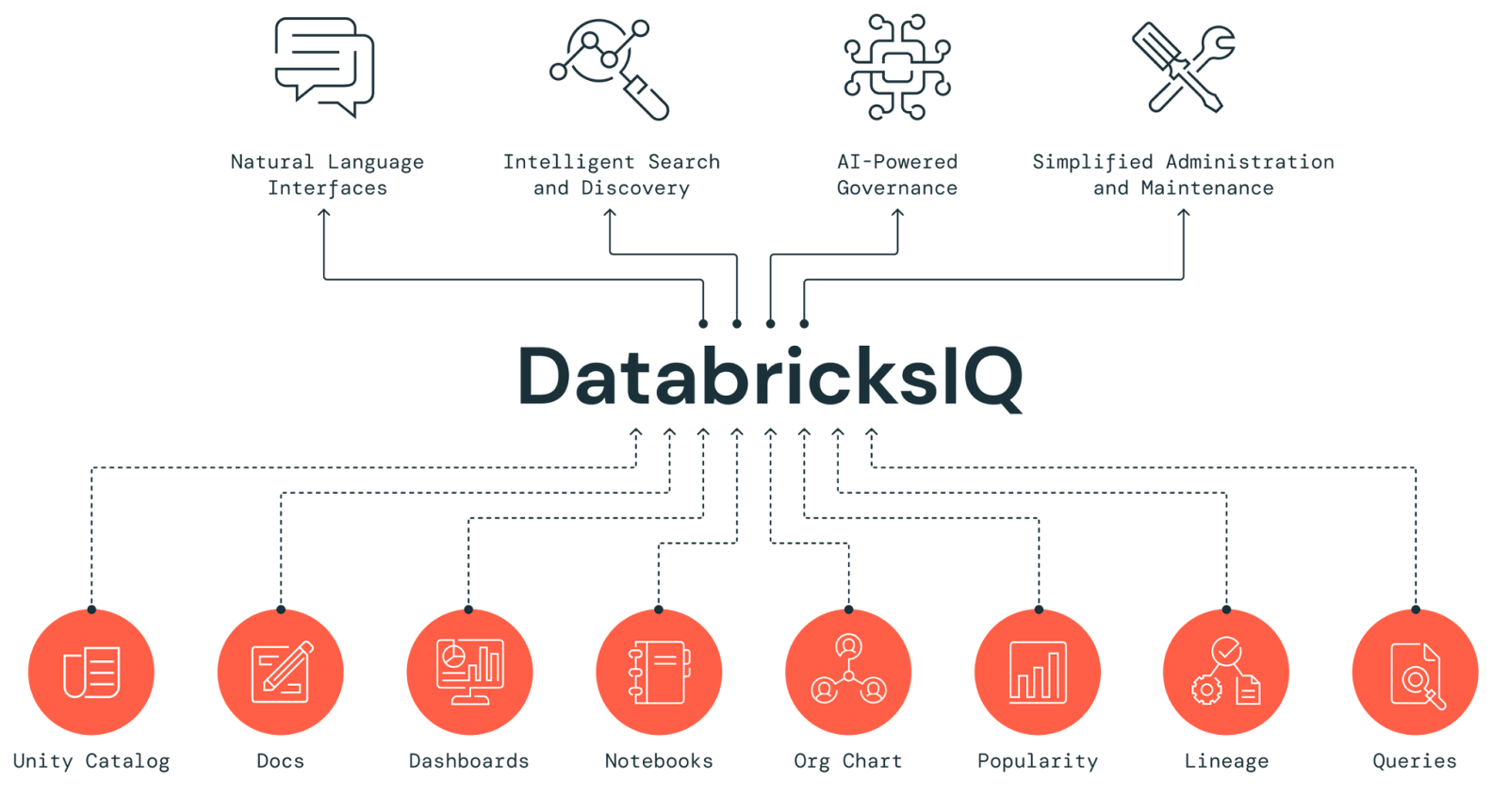

2) DatabricksIQ

This intelligent engine automatically optimizes data queries and management, improving performance and efficiency.

3) Databricks Serverless Compute

Databricks SQL offers flexible, on-demand computing resources that scale automatically to meet workload needs, reducing management overhead.

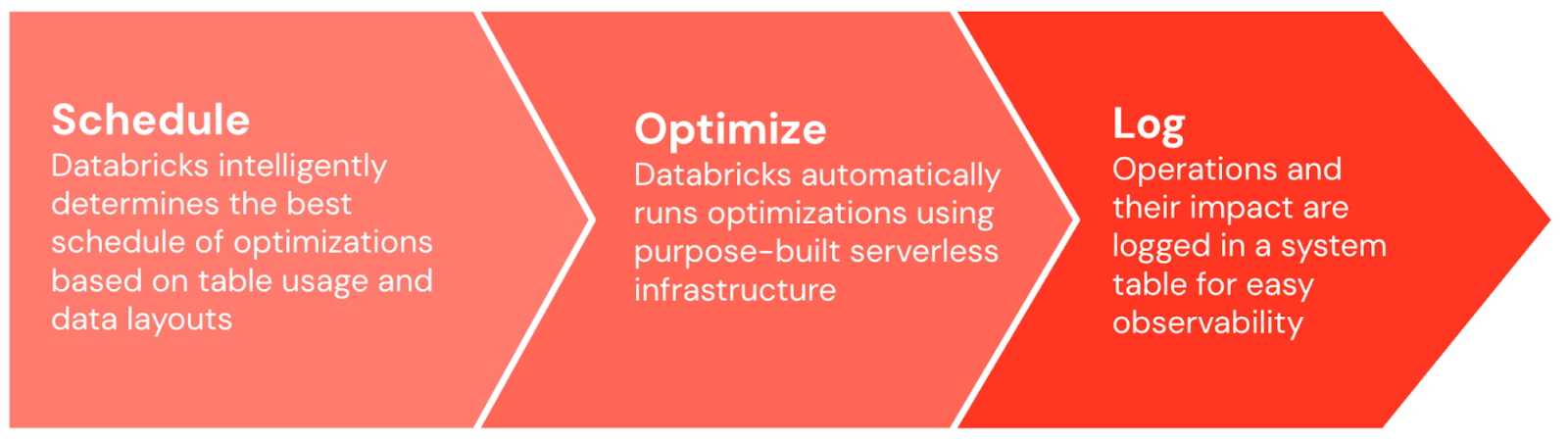

4) Predictive Optimizations

The platform uses machine learning to dynamically enhance query performance and resource allocation.

5) Unified Governance via Unity Catalog

Databricks SQL ensures consistent data governance and access control across the organization through Databricks Unity Catalog, which provides a unified security model.

6) Natural Language Processing

Users can interact with their data using natural language queries, making analytics accessible to non-technical users without the need for coding.

7) 12x better price/performance

Databricks SQL is designed to provide the best possible performance at the lowest possible cost.

8) Photon Engine

This advanced vectorized query engine significantly boosts query performance, especially for large datasets.

9) Integration with BI Tools

Databricks SQL seamlessly connects with business intelligence tools like Power BI, Tableau, and Looker, enabling straightforward data visualization and reporting.

10) Diverse ecosystem

Databricks SQL integrates seamlessly with popular tools like Fivetran, dbt, Power BI, Tableau, and Looker, allowing users to ingest, transform, and query all their data in one place.

For more details, see What makes Databricks SQL stand out.

Save up to 50% on your Databricks spend in a few minutes!

What Are the Benefits of Databricks SQL?

Databricks SQL offers a wide range of benefits. Here are some of the key benefits of using Databricks SQL:

1) Serverless and Cost-Effective

Databricks SQL operates in a serverless environment, meaning you don’t have to manage or scale infrastructure.

2) Built-in Governance

Databricks SQL integrates with Databricks Unity Catalog, providing a unified governance model that ensures data security and compliance.

3) Diverse Tool Integrations

Databricks SQL integrates smoothly with popular tools such as Fivetran for data ingestion, dbt for data transformation, and business intelligence platforms like Power BI, Tableau, and Looker.

4) Streamlined Analytics

Databricks SQL simplifies access to the latest data, enabling quick transitions from business intelligence to machine learning. It removes data silos, providing a unified platform for all analytics needs.

5) Easy Data Ingestion and Transformation

Ingesting and transforming data with Databricks SQL is straightforward. You can easily import data from cloud storage and enterprise applications and transform it in place using built-in ETL capabilities or your chosen tools.

For more details, see Key Benefits of Databricks SQL.

What is a SQL warehouse in Databricks?

Databricks SQL warehouse is a compute resource that allows users to query and explore data efficiently using SQL. It's essentially a cluster optimized for running SQL queries, providing the computational power needed to process large datasets quickly and efficiently.

Types of Databricks SQL Warehouse

Databricks offers three types of SQL warehouses:

- Serverless Databricks SQL Warehouse

- Pro Databricks SQL Warehouse

- Classic Databricks SQL Warehouse

Each of these types has its own characteristics and use cases, which we'll explore in detail in the following sections.

SQL Warehouse Types in Databricks

1) Serverless Databricks SQL Warehouses

Serverless SQL warehouses are the latest addition to the Databricks SQL lineup, and they're quickly becoming the recommended option for most use cases. But what exactly are they?

Serverless Databricks SQL warehouses provide a fully managed environment where compute resources are automatically scaled based on demand. This type of SQL warehouse eliminates the need for users to manage infrastructure, allowing them to focus on data analysis.

Here are some of the key features of Serverless Databricks SQL warehouses:

- Databricks Serverless SQL warehouses can start up in a matter of seconds (typically 2-6 seconds), compared to minutes for other types.

- Databricks Serverless SQL warehouses can quickly add or remove compute resources based on query demand, optimizing both performance and cost.

- Databricks takes care of all the underlying infrastructure, including capacity management, patching, and upgrades.

- Databricks Serverless SQL warehouses can admit queries closer to the hardware's limitations, maximizing resource utilization.

- Databricks Serverless SQL warehouses automatically provision and scale the resources as needed, preventing over-provisioning and reducing idle times, resulting in a cheaper total cost of ownership.

- Databricks Serverless SQL warehouses can access data from any supported region, regardless of where the warehouse is located.

Performance Capabilities

Serverless Databricks SQL warehouses support all of Databricks SQL's advanced performance features, like:

Databricks Photon Engine is a high-performance, vectorized query engine designed to significantly accelerate the execution of SQL and DataFrame workloads.

Predictive I/O is a suite of features for speeding up selective scan operations in SQL queries. It can accelerate data reads and updates, delivering significant performance improvements for data processing tasks.

Intelligent workload management (IWM) is a set of features that optimizes the performance and cost-effectiveness of serverless SQL warehouses by dynamically managing resources based on real-time workload demands. Utilizing AI-powered predictions, IWM analyzes incoming queries to allocate the appropriate compute resources quickly. It automatically scales resources up or down as needed, monitors query queues, and optimizes resource utilization to enhance overall system performance while minimizing costs.

2) Pro Databricks SQL Warehouse

Pro Databricks SQL warehouses are the middle ground between serverless and classic warehouses. They offer more control over the underlying infrastructure while still providing advanced performance features.

Unlike Serverless Databricks SQL warehouses, Pro Databricks SQL warehouses operate within the user's cloud account, providing greater control over the networking and compute environment. But this means they take longer to start up (typically around 4 minutes) and don't scale as dynamically as serverless warehouses.

Here are some of the key features of Pro Databricks SQL warehouses:

- Pro Databricks SQL warehouses allow you to connect to databases in your network or on-premises, enabling hybrid architectures.

- Pro Databricks SQL warehouses give you more control over the underlying infrastructure, which can be super-efficient for compliance or specific performance tuning needs.

- Administrators can configure settings such as Databricks cluster size and auto-stop features to optimize performance based on specific use cases.

- Pro Databricks SQL warehouses are not as dynamic as Databricks SQL Serverless warehouses but still offer auto scaling capabilities.

Performance Capabilities

Pro Databricks SQL warehouses support only two of the three Databricks SQL's advanced performance features, like:

Pro Databricks SQL warehouses do not support Intelligent Workload Management, which means they're less responsive to rapidly changing query demands compared to serverless warehouses.

3) Classic Databricks SQL Warehouse

Classic Databricks SQL warehouses are the original offering from Databricks. While they're still supported, they offer the most basic type, providing essential SQL querying capabilities without some of the advanced features found in Serverless and Pro Databricks SQL warehouses.

Like Pro Databricks SQL warehouses, Classic Databricks SQL warehouses run in your own cloud account. They have similar startup times to Pro warehouses (around 4 minutes) and offer the least dynamic scaling capabilities.

Here are some of the key features of Classic Databricks SQL warehouses:

- Basic Functionality: Classic warehouses provide the essential SQL query capabilities.

- Familiar Model: Classic Databricks SQL warehouses may feel more familiar to those coming from traditional data warehouses.

- Manual Resource Management: Classic Databricks SQL warehouses give users complete control over the setup of compute resources.

- Stable Environment: Classic Databricks SQL warehouses offer a consistent performance profile for predictable workloads.

- Legacy Support: Classic Databricks SQL warehouses are useful for supporting older workflows that haven't been optimized for newer warehouse types.

Performance Capabilities

Classic Databricks SQL warehouses support only one of the advanced performance features:

Classic Databricks SQL warehouses do not support Predictive IO or Intelligent Workload Management, which means they offer the least optimized performance among the three warehouse types.

Performance Showdown—Serverless vs Pro vs Classic Databricks SQL Warehouses

To help you understand the differences between the three warehouse types, let's compare them across various performance and operational factors:

| Features | Serverless Databricks SQL warehouse | Pro Databricks SQL warehouse | Classic Databricks SQL warehouses |

| Photon Engine | ✅ | ✅ | ✅ |

| Predictive IO | ✅ | ✅ | ❌ |

| Intelligent Workload Management (IWM) | ✅ | ❌ | ❌ |

| Startup Time | 2-6 seconds | ~4 minutes | ~4 minutes |

| Autoscaling | Rapid and dynamic | Moderate | None |

| Workload Type | All types, especially variable loads (ETL, BI, Exploratory Analysis) | Steady, predictable loads + Custom workloads | Basic SQL workloads |

| Compute Layer Location | Databricks Account | Customer Cloud Account | Customer Cloud Account |

As you can see clearly, Serverless Databricks SQL warehouse offers the most advanced features and the best performance, especially for workloads with variable demands. Pro Databricks SQL warehouses are a good middle ground, offering advanced features with more control over the infrastructure. Classic Databricks SQL warehouses, while still useful in certain scenarios, offer the most basic functionality and performance.

Check out this article to understand the pricing structure of Databricks SQL.

Databricks SQL Avilability Region

Below is the list of regions where Databricks SQL warehouses are available:

1) AWS Regions:

- US East (Northern Virginia)

- US East (Ohio)

- US West (Oregon)

- US West (California)

- Canada (Central)

- EU (Frankfurt)

- EU (France)

- EU (Ireland)

- EU (London)

- South America (São Paulo)

- Asia Pacific (Mumbai)

- Asia Pacific (Seoul)

- Asia Pacific (Singapore)

- Asia Pacific (Sydney)

- Asia Pacific (Tokyo)

2) Azure Regions:

- US East

- US East 2

- US West

- US West 2

- US West 3

- US Central

- US North Central

- US South Central

- US West Central

- US Gov Virginia

- US Gov Arizona

- Canada Central

- Canada East

- North Europe

- West Europe

- France Central

- Germany West Central

- Australia East

- Australia Central 2

- Australia Central

- Australia Southeast

- UK South

- UK West

- Norway East

- Sweden Central

- Switzerland North

- Switzerland West

- Brazil South

- South Africa North

- CN East 2

- CN East 3

- CN North 2

- CN North 3

- Asia East

- Asia Southeast

- UAE North

- Korea Central

- India Central

- India South

- India West

- Japan East

- Japan West

3) Google Cloud Regions:

- Asia Pacific (Singapore)

- Asia Pacific (Tokyo)

- Australia (Sydney)

- EU (Belgium)

- EU (England)

- EU (Frankfurt)

- US (Iowa)

- US (South Carolina)

- US (Virginia)

- US (Oregon)

- US (Nevada)

- Canada (Quebec)

- India (Mumbai)

What Is the Default SQL Warehouse in Databricks?

The default SQL warehouse type in Databricks depends on several factors, including your region and how you're creating the warehouse. Here's a breakdown:

For Workspaces in Regions that Support Serverless SQL:

- Using UI: The default warehouse type is Serverless.

- Using Databricks SQL Warehouses API: The default is Classic unless enable_serverless_compute is set to true.

- Legacy External Hive Metastore: Defaults to Pro in UI and Classic in API.

For Workspaces in Regions that Do Not Support Serverless SQL:

- Using UI: The default warehouse type is Pro.

- Using Databricks SQL Warehouses API: The default is Classic.

Keep in mind that Databricks is always making changes to its product, so these defaults might not stay the same. For the latest info, check the most recent docs.

Step-By-Step Guide to Creating a Databricks SQL Warehouse

Now that we understand the different types of SQL warehouses in Databricks, let's walk through the process of creating one. We'll use the Databricks UI, but you can also follow these steps using the API or other tools.

Prerequisites

Before you start, make sure you have:

- Databricks workspace

- Appropriate permissions (workspace admin or user with unrestricted Databricks cluster creation permissions)

- If you are creating a serverless warehouse, make sure your region supports it and you've completed any required steps to enable Databricks serverless SQL warehouses

Step 1—Login to Databricks

First, log into your Databricks workspace. You should land on the homepage of your Databricks environment.

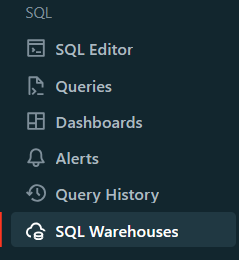

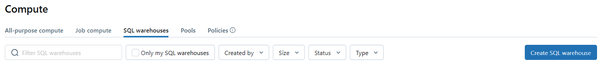

Step 2—Navigate the SQL Warehouses Section

In the sidebar, click on "SQL Warehouses". This will take you to the SQL warehouses management page.

Step 3—Click on "Create SQL Warehouse"

Look for the "Create SQL Warehouse" button, typically located in the upper right corner of the page. Click on it to start the creation process.

Step 4—Configure Basic Databricks SQL Warehouse Settings

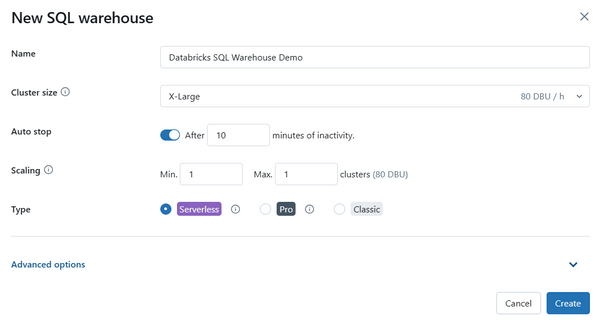

In the creation form, you'll need to configure several basic settings:

1) Name: Give your warehouse a descriptive name. This will help you identify it later.

2) Databricks Cluster Size: This represents the size of the driver node and the number of worker nodes. The default is X-Large, but you can adjust this based on your performance needs. Remember, larger sizes will process queries faster but cost more.

3) Auto Stop: This setting determines how long the warehouse will remain idle before automatically stopping.

For serverless warehouses, the default is 10 minutes (minimum 5 minutes).

For Pro and Classic warehouses, the default is 45 minutes (minimum 10 minutes).

4) Scaling: Here, you can set the minimum and maximum number of Databricks clusters for your warehouse. The default is a minimum and maximum of one Databricks cluster. Increase the maximum if you expect high concurrency.

5) Type: Choose between Serverless, Pro, or Classic.

Serverless is typically the recommended option for most use cases.

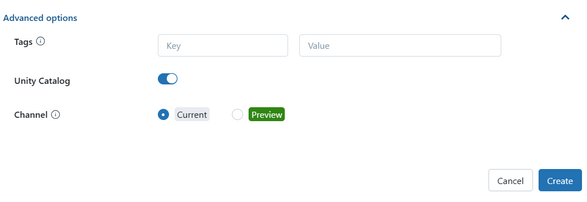

Step 5—Configure Advance Databricks SQL Warehouse Settings

Expand the "Advanced Options" section to configure additional settings, like:

1) Tags: You can add key-value pair tags to help with resource tracking and cost management.

2) Unity Catalog: If Databricks Unity Catalog is enabled for your workspace, you can toggle it to use it with this Databricks SQL warehouse.

3) Channel: You can choose between the stable channel or the preview channel.

Preview Channel lets you test out new features (not recommended for production workloads)

Step 6—Create Databricks SQL Warehouse

Once you have configured all the settings, click the “Create” button at the end of the form. Databricks will then provision your new SQL warehouse.

After you've created your warehouse, you can take control of it by starting or stopping it, tweaking its settings, or setting permissions.

What Is the Difference Between Cluster and SQL Warehouse in Databricks?

Both Databricks clusters and Databricks SQL warehouses give you compute power to process data. But they're not exactly the same. Knowing how they differ can help you pick the one that's best for you.

Check out this table that breaks down the main differences:

| Databricks Clusters | Databricks SQL Warehouse |

| Databricks Clusters is a general-purpose compute for running a wide range of workloads including batch processing, streaming, machine learning, and SQL queries. | Databricks SQL warehouse is optimized for running interactive SQL queries and BI workloads, providing high performance and scalability for data warehousing. |

| Databricks Clusters provide isolated, customizable virtual environments with dedicated resources for running Apache Spark jobs, supporting diverse workloads and applications. | Databricks SQL warehouse offers scalable SQL compute resources that are decoupled from storage, tailored specifically for SQL workloads, and optimized for query performance and cost efficiency. |

| Databricks Clusters are versatile and can be used for ETL, data processing, streaming, and iterative development tasks, allowing customization in terms of node types, libraries, and resources. | Databricks SQL warehouses are specifically designed to handle high-concurrency SQL queries, with features like the Photon engine and Predictive IO for enhanced query performance and efficient resource utilization. |

| Databricks Clusters support both auto-scaling and manual scaling based on workload demands, making them flexible for varied compute needs. | Databricks SQL warehouse, especially the Serverless option, auto-scale rapidly to meet workload demands, ensuring optimal performance for SQL queries with minimal manual intervention. |

| Databricks Clusters can be secured with granular access controls and integrated with secure networking configurations, suitable for environments requiring strong isolation and custom security setups. | Databricks SQL warehouse integrate deeply with Unity Catalog for centralized governance, data discovery, and security, providing a streamlined solution for data governance and audit trails. |

| Databricks Clusters typically take a few minutes to start up, depending on their configuration and resource allocation, but offer a broad range of customization options. | Databricks SQL warehouse, particularly Serverless, offer fast startup times (2-6 seconds), making them ideal for on-demand SQL querying and BI workloads where responsiveness is critical, while Pro and Classic have longer startup times (~4 minutes). |

| Databricks Clusters are ideal for complex data engineering tasks, machine learning model training, and large-scale data processing, with the ability to handle both batch and streaming data. | SQL Warehouses are tailored for business intelligence, reporting, and real-time analytics, ensuring that SQL queries run efficiently with optimized resource management for cost-effective operations. |

Want to take Chaos Genius for a spin?

It takes less than 5 minutes.

Conclusion

Databricks SQL Warehouses offer a versatile and scalable solution for running SQL queries on large datasets. No matter if you choose Serverless, Pro, or Classic, knowing what each type can and can't do helps you make smart choices that fit your needs. When you pick the right SQL Warehouse type, you can streamline your data workflows, reduce costs, and get more done.

In this article, we have covered:

- What is Databricks SQL?

- What are the benefits of Databricks SQL?

- What is a SQL warehouse in Databricks?

- SQL warehouse types in Databricks

- Performance showdown—Serverless vs. Pro vs. Classic Databricks SQL warehouses

- What is the default SQL warehouse in Databricks?

- Step-by-step guide to creating a Databricks SQL warehouse

- Difference between a cluster and a SQL warehouse in Databricks

… and more!

FAQs

What is a SQL warehouse in Databricks?

SQL warehouse in Databricks is a compute resource optimized for running SQL queries on data stored in your Databricks lakehouse. It provides compute power needed to process large datasets quickly and efficiently.

What is the start time for Databricks SQL warehouse?

The start time varies depending on the type of warehouse. Serverless Databricks SQL warehouses can start in as little as ~2-6 seconds. Pro and Classic warehouses typically take around ~4 minutes to start.

What is Databricks serverless SQL warehouse?

Databricks serverless SQL warehouse is a fully managed compute resource that automatically scales based on workload demands. It offers instant startup, and rapid autoscaling, and is optimized for cost efficiency.

How to create a SQL warehouse in Databricks?

To create a SQL warehouse, log into your Databricks workspace, navigate to the SQL Warehouses section, click "Create SQL Warehouse", configure the settings (including size, auto-stop time, scaling, and type), and click "Create".

What are the benefits of Databricks SQL serverless?

Some key benefits of Databricks SQL serverless include instant startup, dynamic scaling, cost optimization, minimal management overhead, and support for advanced performance features like Photon Engine, Predictive IO, and Intelligent Workload Management.

What is the difference between cluster and SQL warehouse in Databricks?

Databricks Clusters are general-purpose compute resources that support multiple languages and use cases, while Databricks SQL warehouses are optimized specifically for SQL analytics. SQL warehouses offer faster startup times (for serverless), more dynamic scaling, and are designed to integrate with BI tools.

Do serverless Databricks SQL warehouses differ from classic Databricks SQL warehouses?

Yes, significantly. Serverless warehouses offer faster startup times, more dynamic scaling, and advanced performance features like Predictive I/O and Intelligent Workload Management. They run in Databricks' account rather than the customer's cloud account. On the other hand, Classic Databricks SQL warehouses offer more basic functionality and run in the customer's cloud account.

What is the default SQL warehouse in Databricks?

The default type depends on your region and how you're creating the warehouse. In regions that support serverless and when using the UI, serverless is typically the default. When using the API or in regions without serverless support, the default may be Classic or Pro.

Can I connect my BI tools to Databricks SQL warehouses?

Yes, Databricks SQL warehouse supports integration with many popular BI tools, like Tableau, Power BI, and Looker.

How does pricing work for Databricks SQL warehouses?

Pricing varies by type. For serverless warehouses, you pay only for the compute time used to run queries. For Pro and Classic warehouses, you typically pay for the time the warehouse is running, even when idle.

Can I use Databricks SQL warehouses for real-time analytics?

Yes, Databricks SQL supports streaming data ingestion and processing, enabling real-time analytics capabilities.

How does Unity Catalog integrate with Databricks SQL warehouses?

Unity Catalog provides centralized data governance for Databricks SQL warehouses, allowing you to manage data access and security across your entire lakehouse architecture.

Can I use custom libraries with Databricks SQL warehouses?

SQL warehouses are optimized for SQL workloads and don't support custom libraries in the same way that Databricks clusters do. But you can create and use custom functions within your SQL queries.

How do I monitor the performance of my Databricks SQL warehouse?

Databricks provides built-in monitoring tools that allow you to track query performance, resource utilization, and costs associated with your SQL warehouses. Alternatively, you can use Chaos Genius, which offers in-depth monitoring of your entire workload.