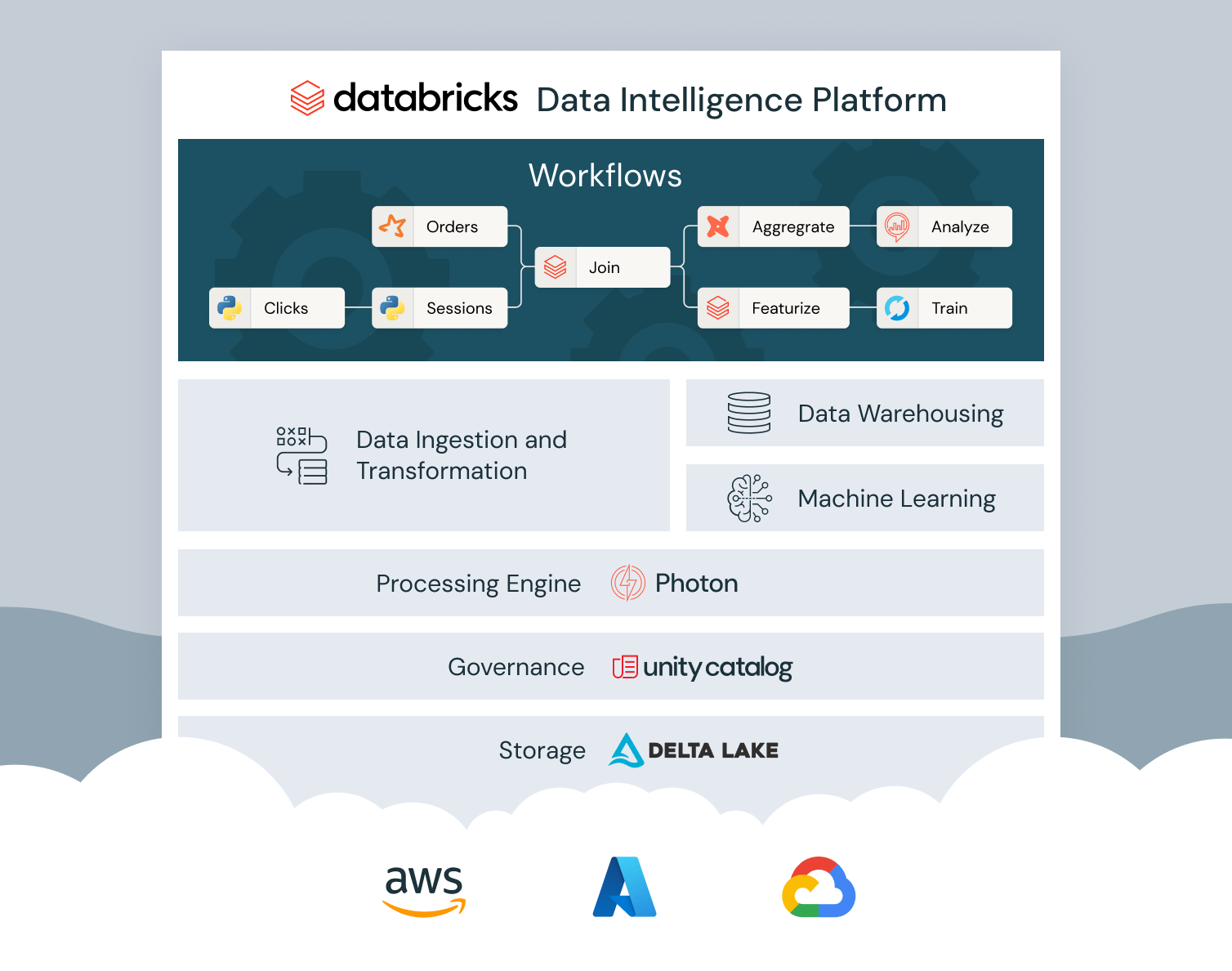

Databricks is a unified analytics platform that simplifies big data processing and machine learning. Built on Apache Spark, it gives data scientists, engineers, and business analysts a shared environment to collaborate in. As more teams adopt Databricks, understanding its pricing model becomes crucial for budgeting and cost management. Databricks uses a pay-as-you-go pricing model: you pay only for the resources you use, and Databricks' own charges are measured in Databricks Units (DBUs). A pricing calculator helps you navigate these costs by estimating expenses from your expected usage, which makes it easier to plan spending and allocate resources.

In this article, we will explore the top 5 Databricks pricing calculator tools to help you select the right one for your needs. Most of these calculators are free to use and can help you estimate Databricks costs across different configurations and workloads.

Databricks Pricing Model 101

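Databricks charges on a pay-as-you-go basis. You pay for the compute you consume, measured in Databricks Units (DBUs). For classic (non-serverless) compute, the underlying cloud resources, such as virtual machines, storage, and networking, are billed separately by your cloud provider.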

Each job or workload on Databricks consumes DBUs at a rate determined by cluster size, instance type, and workload type. Since Databricks runs on multiple cloud providers (Azure, AWS, and Google Cloud), the exact DBU rates and cost structures vary across these environments.

What is a DBU in Databricks?

A Databricks Unit (DBU) is a normalized unit of processing power on the Databricks platform, used to measure and bill usage. Each instance type has a DBU rate expressed in DBUs per hour, but usage is billed with per-second granularity. The number of DBUs a cluster consumes depends on its instance type, cluster size, and Databricks Runtime configuration.
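To see how a per-hour DBU rate turns into a dollar figure, here is a minimal sketch that computes DBUs consumed and cost for a single run. It assumes usage is prorated to the second; the 4 DBUs/hour rate and the $0.15/DBU price in the example are hypothetical placeholders, not published Databricks rates.

```python
SECONDS_PER_HOUR = 3600


def dbus_consumed(dbu_per_hour: float, runtime_seconds: float) -> float:
    """DBUs used by a run, assuming usage is prorated to the second."""
    return dbu_per_hour * runtime_seconds / SECONDS_PER_HOUR


def run_cost(dbu_per_hour: float, runtime_seconds: float, price_per_dbu: float) -> float:
    """Dollar cost of a run: DBUs consumed times the price per DBU."""
    return dbus_consumed(dbu_per_hour, runtime_seconds) * price_per_dbu


# Hypothetical example: a cluster emitting 4 DBUs/hour runs for 45 minutes.
dbus = dbus_consumed(4.0, 45 * 60)   # 3 DBUs
cost = run_cost(4.0, 45 * 60, 0.15)  # 3 DBUs * $0.15, about $0.45
print(f"{dbus:.2f} DBUs, ${cost:.2f}")
```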

Databricks DBU consumption is influenced by several key factors:

- Cluster Configuration: The size and type of cluster deployed—whether standard or high-concurrency—affects Databricks DBU consumption.

- Databricks Runtime: Runtime options affect the DBU rate. For example, Photon-enabled classic compute is billed at a higher DBU rate than standard compute, although faster execution can offset some of that cost.

- Workload Type: SQL analytics, batch processing, and streaming workloads have different DBU consumption patterns, and they typically run on different compute types (such as Jobs Compute, All-Purpose Compute, or SQL warehouses), each priced at its own rate per DBU.
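Because each compute type has its own price per DBU, the same amount of work can cost very different amounts depending on where it runs. The sketch below holds the DBU count constant for simplicity and prices it under a few compute types. The prices in `HYPOTHETICAL_PRICE_PER_DBU` are made-up placeholders; look up the current rates for your cloud, region, and tier.

```python
# Placeholder prices in USD per DBU. These are NOT Databricks list prices;
# real rates depend on cloud, region, pricing tier, and compute type.
HYPOTHETICAL_PRICE_PER_DBU = {
    "jobs_compute": 0.15,
    "all_purpose_compute": 0.55,
    "sql_warehouse": 0.70,
}


def cost_by_compute_type(dbus: float, prices: dict[str, float]) -> dict[str, float]:
    """Price the same number of DBUs under each compute type."""
    return {compute_type: dbus * price for compute_type, price in prices.items()}


# The same 10 DBUs of work, priced under each hypothetical rate.
for compute_type, cost in cost_by_compute_type(10, HYPOTHETICAL_PRICE_PER_DBU).items():
    print(f"{compute_type:>20}: ${cost:.2f}")
```

In practice the same job may also consume a different number of DBUs on different compute types, so treat this as a comparison of rates only.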

Key Factors Affecting Databricks DBU Costs

The following factors influence the costs associated with using Databricks:

1) Compute Resources (Cluster Size, Type, and Runtime)

The primary driver of Databricks costs is compute usage, which is influenced by:

- Cluster size: The number of worker nodes and driver node specifications directly influence Databricks DBU usage.

- Instance type: CPU, memory, and I/O capabilities of the chosen cloud instances contribute to Databricks DBU costs.

- Runtime: Databricks Runtime features, such as Photon, can raise a cluster's DBU rate.
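These compute factors multiply together. Here is a minimal sketch for a fixed-size classic cluster, assuming the cloud provider bills VMs separately from DBUs and the driver uses the same instance type as the workers. `ClusterSpec` and `cluster_cost` are hypothetical helpers, and every rate in the example is a placeholder.

```python
from dataclasses import dataclass


@dataclass
class ClusterSpec:
    workers: int                   # number of worker nodes
    dbu_per_node_hour: float       # DBU rate of the chosen instance type
    vm_price_per_node_hour: float  # cloud provider's VM price per node-hour


def cluster_cost(spec: ClusterSpec, hours: float, price_per_dbu: float) -> dict[str, float]:
    """Estimate DBU and VM cost for a fixed-size cluster.

    Assumes the driver uses the same instance type as the workers.
    """
    nodes = spec.workers + 1  # workers plus one driver
    dbu_cost = nodes * spec.dbu_per_node_hour * hours * price_per_dbu
    vm_cost = nodes * spec.vm_price_per_node_hour * hours
    return {"dbu": dbu_cost, "vm": vm_cost, "total": dbu_cost + vm_cost}


# Hypothetical inputs: 4 workers, 0.75 DBU/node-hour, $0.40/node-hour VM, $0.15/DBU.
spec = ClusterSpec(workers=4, dbu_per_node_hour=0.75, vm_price_per_node_hour=0.40)
estimate = cluster_cost(spec, hours=10, price_per_dbu=0.15)
print(estimate)
```

On serverless compute the infrastructure is included in the DBU price, so you would drop the VM term.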

2) Storage

Databricks reads and writes data in your cloud object storage (e.g., AWS S3, Azure Blob Storage, Google Cloud Storage), and your cloud provider bills that storage separately from DBUs. Costs grow with the volume of data stored and the number of storage requests, and higher-performance options, such as SSD-backed volumes, cost more.
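Object storage is usually billed as a capacity charge plus request charges. The sketch below assumes that simple two-part model; the per-GB and per-request prices in the example are placeholders, so check your cloud provider's current storage pricing for real numbers.

```python
def monthly_storage_cost(
    stored_gb: float,
    price_per_gb_month: float,
    requests: int = 0,
    price_per_1k_requests: float = 0.0,
) -> float:
    """Capacity charge plus request charges for one month of object storage."""
    capacity = stored_gb * price_per_gb_month
    request_charges = requests / 1000 * price_per_1k_requests
    return capacity + request_charges


# Hypothetical: 5 TB stored at $0.023/GB-month and 2 million requests at $0.005 per 1,000.
print(f"${monthly_storage_cost(5000, 0.023, 2_000_000, 0.005):.2f}")
```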

3) Data Volume

Larger datasets require more computational resources, resulting in higher Databricks DBU consumption.

4) Network Egress

Transferring data between different regions or clouds incurs additional network egress charges.
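Egress is often priced in tiers, with the per-GB price changing as monthly volume grows. Here is a minimal sketch of tiered billing; the tier sizes and prices in `HYPOTHETICAL_EGRESS_TIERS` are invented for illustration and do not reflect any provider's actual rates.

```python
from typing import Optional

# Hypothetical tiers: (tier size in GB, or None for "everything beyond", USD per GB).
HYPOTHETICAL_EGRESS_TIERS: list[tuple[Optional[float], float]] = [
    (100, 0.0),      # first 100 GB free
    (10_240, 0.09),  # next 10 TB
    (None, 0.085),   # everything beyond
]


def tiered_egress_cost(gb: float, tiers: list[tuple[Optional[float], float]]) -> float:
    """Walk the tiers in order, billing each slice of traffic at its tier price."""
    cost = 0.0
    remaining = gb
    for size, price in tiers:
        if remaining <= 0:
            break
        billed = remaining if size is None else min(remaining, size)
        cost += billed * price
        remaining -= billed
    return cost


print(f"${tiered_egress_cost(1000, HYPOTHETICAL_EGRESS_TIERS):.2f}")
```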

5) Databricks Edition

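Databricks offers tiered editions (Standard, Premium, and Enterprise) with different DBU rates. The Enterprise edition offers the most advanced features and carries the highest DBU rate. Tier availability varies by cloud; Azure Databricks, for example, offers only the Standard and Premium tiers.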

6) Cloud Provider Variability

DBU rates differ between AWS, Azure, and Google Cloud due to their varying infrastructure costs and pricing models.


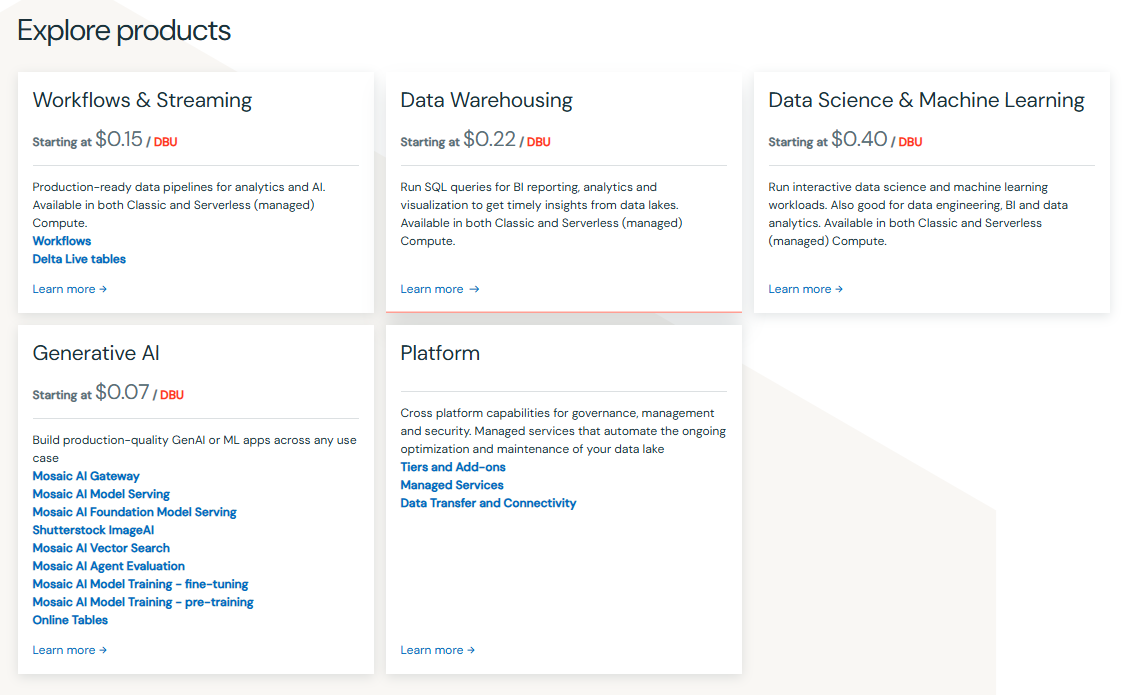

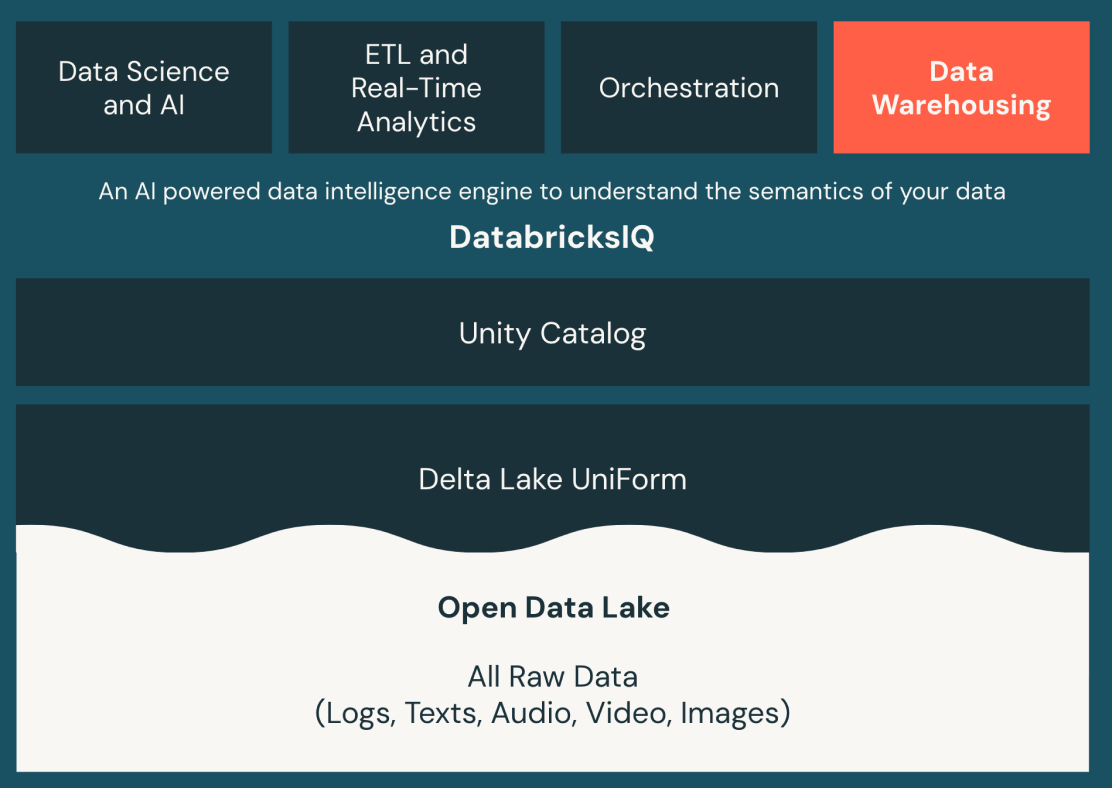

Databricks Products and Pricing

Databricks pricing varies by product, workload, and service. Here's a brief breakdown of the key product categories:

1) Workflows & Streaming

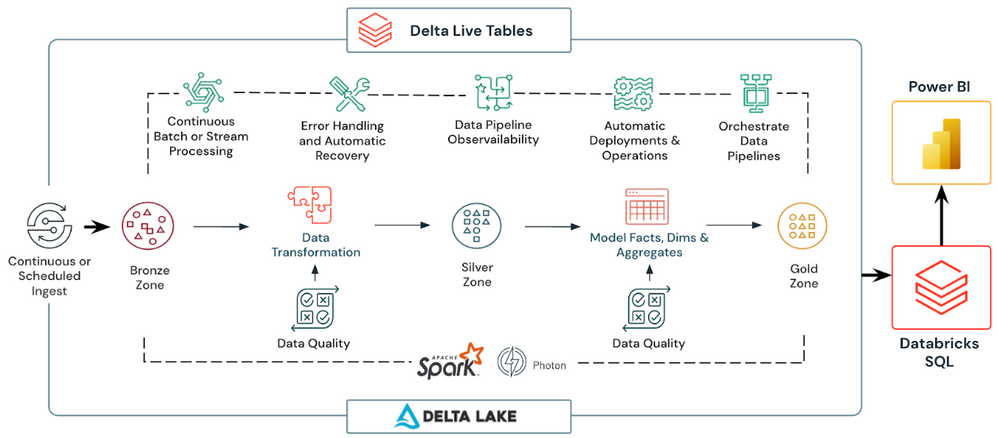

Databricks Workflows: Workflows is a managed orchestration service for defining, managing and monitoring multi-task workflows for ETL, analytics and ML pipelines. Supports a wide range of task types and provides deep observability.

Delta Live Tables: DLT is a declarative ETL framework for building reliable, maintainable, and testable data processing pipelines. Manages task orchestration, cluster management, monitoring, data quality, and error handling. Implements materialized views as Delta tables.

2) Data Warehousing

Databricks SQL: Brings data warehousing capabilities to the data lakehouse. Supports standard ANSI SQL, a SQL editor, and dashboarding tools. Integrates with Unity Catalog for data governance.

3) Data Science & Machine Learning

Compute for Interactive Workloads: Provides scalable compute resources for interactive data exploration, model development, and testing in notebooks.

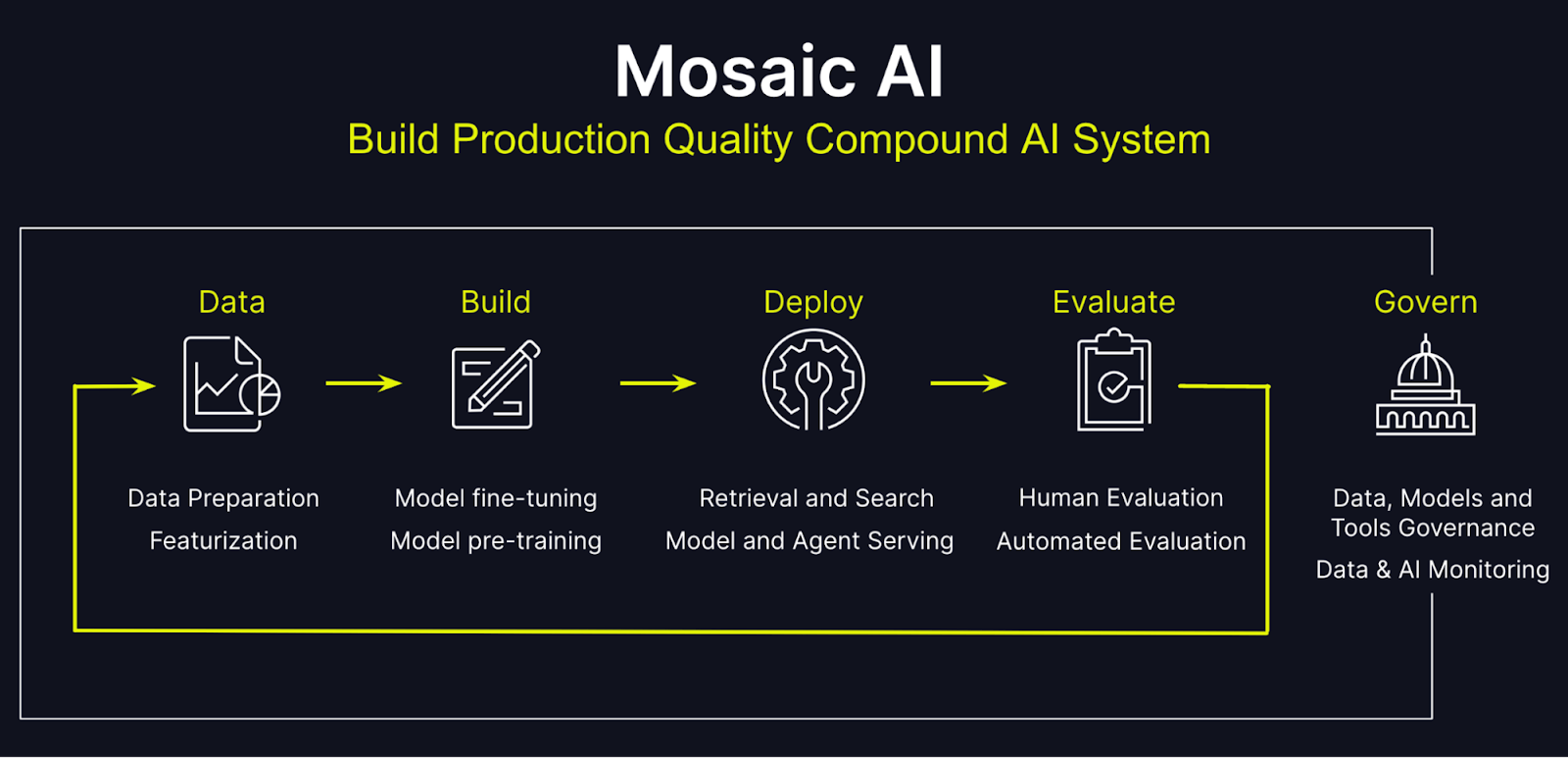

4) Generative AI

Mosaic AI Gateway: Centralized service for governing and monitoring access to generative AI models. Provides features like permission and rate limiting, payload logging, usage tracking, AI guardrails, and traffic routing.

Mosaic AI Model Serving: Unified interface to deploy, govern, and query AI models as REST APIs. Automatically scales to meet demand changes. Supports custom models, foundation models, and external models.

Mosaic AI Vector Search: A tool that enhances search capabilities by utilizing vector embeddings, improving the accuracy of search results in generative AI applications.

Mosaic AI Agent Evaluation: A framework for assessing the performance of AI agents in various tasks, ensuring they meet desired benchmarks before deployment.

Mosaic AI Foundation Model Serving: Optimized serving of state-of-the-art open models with pay-per-token pricing and provisioned throughput options.

Online Tables (Preview): Read-only, low-latency copies of Delta tables for serving data to online applications, such as feature lookups and retrieval in generative AI apps.

5) Platform Management

- Tiers: Include Databricks Workspace (the core environment), Performance (optimized compute resources), Governance and Manageability (tools for managing data access), and Enterprise Security (enhanced security features).

- Add-Ons: Such as Enhanced Security and Compliance options that provide additional layers of protection for sensitive data.

- Lakehouse Monitoring: Tools for monitoring the health and performance of the Lakehouse environment.

- Predictive Optimization: Features that optimize resource allocation based on usage patterns.

- Fine-Grained Access Control (FGAC): Provides detailed permissions management for single-user clusters to ensure secure data access.

- Data Transfer and Connectivity: Solutions that facilitate seamless integration with various data sources, enabling efficient data ingestion and processing across cloud environments.

How to Choose the Best Databricks Cost Calculator Tools: Key Factors

Using a Databricks pricing calculator tool can be a big help when estimating and managing your Databricks costs. But with so many options, you need to pick the right one for you. Here are some factors to think about when choosing a Databricks cost calculator:

1) Accuracy and Reliability

Opt for a Databricks pricing calculator that provides up-to-date pricing data from Databricks and cloud providers (Azure, AWS, and Google Cloud). It should account for factors like compute type, instance size, and region to deliver precise estimates.

2) Customization and Flexibility

A good Databricks pricing calculator tool should allow custom inputs based on your specific workloads—letting you adjust data volume, processing complexity, and throughput to see cost impacts.

3) Integration with Your Environment

If you’re using cost management or cloud optimization tools, select a calculator that integrates with your existing ecosystem, providing a holistic view of cloud spending.

4) Ease of Use and Accessibility

The Databricks pricing calculator tool should have a clean, user-friendly interface with clear explanations for inputs and outputs. Ideally, it should be accessible through web, API, or CLI channels.

5) Workload Pattern Customization

Advanced Databricks pricing calculator tools should allow modeling of complex workload patterns, including:

- Batch vs. streaming jobs

- Interactive vs. automated workloads

- Varying cluster sizes and instance types throughout the day
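To make "varying cluster sizes throughout the day" concrete, here is a minimal sketch that splits a typical day into segments, each with its own node count and price per DBU (for example, interactive work billed at an all-purpose rate and automated jobs at a jobs rate). `Segment` and `daily_dbu_cost` are hypothetical names, and all numbers are placeholders.

```python
from typing import NamedTuple


class Segment(NamedTuple):
    hours_per_day: float
    nodes: int               # driver + workers
    dbu_per_node_hour: float
    price_per_dbu: float     # differs by compute type (e.g., interactive vs automated)


def daily_dbu_cost(schedule: list[Segment]) -> float:
    """Sum DBU cost over every segment of a typical day."""
    return sum(s.hours_per_day * s.nodes * s.dbu_per_node_hour * s.price_per_dbu for s in schedule)


# Hypothetical day: interactive notebooks during work hours, a larger automated batch job at night.
day = [
    Segment(hours_per_day=8, nodes=4, dbu_per_node_hour=0.75, price_per_dbu=0.55),
    Segment(hours_per_day=2, nodes=10, dbu_per_node_hour=0.75, price_per_dbu=0.15),
]
print(f"daily: ${daily_dbu_cost(day):.2f}, monthly (30 days): ${30 * daily_dbu_cost(day):.2f}")
```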

6) Auto-scaling and Job Scheduling Consideration

Make sure the Databricks pricing calculator tool accounts for Databricks’ auto-scaling features and job scheduling capabilities, giving you dynamic workload cost estimates.
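One simple way to account for autoscaling is to model demand over the day and clamp the worker count to the cluster's minimum and maximum. The sketch below makes a strong simplifying assumption, flagged in the code: the cluster instantly matches demand, whereas real autoscaling lags behind it. All names and rates are hypothetical.

```python
def autoscaled_cost(
    demand_profile: list[tuple[float, int]],
    min_workers: int,
    max_workers: int,
    dbu_per_node_hour: float,
    price_per_dbu: float,
) -> float:
    """Approximate DBU cost of an autoscaling cluster.

    Simplification: the cluster instantly matches demand, clamped to
    [min_workers, max_workers]; real autoscaling lags behind demand.
    """
    total = 0.0
    for hours, demanded_workers in demand_profile:
        workers = min(max(demanded_workers, min_workers), max_workers)
        nodes = workers + 1  # plus the driver
        total += hours * nodes * dbu_per_node_hour * price_per_dbu
    return total


# Hypothetical 10-hour day: quiet, busy, then a short spike above the max.
profile = [(6, 1), (3, 6), (1, 12)]
autoscaled = autoscaled_cost(profile, min_workers=2, max_workers=8,
                             dbu_per_node_hour=1.0, price_per_dbu=0.20)
fixed_at_max = autoscaled_cost([(10, 8)], min_workers=8, max_workers=8,
                               dbu_per_node_hour=1.0, price_per_dbu=0.20)
print(f"autoscaled: ${autoscaled:.2f} vs fixed at max: ${fixed_at_max:.2f}")
```

Comparing the autoscaled figure with a cluster pinned at its maximum size gives a rough upper bound on what autoscaling could save for that demand pattern.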

7) Data Volume and Growth Projections

A robust Databricks pricing calculator should let you enter current data volumes and projected growth rates to forecast future storage and processing costs.
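A growth projection can be as simple as compounding the stored volume month over month. This sketch assumes a constant monthly growth rate and a flat per-GB price; both are simplifications, and the figures in the example are placeholders.

```python
def project_monthly_storage_costs(
    current_gb: float,
    monthly_growth_rate: float,
    months: int,
    price_per_gb_month: float,
) -> list[float]:
    """Storage cost for each of the next `months` months under compound growth."""
    costs = []
    gb = current_gb
    for _ in range(months):
        costs.append(gb * price_per_gb_month)
        gb *= 1 + monthly_growth_rate  # data grows by a fixed percentage each month
    return costs


# Hypothetical: 1 TB today, growing 10% per month, at $0.02/GB-month.
for month, cost in enumerate(project_monthly_storage_costs(1000, 0.10, 3, 0.02), start=1):
    print(f"month {month}: ${cost:.2f}")
```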

8) Detailed Cost Breakdown and Reporting

A Databricks pricing calculator tool should provide granular cost breakdowns, including:

- Compute costs by cluster type and workload

- Storage costs by data type and volume

- Additional costs for premium features and services
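If your tool only exports raw line items, you can roll them up yourself. The sketch below groups hypothetical monthly line items by category and reports each category's share of the total; the categories and amounts are invented for illustration.

```python
from collections import defaultdict

# Hypothetical monthly line items: (category, detail, USD).
line_items = [
    ("compute", "jobs compute", 420.0),
    ("compute", "all-purpose compute", 610.0),
    ("storage", "Delta tables", 115.0),
    ("premium", "model serving", 90.0),
]


def breakdown(items: list[tuple[str, str, float]]) -> dict[str, tuple[float, float]]:
    """Total cost per category and its share of the overall bill."""
    totals: dict[str, float] = defaultdict(float)
    for category, _detail, amount in items:
        totals[category] += amount
    grand_total = sum(totals.values())
    return {cat: (amt, amt / grand_total) for cat, amt in totals.items()}


for category, (amount, share) in breakdown(line_items).items():
    print(f"{category:<8} ${amount:>8.2f}  {share:6.1%}")
```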

9) Regular Updates to Reflect Pricing Changes

Databricks cost calculator tools must keep pace with changes in Databricks pricing and cloud service updates. Choose a tool with regular support and maintenance.

Top 5 Tools in the Market for Databricks Pricing Calculator

Databricks is a powerful data analytics platform utilized by companies for its scalable computing capabilities across multiple cloud providers. Estimating the costs of running Databricks workloads, however, can be complex—especially with varying cloud services, configurations, and usage models. Fortunately, there are tools available to simplify the process. Below is a list of five essential pricing calculators, including Databricks’ official tools and third-party options for comprehensive cost management.

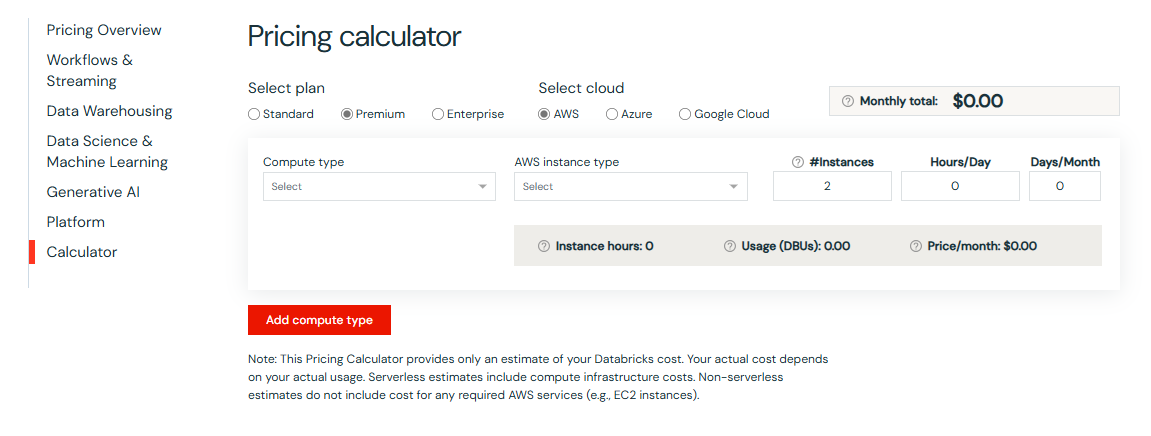

1) Databricks Official Pricing Calculator

Databricks provides an official pricing calculator to help users estimate the cost of running Databricks workloads on Azure, AWS, and Google Cloud. The Databricks pricing calculator allows users to input various parameters, such as the Databricks plan (Standard, Premium, or Enterprise), compute type, instance size, and cloud region, to generate an accurate estimate of their Databricks expenses.

URL Links:

AWS Pricing: https://www.databricks.com/product/aws-pricing

Azure Pricing: https://www.databricks.com/product/azure-pricing

Google Cloud Pricing: https://www.databricks.com/product/gcp-pricing

How to Use:

To use the Databricks pricing calculator, follow these steps:

- Navigate to the Databricks pricing calculator.

- Choose the relevant cloud platform (AWS, Azure, or Google Cloud).

- Select the appropriate compute type and instance size.

- Specify the cloud region.

- Adjust other parameters to suit your workload.

The Databricks pricing calculator will provide an estimate of the Databricks Units (DBUs) consumed, their cost, and the total daily/monthly expenses.
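The calculator's daily and monthly figures follow from simple arithmetic, which is handy for sanity-checking its output. The sketch below is not the calculator's actual formula; it is a hypothetical helper that assumes a fixed number of running hours per day and a 30-day month (the calculator's own assumptions may differ).

```python
def calculator_style_estimate(
    dbu_per_hour: float,
    price_per_dbu: float,
    hours_per_day: float,
    days_per_month: int = 30,  # assumption; adjust to match your billing period
) -> dict[str, float]:
    """Daily DBUs, daily cost, and monthly cost from an hourly DBU rate."""
    daily_dbus = dbu_per_hour * hours_per_day
    daily_cost = daily_dbus * price_per_dbu
    return {
        "daily_dbus": daily_dbus,
        "daily_cost": daily_cost,
        "monthly_cost": daily_cost * days_per_month,
    }


# Hypothetical: 6 DBUs/hour at $0.15/DBU, running 8 hours a day.
print(calculator_style_estimate(6.0, 0.15, 8))
```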

Key Features:

- Supports all major cloud platforms

- Allows customization of various parameters to match your specific workload

- Provides a detailed breakdown of costs associated with different components, including compute resources and storage.

Pros:

- Official tool provided by Databricks

- Accurate estimates based on your specific workload

- Easy to use and understand

- Supports multiple cloud environments.

Cons:

- Covers Databricks usage only; does not include costs for other cloud services

- Requires some knowledge of Databricks and cloud computing to use effectively

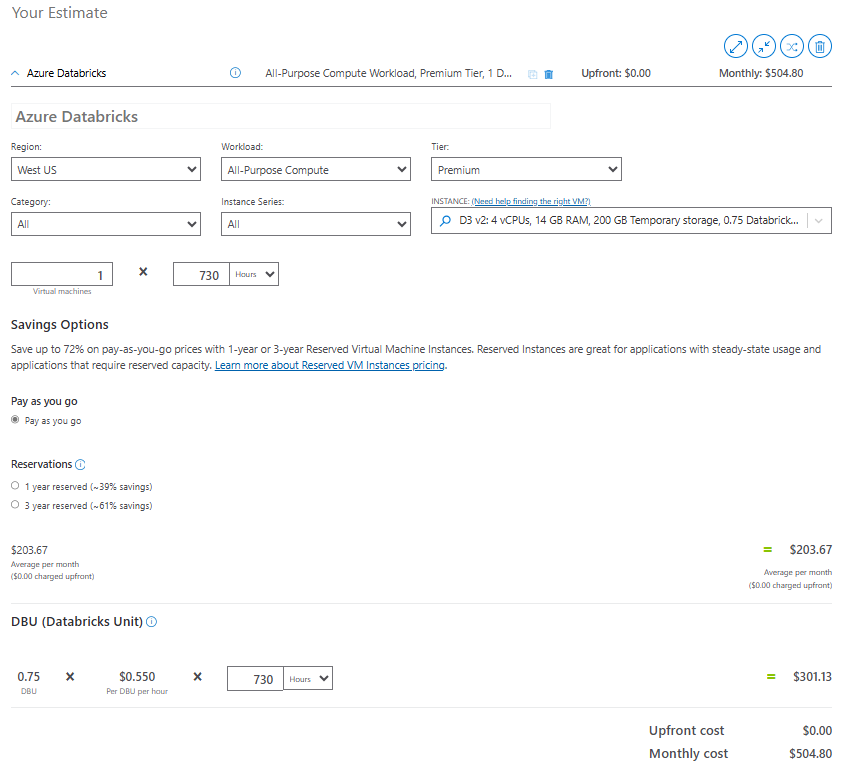

2) Azure Databricks Pricing Calculator (for Azure Databricks)

Azure Databricks pricing calculator is a tool provided by Microsoft to estimate the costs associated with running Databricks on the Azure cloud platform. It allows users to input various parameters such as compute type, instance size, and Azure region to get an accurate estimate of their Azure Databricks expenses.

URL:

How to Use:

Users can estimate their costs by following these steps:

- Visit the Azure Pricing Calculator page

- Search for "Azure Databricks" among the available services.

- Enter details such as region, workload, instance type, tier, and expected usage

- Calculate the estimated monthly or hourly costs based on inputs

Key Features:

- Integrated with the Azure Pricing Calculator.

- Users can choose different Azure regions to see how pricing varies geographically.

- Allows customization of various parameters to match your specific workload.

- Provides very detailed cost estimates for Azure Databricks usage.

Pros:

- Seamless integration with the Azure ecosystem.

- Accurate estimates based on your specific workload.

- Easy to use and understand.

- Perfect for users already within the Azure cloud ecosystem.

Cons:

- Limited to Azure infrastructure—cannot estimate non-Azure Databricks costs.

- Can be overwhelming for users unfamiliar with Azure’s pricing structure.

- Requires some knowledge of Databricks and Azure computing to use effectively

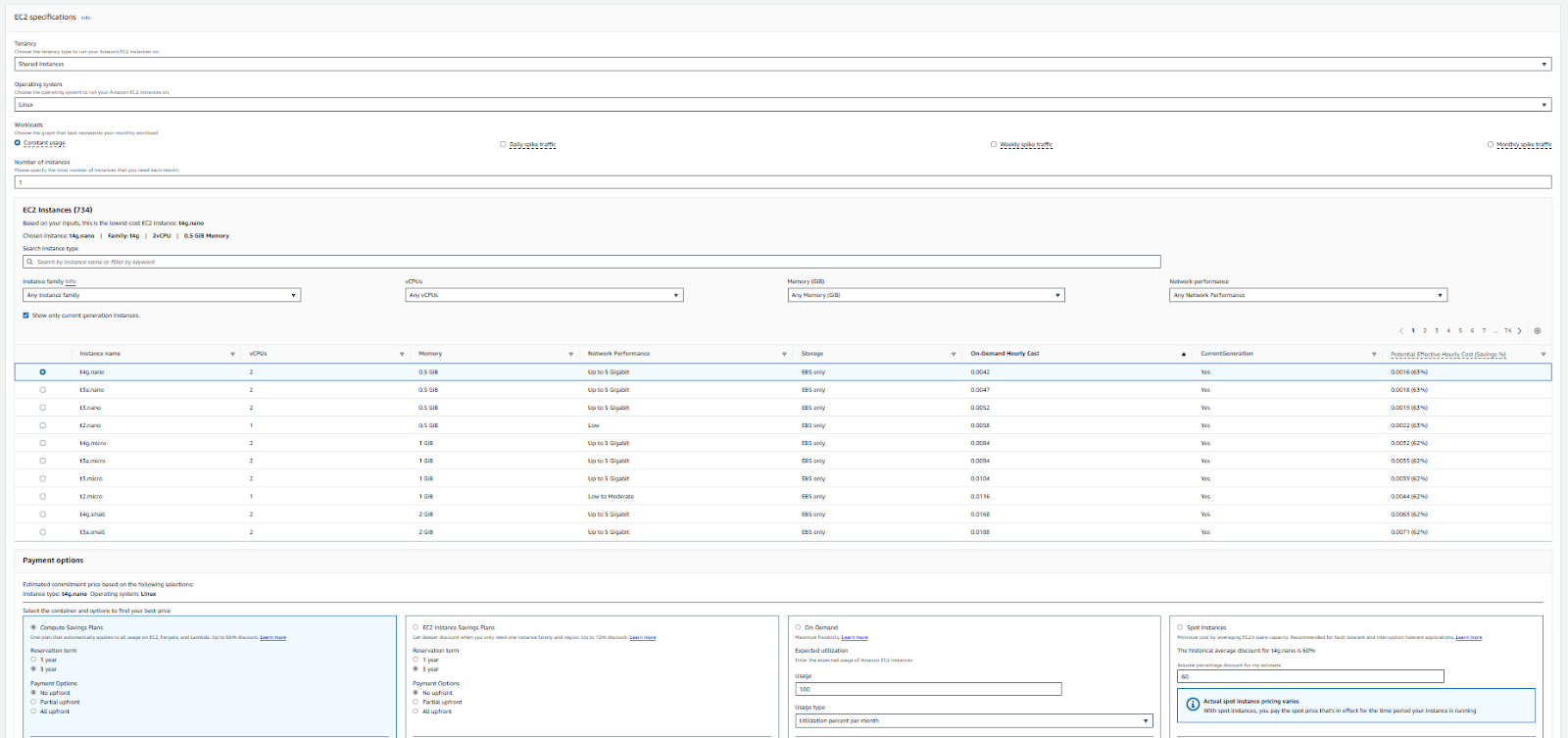

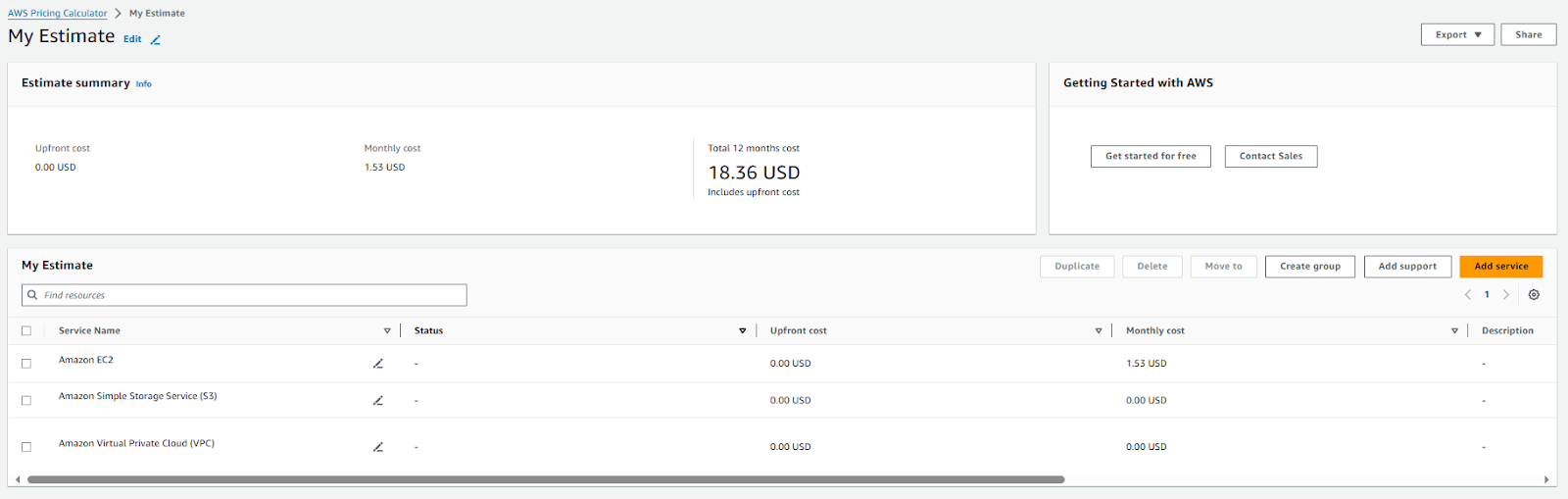

3) AWS Pricing Calculator for Databricks (for Databricks on AWS)

Although no dedicated AWS-specific calculator exists for Databricks, users can leverage both the Databricks Pricing Calculator and the AWS Pricing Calculator to get a complete estimate of Databricks workloads on AWS. You can estimate costs for DBUs using the Databricks calculator while using AWS's calculator for associated services like:

- EC2 Instances: The virtual machines that run your Databricks workloads. Costs vary based on instance type, size, and usage hours.

- S3 Storage: Costs associated with storing data in Amazon S3, which is commonly used for data lakes and storage in conjunction with Databricks.

- Networking and Security: Charges related to data transfer, VPC configurations, and other security measures.

Databricks on AWS integrates Databricks' analytics capabilities with AWS infrastructure, allowing users to deploy clusters on Amazon EC2 within a Virtual Private Cloud (VPC). The architecture supports secure data storage in S3, high availability across multiple Availability Zones, and flexible compute scaling. AWS services like IAM, KMS, and CloudWatch are used for security, encryption, and monitoring. With automated deployment through AWS CloudFormation templates, users can quickly set up a Databricks workspace, benefiting from seamless data processing, machine learning, and ETL capabilities.

How to Use:

To calculate the cost, follow these steps:

- Start with the Official Databricks Pricing Calculator, selecting AWS as the provider.

- Choose the appropriate Databricks edition (Standard, Premium, or Enterprise) based on your requirements

- Select the compute type and AWS instance type that best fits your workload

- Specify the AWS region where you plan to deploy Databricks

- Adjust other parameters as needed to reflect your actual data and pipeline requirements

- Review the estimated costs, including Databricks Units (DBUs) consumed, their associated cost, and the total daily and monthly expenses

- Use the AWS Pricing Calculator to estimate costs for additional AWS services used in conjunction with Databricks, such as EC2 instances, S3 storage, VPC components (for example, NAT gateways), and data transfer
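Once you have figures from both calculators, combining them is a matter of bookkeeping. This is a minimal sketch with a hypothetical `combined_estimate` helper; the service names and monthly amounts are placeholders standing in for whatever the two calculators report for your workload.

```python
def combined_estimate(databricks_dbu_cost: float, cloud_costs: dict[str, float]) -> dict[str, float]:
    """Add a Databricks DBU estimate to per-service cloud estimates."""
    cloud_total = sum(cloud_costs.values())
    return {
        "databricks_dbus": databricks_dbu_cost,
        "cloud_infrastructure": cloud_total,
        "total": databricks_dbu_cost + cloud_total,
    }


# Hypothetical monthly figures copied from the two calculators.
aws_costs = {"ec2": 350.0, "s3": 115.0, "nat_gateway": 40.0, "data_transfer": 24.0}
print(combined_estimate(216.0, aws_costs))
```

Keeping the cloud costs itemized by service makes it easy to see which part of the infrastructure dominates the bill.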

URL Links:

Key Features:

- Flexible configuration options to match workload needs.

- Estimates costs for both Databricks (DBUs) and AWS infrastructure.

- Detailed cost breakdown for AWS services like EC2 and S3.

Pros:

- Provides a comprehensive cost model by combining Databricks DBU costs with AWS infrastructure costs.

- Useful for estimating the cost of multiple AWS services in conjunction with Databricks, allowing you to build a more complete cost model.

Cons:

- Requires two calculators—one for DBUs and one for AWS services.

- Some manual effort is required to estimate the complete cost across the AWS services used by Databricks.

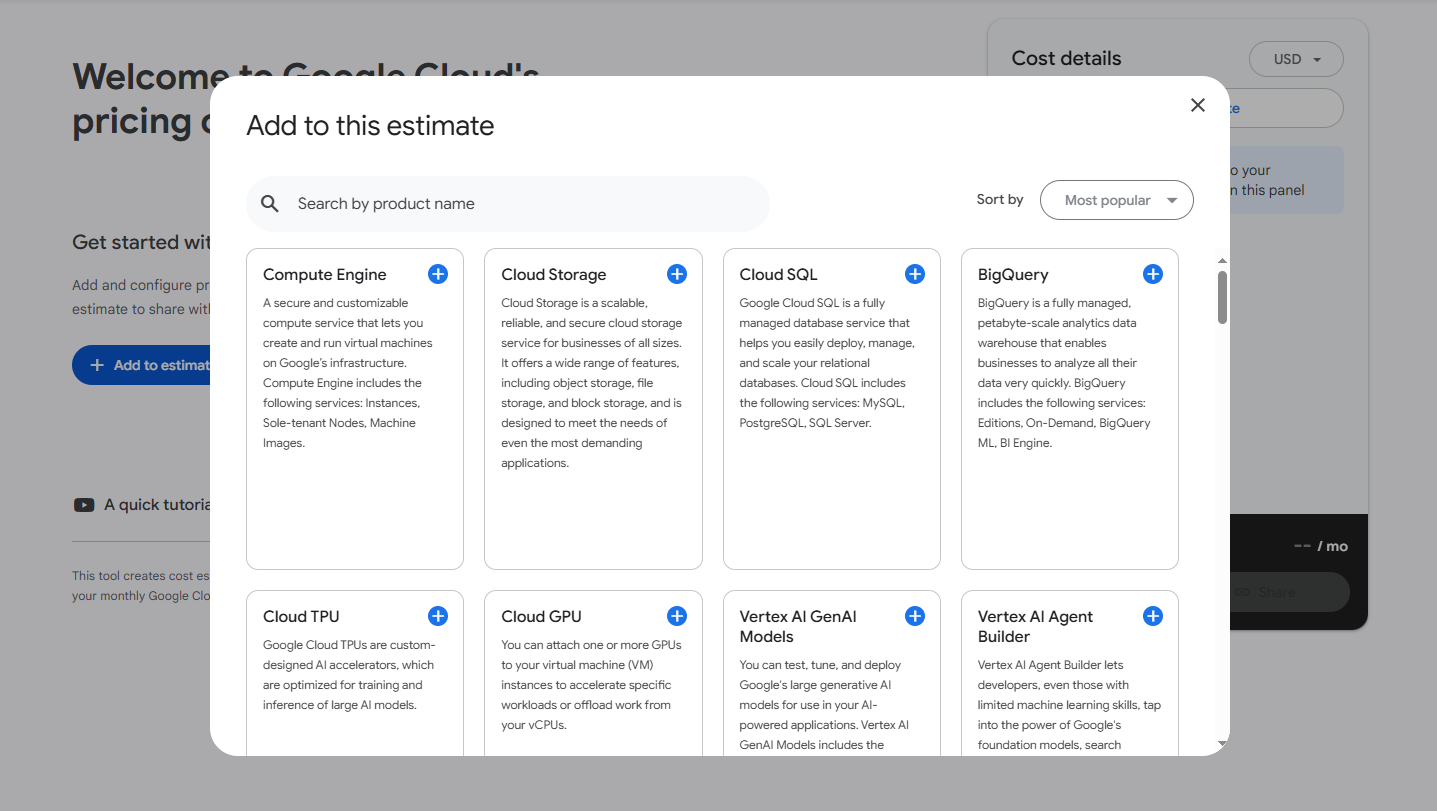

4) Google Cloud Pricing Calculator for Databricks (for Databricks on Google Cloud)

Similar to AWS, there isn't a dedicated Google Cloud Databricks pricing calculator. However, you can use the Official Databricks Pricing Calculator to estimate Databricks DBU costs for your Google Cloud Databricks workloads. For estimating the cost of underlying Google Cloud services (Compute Engine Instances, GKE, Cloud Storage, BigQuery, Networking and Security Costs), you can use the Google Cloud Pricing Calculator.

Databricks on Google Cloud Platform (GCP) is built on a unified architecture that leverages Google Kubernetes Engine (GKE) for containerized deployments, providing scalability and flexibility. The architecture consists of a Control Plane managed by Databricks, which handles user management and job scheduling, while the Compute Plane utilizes GKE to deploy and manage clusters that process data. Data is stored in Google Cloud Storage (GCS), with tight integration to BigQuery for analytics and the Google Cloud AI Platform for machine learning. This setup allows users to perform ETL processes, build machine learning models, and conduct collaborative data science in a secure environment, supported by Google Cloud Identity for access management and compliance.

How to Use:

To calculate the cost, follow these steps:

- Access the Databricks Pricing Calculator and select Google Cloud as the cloud provider

- Choose the appropriate Databricks edition (Standard, Premium, or Enterprise) based on your requirements

- Select the compute type and Google Cloud instance type that best fits your workload

- Specify the Google Cloud region where you plan to deploy Databricks.

- Adjust other parameters as needed to reflect your actual data and pipeline requirements.

- Review the estimated costs, including Databricks Units (DBUs) consumed, their associated cost, and the total daily and monthly expenses.

- Use the Google Cloud Pricing Calculator to estimate costs for additional Google Cloud services used in conjunction with Databricks, such as Compute Engine instances, Cloud Storage, and VPC-related networking charges

URL Links:

Key Features:

- Seamless integration with Google Cloud services and infrastructure

- Customizable inputs to accurately model different usage scenarios

- Detailed cost breakdown for various components, including compute and storage

Pros:

- Supports estimation of both Databricks-specific (DBU) costs and GCP infrastructure costs.

- Google Cloud Pricing Calculator allows for flexible configuration of multiple services.

Cons:

- No dedicated Databricks-branded pricing tool for GCP—requires combining two separate cost estimations.

- Manual effort is needed to combine the DBU costs with the Google Cloud infrastructure costs.

5) Third-Party Databricks Pricing Calculator

Besides the official Databricks cost calculators, there are some other tools out there that can help you estimate Databricks costs. Although these tools might not explicitly label themselves as "Databricks pricing calculators", they can still help you in determining your Databricks costs and offer strategies for reducing them. Using these tools can help you estimate costs and potentially save a significant amount. Here are a few examples:

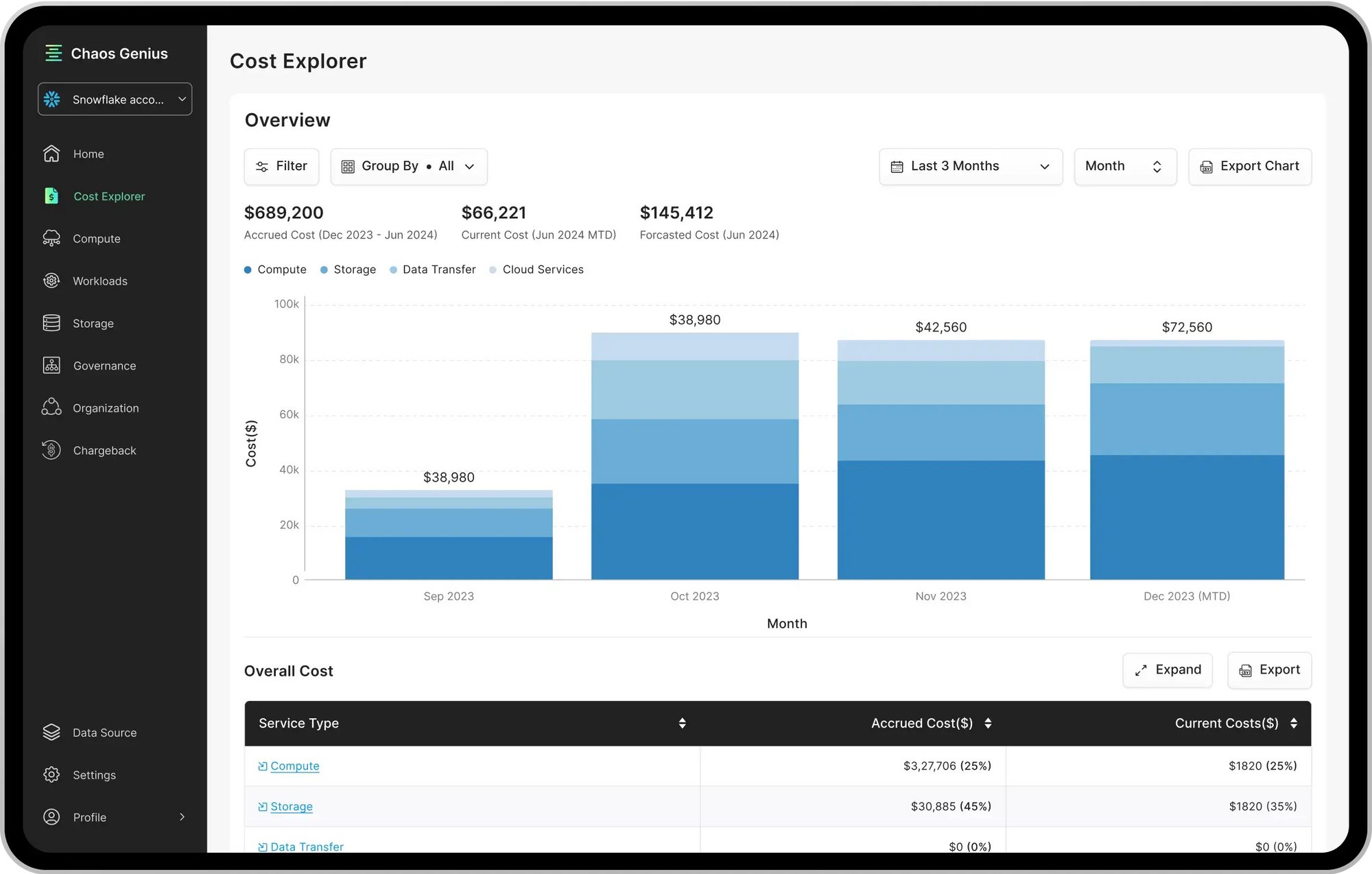

a) Chaos Genius

Chaos Genius is a DataOps observability platform designed for managing and optimizing cloud data costs, especially for Snowflake and Databricks environments.

Key features of Chaos Genius are:

- Cost Allocation and Visibility: Detailed dashboards to monitor and analyze cloud data costs and identify areas to cut costs.

- Instance Rightsizing: Recommends optimal sizing and configurations for data warehouses and clusters to use resources efficiently.

- Query and Database Optimization: Insights and recommendations to tune queries for performance and cost savings.

- Anomaly Detection: Identifies unusual patterns and deviations in data usage, so users/teams can quickly fix issues.

- Alerts and Reporting: Real-time alerts via email and Slack on any anomalous activity so you can respond to data issues fast.


b) KopiCloud

KopiCloud is a cloud cost management platform that offers Databricks cost optimization capabilities. It offers daily and monthly cost reports, cost allocation per owner, and custom reporting capabilities.

Pros of Using Third-party Databricks Cost Calculators:

- Granular cost visibility and optimization suggestions.

- Advanced reporting tools for tracking spending over time.

- User-friendly interfaces for both technical and non-technical users.

Cons of Using Third-party Databricks Cost Calculators:

- Often requires a subscription or comes with usage fees.

- Estimates can vary if the platform is not aligned with actual usage.

- Some platforms may require significant technical expertise.


Conclusion

That's a wrap. Choosing the right tool for estimating Databricks costs is key to controlling spend and planning resources. Whether you use the native pricing calculator or a third-party solution like Chaos Genius, understanding what drives DBU consumption (cluster setups, data volumes, and workload types) will help you stay on top of your Databricks expenses.

In this article, we have covered:

- Basics of the Databricks Pricing Model

- Available Databricks Products and Pricing

- How to Choose the Best Databricks Cost Calculator Tools

- Top 5 Tools in the Market for Databricks Cost Calculator

  - Databricks Official Pricing Calculator

  - Azure Databricks Pricing Calculator

  - AWS Pricing Calculator for Databricks

  - Google Cloud Pricing Calculator for Databricks

  - Third-Party Databricks Cost Calculator

… and so much more!

FAQs

How do you calculate the cost of Databricks?

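Databricks costs are primarily the DBUs your workloads consume multiplied by the price per DBU, plus, for classic compute, the cloud infrastructure (VMs, storage, networking) billed by your cloud provider. You can estimate these using the Official Databricks Pricing Calculator or the other tools covered above.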

What is a DBU in Databricks?

Databricks Unit (DBU) measures processing power in Databricks, factoring in the type of workload and cluster configuration.

How much does DBU cost Databricks?

Databricks DBU cost varies by cloud provider and workload type. Azure, AWS, and Google Cloud all have different DBU rates.

Does Databricks charge per query?

No, Databricks charges are based on the DBU consumption of the cluster running the queries, not per query.

Can I use Databricks for free?

Yes. Databricks offers a free trial, and the Community Edition provides limited access at no charge.

Is Databricks Community Edition free?

Yes, it provides limited features suitable for learning purposes without incurring charges.

What are the main differences in pricing between Databricks on different cloud providers?

DBU rates differ by cloud provider, and so do the underlying VM, storage, and networking costs, so the same workload can cost different amounts on AWS, Azure, and GCP.

How can I optimize my Databricks costs?

Regularly analyze usage patterns, leverage auto-scaling features, choose appropriate instance types, and utilize committed-use discounts where applicable.
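Whether a commitment pays off depends on how much of it you actually use. The sketch below compares pay-as-you-go pricing with a hypothetical prepaid commitment in which committed DBUs are billed at a discount whether or not they are used, and any overage is billed at list price. Real Databricks commit contracts have their own terms; the discount, prices, and volumes here are placeholders.

```python
def compare_commitment(
    monthly_dbus: float,
    list_price: float,
    discount_rate: float,
    committed_dbus: float,
) -> tuple[float, float]:
    """Return (pay-as-you-go cost, committed-plan cost) for one month.

    Hypothetical model: committed DBUs are paid at the discounted price even if
    unused; usage above the commitment is billed at list price.
    """
    pay_as_you_go = monthly_dbus * list_price
    committed_price = list_price * (1 - discount_rate)
    overage = max(0.0, monthly_dbus - committed_dbus)
    committed_plan = committed_dbus * committed_price + overage * list_price
    return pay_as_you_go, committed_plan


# Hypothetical: 10,000 DBUs used, $0.15 list price, 20% discount on 8,000 committed DBUs.
payg, committed = compare_commitment(10_000, 0.15, 0.20, 8_000)
print(f"pay-as-you-go ${payg:.2f} vs committed ${committed:.2f}")
```

If usage falls well below the commitment, the unused committed DBUs can make the commitment more expensive than pay-as-you-go, which is why analyzing usage patterns comes first.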