Databricks is an all-in-one, open analytics platform that simplifies data management, advanced analytics, and AI workflows. Built on Apache Spark, it provides a robust, high-performance environment for processing large datasets and running machine learning tasks at scale. A defining feature of Databricks is its collaborative workspace, where data engineers, analysts, and data scientists can run ETL processes, analyze data in real time, and develop machine learning models within a unified interface using shared notebooks and collaboration tools. Databricks’ architecture is designed to run across the major cloud providers (Microsoft Azure, Amazon Web Services (AWS), and Google Cloud Platform (GCP)), offering flexibility in cloud platform choice. Each platform offers distinct integration points and performance optimizations that align Databricks with its native services, ensuring compatibility with a user’s existing ecosystem and requirements.

In this article, we'll cover the unique capabilities, features, optimizations, and pricing of Databricks on AWS, Azure, and Google Cloud platforms.


Now that we’ve introduced Databricks and its role in big data processing, analytics, and AI/ML, let’s look behind the scenes at how it operates, starting with its architecture.

Databricks Architecture Overview

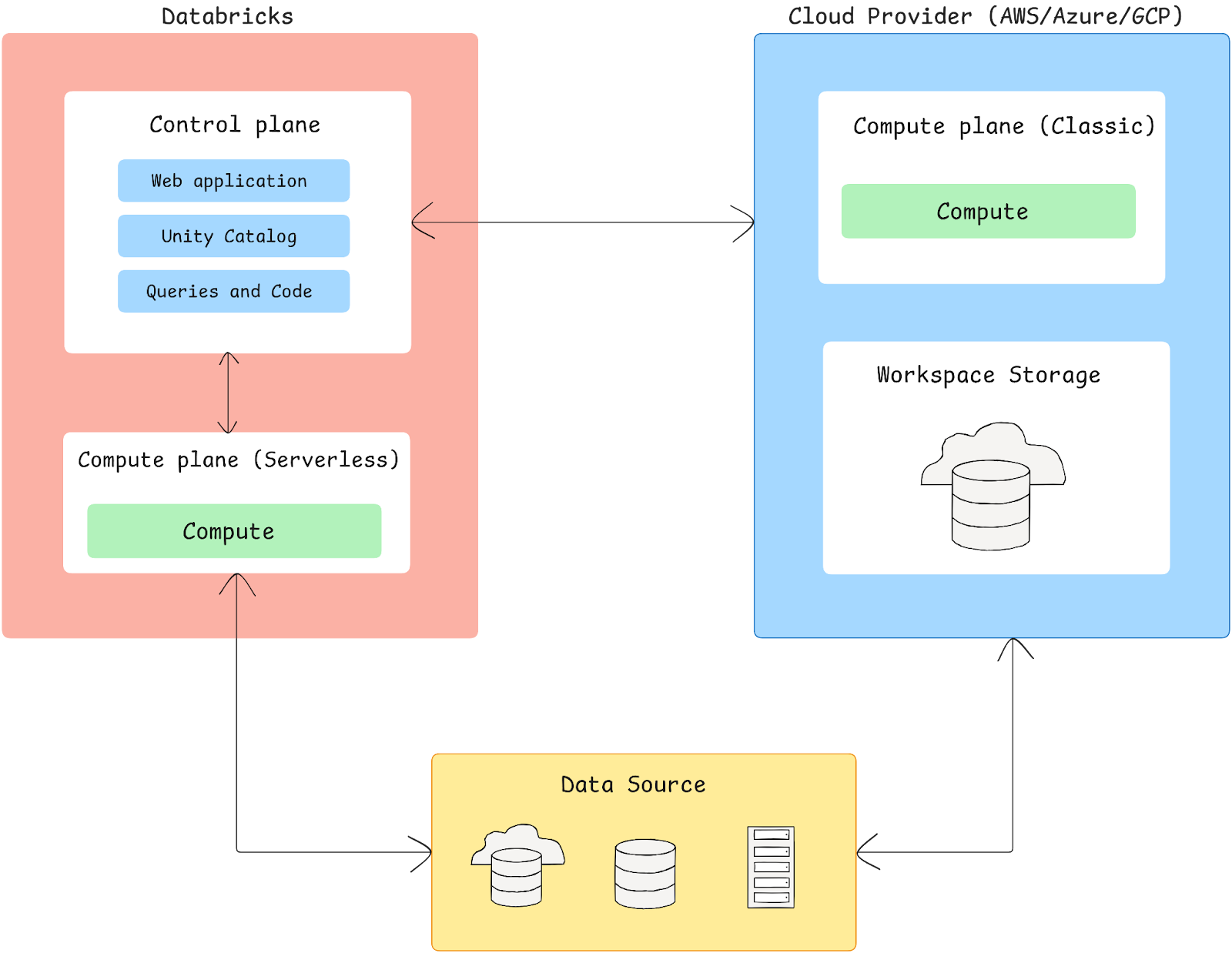

Databricks is engineered to integrate seamlessly with cloud providers like Azure, AWS, and Google Cloud, enabling a unified environment for big data processing and analytics. Its architecture is divided into two primary components—the Control Plane and Compute Plane—which together offer flexibility in data processing, access, and resource management.

The Databricks architecture consists of the following components:

a) Control Plane

The control plane is a layer fully managed by Databricks that hosts key backend services. It orchestrates the cluster lifecycle, schedules jobs, handles user authentication, manages access controls, and provides secure data access. The control plane also hosts the Databricks web application, user interfaces, and APIs, which serve as the entry points to the platform’s services.

b) Compute Plane

The compute plane is where data workloads are executed. Databricks offers two options, which differ in whose cloud account hosts the compute:

➤ Serverless Compute Plane:

- Databricks manages the infrastructure in its own cloud account, so resources are provisioned automatically.

- Supports network isolation to secure workloads, maintaining compliance and privacy.

- Provides instant scaling without requiring you to manage the underlying cloud resources.

➤ Classic (Customer-Managed) Compute Plane:

- Compute resources operate within your cloud account, granting you full control over network, security policies, and scaling.

- Operates within a dedicated Virtual Private Cloud (VPC) on AWS and Google Cloud, or a Virtual Network (VNet) on Azure, enabling detailed security configurations and custom network controls.

c) Storage Architecture (Workspace Storage)

Databricks workspaces are associated with a dedicated storage bucket in AWS or Google Cloud or a storage account in Azure. Key components include:

- Databricks Workspace Storage: Stores notebooks, job logs, cluster logs, and other system files.

- Unity Catalog Metadata: A metadata layer for managing data governance across the platform, providing central access control and compliance features.

- DBFS (Databricks File System): A distributed file system layered over cloud storage, enabling users to organize data within a Databricks environment.

Check out this article to learn more in-depth about Databricks architecture.

Databricks on AWS vs Azure vs GCP: A Quick Comparison

If you're in a hurry, here’s a quick side-by-side comparison of key features for Databricks on AWS, Azure, and GCP.

1) Architecture

| Component | Databricks on AWS | Databricks on Azure | Databricks on GCP |
| --- | --- | --- | --- |
| Compute Infrastructure | ➤ EC2-based clusters with support for multiple instance families ➤ Support for on-demand, reserved, and spot instances ➤ AWS Graviton (ARM) processor support | ➤ Azure VM-based clusters ➤ Support for general-purpose and memory-optimized VM series ➤ Support for spot and reserved instances | ➤ Google Compute Engine (GCE) based clusters ➤ Support for standard, compute-optimized, and memory-optimized machine types ➤ Preemptible VM support |
| Storage Architecture | ➤ Amazon S3 for data lake storage ➤ EBS volumes for cluster storage ➤ Delta Lake with ACID transaction support | ➤ Azure Blob Storage and ADLS Gen2 for data lake ➤ Premium SSD managed disks for cluster storage ➤ Delta Lake with ACID transaction support | ➤ Google Cloud Storage (GCS) for data lake ➤ Persistent disk storage for clusters ➤ Delta Lake with ACID transaction support |
| Identity & Access | ➤ Native AWS IAM integration ➤ SCIM provisioning support ➤ Support for AWS SSO | ➤ Microsoft Entra ID (formerly Azure Active Directory) integration ➤ SCIM provisioning support ➤ Support for Entra ID SSO | ➤ Google Identity and Access Management integration ➤ SCIM provisioning support ➤ Support for Google Cloud Identity SSO |

2) Network and Security

| Feature | Databricks on AWS | Databricks on Azure | Databricks on GCP |
| --- | --- | --- | --- |
| Network Integration | ➤ VPC support with customer-managed VPCs ➤ AWS PrivateLink support ➤ VPC peering capabilities | ➤ Azure VNet injection ➤ Azure Private Link support ➤ VNet peering support | ➤ VPC support ➤ Private Service Connect ➤ VPC Network Peering |
| On-premises Connectivity | ➤ AWS Direct Connect support ➤ VPN connectivity options | ➤ Azure ExpressRoute support ➤ VPN Gateway integration | ➤ Cloud Interconnect support ➤ Cloud VPN options |
| Security Features | ➤ Security groups ➤ Network ACLs ➤ VPC endpoints for AWS services | ➤ Network Security Groups (NSGs) ➤ Azure Firewall integration ➤ Service endpoints | ➤ Cloud Armor integration ➤ Firewall rules ➤ VPC Service Controls |

3) Data Integration

| Service Type | Databricks on AWS | Databricks on Azure | Databricks on GCP |
| --- | --- | --- | --- |
| Data Warehousing | ➤ Native Redshift integration ➤ Redshift Spectrum support | ➤ Azure Synapse Analytics integration ➤ Dedicated SQL pools support | ➤ BigQuery integration ➤ BigQuery ML support |
| Streaming Data | ➤ Amazon Kinesis integration ➤ MSK (Managed Kafka) support | ➤ Event Hubs integration ➤ Azure Stream Analytics support | ➤ Pub/Sub integration ➤ Dataflow support |
| ETL Services | ➤ AWS Glue catalog integration ➤ EMR integration capabilities | ➤ Azure Data Factory integration ➤ Azure Purview support | ➤ Cloud Dataprep integration ➤ Cloud Data Fusion support |

4) Machine Learning Capabilities

| Feature | Databricks on AWS | Databricks on Azure | Databricks on GCP |
| --- | --- | --- | --- |
| ML Platforms | ➤ Integration with SageMaker ➤ MLflow tracking server ➤ Support for deep learning AMIs | ➤ Azure ML integration ➤ MLflow tracking server ➤ Azure ML workspace integration | ➤ Vertex AI integration ➤ MLflow tracking server ➤ Deep Learning VM images |
| GPU Support | ➤ Support for NVIDIA GPUs ➤ P3, P4, G4 instance types | ➤ NCv3, NDv2, NC T4 v3 series ➤ NVIDIA GPU support | ➤ A2 and N1 machine types ➤ NVIDIA GPU support |
| Model Serving | ➤ Model serving via SageMaker endpoints ➤ MLflow model serving | ➤ Azure ML deployment ➤ MLflow model serving | ➤ Vertex AI endpoint deployment ➤ MLflow model serving |

5) Cost Management

| Feature | Databricks on AWS | Databricks on Azure | Databricks on GCP |
| --- | --- | --- | --- |
| Billing Integration | ➤ Unified billing with AWS Marketplace ➤ AWS Cost Explorer integration | ➤ Azure EA integration ➤ Azure Cost Management integration | ➤ GCP Marketplace billing ➤ Cloud Billing integration |
| Cost Optimization | ➤ Instance pools ➤ Spot instance support ➤ AWS Savings Plans compatibility | ➤ Instance pools ➤ Spot VM support ➤ Azure Reserved VM compatibility | ➤ Instance pools ➤ Preemptible VM support ➤ Committed use discounts |
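To make the spot and preemptible options in the cost-optimization row more concrete, here is a minimal sketch that builds the cloud-specific attribute block of a cluster specification. It assumes the field names and enum values of the Databricks Clusters API (`aws_attributes`, `azure_attributes`, `gcp_attributes` and their `availability` values) as we understand them; the cluster name, runtime string, and node type are illustrative placeholders, so check the API reference for your cloud before relying on it.

```python
import json


def spot_attributes(cloud: str, first_on_demand: int = 1) -> dict:
    """Return the cloud-specific part of a cluster spec that requests
    spot/preemptible workers and falls back to on-demand capacity.

    Field names follow the Databricks Clusters API (an assumption here);
    verify them against the API reference for your cloud.
    """
    cloud = cloud.lower()
    if cloud == "aws":
        return {"aws_attributes": {
            "availability": "SPOT_WITH_FALLBACK",
            # The first N nodes (the driver counts as the first) stay on-demand.
            "first_on_demand": first_on_demand,
        }}
    if cloud == "azure":
        return {"azure_attributes": {
            "availability": "SPOT_WITH_FALLBACK_AZURE",
            "first_on_demand": first_on_demand,
            # -1: pay up to the current on-demand price (no price-based eviction).
            "spot_bid_max_price": -1,
        }}
    if cloud == "gcp":
        return {"gcp_attributes": {
            "availability": "PREEMPTIBLE_WITH_FALLBACK_GCP",
        }}
    raise ValueError(f"unsupported cloud: {cloud!r}")


# Hypothetical AWS cluster spec using an example Graviton node type.
cluster_spec = {
    "cluster_name": "nightly-etl",        # placeholder name
    "spark_version": "15.4.x-scala2.12",  # example runtime string
    "node_type_id": "m6gd.xlarge",        # example Graviton instance type
    "num_workers": 4,
    **spot_attributes("aws"),
}

if __name__ == "__main__":
    print(json.dumps(cluster_spec, indent=2))
```

Keeping the driver on-demand (`first_on_demand` of at least 1) is a common design choice, because losing the driver to a spot reclaim fails the whole run, while losing a worker only slows it down.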

6) Compliance and Security

| Feature | Databricks on AWS | Databricks on Azure | Databricks on GCP |
| --- | --- | --- | --- |
| Certifications | ➤ SOC 2 Type II ➤ ISO 27001 ➤ HIPAA ➤ PCI DSS ➤ FedRAMP High | ➤ SOC 2 Type II ➤ ISO 27001 ➤ HIPAA ➤ PCI DSS ➤ FedRAMP High | ➤ SOC 2 Type II ➤ ISO 27001 ➤ HIPAA ➤ PCI DSS |
| Data Protection | ➤ AWS KMS integration ➤ BYOK support ➤ Field-level encryption | ➤ Azure Key Vault integration ➤ BYOK support ➤ Column-level encryption | ➤ Cloud KMS integration ➤ BYOK support ➤ Column-level encryption |

Comprehensive Breakdown of Databricks on AWS vs Azure vs GCP

1) Databricks on AWS

Databricks on AWS offers a unified data analytics and AI platform that integrates directly with Amazon S3, supporting SQL analytics, data science, and machine learning on a single platform. It supports SQL-optimized compute clusters and uses the Lakehouse architecture, which combines data lake scalability with data warehouse performance; Databricks claims up to 12x better price-performance than traditional data warehouses. Databricks on AWS is widely adopted by enterprises for large-scale analytics and AI workloads.

Databricks on AWS—Architecture Overview

Databricks on AWS provides a unified analytics platform that combines data engineering, data science, machine learning, and business analytics on AWS infrastructure. The platform implements a Lakehouse architecture pattern, combining the best elements of data lakes and data warehouses while integrating natively with AWS services.

Databricks on AWS architecture can be broken down into two main planes: the Control Plane and the Compute Plane.

Control Plane

The control plane is managed by Databricks and includes the backend services that handle user interactions and workspace management. Key features of the control plane include:

- Web Application: The user interface for managing Databricks workspaces, notebooks, and jobs.

- REST APIs: For programmatic access to Databricks functionalities, supporting automation and integration with other services.

- Workspace Management: Manages user authentication, job scheduling, and resource allocation.

- Security Features: Implements encryption and access controls for data security.

Compute Plane

This is where data processing occurs. It can operate in two modes:

- Classic Compute Plane: This runs within the customer’s AWS account. All compute resources are provisioned in the customer’s virtual private cloud (VPC), providing complete control over network configurations and security settings. This setup allows for:

  - Elastic Compute Cloud (EC2) Instances: Databricks clusters run on EC2 instances within private subnets, providing a secure environment for data processing.

  - Isolation: Each workspace resides in its own VPC for workload isolation.

- Serverless Compute Plane: Hosted in a Databricks-managed account rather than the customer’s, this allows automatic scaling and resource management with minimal user setup.

Workspace Storage Bucket

Each Databricks workspace relies on an Amazon S3 bucket for workspace-level storage, which serves multiple purposes:

- Storage of Workspace System Data (like notebook revisions, job run details, command results, and Spark logs)

- Databricks File System (DBFS)

- Unity Catalog

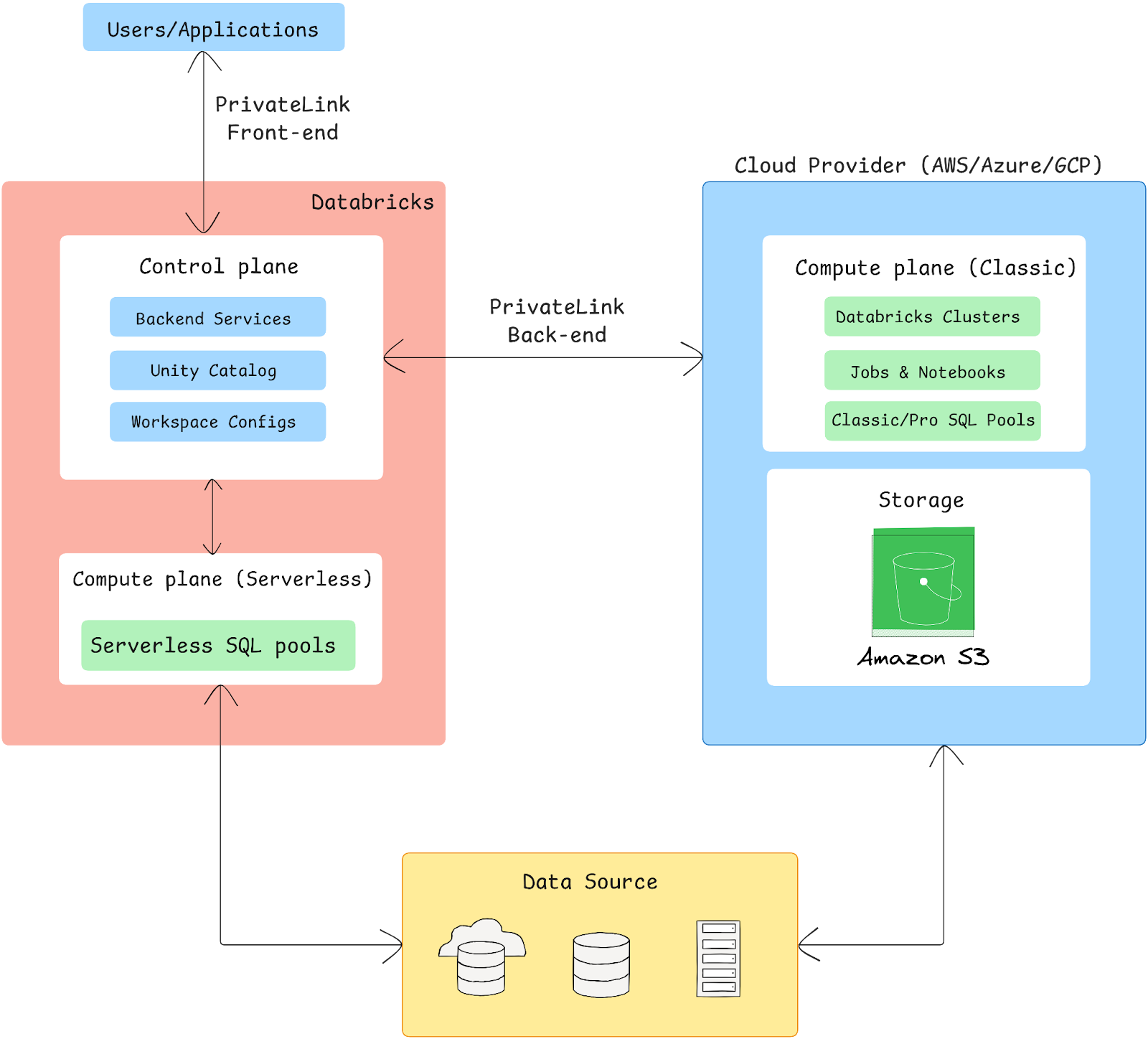

Networking Architecture

The networking architecture implements multiple security layers and connectivity options:

a) PrivateLink Implementation:

i) PrivateLink Front-end:

- Establishes private endpoints in the customer VPC for web application and REST API access.

- Uses custom DNS resolution to route user traffic directly to Databricks workspace URLs.

- Supports mutual TLS authentication for API endpoints.

- Handles traffic routing through the AWS backbone network.

ii) PrivateLink Back-end:

- Creates dedicated VPC endpoints for cluster-to-control-plane communication.

- Implements secure channel encryption for all data in transit.

- Supports custom routing tables and network ACLs.

- Maintains persistent secure connections for optimal performance.

AWS Native Features and Service Integration

Databricks on AWS seamlessly integrates with AWS native services, extending its capabilities for complex data workflows:

- Amazon S3 for Data Storage: Databricks uses Amazon S3 as its primary storage solution.

- AWS Glue for ETL: Databricks connects with AWS Glue, a fully managed ETL solution that streamlines data preparation and loading for analytics.

- Amazon Redshift for Data Warehousing: Databricks can read from and write to Redshift, enabling complex analytics that combine Redshift data with the processing power of Databricks Spark clusters.

- AWS Lambda and EventBridge: AWS Lambda and AWS EventBridge integrate with Databricks to enable real-time event processing, making it easy to trigger Databricks workflows based on AWS events.

- Amazon Kinesis for Real-Time Data Processing: The integration with Amazon Kinesis facilitates real-time data ingestion and processing.

- Amazon Athena for Querying Data: Users can utilize Amazon Athena to run SQL queries directly against data stored in S3.

- AWS Graviton Support: Databricks clusters can use AWS Graviton instances, which are designed for high performance and low cost.

- Integration with Amazon QuickSight: Databricks seamlessly integrates with Amazon QuickSight, allowing users to develop data-driven visualizations and dashboards.

- IAM and IAM Roles: Databricks employs AWS IAM to manage permissions and secure resources, enabling fine-grained access controls over S3 buckets, databases, and other AWS services.

- AWS KMS (Key Management Service): Databricks integrates with AWS KMS to encrypt S3 data and secure other sensitive information.

- SageMaker and Other ML Services: Databricks connects with Amazon SageMaker for deploying and managing ML models, with a flexible API integration that allows data scientists to train and deploy models using familiar AWS tools.

- AWS Direct Connect and VPC Peering: Databricks can connect securely to on-premises networks through AWS Direct Connect, and to other VPCs through VPC peering.

- PrivateLink: PrivateLink ensures secure, private connectivity between Databricks workspaces and AWS services.
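As a rough illustration of the Lambda and EventBridge pattern above, the sketch below shows a Lambda handler that starts an existing Databricks job through the Jobs API `run-now` endpoint (`POST /api/2.1/jobs/run-now`). It assumes an EventBridge rule that forwards S3 "Object Created" events, a job that accepts a hypothetical `source_key` job parameter, and hypothetical `DATABRICKS_HOST`, `DATABRICKS_TOKEN`, and `DATABRICKS_JOB_ID` environment variables. Only the standard library is used, so no extra packages need to be bundled with the function.

```python
import json
import os
import urllib.request
from typing import Optional


def build_run_now_request(host: str, token: str, job_id: int,
                          job_parameters: Optional[dict] = None) -> urllib.request.Request:
    """Build (but do not send) a Jobs API run-now request."""
    body: dict = {"job_id": job_id}
    if job_parameters:
        body["job_parameters"] = job_parameters
    return urllib.request.Request(
        url=f"{host.rstrip('/')}/api/2.1/jobs/run-now",
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {token}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


def lambda_handler(event, context):
    """Entry point for an EventBridge-triggered Lambda function."""
    # S3 events delivered via EventBridge carry the object key in detail.object.key.
    key = event.get("detail", {}).get("object", {}).get("key", "")
    request = build_run_now_request(
        host=os.environ["DATABRICKS_HOST"],
        token=os.environ["DATABRICKS_TOKEN"],
        job_id=int(os.environ["DATABRICKS_JOB_ID"]),
        job_parameters={"source_key": key},
    )
    with urllib.request.urlopen(request, timeout=10) as response:
        # The response body includes the run_id of the triggered run.
        return json.loads(response.read())
```

Separating request construction from sending keeps the handler easy to test without network access. In production you would likely fetch the token from AWS Secrets Manager rather than an environment variable.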

In the following section, we will go into more depth regarding these services.

Performance

Databricks on AWS delivers impressive performance benefits by leveraging AWS's infrastructure to optimize data processing, analytics, and machine learning workloads. This platform boosts performance with optimized cluster setups, efficient file management, and specialized processing frameworks such as Photon for supercharging SQL workloads.

Databricks on AWS also benefits from AWS Graviton instances, which are ARM-based and can offer up to 3x better price-performance than traditional x86 instances, depending on the workload.

To support reliable and scalable data operations, Databricks on AWS relies on Delta Lake, which adds ACID transactions for reliability, along with performance features such as data compaction and automatic file-size tuning that speed up query response times.

Data Storage

Databricks on AWS leverages Amazon S3 as its primary data storage layer, using the Databricks File System (DBFS) as an interface that enables users to work with data as though it’s in a local file system. DBFS provides compatibility with cloud object storage, though the use of Unity Catalog volumes is now preferred over legacy DBFS mount configurations due to enhanced access management and data governance capabilities.
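To show the difference in practice: a legacy DBFS mount is addressed with a path such as `dbfs:/mnt/<mount-name>/...`, while a Unity Catalog volume uses the `/Volumes/<catalog>/<schema>/<volume>/<path>` convention, so access is governed by Unity Catalog permissions on the catalog, schema, and volume. The helper below is a small hypothetical utility (not a Databricks API) for building volume paths; the catalog, schema, and volume names are placeholders.

```python
from pathlib import PurePosixPath


def volume_path(catalog: str, schema: str, volume: str, *parts: str) -> str:
    """Build a Unity Catalog volume path: /Volumes/<catalog>/<schema>/<volume>/<parts...>."""
    for name in (catalog, schema, volume):
        # Each of the three levels must be a single, non-empty path segment.
        if not name or "/" in name:
            raise ValueError(f"invalid catalog/schema/volume name: {name!r}")
    # Strip stray slashes so a leading "/" in a part cannot reset the path.
    cleaned = [p.strip("/") for p in parts if p.strip("/")]
    return str(PurePosixPath("/Volumes", catalog, schema, volume, *cleaned))


# Inside a Databricks notebook you might then read the file with, e.g.:
#   spark.read.json(volume_path("main", "raw", "landing", "events/2024-01-01.json"))
print(volume_path("main", "raw", "landing", "events/2024-01-01.json"))
# prints: /Volumes/main/raw/landing/events/2024-01-01.json
```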

Computing

Databricks on AWS leverages AWS EC2 instances to support a distributed computing environment. Clusters, composed of a driver and worker nodes, execute tasks within an AWS VPC.

Cluster types include:

- All-Purpose Clusters: For interactive analysis.

- Job Clusters: For scheduled or automated data jobs.
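The practical difference between the two cluster types shows up in how they are defined. The sketch below builds two request bodies as plain dictionaries, assuming the Databricks Clusters API (`autoscale`, `autotermination_minutes`) and Jobs API 2.1 (`tasks`, `new_cluster`) field names: an all-purpose cluster that shuts itself down after a period of inactivity, and a job whose cluster is created for each run and terminated when the run finishes. The names, runtime string, node type, and notebook path are hypothetical.

```python
SPARK_VERSION = "15.4.x-scala2.12"  # example runtime string; use one your workspace lists


def all_purpose_cluster(name: str, node_type: str, min_workers: int,
                        max_workers: int, idle_minutes: int = 30) -> dict:
    """Clusters API body for an interactive (all-purpose) cluster."""
    if not 0 <= min_workers <= max_workers:
        raise ValueError("require 0 <= min_workers <= max_workers")
    return {
        "cluster_name": name,
        "spark_version": SPARK_VERSION,
        "node_type_id": node_type,
        "autoscale": {"min_workers": min_workers, "max_workers": max_workers},
        # Shut down after this many idle minutes so the cluster stops accruing charges.
        "autotermination_minutes": idle_minutes,
    }


def job_with_job_cluster(job_name: str, notebook_path: str,
                         node_type: str, num_workers: int) -> dict:
    """Jobs API 2.1 body whose single task runs on a fresh job cluster."""
    return {
        "name": job_name,
        "tasks": [{
            "task_key": "main",
            "notebook_task": {"notebook_path": notebook_path},
            # new_cluster: created for this run and terminated when it ends.
            "new_cluster": {
                "spark_version": SPARK_VERSION,
                "node_type_id": node_type,
                "num_workers": num_workers,
            },
        }],
    }
```

Besides avoiding idle time, job clusters are billed at the Jobs Compute rates in the pricing overview, which are lower than the All-Purpose rates.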

Integration Services

Databricks on AWS provides seamless integration with AWS data services, particularly AWS Glue and Amazon Redshift. Through AWS Glue integration, Databricks can utilize the AWS Glue Data Catalog as an external metastore, enabling consistent metadata management across AWS services. With Amazon Redshift integration, Databricks supports direct connectivity through Redshift's Data API, enabling high-performance data exchange between Databricks clusters and Redshift warehouses. Users can leverage Databricks' JDBC/ODBC connectors to query Redshift data directly or use Databricks' optimized Spark-Redshift connector for bulk data transfers.
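As a minimal sketch of the bulk-transfer path, the snippet below assembles the options you would pass to the Redshift data source in a Databricks notebook (`spark.read.format("redshift").options(**opts).load()`). The connector stages data in S3, which is why it needs a `tempdir`; here we assume Redshift reaches that bucket through an IAM role supplied via the `aws_iam_role` option. The endpoint, database, table, bucket, and role ARN are placeholders, and Redshift login credentials are omitted.

```python
def redshift_read_options(endpoint: str, database: str, table: str,
                          tempdir: str, iam_role_arn: str, port: int = 5439) -> dict:
    """Options for the Redshift data source (read path).

    The connector unloads query results to `tempdir` in S3 and reads them
    from there in parallel, so the S3 location must be reachable by both
    Redshift (via the IAM role) and the Databricks cluster.
    """
    if not tempdir.startswith(("s3://", "s3a://")):
        raise ValueError("tempdir must be an S3 URI")
    return {
        "url": f"jdbc:redshift://{endpoint}:{port}/{database}",
        "dbtable": table,
        "tempdir": tempdir,
        "aws_iam_role": iam_role_arn,
    }


opts = redshift_read_options(
    endpoint="example-cluster.abc123.us-east-1.redshift.amazonaws.com",
    database="analytics",
    table="public.sales",
    tempdir="s3a://example-bucket/redshift-tmp/",
    iam_role_arn="arn:aws:iam::123456789012:role/example-redshift-s3",
)
# In a Databricks notebook (not runnable outside one):
#   df = spark.read.format("redshift").options(**opts).load()
```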

Security and Compliance

Security features on Databricks for AWS include:

- AWS IAM Integration: Enables role-based access control and fine-grained permissions.

- SSO and RBAC: Supports centralized authentication and authorization.

- Compliance Standards: Aligns with AWS’s compliance certifications, ensuring data and network security.

Network Integrations

Network architecture in Databricks on AWS is built on AWS VPC infrastructure. You can deploy Databricks within your own VPC, maintaining complete control over network isolation and security. The deployment supports both Databricks-managed and customer-managed VPCs, with the latter providing additional security features and network customization options. Security groups and network ACLs can be configured to control traffic flow to and from Databricks clusters. The platform also supports AWS PrivateLink for secure connectivity between your Databricks workspace and AWS services, eliminating the need for public internet access.

AI & Machine Learning

For ML workloads, Databricks on AWS integrates with:

- Amazon SageMaker Integration: Models developed with Databricks can be hosted on SageMaker endpoints for production use.

- Delta Lake & MLflow: Provides feature storage and model tracking, supporting both batch and real-time inference options.

Pricing Overview

1) Databricks Workflows & Streaming — Starting at $0.15 per DBU (US East North Virginia region)

➤ Classic Jobs/Classic Jobs Photon Clusters

- Premium plan: $0.15 per DBU

- Enterprise plan: $0.20 per DBU

➤ Serverless (Preview):

- Premium plan: $0.35 per DBU

- Enterprise plan: $0.45 per DBU
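Because classic DBU rates exclude the cloud VMs while serverless rates include the underlying compute, the two are not directly comparable per DBU. The arithmetic below is a simplified sketch of that difference; the DBU consumption per hour, the EC2 price, and the serverless DBU count are made-up inputs (real values depend on instance type, region, and workload), so the result says nothing about which option is cheaper in practice. Discounts and taxes are ignored.

```python
def classic_job_cost(dbu_per_hour: float, hours: float,
                     dbu_rate: float, ec2_cost_per_hour: float) -> float:
    """Classic compute: Databricks DBU charges plus EC2 charges billed by AWS."""
    return dbu_per_hour * hours * dbu_rate + ec2_cost_per_hour * hours


def serverless_job_cost(dbus_consumed: float, dbu_rate: float) -> float:
    """Serverless compute: the DBU rate already includes the underlying compute."""
    return dbus_consumed * dbu_rate


# Hypothetical 2-hour job on the Premium plan: 10 DBU/hour on a cluster whose
# EC2 instances cost $4.00/hour in total.
classic = classic_job_cost(dbu_per_hour=10, hours=2, dbu_rate=0.15, ec2_cost_per_hour=4.00)
# Hypothetical serverless run of the same job consuming 20 DBUs.
serverless = serverless_job_cost(dbus_consumed=20, dbu_rate=0.35)
print(f"classic: ${classic:.2f}, serverless: ${serverless:.2f}")
# prints: classic: $11.00, serverless: $7.00
```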

2) Delta Live Tables — Starting at $0.20 per DBU (US East North Virginia region)

- Premium plan:

- DLT Classic Core: $0.20 per DBU

- DLT Classic Pro: $0.25 per DBU

- DLT Classic Advanced: $0.36 per DBU

- Enterprise plan:

- DLT Classic Core: $0.20 per DBU

- DLT Classic Pro: $0.25 per DBU

- DLT Classic Advanced: $0.36 per DBU

3) Databricks Warehousing — Starting at $0.22 per DBU (US East North Virginia)

- Premium plan:

- SQL Classic: $0.22 per DBU

- SQL Pro: $0.55 per DBU

- SQL Serverless: $0.70 per DBU (includes cloud instance cost)

- Enterprise plan:

- SQL Classic: $0.22 per DBU

- SQL Pro: $0.55 per DBU

- SQL Serverless: $0.70 per DBU (includes cloud instance cost)

4) Interactive workloads (Data Science & ML Pricing) — Starting at $0.40 per DBU (US East North Virginia)

- Premium plan:

- Classic All-Purpose/Classic All-Purpose Photon clusters: $0.55 per DBU

- Serverless: $0.75 per DBU (includes the cost of the underlying compute, and you get 30% off until January 31, 2025)

- Enterprise plan:

- Classic All-Purpose/Classic All-Purpose Photon clusters: $0.65 per DBU

- Serverless (Preview): $0.95 per DBU (includes the cost of the underlying compute, and you get 30% off until January 31, 2025)

5) Databricks Generative AI — Starting at $0.07 per DBU

a) Mosaic AI Gateway (US East North Virginia)

- Premium plan:

- AI Guardrails (Preview):

- Text filtering: $1.50 per million tokens

- Inference Tables (Preview):

- CPU/GPU endpoints: $0.50/GB

- Foundation Model endpoints: $0.20/million tokens

- Usage Tracking (Preview):

- CPU/GPU endpoints: $0.10/GB

- Foundation Model endpoints: $0.04/million tokens


- Enterprise plan:

- AI Guardrails (Preview):

- Text filtering: $1.50 per million tokens

- Inference Tables (Preview):

- CPU/GPU endpoints: $0.50/GB

- Foundation Model endpoints: $0.20/million tokens

- Usage Tracking (Preview):

- CPU/GPU endpoints: $0.10/GB

- Foundation Model endpoints: $0.04/million tokens


b) Mosaic AI Model Serving (US East North Virginia)

- Premium plan:

- CPU Model Serving: $0.070 per DBU (Databricks Unit), includes cloud instance cost

- GPU Model Serving: $0.07 per DBU, includes cloud instance cost

- Enterprise plan:

- CPU Model Serving: $0.07 per DBU, includes cloud instance cost

- GPU Model Serving: $0.07 per DBU, includes cloud instance cost

c) Mosaic AI Foundation Model Serving

See Mosaic AI Foundation Model Serving pricing breakdown.

d) Shutterstock ImageAI (US East North Virginia)

- Premium plan:

- Flat rate: $0.060 per generated image

- Enterprise plan:

- Flat rate: $0.060 per generated image

e) Mosaic AI Vector Search (US East North Virginia)

- Premium plan:

- Base rate: $0.28/Hour per unit

- Each unit provides 2M vector capacity

- Includes up to 30GB of storage free

- Enterprise plan:

- Base rate: $0.28/Hour per unit

- Each unit provides 2M vector capacity

- Includes up to 30GB of storage free
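Vector Search capacity is billed per unit-hour, so a rough monthly estimate is the number of units times the hourly rate times the hours the endpoint runs. The sketch below uses the figures listed above ($0.28 per unit-hour, 2M vectors per unit) and assumes roughly 730 hours per month, at least one provisioned unit, and no storage beyond the included 30 GB.

```python
import math

RATE_PER_UNIT_HOUR = 0.28     # USD, from the price list above
VECTORS_PER_UNIT = 2_000_000  # capacity per unit
HOURS_PER_MONTH = 730         # approximate average month


def vector_search_units(num_vectors: int) -> int:
    """Units needed to hold num_vectors (assumes at least one unit)."""
    return max(1, math.ceil(num_vectors / VECTORS_PER_UNIT))


def vector_search_monthly_cost(num_vectors: int, hours: float = HOURS_PER_MONTH) -> float:
    """Estimated cost for keeping the needed units running for `hours`."""
    return vector_search_units(num_vectors) * RATE_PER_UNIT_HOUR * hours


# 5M vectors need 3 units: 3 * $0.28 * 730 h
print(f"${vector_search_monthly_cost(5_000_000):,.2f}")
# prints: $613.20
```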

f) Mosaic AI Agent Evaluation (US East North Virginia)

- Premium plan:

- Base rate: $0.070 per judge request

- Enterprise plan:

- Base rate: $0.070 per judge request

g) Mosaic AI Model Training - Fine-tuning (US East North Virginia)

- Premium plan:

- Base rate: $0.65 per DBU

- Enterprise plan:

- Base rate: $0.65 per DBU

h) Mosaic AI Model Training - Pre-training (US)

- On-Demand Training: $0.65 per DBU

- Training Reservation: $0.55 per DBU

- Training Hero Reservation: $0.75 per DBU

See Mosaic AI Model Training - Pre-training.

i) Online Tables (Preview) (US East North Virginia)

- Premium plan:

- Online Tables Capacity Unit: $0.70 per unit per hour (50% promotional discount until Jan 31, 2025)

- Online Tables Storage: $0.345 per GB-month

- Enterprise plan:

- Online Tables Capacity Unit: $0.90 per unit per hour (50% promotional discount until Jan 31, 2025)

- Online Tables Storage: $0.345 per GB-month

See Online Tables (Preview).

2) Databricks on Azure

Databricks on Azure provides high-performance, auto-scaling Apache Spark clusters optimized for big data and machine learning workloads. Databricks cites Spark performance improvements of up to 50x in certain scenarios, which makes Azure Databricks a strong choice for enterprises that need advanced data analytics and machine learning. As a managed service, it simplifies setup and integrates closely with Azure’s suite of services, providing a unified platform for data engineering, analytics, and data science teams.

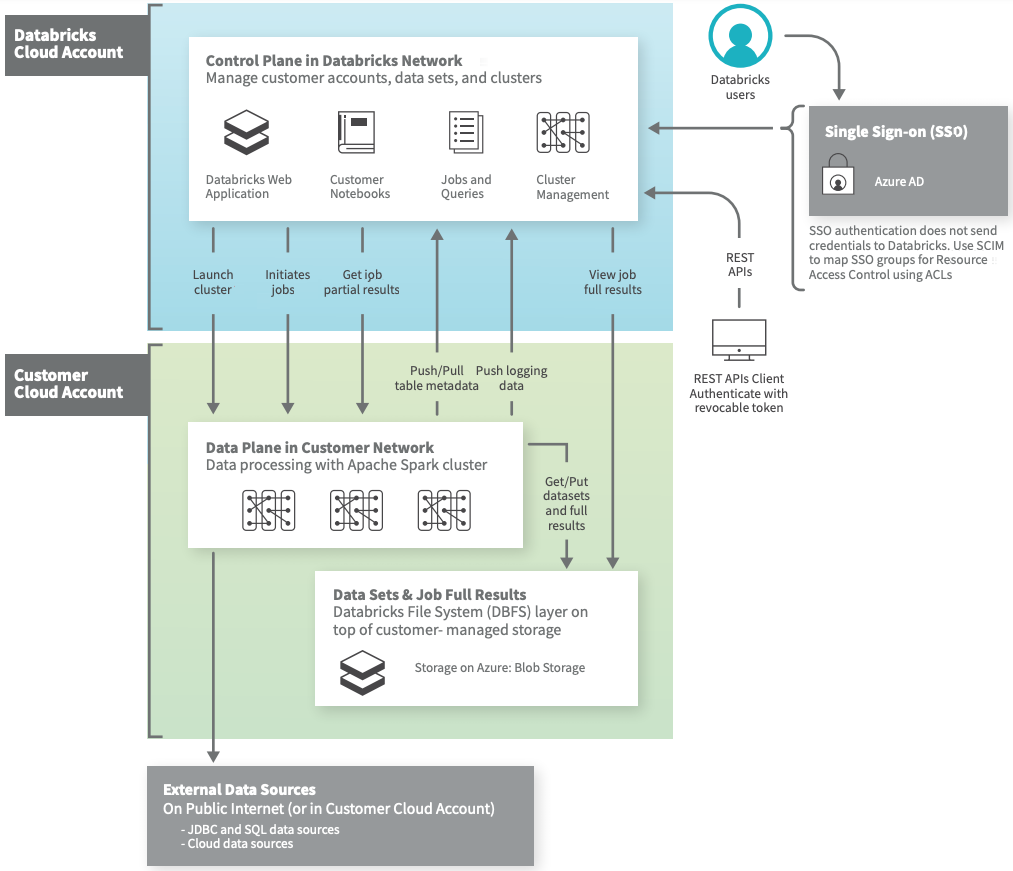

Databricks on Azure—Architecture Overview

Azure Databricks integrates Apache Spark’s data processing capabilities with Azure’s secure cloud infrastructure, enabling streamlined data analytics and machine learning.

Azure Databricks is built on a two-plane architecture: the Control Plane and the Compute Plane, providing separation of data processing and management layers. This architecture is pivotal for secure and efficient analytics at scale on the Azure platform.

- Control Plane: Managed entirely by Databricks, the control plane is located outside of the user’s Azure subscription. It handles backend tasks, including metadata storage, cluster lifecycle management, and job orchestration, with all communications secured through encrypted channels.

- Compute Plane: The compute plane is where data processing takes place. Azure Databricks offers two configurations:

  - Classic Compute: Runs in the user’s Azure subscription, inside the user's Virtual Network (VNet), with customizable access controls.

  - Serverless Compute: Runs on compute resources fully managed by Databricks outside the user’s subscription, providing automatic scaling with minimal infrastructure oversight required from users.

Data Storage and Integration

Azure Databricks integrates seamlessly with Azure’s storage solutions, such as Azure Data Lake Storage (ADLS) and Azure Blob Storage. These connectors, optimized by Microsoft, provide high-speed data access and support efficient storage scalability for large datasets. The Delta Lake storage layer also adds ACID transactions and schema enforcement, making it ideal for creating Lakehouse architectures that combine data lakes and data warehouses into a unified data management approach.

Networking and Security

Networking within Azure Databricks can be configured using two primary approaches:

- Databricks-Managed VNet: Databricks manages the virtual network and associated security configurations. Network Security Groups (NSGs) and routing are handled automatically, simplifying the setup for most users but with limited customization options.

- Customer-Managed VNet (VNet Injection): Allows users to configure custom VNets, granting control over IP address ranges, private endpoints, and on-premises connectivity.

All configurations use Azure’s private backbone network to minimize exposure to the public internet. Secure Cluster Connectivity (SCC) adds another layer: cluster nodes have no public IP addresses and no open inbound ports, and clusters reach the control plane over an outbound, encrypted connection.
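When you bring your own VNet (VNet injection), subnet sizing caps how large your clusters can grow. The sketch below checks a proposed layout with Python's `ipaddress` module. It assumes, based on Azure Databricks VNet injection guidance as we understand it, that the workspace uses two subnets (host/public and container/private), that each cluster node takes one IP address in each, that Azure reserves five addresses in every subnet, and that each subnet must be /26 or larger; verify these limits against the current Azure Databricks documentation.

```python
import ipaddress

AZURE_RESERVED_PER_SUBNET = 5  # Azure reserves 5 IPs in every subnet
MAX_PREFIX = 26                # assumed minimum subnet size (/26)


def max_nodes(vnet_cidr: str, host_cidr: str, container_cidr: str) -> int:
    """Return the maximum number of cluster nodes the two subnets can hold."""
    vnet = ipaddress.ip_network(vnet_cidr)
    host = ipaddress.ip_network(host_cidr)
    container = ipaddress.ip_network(container_cidr)
    for subnet in (host, container):
        if not subnet.subnet_of(vnet):
            raise ValueError(f"{subnet} is outside {vnet}")
        if subnet.prefixlen > MAX_PREFIX:
            raise ValueError(f"{subnet} is smaller than /{MAX_PREFIX}")
    if host.overlaps(container):
        raise ValueError("host and container subnets overlap")
    # Every node needs one address in each subnet, so the smaller subnet is the limit.
    smallest = min(host.num_addresses, container.num_addresses)
    return smallest - AZURE_RESERVED_PER_SUBNET


print(max_nodes("10.28.0.0/16", "10.28.0.0/24", "10.28.1.0/26"))
# prints: 59
```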

Security Features

Azure Databricks integrates robust security features to meet stringent enterprise requirements:

- Microsoft Entra ID (formerly Azure Active Directory) supports user authentication, Single Sign-On (SSO), and RBAC, enabling secure and managed user access.

- Private Endpoints (Azure Private Link) let you reach Databricks and other Azure services over private IP addresses, keeping connections secure without exposing those services to the internet.

- Encryption at rest and in transit, with key management through Azure Key Vault, adds an extra layer of protection for sensitive data.

- Audit Logging through Azure Monitor provides insights into workspace activity, helping organizations maintain visibility and compliance.

We will go into greater detail regarding the capabilities and services offered by Databricks on Azure.

Azure Native Features and Service Integration

Databricks on Azure includes multiple integrations with Azure-native services. Here’s a breakdown of key features and integrations:

- Azure Data Lake Storage (ADLS): Scalable storage for structured, semi-structured, and unstructured data, ideal for big data workloads.

- Azure Data Factory: Facilitates ETL workflows to move and transform data for use within Databricks.

- Azure Synapse Analytics: Supports seamless data exchange for advanced analytics and near-real-time processing.

- Azure Event Hubs: Enables real-time event streaming into Databricks for immediate analysis.

- Azure Key Vault: Manages sensitive information securely within Databricks environments.

- Azure Monitor: Provides insights into Databricks operations for efficient performance management.

- Power BI: Integrates for real-time visualization and data sharing.

- Microsoft Entra ID (formerly Azure Active Directory): Offers identity management and SSO for secure, simplified access.

- Microsoft Purview: Supports governance by connecting with Databricks’ Unity Catalog for data visibility and compliance.

- Azure DevOps: Integrates version control, CI/CD, and project management into Databricks workflows.

In the following sections, we will go over each of these services and features in further detail.

Performance and Scalability

Databricks on Azure uses an optimized version of Apache Spark that, according to Databricks, can run some workloads up to 50 times faster than conventional Spark setups, which suits big data ETL, interactive queries, and real-time analytics.

Data Storage

Azure Databricks primarily uses Azure Data Lake Storage Gen2 (ADLS Gen2), which combines features of ADLS Gen1 and Blob Storage. This supports:

- Hierarchical namespaces

- ACID transactions via Delta Lake

You can store structured, semi-structured, and unstructured data at exabyte scale.
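Data in ADLS Gen2 is typically addressed from Databricks with an `abfss://` URI of the form `abfss://<container>@<storage-account>.dfs.core.windows.net/<path>`. The hypothetical helper below builds such URIs and applies a simplified version of Azure's naming rules (storage account names are 3 to 24 lowercase letters and digits; container names are 3 to 63 lowercase letters, digits, and single hyphens). The account and container names in the example are placeholders.

```python
import re

ACCOUNT_PATTERN = r"[a-z0-9]{3,24}"
# Simplified container rule: 3-63 chars, lowercase letters, digits and single
# hyphens, starting and ending with a letter or digit.
CONTAINER_PATTERN = r"(?=.{3,63}\Z)[a-z0-9]+(?:-[a-z0-9]+)*"


def abfss_uri(account: str, container: str, path: str = "") -> str:
    """Build an ABFS (secure) URI for a path in an ADLS Gen2 container."""
    if not re.fullmatch(ACCOUNT_PATTERN, account):
        raise ValueError(f"invalid storage account name: {account!r}")
    if not re.fullmatch(CONTAINER_PATTERN, container):
        raise ValueError(f"invalid container name: {container!r}")
    return f"abfss://{container}@{account}.dfs.core.windows.net/{path.lstrip('/')}"


# In a notebook, e.g.: spark.read.format("delta").load(abfss_uri(...))
print(abfss_uri("contosodatalake", "bronze", "sales/2024/"))
# prints: abfss://bronze@contosodatalake.dfs.core.windows.net/sales/2024/
```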

Computing

For computing power, Azure Databricks runs on Azure Virtual Machines, offering options from general-purpose VMs to GPU-enabled instances. The platform supports:

- Azure Confidential Computing (using DCsv2 and DCsv3 series VMs) provides isolated environments with Intel SGX for secure workloads.

- AMD SEV-SNP Technology in select VMs enables full VM memory encryption, adding further security layers for sensitive computations.

Integration Services

Databricks on Azure can be easily integrated with other Azure services. You can:

- Connect directly to Azure Synapse Analytics for SQL operations.

- Use Azure Data Factory for workflow orchestration.

Security and Compliance

Databricks on Azure benefits from several Azure security features that strengthen Databricks workflows:

- User Authentication: Managed via Microsoft Entra ID (formerly Azure Active Directory), supporting single sign-on (SSO) to streamline secure access.

- Secrets Management: Secured through Azure Key Vault, allowing Databricks to store and manage sensitive information, such as API keys, securely.

- Role-Based Access Control (RBAC): Implements fine-grained permissions, enabling control over access to resources at the workspace, cluster, and job levels.
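A common way these pieces fit together is a cluster Spark configuration that lets a Microsoft Entra ID service principal access ADLS Gen2, with the client secret pulled from a Key Vault-backed secret scope through the `{{secrets/<scope>/<key>}}` reference syntax instead of being stored in plain text. The sketch below generates that configuration as a dictionary, modeled on the OAuth pattern in the Azure Databricks documentation as we understand it; the storage account, application ID, tenant ID, scope, and key names are hypothetical, and the property names should be checked against the current docs.

```python
def adls_oauth_spark_conf(account: str, client_id: str, tenant_id: str,
                          secret_scope: str, secret_key: str) -> dict:
    """Cluster Spark config for service-principal (OAuth) access to ADLS Gen2."""
    suffix = f"{account}.dfs.core.windows.net"
    prefix = "spark.hadoop.fs.azure.account"
    # Secret reference resolved by Databricks, so the value never appears in the config.
    secret_ref = "{{secrets/" + f"{secret_scope}/{secret_key}" + "}}"
    return {
        f"{prefix}.auth.type.{suffix}": "OAuth",
        f"{prefix}.oauth.provider.type.{suffix}":
            "org.apache.hadoop.fs.azurebfs.oauth2.ClientCredsTokenProvider",
        f"{prefix}.oauth2.client.id.{suffix}": client_id,
        f"{prefix}.oauth2.client.secret.{suffix}": secret_ref,
        f"{prefix}.oauth2.client.endpoint.{suffix}":
            f"https://login.microsoftonline.com/{tenant_id}/oauth2/token",
    }
```

Each key/value pair can be entered in the cluster's Spark config, or the dictionary can be passed as `spark_conf` in a Clusters API request.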

Network Integrations

For network security in Azure Databricks, Azure Virtual Networks (VNets) are key: they isolate and control network traffic. You can attach Network Security Groups (NSGs) to VNet subnets to manage access to resources, which is essential for keeping your Azure Databricks workspace secure. Azure Private Link also lets you create private connections to Databricks services, limiting exposure to the public internet by routing traffic through Azure's private network.

To make remote access more secure, you can use Azure ExpressRoute for private connections from on-premises environments to Azure Databricks over a dedicated network, avoiding the public internet. If ExpressRoute isn't an option, VPN Gateway can provide encrypted remote access. You can also enable secure cluster connectivity, also known as “No Public IP”, which prevents cluster nodes from being assigned public IP addresses, keeps them reachable only within your VNet, and gives you more control over network access.

AI & Machine Learning

Azure Databricks provides robust AI and ML support, integrating with Azure Machine Learning for model training and deployment and with MLflow for tracking and versioning models. It also works with Azure AI services for building AI applications at scale.

Azure Databricks Pricing Overview

1) Databricks Workflows & Streaming — Starting at $0.15 per DBU (US East region)

➤ Classic Jobs/Classic Jobs Photon Clusters

- Standard plan: $0.15 per DBU

- Premium plan: $0.30 per DBU

➤ Serverless (Preview):

- Premium plan: $0.45 per DBU

2) Delta Live Tables — Starting at $0.20 per DBU

Premium Plan (Only plan available):

- DLT Classic Core: $0.30 per DBU

- DLT Classic Pro: $0.38 per DBU

- DLT Classic Advanced: $0.54 per DBU

3) Databricks Warehousing — Starting at $0.22 per DBU (US East North Virginia)

- Premium plan:

- SQL Classic: $0.22 per DBU

- SQL Pro: $0.55 per DBU

- SQL Serverless: $0.70 per DBU (includes cloud instance cost)

4) Interactive workloads (Data Science & ML Pricing) — Starting at $0.40 per DBU (US East region)

- Standard Plan:

- Classic All-Purpose/Classic All-Purpose Photon clusters: $0.40 per DBU

- Premium Plan:

- Classic All-Purpose/Classic All-Purpose Photon clusters: $0.55 per DBU

- Serverless (Preview): $0.95 per DBU (includes the cost of the underlying compute, and you get 30% off until January 31, 2025)

5) Databricks Generative AI — Starting at $0.07 per DBU

a) Mosaic AI Gateway

- Premium Plan (Only plan available):

  - AI Guardrails (Preview):

    - Text filtering: $1.50 per million tokens

  - Inference Tables (Preview):

    - CPU/GPU endpoints: $0.50/GB

    - Foundation Model endpoints: $0.20/million tokens

  - Usage Tracking (Preview):

    - CPU/GPU endpoints: $0.10/GB

    - Foundation Model endpoints: $0.04/million tokens

You don't have to pay extra for features such as query permissions, rate limiting, and traffic routing.
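Gateway charges scale linearly with usage, so estimating them comes down to converting traffic into millions of tokens. The sketch below assumes a hypothetical Foundation Model endpoint that handles 250 million tokens a month with guardrails, inference tables, and usage tracking all enabled. It also assumes each feature counts every token. The charge for serving the model itself is not included.

```python
# Mosaic AI Gateway rates for Foundation Model endpoints, from the list above (USD).
GUARDRAILS_PER_M_TOKENS = 1.50
INFERENCE_TABLES_FM_PER_M_TOKENS = 0.20
USAGE_TRACKING_FM_PER_M_TOKENS = 0.04


def gateway_cost(tokens: int) -> dict:
    """Monthly Gateway add-on charges, assuming every token hits every feature."""
    millions = tokens / 1_000_000
    costs = {
        "guardrails": millions * GUARDRAILS_PER_M_TOKENS,
        "inference_tables": millions * INFERENCE_TABLES_FM_PER_M_TOKENS,
        "usage_tracking": millions * USAGE_TRACKING_FM_PER_M_TOKENS,
    }
    costs["total"] = sum(costs.values())  # summed before "total" is added
    return costs


# Hypothetical traffic: 250 million tokens per month.
for item, usd in gateway_cost(250_000_000).items():
    print(f"{item:>16}: ${usd:,.2f}")
```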

b) Mosaic AI Model Serving

- Premium Plan (Only plan available):

- CPU Model Serving: $0.07 per DBU, includes cloud instance cost

- GPU Model Serving: $0.07 per DBU, includes cloud instance cost

c) Mosaic AI Foundation Model Serving

See Mosaic AI Foundation Model Serving pricing breakdown.

d) Shutterstock ImageAI

- Premium Plan (Only plan available):

- Flat rate: $0.060 per generated image

e) Mosaic AI Vector Search

- Premium Plan (Only plan available):

- Base rate: $0.28 per unit per hour

- Each unit provides 2M vector capacity

- Includes up to 30GB of storage free

Here is the pricing breakdown:

| Vector capacity per unit (768 dimensions) | Price per unit per hour | DBUs per hour (all regions) |
| --- | --- | --- |
| 2M | $0.28 | 4.000 |
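Capacity is sold in 2M-vector units, so sizing an index means rounding the vector count up to whole units. The sketch below assumes units are provisioned in whole numbers and run for a 730-hour month. That figure is a common billing approximation, not one taken from the pricing page. The 5-million-vector index is hypothetical.

```python
import math

VECTORS_PER_UNIT = 2_000_000  # capacity per unit at 768 dimensions
PRICE_PER_UNIT_HOUR = 0.28  # USD
DBU_PER_UNIT_HOUR = 4.0
HOURS_PER_MONTH = 730  # assumption: 8,760 hours / 12


def units_needed(num_vectors: int) -> int:
    """Round up, assuming capacity is provisioned in whole units."""
    return math.ceil(num_vectors / VECTORS_PER_UNIT)


def monthly_cost(num_vectors: int) -> float:
    """Cost of keeping the required units running for a full month."""
    return units_needed(num_vectors) * PRICE_PER_UNIT_HOUR * HOURS_PER_MONTH


# Hypothetical index of 5 million 768-dimensional embeddings.
print(units_needed(5_000_000))  # 3 units
print(f"${monthly_cost(5_000_000):,.2f} per month")
# The table's two price columns imply a per-DBU rate: $0.28 / 4 DBU.
print(round(PRICE_PER_UNIT_HOUR / DBU_PER_UNIT_HOUR, 2))
```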

f) Mosaic AI Agent Evaluation

- Premium Plan (Only plan available):

- Base rate: $0.070 per judge request

g) Mosaic AI Model Training - Fine-tuning

- Premium Plan (Only plan available):

- Base rate: $0.65 per DBU

h) Mosaic AI Model Training - Pre-training

See Mosaic AI Model Training - Pre-training.

i) Online Tables (Preview)

- Premium Plan (Only plan available):

- Online Tables Capacity Unit: $0.90 per unit per hour (50% promotional discount until Jan 31, 2025)

- Online Tables Storage: $0.390 per GB-month

See Online Tables (Preview).
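Online Tables combine an hourly capacity charge with a monthly storage charge. The sketch below uses the $0.90 capacity rate exactly as listed; it is worth confirming whether that figure is before or after the promotional discount. It also assumes capacity units run for a 730-hour month. The 2 units and 50 GB are hypothetical.

```python
CAPACITY_UNIT_PER_HOUR = 0.90  # USD, listed rate used as-is
STORAGE_PER_GB_MONTH = 0.390  # USD
HOURS_PER_MONTH = 730  # assumption: common monthly approximation


def online_tables_monthly(capacity_units: float, storage_gb: float) -> float:
    """Capacity running all month plus storage for the month."""
    compute = capacity_units * CAPACITY_UNIT_PER_HOUR * HOURS_PER_MONTH
    storage = storage_gb * STORAGE_PER_GB_MONTH
    return compute + storage


# Hypothetical: 2 capacity units running all month, 50 GB stored.
print(f"${online_tables_monthly(2, 50):,.2f}")
```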

3) Databricks on GCP

Databricks on Google Cloud integrates the open architecture of Databricks with Google Cloud’s robust infrastructure, offering a flexible, scalable platform for data engineering, data science, and analytics. It runs on Google Kubernetes Engine (GKE) with the first Kubernetes-based Databricks runtime, optimized to deliver high-performance analytics and fast insights. This managed integration provides seamless compatibility with Google Cloud’s native security, billing, and management tools, enabling organizations to leverage containerized deployments for efficient, scalable, and secure data processing workflows.

Deploying Databricks on Google Cloud is streamlined through a one-click deployment option in the Google Cloud Console. Once deployed, Databricks supports data engineering, collaborative data science, and production-level machine learning across Google Cloud services, including BigQuery and Google Cloud Storage.

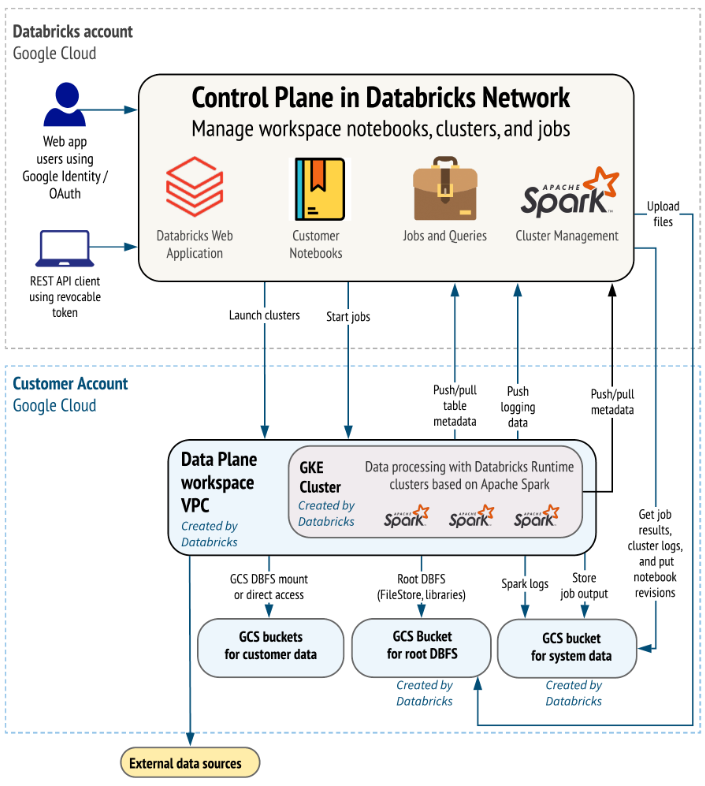

Databricks on GCP—Architecture Overview

Databricks on Google Cloud Platform (GCP) architecture is designed to enable efficient data engineering, data science, and analytics workflows while leveraging the scalability and security of GCP.

As on the other clouds, the architecture of Databricks on Google Cloud consists of two main components: the Control Plane and the Data Plane (which Databricks now calls the compute plane).

Control Plane: The Databricks control plane is hosted and managed by Databricks in its own cloud infrastructure. It handles:

- Workspace management and cluster lifecycle operations

- Job scheduling and monitoring

- Security and access management, including API operations

- Service metadata storage and orchestration

All interactions in the control plane are securely managed, and customer data does not traverse this layer.

Data Plane: Data Plane resides within the customer's GCP account and is where data processing occurs. Compute resources, typically deployed as clusters, operate within this plane to perform data engineering, analytics, and machine learning tasks. These clusters connect to Google Cloud Storage (GCS), BigQuery, and other external data sources for streamlined data ingestion and processing. The data plane is secured with Virtual Private Cloud (VPC) configurations that enable private communication between services without public internet exposure.

Integration with Google Cloud Services

Databricks on Google Cloud tightly integrates with several GCP services, enhancing data processing workflows and allowing efficient data access, transformation, and analytics:

- Google Cloud Storage (GCS): Used as the primary data storage solution, supporting Delta Lake with ACID transactions, time travel, and scalable metadata handling.

- BigQuery: Databricks provides native support for querying BigQuery tables, enabling users to perform scalable SQL analytics directly within the Databricks environment. BigQuery integration supports analytics on large datasets with minimal data movement.

- Google Kubernetes Engine (GKE): Databricks runs its compute environment on GKE, leveraging Google’s Kubernetes infrastructure for flexible, containerized deployments that scale to meet dynamic workload demands.

- AI and Machine Learning Tools: Google Cloud's AI Platform (since succeeded by Vertex AI) integrates with Databricks for model training and deployment, so users can build and operationalize machine learning models directly on data processed within Databricks.

Security Features

Databricks on GCP includes several layers of security to protect data, infrastructure, and user access:

- Encryption: Data at rest and in transit is encrypted. Customers can choose between Databricks-managed keys and Google Cloud’s Customer-Managed Encryption Keys (CMEK) for enhanced control.

- Role-Based Access Control (RBAC): Fine-grained access control ensures that users have permission only to access the data and functionalities they are authorized for, supporting secure multi-user environments.

- Network Security: VPC Service Controls are used to set up security perimeters around resources, limiting the exposure of sensitive data and services. Also, firewall rules provide granular control over network traffic to manage inbound and outbound requests effectively.
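CMEK on Google Cloud refers to a Cloud KMS key by its full resource name. It can help to assemble and sanity-check that name before entering it in a Databricks workspace's encryption settings. The sketch below assumes the standard Cloud KMS naming pattern `projects/PROJECT/locations/LOCATION/keyRings/KEY_RING/cryptoKeys/KEY`. The helper functions and all project, region, and key names are hypothetical.

```python
import re

# Cloud KMS key resource name pattern with named parts.
KMS_KEY_PATTERN = re.compile(
    r"projects/(?P<project>[^/]+)/locations/(?P<location>[^/]+)"
    r"/keyRings/(?P<key_ring>[^/]+)/cryptoKeys/(?P<key>[^/]+)"
)


def kms_key_name(project: str, location: str, key_ring: str, key: str) -> str:
    """Assemble a Cloud KMS key resource name from its parts."""
    return f"projects/{project}/locations/{location}/keyRings/{key_ring}/cryptoKeys/{key}"


def parse_kms_key_name(name: str) -> dict:
    """Split a key resource name into its parts, rejecting malformed input."""
    match = KMS_KEY_PATTERN.fullmatch(name)
    if match is None:
        raise ValueError(f"not a Cloud KMS key resource name: {name!r}")
    return match.groupdict()


# Hypothetical project, region, key ring, and key.
name = kms_key_name("my-project", "us-east1", "dbx-ring", "dbx-cmek")
print(name)
print(parse_kms_key_name(name)["location"])
```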

GCP Native Features and Service Integration

Databricks on Google Cloud is designed to leverage Google’s native services, creating an ecosystem where data workflows can be executed seamlessly across GCP’s data and analytics platforms. Here’s an overview of key integrations:

- Google Cloud Storage (GCS)

- BigQuery

- Google Cloud AI Platform

- Google Identity and Access Management (IAM)

You can directly access and analyze data without significant data movement, benefiting from the comprehensive Google ecosystem while maintaining Databricks’ Lakehouse architecture.

Performance and Scalability

Designed specifically to harness Google Cloud’s infrastructure, Databricks on GCP is optimized for scalable and efficient data processing. This deployment is the first to feature a Kubernetes-based Databricks runtime, allowing users to leverage Google Kubernetes Engine (GKE) for flexible, containerized compute environments that scale to meet varying workload demands efficiently.

Data Storage

Databricks on Google Cloud natively integrates with Google Cloud Storage (GCS), which provides scalable, durable storage for data of all types and sizes. This integration also supports Delta Lake storage, which brings the benefits of ACID transactions and structured file management, offering reliability and performance for both batch and real-time data processing needs.

Computing

Databricks on GCP utilizes both Google Compute Engine and Google Kubernetes Engine (GKE) for its compute infrastructure:

- Compute Engine: Provides scalable virtual machine instances, supporting various machine types tailored to workload requirements, including memory-optimized and compute-optimized instances.

- GKE: Runs containerized workloads, allowing Databricks to deploy a Kubernetes-based runtime that facilitates auto-scaling and high availability, making it ideal for handling diverse data processing and AI tasks.
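Autoscaling and machine choice are set per cluster. In this minimal sketch, the dictionary follows the request body of the Databricks Clusters API create call. It pairs a memory-optimized Compute Engine machine type with an autoscaling range and a GCP availability setting. The runtime version string, machine type, cluster name, and the `validate_autoscale` helper are illustrative assumptions. Check which machine types and runtimes your workspace actually offers.

```python
import json

# Hypothetical cluster definition shaped like a Clusters API create request.
cluster_spec = {
    "cluster_name": "etl-autoscaling",
    "spark_version": "14.3.x-scala2.12",  # assumption: pick a runtime your workspace lists
    "node_type_id": "n2-highmem-4",  # assumption: memory-optimized Compute Engine type
    "autoscale": {"min_workers": 2, "max_workers": 8},
    "autotermination_minutes": 30,
    "gcp_attributes": {
        # Executors on preemptible VMs, falling back to on-demand VMs if unavailable.
        "availability": "PREEMPTIBLE_WITH_FALLBACK_GCP",
    },
}


def validate_autoscale(spec: dict) -> None:
    """Reject autoscaling ranges that are negative or inverted."""
    bounds = spec["autoscale"]
    if not 0 <= bounds["min_workers"] <= bounds["max_workers"]:
        raise ValueError("autoscale requires 0 <= min_workers <= max_workers")


validate_autoscale(cluster_spec)
print(json.dumps(cluster_spec, indent=2))
```

Databricks adds or removes workers between `min_workers` and `max_workers` as load changes, so the range caps both cost and capacity.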

Integration Services

Key integration services for Databricks on GCP include:

- BigQuery: Provides fast, scalable SQL analytics for large datasets, enabling both ad hoc and scheduled queries within Databricks.

- Google Cloud AI Platform: Enables model training, deployment, and management, with Managed MLflow in Databricks supporting experiment tracking and model lifecycle management.

Security and Compliance

Databricks on GCP leverages Google Cloud’s advanced security features, aligning with stringent enterprise security protocols:

- Google Identity and Access Management (IAM): Manages access at an organizational level, providing a unified point for managing Databricks user permissions within the Google Cloud environment.

- Google Cloud Identity: Supports secure authentication and authorization across users and applications, strengthening Databricks’ security setup.

- Secrets Management: Google Cloud’s Secret Manager integrates with Databricks, allowing secure management of sensitive information like API keys and credentials.

Network Integrations

Networking on Databricks for Google Cloud utilizes Google’s Virtual Private Cloud (VPC), which enables users to define IP ranges, subnets, and private connections. Databricks can integrate with Google Cloud Private Service Connect to establish private connections to Google services, minimizing public internet exposure and enhancing data security. For hybrid setups:

- Interconnect and VPN: Connect on-premises environments with Databricks on GCP through Google Cloud’s Interconnect and VPN services, which provide dedicated and encrypted connections, respectively.

- Secure Cluster Connectivity: Known as “No Public IP,” this configuration restricts cluster access to VPCs, enhancing network security by preventing the use of public IPs.

AI & Machine Learning

Databricks on Google Cloud integrates deeply with Google Cloud’s AI and ML capabilities. The Google Cloud AI Platform complements Databricks by providing tools for model building, training, and deployment. Managed MLflow on Databricks enables tracking, model versioning, and deployment management, supporting both batch and streaming inference. Databricks users can also leverage popular ML libraries, including TensorFlow, PyTorch, and scikit-learn, to build scalable AI solutions within GCP’s infrastructure.

GCP Databricks Pricing Overview

1) Databricks Workflows & Streaming — Starting at $0.15 per DBU (US East region)

➤ Classic Jobs/Classic Jobs Photon Clusters

- Premium plan: $0.15 per DBU

➤ Serverless (Preview):

- Premium plan: $0.35 per DBU

2) Delta Live Tables — Starting at $0.20 per DBU

Premium Plan (Only plan available):

- DLT Classic Core: $0.20 per DBU

- DLT Classic Pro: $0.25 per DBU

- DLT Classic Advanced: $0.36 per DBU

3) Databricks Warehousing — Starting at $0.22 per DBU (US Virginia)

- Premium plan (Only plan available):

- SQL Classic: $0.22 per DBU

- SQL Pro: $0.55 per DBU

- SQL Serverless: $0.70 per DBU (includes cloud instance cost)

4) Interactive workloads (Data Science & ML Pricing) — Starting at $0.55 per DBU

- Premium Plan (Only plan available):

- Classic All-Purpose/Classic All-Purpose Photon clusters: $0.55 per DBU

5) Databricks Generative AI — Starting at $0.07 per DBU

a) Mosaic AI Gateway

- N/A

b) Mosaic AI Model Serving (US Virginia)

- Premium Plan (Only plan available):

- CPU Model Serving: $0.07 per DBU, includes cloud instance cost

- GPU Model Serving: $0.07 per DBU, includes cloud instance cost

c) Mosaic AI Foundation Model Serving

See Mosaic AI Foundation Model Serving pricing breakdown.

d) Shutterstock ImageAI

- N/A

e) Mosaic AI Vector Search

- N/A

f) Mosaic AI Agent Evaluation

- N/A

g) Mosaic AI Model Training - Fine-tuning

- N/A

h) Mosaic AI Model Training - Pre-training

- Premium Plan (Only plan available):

- On-Demand Training: $0.65 per DBU

- Training Reservation: $0.55 per DBU

- Training Hero Reservation: $0.75 per DBU

See Mosaic AI Model Training - Pre-training.
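All three options are priced per DBU, so comparing them for the same workload takes a single multiplication. The sketch below assumes a hypothetical run that consumes 10,000 DBUs under every option. It does not model actual DBU consumption or reservation terms.

```python
# Pre-training rates from the list above (USD per DBU).
RATES = {
    "on_demand": 0.65,
    "reservation": 0.55,
    "hero_reservation": 0.75,
}


def training_cost(dbus: float) -> dict:
    """Cost of the same DBU consumption under each pricing option."""
    return {option: dbus * rate for option, rate in RATES.items()}


# Hypothetical training run consuming 10,000 DBUs.
costs = training_cost(10_000)
for option, usd in costs.items():
    print(f"{option:>16}: ${usd:,.2f}")
savings = costs["on_demand"] - costs["reservation"]
print(f"Reservation saves ${savings:,.2f} vs on-demand")
```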

i) Online Tables (Preview)

- Premium Plan (Only plan available):

- Online Tables Capacity Unit: $0.90 per unit per hour (50% promotional discount until Jan 31, 2025)

- Online Tables Storage: $0.390 per GB-month

See Online Tables (Preview).


Conclusion

Databricks is the real deal: a rock-solid, cloud-agnostic data and AI platform that helps data pros work together and streamline their workflows across AWS, Azure, and Google Cloud. Databricks' powerful Spark engine, a single workspace, and seamless connectivity with cloud services enable customers to easily tackle difficult data chores such as ETL, real-time analytics, and machine learning. When choosing a cloud platform for Databricks, think about what you already have in place, what you need to integrate, and what your budget is. Every cloud solution has its perks, so match the platform's strengths with your business needs to get the best bang for your buck with Databricks.

In this article, we have covered:

- Why Databricks is a leading choice for data processing, analytics, and AI

- Core Databricks architecture overview

- Comparison of Databricks on AWS, Azure, and GCP

- Databricks on AWS

- Databricks on Azure

- Databricks on GCP

- Quick summary—Databricks on AWS vs Azure vs GCP

… and much more!

FAQs

Who are the major cloud providers?

AWS, Microsoft Azure, and Google Cloud Platform lead the market in cloud services.

Which is better: GCP, AWS, or Azure?

Each platform has its strengths:

- AWS: Dominates in market share and service variety, offering extensive tools for various applications.

- Azure: Excels in enterprise integration, especially for organizations already using Microsoft products.

- GCP: Known for its advanced data analytics and machine learning capabilities, leveraging Google's expertise in AI.

Is Databricks better on Azure or AWS?

The better choice depends on your requirements:

- Azure Databricks provides seamless integration with Microsoft services like Azure Active Directory (now Microsoft Entra ID) and Power BI.

- AWS Databricks offers broader third-party integrations and access to a wider variety of AWS services.

What's the key difference between Databricks' Control and Compute Planes?

The Control Plane manages backend services such as user management and job scheduling, while the Compute Plane handles data processing tasks (either Classic or Serverless).

How does Databricks on AWS integrate with AWS services?

Databricks on AWS utilizes several AWS services:

- Amazon S3 for storage.

- AWS Glue for ETL processes.

- Amazon Redshift for data warehousing.

- Amazon SageMaker for hosting machine learning models.

What are the key security features of Databricks on AWS?

It uses IAM (Identity and Access Management) for access control, AWS KMS (Key Management Service) for encryption, and AWS PrivateLink for secure connectivity to other AWS services without exposing traffic to the public internet.

How does Databricks on Azure integrate with Azure data services?

It connects to Azure Data Lake Storage (ADLS), Azure Synapse Analytics, and Azure Cognitive Services.

What are the networking options in Databricks on Azure?

Users can choose between:

- Databricks-Managed VNet, which simplifies setup but offers less control.

- Customer-Managed VNet, allowing for more customization and control over network configurations.

What GCP integrations does Databricks on GCP have?

It integrates with GCS, BigQuery, and AI Platform.

How does Databricks enforce security on GCP?

It uses IAM for user permissions and VPC Service Controls to define a security perimeter around resources to mitigate data exfiltration risks.

What performance benefits does the Kubernetes runtime provide for Databricks on GCP?

The Kubernetes runtime enables:

- Efficient resource utilization through container orchestration.

- Scalability to handle variable workloads dynamically, improving performance during peak usage times.

How does Databricks support Delta Lake across cloud platforms?

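Delta Lake is available on every supported cloud and is the default table format in Databricks. It provides ACID transactions, scalable metadata handling, and time travel on top of each provider's object storage (Amazon S3, Azure Data Lake Storage, or Google Cloud Storage).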

What are the Classic and Serverless Compute options in Databricks?

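Classic compute runs in your own cloud account, and you configure and manage the clusters. Serverless compute runs in a compute plane inside Databricks' account and is fully managed by Databricks, including the underlying instances.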

How does PrivateLink enhance security in Databricks on AWS?

PrivateLink allows users to establish private endpoints within their VPCs, ensuring that traffic between their Databricks workspace and other AWS services remains secure and isolated from the public internet.

What Azure services can be integrated into data pipelines using Databricks?

Databricks can connect with several Azure services including:

- Azure Data Factory for orchestrating data workflows.

- Azure Event Hubs for real-time event processing.

- Azure Purview for data governance and cataloging.

How does Databricks leverage GCP's private network infrastructure?

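Databricks on GCP routes traffic through Google's private backbone network. Clusters run inside your VPC, and Private Service Connect can establish private connections that keep traffic off the public internet.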

How can Graviton instances optimize costs when using Databricks on AWS?

Graviton instances are based on ARM architecture, providing better price-performance ratios compared to traditional x86 instances, allowing users to run workloads at a lower cost while maintaining high performance levels.

What key security features does Azure provide that benefit Databricks users?

Azure enhances security through features such as:

- Integration with Azure Active Directory for identity management.

- Use of Azure Key Vault for managing secrets and encryption keys securely.

- Monitoring capabilities via Azure Monitor, which provides insights into resource usage and performance metrics.

How does Databricks integrate ML on GCP?

Databricks integrates with GCP's ML ecosystem by connecting to:

- AI Platform, enabling model training and deployment.

- Popular frameworks and libraries, facilitating end-to-end machine learning workflows within the platform.

What networking/security isolation does Databricks provide?

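Databricks supports network isolation through VPCs (AWS and GCP) or VNets with Network Security Groups (Azure). It also supports private endpoints such as AWS PrivateLink, Azure Private Link, and GCP Private Service Connect.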

How does Databricks support GPU computing on Azure?

Databricks supports GPU-enabled compute on Azure through GPU VM families such as the NC-series (for example, NCasT4_v3 and NC A100 v4). These are suited to compute-intensive workloads like deep learning and provide significant acceleration over CPU-based processing.

Who is Databricks' biggest competitor?

Databricks faces significant competition from several platforms, with Snowflake, Amazon Redshift, and Google BigQuery as its primary rivals in the data analytics space. Other notable competitors include Microsoft Azure Synapse Analytics and AWS EMR, each offering capabilities that challenge Databricks' market position.

Is BigQuery similar to Databricks?

No, BigQuery and Databricks serve distinct purposes:

- BigQuery: A fully managed, serverless data warehouse optimized for large-scale data analysis and SQL-based queries, excelling at handling massive datasets without infrastructure management.

- Databricks: A unified analytics platform built on Apache Spark, tailored for collaborative data science and machine learning, with real-time data processing and advanced analytics capabilities.

What role do Delta Live Tables play in managing data quality within Databricks?

Delta Live Tables automate the process of building reliable data pipelines by enforcing data quality constraints during ingestion, enabling users to monitor data quality dynamically while providing rollback capabilities through time travel features.
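In a real pipeline, expectations are declared with decorators such as `@dlt.expect`, `@dlt.expect_or_drop`, and `@dlt.expect_or_fail`. These respectively keep invalid rows while recording the violation, drop them, or fail the update. To show those three behaviors without a Databricks workspace, the sketch below simulates them in plain Python over a list of dictionaries. `apply_expectation` and `ExpectationFailed` are hypothetical names and are not part of the `dlt` module.

```python
from typing import Callable, Dict, List, Tuple

Row = Dict[str, object]


class ExpectationFailed(Exception):
    """Raised when a 'fail' expectation sees an invalid row."""


def apply_expectation(
    rows: List[Row],
    name: str,
    check: Callable[[Row], bool],
    action: str = "warn",
) -> Tuple[List[Row], int]:
    """Simulate a DLT expectation.

    'warn' keeps invalid rows, 'drop' removes them, and 'fail' raises on the
    first invalid row. Returns the surviving rows and the violation count.
    """
    if action not in {"warn", "drop", "fail"}:
        raise ValueError(f"unknown action: {action!r}")
    kept: List[Row] = []
    violations = 0
    for row in rows:
        if check(row):
            kept.append(row)
            continue
        violations += 1
        if action == "fail":
            raise ExpectationFailed(f"expectation {name!r} violated by {row}")
        if action == "warn":
            kept.append(row)  # keep the row, but the violation is counted
        # action == "drop": the invalid row is not kept
    return kept, violations


# Hypothetical input: one order is missing its id.
orders = [{"id": 1, "amount": 20.0}, {"id": None, "amount": 5.0}, {"id": 3, "amount": 7.5}]


def has_id(row: Row) -> bool:
    return row["id"] is not None


for action in ("warn", "drop"):
    kept, bad = apply_expectation(orders, "valid_id", has_id, action)
    print(action, len(kept), bad)

try:
    apply_expectation(orders, "valid_id", has_id, "fail")
except ExpectationFailed as err:
    print("update stopped:", err)
```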