Data science and machine learning (ML) are revolutionizing how companies use data to gain productivity and practical insights. Everyone is rushing to create and implement machine learning models due to the recent hype surrounding AI. This accelerated model development pace means we need solid data management more than before. Similar to how DevOps transformed software development by combining development and operations, MLOps (Machine Learning Operations) fills that gap. MLOps covers the end-to-end management of ML models in production environments, including data analysis, preparation, feature engineering, model development, deployment, prediction serving, retraining, and continuous monitoring. A critical component of the MLOps ecosystem is the feature store, which manages “features” (the input data for ML models) with a focus on reliability, lineage, and versioning. It specifically addresses the needs of ML, making sure the right data is always available for both training models and making predictions.

In this article, we will cover everything you need to know about features, feature stores, and the Databricks Feature Store, exploring their key components, architecture, and implementation. On top of that, we will provide a step-by-step process for using the Databricks Feature Store in your machine-learning workflows.

What is a Feature (in Machine Learning)?

Machine learning (ML) models learn patterns from data to make predictions or decisions. Data for ML models is often structured in a tabular format, where rows represent individual data points (samples or instances) and columns represent their attributes or characteristics. A feature is one of these attributes, capturing a specific property or aspect of each data point.

Features serve as the input variables for ML models, and their quality directly influences the model's performance. They provide the information that the model uses to learn relationships and make accurate predictions or classifications.

Types of Features

Features can be broadly categorized into two main types: Numerical features and Categorical features.

1) Numerical Features

These represent quantitative information and can be further divided into continuous features (e.g., age, temperature, price) and discrete features (e.g., number of rooms, count of clicks). Continuous features can take any value within a range, while discrete features have specific, separate values.

2) Categorical Features

These represent qualitative information and define groups or categories (e.g., gender, country, product category). They can be nominal, where categories have no inherent order (e.g., colors), or ordinal, where categories have a natural order (e.g., education level).

Features are typically derived from raw data through feature engineering (a process we will detail later), which involves transforming, combining, and encoding raw data into a format suitable for model consumption. It is crucial to distinguish features from raw data. Raw data, in its unprocessed state, may not be directly interpretable or optimally usable by ML models. Feature engineering aims to extract meaningful representations from the raw data, enhancing the model's ability to learn and generalize.

Importance of Features

Features play a crucial role in ML, and their quality directly impacts model performance. Here are some key aspects highlighting their importance:

- Predictive Power: High-quality features capture the relevant information and patterns in the data, enabling the model to make more accurate predictions.

- Dimensionality Reduction: Effective feature engineering can reduce the number of features, simplifying the model, preventing overfitting, and improving computational efficiency.

- Interpretability: Well-engineered features can make the model's decision-making process more transparent and understandable, providing insights into the factors influencing the predictions.

TL;DR: Features are the foundation of any machine learning model and their careful selection and engineering are essential for developing effective and reliable models.

Note: The same feature can be used by multiple teams to create multiple models.

Now that we have a solid understanding of features, let's explore the concept of a Feature Store and its role in managing the feature lifecycle.

Save up to 50% on your Databricks spend in a few minutes!

What Is a Feature Store?

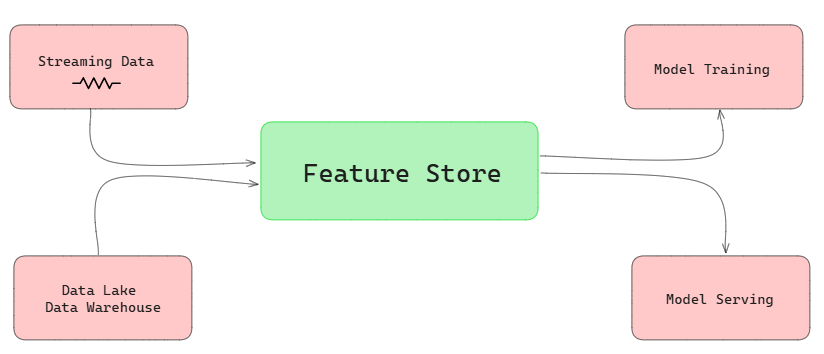

A Feature Store is a centralized repository designed to manage the complete lifecycle of ML features, from raw data ingestion and transformation to feature serving. It acts as a vital component within the ML ecosystem, enabling data scientists and engineers to efficiently store, share, discover, and reuse curated features for ML models. It seamlessly integrates with various data sources, facilitating the transformation of raw data into feature values suitable for model training and inference pipelines.

Feature Store Overview

Feature stores play a crucial role in ML production environments, providing a reliable and efficient way to manage features throughout the ML workflow. They support both the research and development phases of ML projects, acting as organized repositories that store and manage the essential ingredients (features) required to build accurate and robust models.

What Are Some Key Features of the Feature Store?

Feature stores provide essential capabilities for managing and serving features to machine learning models efficiently. Below are some of their core functionalities:

1) Collaborative Feature Sharing, Discovery, and Reuse. Enables data science teams to share and reuse features across an organization.

2) Automated Data Prep. Sets up reliable, automated pipelines for handling large amounts of data, ensuring readiness for model training and inference without manual intervention.

3) Integration with Data Sources and Sinks. Integrates various data sources—like data lakes, warehouses, and real-time streams—into one place, simplifying data management.

4) Real-time Serving and Online Feature Store. Provides the necessary infrastructure to supply features to production models in real-time, enhancing responsiveness and accuracy.

5) Temporal Feature Retrieval and Point-in-Time Correctness. Allows users to perform temporal queries on features, retrieving historical data from specific time windows—crucial for training and evaluating time-sensitive models

6) Flexible Predictions. Supports a wide range of use cases, whether predictions are made in batches or in real-time.

Types of Feature Stores

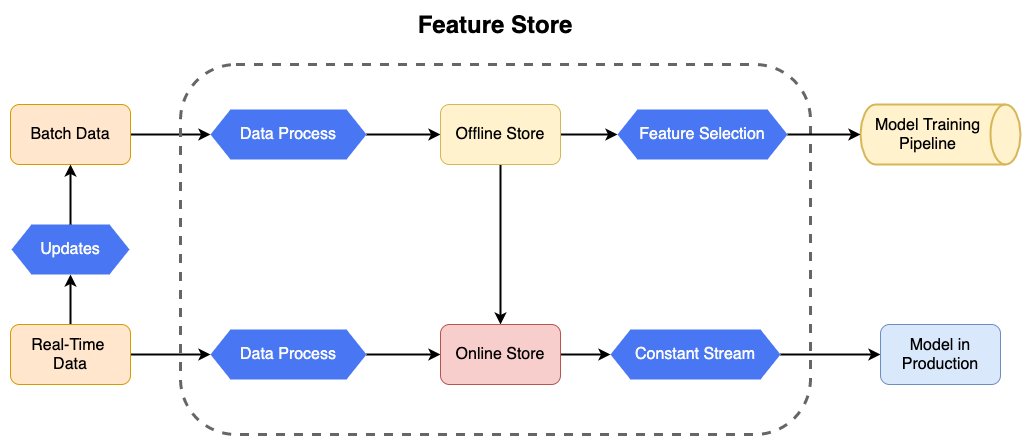

Feature stores typically handle two primary types of data—batch data and real-time data—and utilize two corresponding types of stores: Offline stores and Online stores.

Batch Data: Derived from data lakes or data warehouses, batch data consists of large, static datasets not updated in real time.

Real-time Data: Generated from streaming and log events, real-time data is continuously updated and immediately fed into the Feature Store.

To manage these data types effectively, feature stores uses:

1) Offline Stores (Cold Storage)

Offline Stores contain preprocessed features from batch data, creating a historical feature repository used in model training pipelines. They are often implemented using data lakes or distributed file systems for cost-effective storage and efficient batch processing.

2) Online Stores (Hot Storage)

Online Stores combine precomputed features from offline stores with real-time feature values, providing a unified and up-to-date feature set for models in production. They are designed for low-latency access and are typically implemented using NoSQL databases like Cassandra or Redis, or in-memory data stores like Memcached, to ensure rapid feature retrieval for real-time serving.

Architecture Overview of Feature Store

Feature Stores are designed to support a range of data ingestion, processing, and storage requirements, ensuring they can handle both batch and real-time data sources effectively.

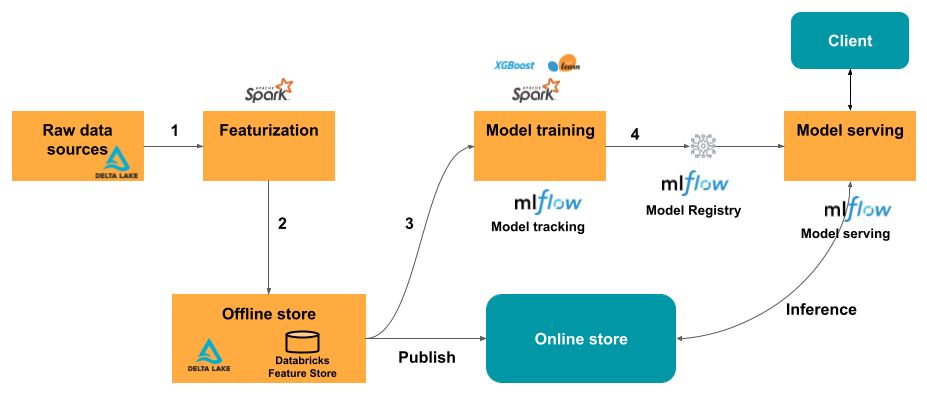

The diagram below outlines a typical architecture for a Feature Store, illustrating the flow of data from various sources through different stages to support both model training and real-time production environments.

1) Data Sources

a) Batch Data:

- Source: Data lakes, data warehouses, and historical databases.

- Characteristics: Large volumes of structured or unstructured data that are not frequently updated.

b) Real-Time Data:

- Source: Streaming data sources, such as logs or real-time events.

- Characteristics: Continuously generated data that reflects current events or transactions.

c) Updates:

This process involves synchronizing and integrating updates between batch and real-time data sources to maintain consistency and ensure up-to-date information across the Feature Store.

2) Data Processing

a) Ingestion: Raw data from batch and real-time sources is ingested into the Feature Store.

b) Processing: The ingested data undergoes preprocessing, cleaning, transformation, and feature engineering to convert it into a standardized format suitable for ML model consumption. This may involve tasks like data normalization, scaling, encoding, and feature generation. The processed data is then fed into the respective stores (Offline and Online).

2) Feature Stores

a) Offline Store: A repository for storing precomputed feature values derived from batch data. It typically uses scalable storage systems like cloud object storage (e.g.,S3, ADLS, or GCS) or distributed file systems (e.g., DBFS, HDFS) for scalability and cost-efficiency.

b) Online Store: This repository combines features from the Offline Store with real-time feature values, providing a unified and up-to-date feature set for online model serving and low-latency applications. It is designed for high-speed access and is typically implemented using low-latency databases like Cassandra, Redis, DynamoDB, or in-memory stores like Memcached.

3) Integration with ML Pipelines

a) Feature Selection:

- Function: In the context of the Offline Store, feature selection involves choosing the most relevant features from the historical data to be used in model training.

- Output: These selected features are then fed into the model training pipeline.

b) Constant Stream:

- Function: In the context of the Online Store, this process ensures that the model in production continuously receives the most up-to-date features.

- Output: This enables real-time predictions and ensures the model operates with the latest available data.

4) Model Training and Production

a) Model Training Pipeline: The selected features from the Offline Store are used to train ML models, enabling them to learn patterns and relationships from historical data.

b) Model in Production: Trained models are deployed to production environments, where they leverage the real-time features (served by the Online Store) as well as Offline Store to make predictions on incoming data streams or requests.

Does Databricks Have a Feature Store?

Yes, Databricks provides a managed Feature Store as part of its platform, offering a unified solution for feature management, sharing, and serving. The Databricks Feature Store simplifies the ML workflow by seamlessly integrating with other Databricks components, such as Delta Lake, MLflow, and Spark Structured Streaming, enabling efficient feature engineering, model training, and deployment.

What Is Feature Engineering?

Feature engineering is a critical process in ML and data science that involves transforming raw data into meaningful features that improve the performance of ML models. These features are derived from raw data through various techniques and domain knowledge, enabling models to learn and generalize better from the data.

Feature engineering includes the following critical steps:

- Data Cleaning: Data cleaning is the process of removing or correcting flaws and inconsistencies in raw data, such as missing numbers, duplicates, and outliers.

- Data Transformation: Data transformation is the process of converting raw data into a format that machine learning algorithms can easily process. This covers normalization, standardization, and scaling.

- Feature Creation: Creating new features based on the existing ones, often using domain-specific knowledge. This can involve operations like combining features, creating interaction terms, and performing mathematical transformations.

- Feature Selection: Selecting the most relevant features for the model, which can improve model performance and reduce overfitting. Commonly utilized techniques include correlation analysis, feature importance from models, and dimensionality reduction approaches.

- Encoding Categorical Variables: Converting categorical variables into numerical values using techniques like one-hot encoding, label encoding, or target encoding.

Effective feature engineering requires a solid understanding of the data and problem domain, as well as an iterative approach to testing various features and transformations to get the optimal set for the model.

Feature Engineering in Databricks Unity Catalog

Databricks Unity Catalog is a unified governance solution for all data and AI assets within the Databricks platform. It provides centralized governance and fine-grained access controls, enabling organizations to manage and secure their data effectively.

If you enable Databricks Unity Catalog in your workspace, it will become your feature store (Databricks Runtime 13.3 LTS and later). Any Delta table or Delta Live Table in Unity Catalog with a primary key can be used as a feature table for model training or inference purposes. Let's break down how Databricks Unity Catalog can help with feature engineering:

1) Centralized Feature Storage

Databricks Unity Catalog allows you to store and organize all features in one place, making them easily accessible and reusable by everyone on your team. This centralization ensures consistency and efficiency across models and projects.

2) Fine-grained Access Control

Databricks Unity Catalog enables you to implement precise access controls, ensuring only authorized personnel can view or modify features. This protects your data and helps comply with data standards.

3) Versioning and Lineage

Databricks Unity Catalog records the history of your features, including modifications and their origins. This transparency simplifies tracking changes over time, ensuring you understand the evolution of your data.

4) Simplified Feature Discovery

Using the Databruicks Unity Catalog interface, you can easily search for and manage feature tables. Features are tagged and documented, facilitating their discovery and reuse across various projects and teams. You can explore feature tables through the Feature store UI, which provides metadata like ownership, publication status, and last modification date.

5) Point-in-Time Correctness and Time Series Support

Databricks Unity Catalog supports the creation of time series feature tables. You can define primary keys with the TIMESERIES clause, enabling point-in-time joins necessary for accurate feature engineering in time-sensitive applications.

6) Integration with Model Lifecycle

Features from Databricks Unity Catalog are seamlessly integrated into the model lifecycle. During model training, feature metadata is packaged with the model. When the model is used for batch scoring or online inference, it automatically retrieves the necessary features from the Feature Store, simplifying model deployment and updates

7) Automatic Lineage Tracking

MLflow models trained on Databricks capture the lineage to the features used, stored as a feature_spec.yaml artifact within the model. This automatic tracking simplifies the maintenance of feature-to-model mappings, reducing errors and ensuring seamless integration during model scoring and updates.

For more detailed info about feature engineering within Databricks Unity Catalog, See Unity Catalog Feature Engineering.

Now that we've covered the basics of what a feature, feature store, and feature engineering are, let's look at what exactly the Databricks Feature Store is

🧱 Databricks Feature Store 🧱

Databricks Feature Store is a centralized repository designed to manage machine learning features throughout the entire lifecycle of ML models. It ensures that features are consistent and easily accessible for both training and inference, promoting reuse and collaboration among users—mainly data scientists and engineers. This integration within the Databricks platform simplifies feature management and makes model development and deployment more efficient.

What is the Use of Feature Store in Databricks?

The Databricks Feature Store serves multiple purposes within the Databricks ecosystem:

1) Easy Feature Discovery

Feature Store UI in the Databricks workspace allows you to simply browse and search for existing features. This makes it easier for you to discover and reuse features, saving time and effort.

2) Feature Lineage

When you create a feature table in Databricks, it keeps track of where the data came from and how it's used. You can see which models, notebooks, jobs, and endpoints rely on each feature, providing transparency and traceability.

3) Seamless Integration with Model Scoring and Serving

Databricks Feature Store seamlessly connects with model training and deployment. When you train a model with features from the Feature Store, it includes all relevant feature information. During batch scoring or online inference, the model automatically retrieves the required features from the Feature Store. This means you won't have to worry about adding logic to look up or link features, which simplifies the deployment process.

4) Integration with other Databricks components

Databricks Feature Store seamlessly integrates with other components of the Databricks Lakehouse platform, including Delta Lake for efficient data storage and management, MLflow for tracking and managing machine learning experiments, and Databricks Workflows for orchestrating complex data and ML pipelines.

5) Accurate Time-Based Lookups

Databricks Feature Store supports use cases that require features to be accurate at specific points in time, such as time series and event-based data.

6) Eliminate online/offline skew

Databricks Feature Store includes robust security features, such as access control lists (ACLs), to manage permissions. Integration with MLflow ensures that features are stored alongside ML models, facilitating governance and reducing the risk of model drift between training and serving

7) Enhanced Security and Governance

Databricks Feature Store streamlines feature lookups during model inference by integrating feature information into the MLflow model. This guarantees that the same transformations are used during both training and inference, lowering the possibility of inconsistencies and errors.

8) Automated Lineage Tracking

Databricks Feature Store captures a complete lineage graph, detailing data sources, feature computations, and downstream consumers such as models and endpoints. This comprehensive tracking supports lineage-based discovery and governance, allowing data scientists and engineers to understand and manage feature dependencies effectively

What Is the Difference Between Databricks Feature Store and Unity Catalog?

While the Databricks Feature Store and Databricks Unity Catalog are closely related components within the Databricks ecosystem, they serve distinct purposes. Here's a comparison of their key differences:

| Databricks Feature Store | Databricks Unity Catalog |

| Databricks Feature Store is a centralized repository for managing and sharing machine learning features | Databricks Unity Catalog is a unified governance solution for data and AI assets across Databricks |

| Databricks Feature Store is used to create, store, and reuse features for ML model training and inference | Databricks Unity Catalog is used to manage access control, auditing, and lineage of data across all Databricks workspaces |

| Databricks Feature Store provides a consistent way to manage feature computation and ensures features are used consistently in both training and inference | Databricks Unity Catalog manages various data formats, SQL functions, and structured streaming workloads |

| Features can be browsed and searched through the Feature Store UI | In Databricks Unity Catalog data assets can be discovered using a search interface and tagging system |

| Databricks Feature Store tracks the lineage of features, including their source data and usage in models, notebooks, and endpoints | Databricks Unity Catalog provides detailed data lineage for tables and other assets, showing how data is transformed and used |

| Databricks Feature Store is integrated with Databricks ML workflows, automatically retrieves features during batch scoring and online inference | Databricks Unity Catalog supports ML workflows by managing data but does not directly handle feature management or retrieval for ML models |

| Databricks Feature Store makes sure features are secure and accessible to authorized users within the ML pipeline | Databricks Unity Catalog provides comprehensive governance, including fine-grained access control, auditing, and compliance across data assets |

| Databricks Feature Store stores feature tables, which can be used directly in ML workflows | Databricks Unity Catalog manages storage locations at the metastore, catalog, and schema levels, providing flexible storage management |

| Databricks Feature Store works with Databricks Runtime 13.2 ML and above | Databricks Unity Catalog becomes the feature store in Databricks Runtime 13.3 and above |

| Databricks Feature Store is specifically designed for machine learning applications | Databricks Unity Catalog is broadly used for data governance, including non-ML data management |

Architecture of Databricks Feature Store—Behind the Scenes Functionality

Databricks Feature Store is designed to manage features throughout the ML lifecycle, but its responsibilities extend beyond feature storage. It relies on various components within the Databricks ecosystem to handle different aspects of feature engineering and model development.

How Databricks Feature Store Work?

The basic workflow of Databricks Feature Store typically involves several key steps, streamlining the process from raw data to model deployment:

Step 1—Feature Creation

The process begins with writing code to transform raw data into features, which involves Data cleaning and preprocessing and Feature engineering.

Step 2—Storing Features in the Feature Store

Depending on your workspace setup, you can store the DataFrame containing the features in one of two places:

- Unity Catalog-enabled Workspaces: Here, the DataFrame is written as a feature table in the Unity Catalog.

- Non-Unity Catalog-enabled Workspaces: Here, the DataFrame is written as a feature table in the Workspace Feature Store.

Step 3—Model Training

When training a machine learning model using the Databricks Feature Store:

- The model collects features from the feature store to guarantee that the same feature definitions are applied consistently across models and experiments.

- The specifications of the features used for training are saved alongside the model, including metadata such as feature names, types, and transformations applied.

Step 4—Model Registration

Once the model is trained, it is registered in the Model Registry. The Model Registry serves as a centralized repository for managing and versioning models, providing capabilities such as:

- Tracking model versions and their metadata.

- Logging the lineage of the model, including the features used for training.

Step 5—Model Inference

For model inference:

When the model is deployed, it automatically joins the necessary features from the feature tables stored in the feature store, eliminating the need for manual feature retrieval and ensuring that the same feature definitions are used during both training and inference.

Now that you have understood the basic workflow of how Databricks Feature Store works, let's dive into its detailed architecture overview.

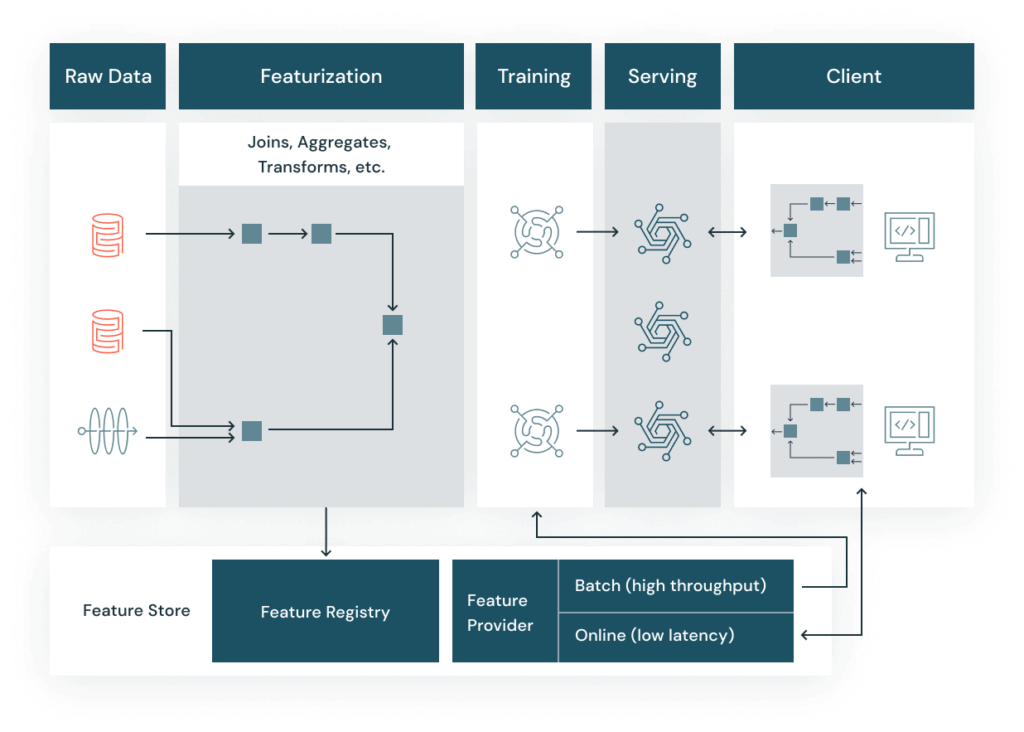

Detailed Architecture Overview of Databricks Feature Store

Databricks Feature Store's architecture can be broken down into the following key components:

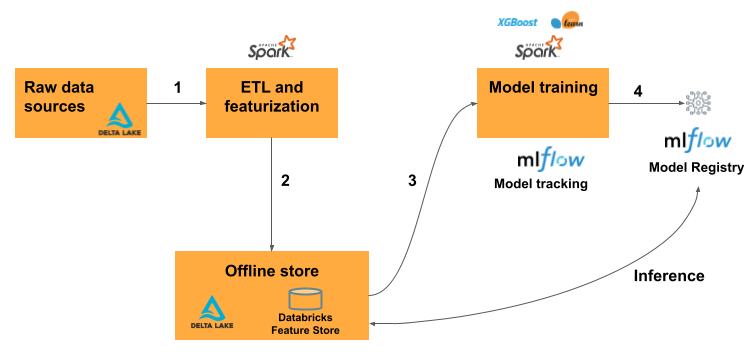

Databricks Feature Store Architecture with Offline Store

1) Raw Data Sources

The starting point of the feature engineering pipeline is the raw data stored in Delta Lake. These raw data sources can include structured, semi-structured, and unstructured data.

2) ETL and Featurization

The raw data undergoes ETL (Extract, Transform, Load) and featurization processes using Apache Spark. This step involves cleaning, transforming, and aggregating the data to create meaningful features that can be used by machine learning models.

3) Offline Store

The processed features are then stored in the Databricks Feature Store's offline store, which is backed by Delta Lake. The offline store serves as a historical repository of features, enabling users to access and use these features for model training.

4) Model Training

The features from the offline store are used to train machine learning models. Popular frameworks such as XGBoost and Scikit-learn can be used for model training. During this phase, MLflow is utilized for model tracking, which ensures that the experiments, parameters, and metrics are logged and traceable.

5) Model Registry and Inference:

Once trained, the models are registered in the MLflow Model Registry. This registry keeps track of different model versions, making it easier to manage and deploy models. For inference, the registered models can retrieve features from the offline store to make predictions.

Databricks Feature Store Architecture with Online Store

1) Raw Data Sources

Similar to the first architecture(offline), raw data is stored in Delta Lake and serves as the input for feature engineering.

2) Featurization

The ETL and feature engineering processes are carried out using Apache Spark, transforming raw data into features.

3) Offline Store

Features are stored in the offline store (Databricks Feature Store), which serves as a repository for training data and batch inference.

4) Model Training

Models are trained using features from the offline store, with MLflow tracking the training process. MLflow logs parameters, metrics, and artifacts to ensure reproducibility and traceability.

5) Online Store

For real-time applications, features are published from the offline store to an online store. The online store allows for fast, low-latency access to features, making it suitable for real-time inference.

6) Model Serving

The trained models are registered in the MLflow Model Registry. During model serving, the models retrieve features from the online store to perform real-time inference. This setup ensures that the models have access to the most up-to-date features for accurate predictions.

7) Client

The final predictions are served to the client application, which can be any end-user service or application that consumes the model's output.

Step-by-Step Guide to Use Databricks Feature Store

To help you get started with the Databricks Feature Store, here is a basic step-by-step guide that walks you through the process of setting up your environment, creating feature tables, training models, and performing batch scoring.

Here are the prerequisites and steps involved:

Prerequisite:

- Databricks Runtime 13.3 LTS or later: Ensure that you have a Databricks workspace running on Runtime 13.3 LTS or a later version, which supports the Databricks Feature Store out of the box.

- Unity Catalog (optional): If you plan to use Unity Catalog as your feature store, ensure that it is enabled in your Databricks workspace.

Step 1—Setting Up the Databricks Environment

To get started with Databricks, first, create an account by signing up at the Databricks website. Once you have an account, log in and create a workspace—this is where you'll manage all your Databricks resources.

See Databricks workspace guide.

After creating the workspace, navigate to the "Clusters" tab and spin up a new compute cluster. This cluster will provide the computational resources required for running your Databricks jobs and notebooks. When creating the cluster, choose an appropriate configuration based on your data processing needs, such as the number of workers, instance types, and memory allocation.

See Databricks cluster guide.

Make sure that the cluster is running before proceeding to the next step.

Finally, make sure you have the necessary libraries installed and imported (databricks-feature-store, pandas, scikit-learn, and mlflow) if they are not already available in your environment.

from databricks import feature_store

from databricks.feature_store import FeatureStoreClient

from sklearn.ensemble import RandomForestClassifier

import mlflow

import pandas as pdDatabricks Feature Store Example

Step 2—Loading and Preparing Data

Before you can create and store features, you'll need to load your raw data into a Spark DataFrame. Depending on your data source, you can use Spark SQL or the PySpark API to read the data from various formats like CSV, Parquet, or JSON. For example, if your data is in a CSV file, you can use the following code:

raw_data = spark.read.format("csv").option("header", "true").load("path/to/your/data.csv")Databricks Feature Store Example

Now, clean and transform your data as required for feature engineering. This might involve handling missing values, normalizing data, and converting data types. For example:

clean_data = raw_data.dropna().dropDuplicates()Databricks Feature Store Example

- dropna(): Removes any rows in the DataFrame that contain missing values (NaN). After applying this, only rows with no missing values in any column will be retained.

- dropDuplicates(): Removes duplicate rows from the DataFrame. After applying this method, only unique rows will be retained.

Next, prepare your data for feature engineering by transforming it as needed, for instance, converting data types or normalizing values.

from pyspark.sql.functions import col

transformed_data = cleaned_data.withColumn("normalized_column", col("column")/col("max_column"))Databricks Feature Store Example

It's crucial to make sure that your data is in the desired format and meets the quality standards before proceeding to the next step.

Step 3—Creating a Databricks Feature Table

After preparing your data, it's time to create a feature table in the Databricks Feature Store. This table will serve as a centralized repository for storing and managing your computed features.

First, initialize the Databricks Feature Store client, which provides an interface for interacting with the Feature Store.

from databricks.feature_store import FeatureStoreClient

fs = FeatureStoreClient()Databricks Feature Store Example

Next, define the features you want to store in the feature table. This typically involves selecting relevant columns from your transformed data and applying any necessary transformations or aggregations. For example, you might want to compute the average value of a feature column grouped by an entity ID.

from pyspark.sql.functions import avg

feature_df = transformed_data.groupBy("entity_id").agg(avg("feature_column").alias("average_feature"))Databricks Feature Store Example

Now that you have the feature DataFrame ready, you can create a new feature table in the Databricks Feature Store. This step requires specifying the table name, primary keys, schema, and an optional description.

fs.create_table(

name='feature_store_schema.feature_table_name',

primary_keys='entity_id',

schema=feature_df.schema,

description='Brief description of the Databricks feature store table'

)Databricks Feature Store Example

- name: defines the fully qualified name of the feature table, following the format <database_name>.<table_name>

- primary_keys: specifies the column(s) that uniquely identify each row in the table

- schema: allows you to define the table schema based on the feature DataFrame's schema

- description: provides a brief explanation of the table's purpose

Step 4—Storing Features

After creating the feature table, you can write the computed features to the table using the write_table method provided by the Feature Store client.

fs.write_table(

name='feature_store_schema.feature_table_name',

df=feature_df,

mode='overwrite'

)Databricks Feature Store Example

- name: specifies the fully qualified name of the feature table you created in the previous step

- df: is the feature DataFrame containing the computed features

- mode: determines how the data will be written to the table; 'overwrite' mode will overwrite any existing data in the table with the new feature data

Note: Databricks Feature Store supports different write modes, including 'overwrite', 'merge', and 'append'. The appropriate mode depends on your specific use case and whether you want to overwrite, merge, or append new data to the existing table.

Step 5—Loading Features for Model Training

Once you have stored your features in the Databricks Feature Store, you can easily load them for model training. The Feature Store provides a convenient way to combine your raw input data with the stored features, creating a comprehensive training set.

First, specify the features you want to use for training your model by defining FeatureLookup objects. Each FeatureLookup object represents a feature table and the lookup key (typically the primary key) used to join the raw data with the feature table.

from databricks.feature_store import FeatureLookup

feature_lookups = [

FeatureLookup(

table_name='feature_store_schema.feature_table_name',

lookup_key='entity_id'

)

]Databricks Feature Store Example

Now that you've defined FeatureLookup objects, you can build a training set by combining raw input data with features from the feature store. This can be achieved by using the create_training_set method on the Feature Store client.

training_set = fs.create_training_set(

raw_data,

feature_lookups,

label='label_column'

)

training_set_df = training_set.load_df()Databricks Feature Store Example

- raw_data: The Spark DataFrame containing the raw input data.

- feature_lookups: A list of FeatureLookup objects specifying the feature tables and lookup keys.

- label: The name of the column containing the target variable or label for supervised learning.

The load_df() method loads the training set as a Pandas DataFrame, which can be used directly for model training with popular machine learning libraries like scikit-learn.

Step 6—Model Training

After preparing the training set, you can train your machine learning model with your preferred library. In this example, we will use scikit-learn's Random Forest Classifier.

First, import the necessary model class from the scikit-learn library:

from sklearn.ensemble import RandomForestClassifierDatabricks Feature Store Example

Then, create an instance of the RandomForestClassifier:

model = RandomForestClassifier()Next, fit the model to the training data. Use the fit method on the training dataset, where training_set_df.drop('label_column') removes the label column from the features, and training_set_df['label_column'] provides the labels:

model.fit(training_set_df.drop('label_column'), training_set_df['label_column'])Step 7—Logging the Model with MLflow

After training your model, it's best practice to log and track it using a model management tool like MLflow. MLflow is an open-source platform for managing the end-to-end machine learning lifecycle, including experiment tracking, model packaging, and model deployment.

import mlflow

import mlflow.sklearn

with mlflow.start_run():

mlflow.sklearn.log_model(model, "model")Databricks Feature Store Example

In this example, we first import the required MLflow modules: mlflow for general MLflow functionality, and mlflow.sklearn for integrating with scikit-learn models.

Next, we invoke the mlflow.start_run() context manager to start a new MLflow run, which represents a single execution of your machine learning code. Within this context, we log the trained model using mlflow.sklearn.log_model(), specifying the model object and a name for the logged model artifact.

By using MLflow to log your model, you can track its performance metrics, parameters, and other metadata, allowing for model reproducibility and model versioning.

Step 8—Scoring Data with the Feature Store

After training and logging your model, you can use the Databricks Feature Store to score new data in batch mode. This process involves loading the trained model and using the Feature Store client to score the raw input data.

1) Load the Model: First, load the trained model from the MLflow model registry using the appropriate model URI.

model_uri = "models:/my_model/production"

model = mlflow.pyfunc.load_model(model_uri)Databricks Feature Store Example

2) Score Batch Data: Use the score_batch method provided by the Feature Store client to score the raw input data using the loaded model.

scored_df = fs.score_batch(

model_uri,

raw_data

)Databricks Feature Store Example

- model_uri: The URI or path to the trained model you want to use for scoring.

- raw_data: The Spark DataFrame containing the raw input data to be scored.

The method returns a Spark DataFrame (scored_df) containing the original input data along with the predicted scores or labels from the model.

Step 9—Maintaining and Updating Features

As your data evolves or feature definitions change, you'll need to update the features stored in the Databricks Feature Store. This process involves writing new or updated feature data to the existing feature table.

1) Update Features: If you have new data or modified feature definitions, compute the updated features and write them to the feature table using the write_table method with the 'merge' mode.

new_data = spark.read.csv('/path/to/new/data.csv', header=True, inferSchema=True)

updated_feature_df = new_data.groupBy("entity_id").agg(avg("new_feature_column").alias("average_new_feature"))

fs.write_table(

name='feature_store_schema.feature_table_name',

df=updated_feature_df,

mode='merge'

)Databricks Feature Store Example

'Merge' mode allows you to update existing rows in the feature table with the new feature data while preserving any existing rows that are not updated.

2) Backfill Historical Data: In some cases, you might need to backfill the feature store with historical data, especially when introducing new features or making significant changes to existing ones. This makes sure that your models can be retrained or scored consistently using the updated feature definitions.

historical_data = spark.read.csv('/path/to/historical/data.csv', header=True, inferSchema=True)

backfilled_feature_df = historical_data.groupBy("entity_id").agg(max("historical_feature_column").alias("max_historical_feature"))

fs.write_table(

name='feature_store_schema.feature_table_name',

df=backfilled_feature_df,

mode='overwrite'

)Databricks Feature Store Example

As you can see in this example, we read historical data, compute the new or updated features, and write them to the feature table using the 'overwrite' mode. This mode replaces the existing data in the table with the new feature data, effectively backfilling the feature store with the updated historical information.

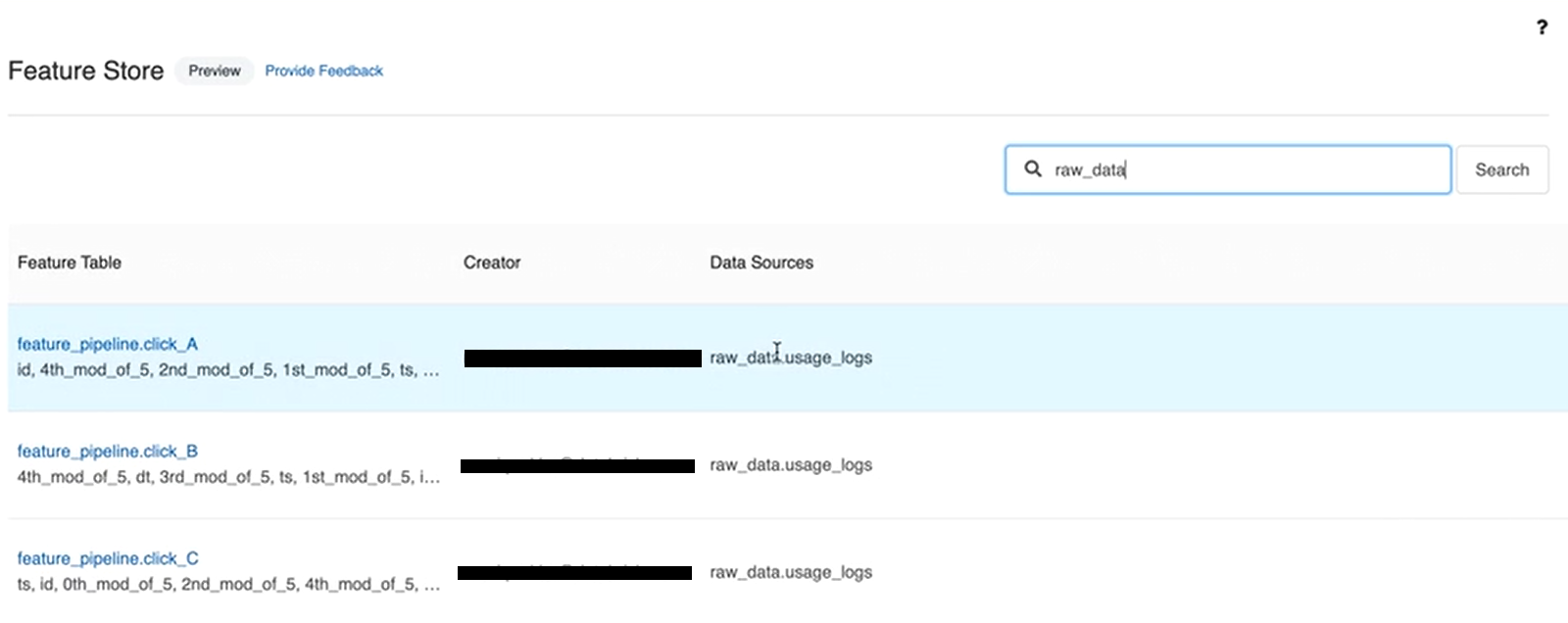

Step 10—Monitoring Feature Usage

As your machine learning pipeline grows and evolves, it becomes crucial to monitor the usage and performance of your features and models regularly. The Databricks Feature Store provides a user interface (UI) that allows you to monitor feature health and usage metrics.

To view the Feature Store UI, ensure you are in the Machine Learning persona (AWS, Azure, or GCP). Access the Feature Store UI by clicking the Feature Store icon on the left navigation bar.

Databricks Feature Store UI displays a list of all feature tables in the workspace. It provides detailed information about each table, including:

- Creator of the table

- Data sources

- Online stores

- Scheduled jobs that update the table

- Last update time of the table

If you regularly monitor these metrics, it helps maintain the health and performance of your machine learning pipeline.

That’s it! If you follow these steps carefully, you can effectively utilize the Databricks Feature Store.

Best Practices for Effective Databricks Feature Store Usage

To get the most out of the Databricks Feature Store, it's important to follow some best practices.

- Treat features as first-class citizens. Provide clear names, descriptions, and data types for all features.

- Consider reusability while designing features. Centralizing features in the Feature Store encourages data scientists and engineers to discover and reuse existing features rather than re-creating them.

- Automate the ETL and feature engineering processes that populate feature tables. Use Databricks Workflows or DLT to create pipelines that regularly update feature values.

- Point-in-time correctness should always be taken into consideration when feature engineering time-sensitive models.

- Regularly monitor the quality, freshness, and usage of features within the Feature Store.

- Create feature tables in Unity Catalog for workspaces with Unity Catalog enabled.

- Design feature tables and publishing strategies to cater to both offline (training, batch inference) and online (real-time inference) requirements.

- Use the on-demand feature computation capabilities for features that change frequently or are specific to an inference request (like real-time user interaction data). Avoid precomputing and storing features that are dynamic and better calculated at inference time.

What Is the Difference Between Feature Stores and Delta Tables?

While the Databricks Feature Store leverages Delta tables for storing feature data, there are some key differences:

| Databricks Feature Store | Delta Tables |

| Databricks Feature Store is a centralized repository for managing and serving machine learning features. | Delta Tables efficiently store and manage large-scale structured data. |

| Databricks Feature Store's primary objective is to centralize feature engineering and streamline model training and inference. | Delta Tables' primary objective is to provide ACID transactions and optimized query performance for structured data. |

| Databricks Feature Store tracks and manages versions of features for reproducibility and auditing. | Delta Tables track changes to data over time, enabling retrieval of historical snapshots. |

| Databricks Feature Store provides efficient feature serving for real-time inference and batch processing. | Delta Tables support both batch and streaming workloads in a single table. |

| Databricks Feature Store offers comprehensive metadata and documentation about features. | Delta Tables track schema changes but are not specifically focused on feature documentation. |

| Databricks Feature Store includes data quality checks to ensure reliable and accurate features. | Delta Tables ensure data integrity through ACID transactions. |

| Databricks Feature Store is designed to scale with growing data and ML requirements. | Delta Tables are optimized for high-performance analytics and querying on large datasets. |

| Databricks Feature Store is not primarily focused on schema evolution. | Delta Tables support schema evolution without breaking existing pipelines. |

| Databricks Feature Store use cases include model training, real-time inference, model auditing, and feature collaboration. | Delta Tables use cases include data warehousing, streaming data ingestion, analytics, reporting, and data archiving. |

| Databricks Feature Store can be used with Delta Tables for feature extraction. | Delta Tables can store raw data for feature extraction to populate Feature Store. |

Want to take Chaos Genius for a spin?

It takes less than 5 minutes.

Conclusion

And that’s a wrap! Databricks Feature Store is a centralized repository designed to manage machine learning features throughout the entire lifecycle of ML models. It keeps your features consistent and easily accessible, whether you're training new models or making predictions with existing ones. This setup encourages teamwork among data scientists and engineers, making feature management easier and speeding up the whole process of developing and deploying machine learning models.

In this article, we have covered:

- What is a Feature (in Machine Learning)?

- What Is a Feature Store?

- What Is Feature Engineering?

- What Is Databricks Feature Store?

- What Is the Difference Between Databricks Feature Store and Unity Catalog?

- What Is the Use of Feature Store in Databricks?

- How Databricks Feature Store Works?

- Architecture of Databricks Feature Store

- Detailed Architecture of Databricks Feature Store

- Step-by-Step Guide to Use Databricks Feature Store

- Best Practices for Effective Databricks Feature Store Usage

- What Is the Difference Between Feature Stores and Delta Tables?

… and so much more!

References

- What are the features in machine learning?

- Advancing Spark - Databricks Feature Store

- Enable Production ML with Databricks Feature Store

- What is a feature store?

- The Comprehensive Guide to Feature Stores

- Feature Store Function in Databricks — What You Need to Know

- Feature Storing — MLOps Guide

FAQs

What is a feature in machine learning?

Features are the input variables used by machine learning models to make predictions or classifications.

What are the two main types of features?

The two main types of features are numerical features (continuous and discrete variables) and categorical features (nominal and ordinal variables representing categories or groups).

Why are features important in machine learning?

Features are crucial because they directly impact a model's predictive power, ability to reduce dimensionality, and interpretability. High-quality features significantly enhance a model's accuracy and generalization.

What is a feature store?

A feature store is a centralized repository or data storage layer where users can store, share, and discover curated features for machine learning (ML) models. It facilitates the processing and transformation of raw data into consumable features for model training and serving pipelines.

What are the core functionalities of a feature store?

The core functionalities of a feature store include collaborative feature sharing, automated data preparation, integration of various data sources, real-time feature availability, temporal feature retrieval, and flexible predictions (batch and real-time).

What are the two main types of feature stores?

The two main types of feature stores are offline stores (for batch data) and online stores (for real-time data).

Does Databricks Have a Feature Store?

Yes, Databricks offers a comprehensive Feature Store integrated within its platform.

What Is the Use of Feature Stores in Databricks?

Databricks Feature Store serves multiple purposes, including easy feature discovery, tracking feature lineage, seamless integration with model scoring and serving, integration with other Databricks components like Delta Lake and MLflow, and enabling accurate time-based lookups for time-series and event-based data.

What Is the Purpose of Feature Engineering?

The purpose of feature engineering is to transform raw data into a format suitable for machine learning algorithms to consume, enabling models to learn effectively and make accurate predictions. It involves extracting meaningful representations from raw data, improving model performance, reducing dimensionality, and enhancing interpretability.

What Is Feature Engineering in Spark?

Feature engineering in Apache Spark involves transforming raw data into meaningful features that improve the performance of machine learning models. It includes steps like data cleaning, data transformation, feature creation, feature selection, and encoding categorical variables.

What Is the Difference Between Databricks Feature Store and Unity Catalog?

Databricks Feature Store is specifically designed for managing and serving machine learning features, while Unity Catalog is a broader unified governance solution for data and AI assets across Databricks workspaces. Unity Catalog manages access control, auditing, and lineage of data, while the Feature Store focuses on feature engineering, versioning, and serving for ML models.

Can You Use Databricks Without a Unity Catalog?

Yes, you can use Databricks without the Unity Catalog.

What Is the Purpose of Unity Catalog in Databricks?

Unity Catalog provides a unified governance solution for all data and AI assets, including centralized governance, fine-grained access control, auditing, and lineage tracking.

What are the key components of the Databricks Feature Store architecture?

The key components of the Databricks Feature Store architecture include raw data sources, ETL and featurization processes, offline and online feature stores, model training and registry, and model serving.

Can you monitor feature usage in the Databricks Feature Store?

Yes, you can monitor feature usage in the Databricks Feature Store by accessing the Databricks Feature Store UI, which displays a list of all feature tables in the workspace, along with detailed information about each table, including creator, data sources, online stores, scheduled jobs, and last update time.

Can the Databricks Feature Store be used with Delta Tables?

Yes, the Databricks Feature Store can be used with Delta Tables for feature extraction, where Delta Tables store the raw data, and the Feature Store extracts and manages the features derived from this data.