Handling large volumes of data in Databricks depends on efficient, high-performance storage. DBFS (Databricks File System) is designed for this purpose. It's natively integrated into Databricks and acts as an abstraction layer on top of object storage services like AWS S3, Azure Blob Storage, Azure Data Lake Storage Gen2, or Google Cloud Storage (GCS). As data accumulates over time, you might need to clean up your storage by deleting outdated or unnecessary files or folders from DBFS to maintain performance and manage space. Deleting folders can be necessary for organizing data, removing test data, or freeing up resources for new datasets.

In this article, we provide a step-by-step guide to deleting a folder from DBFS. We explore four techniques for folder deletion: the Databricks CLI, Databricks Notebooks, the Databricks REST API, and the Databricks UI. Each technique has its own advantages, and we will walk through them one at a time.

What is Databricks File System (DBFS)?

DBFS (Databricks File System) is essentially a layer over your cloud storage associated with your Databricks Workspace, such as AWS S3, Azure Blob Storage, Azure Data Lake Storage Gen2, or Google Cloud Storage (GCS). It provides a file system interface and is designed to handle big data workloads efficiently.

Databricks DBFS consists of two key components:

- DBFS Root: DBFS Root is the top-level directory created when your Databricks Workspace is set up. All files and folders in DBFS are organized under this root.

- DBFS Mount: Mounting allows you to access external storage as if it were part of DBFS.

Check out this article to learn more in-depth about Databricks DBFS.

In our previous articles, we covered step-by-step guides on how to upload files to DBFS and download files from DBFS. Here, we will cover 4 different techniques to delete a folder in DBFS. Now, without any further ado, let's dive right into it!


Step-By-Step Guide to Delete Folder From DBFS (Databricks File System)

Let's start with a step-by-step guide on how to delete a folder from DBFS. We will explore 4 different techniques:

- Technique 1—Delete Folder from DBFS Using Databricks CLI

- Technique 2—Delete Folder from DBFS Using Databricks Notebook

- Technique 3—Delete Folder from DBFS Using Databricks REST API

- Technique 4—Delete Folder from DBFS Using Databricks UI

Prerequisites

First things first, before you start, make sure you meet these prerequisites:

- You need access to a Databricks Workspace.

- Install and configure the Databricks CLI (latest version).

- Have the necessary permissions to access folder paths in Databricks DBFS.

- Know the exact path of the folder you want to delete.

🔮 Technique 1—Delete Folder From DBFS Using Databricks CLI

The Databricks CLI is one of the most straightforward ways to delete a folder from DBFS. Follow these steps:

Step 1—Install Databricks CLI (if not already installed)

If you haven't installed the Databricks CLI yet, you can quickly set it up on your preferred operating system. Refer to the Databricks CLI installation guide for step-by-step instructions on Linux, macOS, and Windows.

For this article, we will be using Windows OS.

Step 2—Configure Databricks CLI

After installing the Databricks CLI, you need to authenticate it to connect to your Databricks Workspace. The most common method is using a Personal Access Token and setting up a configuration profile.

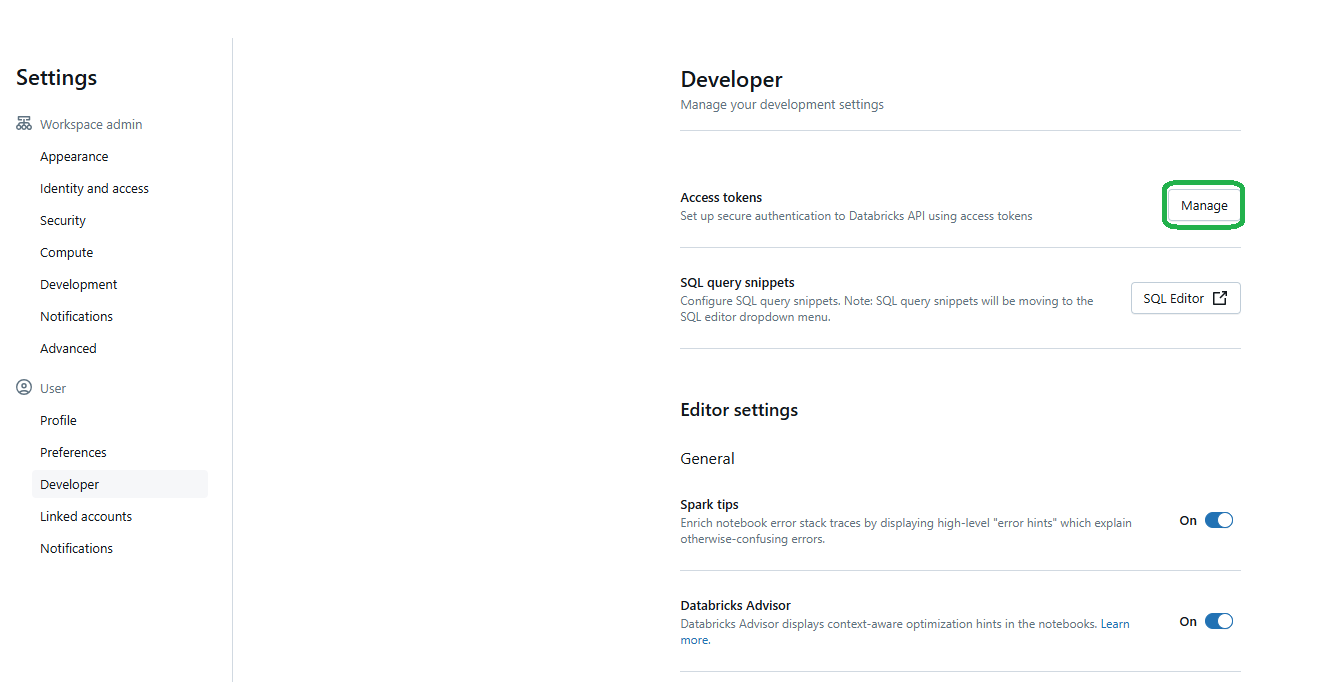

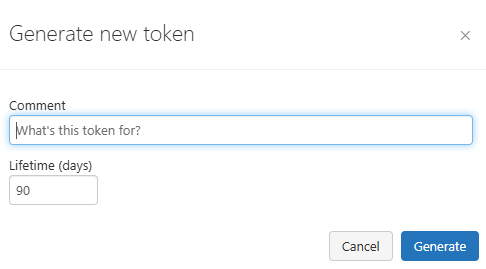

a) Generate a Personal Access Token

A Personal Access Token functions like a password for authenticating the CLI with your workspace. To generate one:

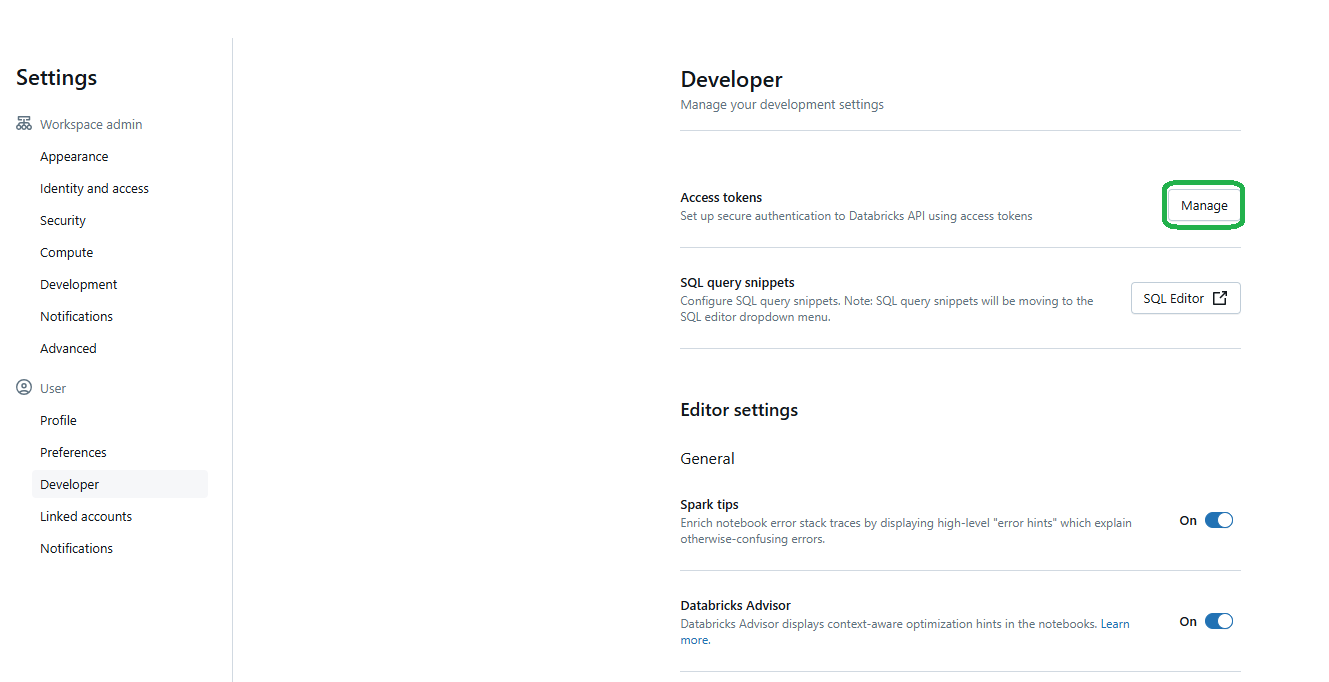

Open your Databricks Workspace and navigate to User Settings under your username.

In the Access Token section (under Developer Settings), create a new token.

Add a comment to describe its purpose, set an expiration date, and click Generate.

Copy the token and save it securely—it won’t be accessible later.

b) Configure a Profile with the Token

Now, configure the CLI to use the token for authentication. Open your terminal and run:

databricks configure

You’ll be prompted to enter your Databricks Workspace URL:

https://dbc-123456789.cloud.databricks.com

Then, paste the token you just created.

This creates a profile in your .databrickscfg file automatically.

Alternatively, you can set up the .databrickscfg file manually in your home directory. Use this format:

[DEFAULT]

host = https://xxxx.cloud.databricks.com

token = XXXXXXXXXXXXXXXXXXXX

[PROFILE_NAME]

host = https://yyyy.cloud.databricks.com

token = XXXXXXXXXXXXXXXXXXXX

You can also customize the file’s location by setting the DATABRICKS_CONFIG_FILE environment variable.

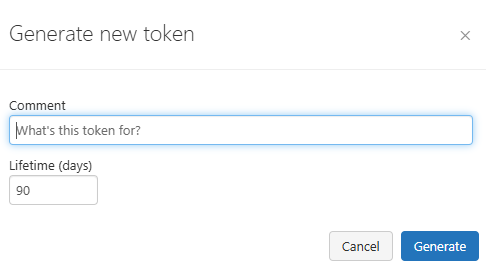
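If you later want to reuse the same profiles from a script, the file format above can be read with Python's standard library. The following is a minimal sketch, not part of the Databricks tooling: the function name `load_profile` is hypothetical, and it assumes the file follows the INI layout shown above with `host` and `token` keys.

```python
import configparser
import os
from pathlib import Path


def load_profile(profile="DEFAULT", config_path=None):
    """Return (host, token) for one profile in a .databrickscfg-style file."""
    # Resolution order: explicit argument, DATABRICKS_CONFIG_FILE, then ~/.databrickscfg
    path = Path(
        config_path
        or os.environ.get("DATABRICKS_CONFIG_FILE")
        or Path.home() / ".databrickscfg"
    )
    parser = configparser.ConfigParser()
    if not parser.read(path):
        raise FileNotFoundError(f"No config file found at {path}")
    # configparser treats [DEFAULT] specially, so has_section() is False for it
    if profile != "DEFAULT" and not parser.has_section(profile):
        raise KeyError(f"Profile {profile!r} not found in {path}")
    section = parser[profile]
    # Drop a trailing slash so the host can be joined with API paths later
    return section["host"].rstrip("/"), section["token"]
```

Calling `load_profile("PROFILE_NAME")` would return the host and token of that section. Note that `configparser` lets named sections fall back to `[DEFAULT]` values; this sketch does not check whether the CLI itself behaves the same way.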

c) Verify Authentication

To check if the configuration works, list your profiles:

databricks auth profiles

Or test authentication with a command like:

databricks clusters spark-versions

Once authenticated, the Databricks CLI is ready to interact with your Databricks Workspace.

Step 3—List Databricks DBFS Files and Folder From Databricks CLI

With the Databricks CLI installed and configured, you can now interact with files in Databricks DBFS. Start by listing the contents of the directory that holds your target folder. This helps you confirm the folder’s exact path and review what it contains before you delete it.

To list files in a specific Databricks DBFS directory, run:

databricks fs ls dbfs:/<folder-path>

Replace <folder-path> with the path in Databricks DBFS where the folder is located.

Step 4—Delete Folder using Databricks CLI

Now, to delete a folder and its contents in Databricks DBFS, use the following command:

databricks fs rm -r dbfs:/<folder-path>

Replace <folder-path> with the actual folder path in Databricks DBFS you wish to delete. The -r flag makes the operation recursive, deleting all contents within the folder.
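Because a recursive delete is permanent, it can help to wrap the command in a small script that refuses obviously dangerous input. Here is a minimal sketch in Python, assuming the Databricks CLI is on your PATH; the helper names `build_rm_command` and `delete_folder` are hypothetical, and the root-path guard is our own addition rather than CLI behavior.

```python
import subprocess


def build_rm_command(folder_path, profile=None):
    """Build the argv list for `databricks fs rm -r dbfs:/<folder-path>`."""
    path = folder_path.strip()
    if path.startswith("dbfs:"):
        path = path[len("dbfs:"):]
    path = path.strip("/")
    if not path:
        # An empty path would resolve to the DBFS root, so refuse it
        raise ValueError("Refusing to build a delete command for the DBFS root")
    command = ["databricks", "fs", "rm", "-r", f"dbfs:/{path}"]
    if profile:
        # Select a named profile from .databrickscfg
        command += ["--profile", profile]
    return command


def delete_folder(folder_path, profile=None):
    """Run the delete command; raises CalledProcessError on a non-zero exit."""
    subprocess.run(build_rm_command(folder_path, profile), check=True)
```

Building the argument list separately from running it makes the command easy to print and review before anything is deleted.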

Step 5—Verify Deleted Folder from DBFS

To confirm that the folder has been deleted, you can list the contents of the parent directory:

databricks fs ls dbfs:/

This command displays the remaining contents of the parent directory (here, the DBFS root), so you can verify that the target folder has been removed.

Now, let's proceed to the next technique, where we'll explore deleting a folder from DBFS using a Databricks Notebook.

🔮 Technique 2—Delete Folder From DBFS Using Databricks Notebook

This technique involves using Databricks Notebooks to interact with and delete folders from DBFS. It’s a more visual and interactive method compared to the Databricks CLI approach. Here's a step-by-step guide:

Step 1—Log in to Databricks Account

First start by logging into your Databricks account using your credentials. This should be straightforward if you've already set up your account.

Step 2—Navigate to Databricks Workspace

Once logged in, locate and open the Workspace section in the Databricks UI. This is where you manage your notebooks, clusters, and data.

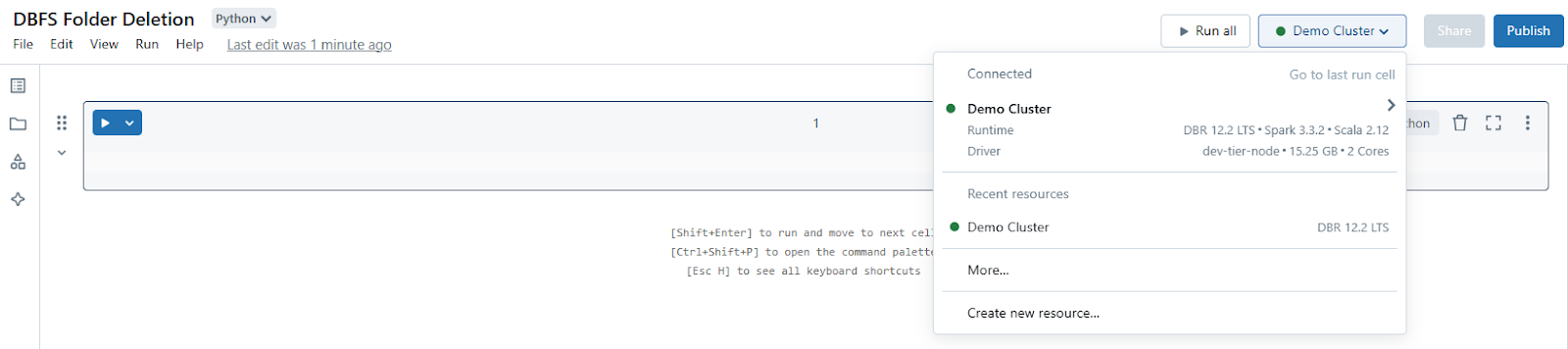

Step 3—Configure Databricks Compute Cluster

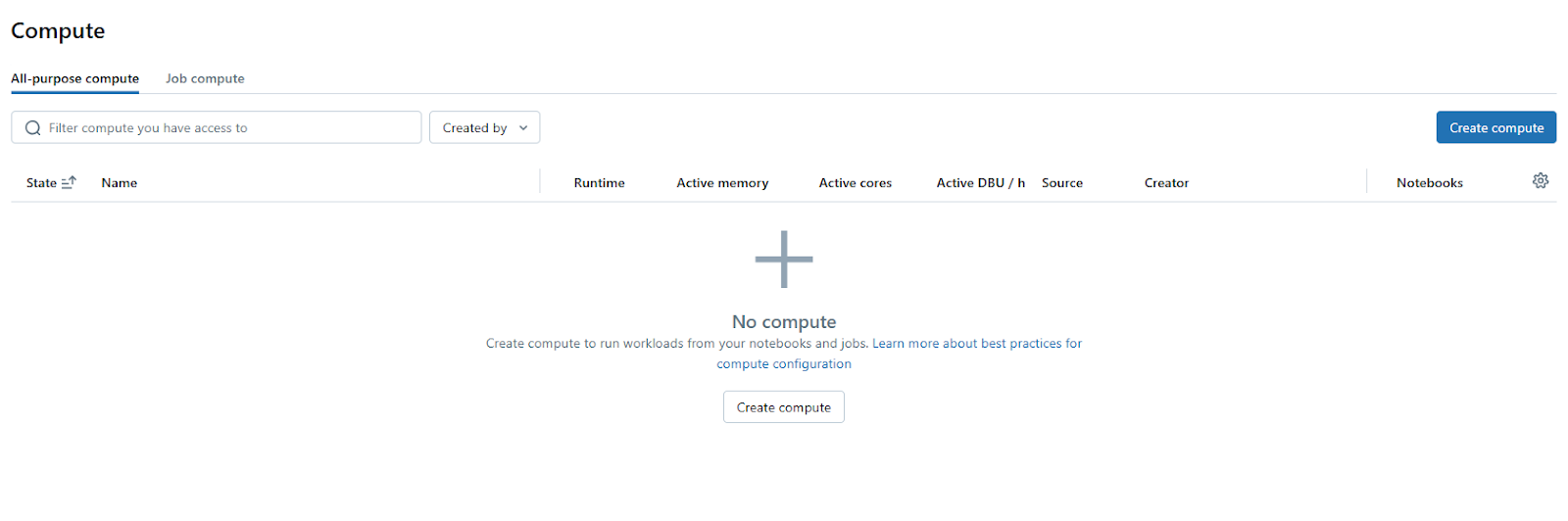

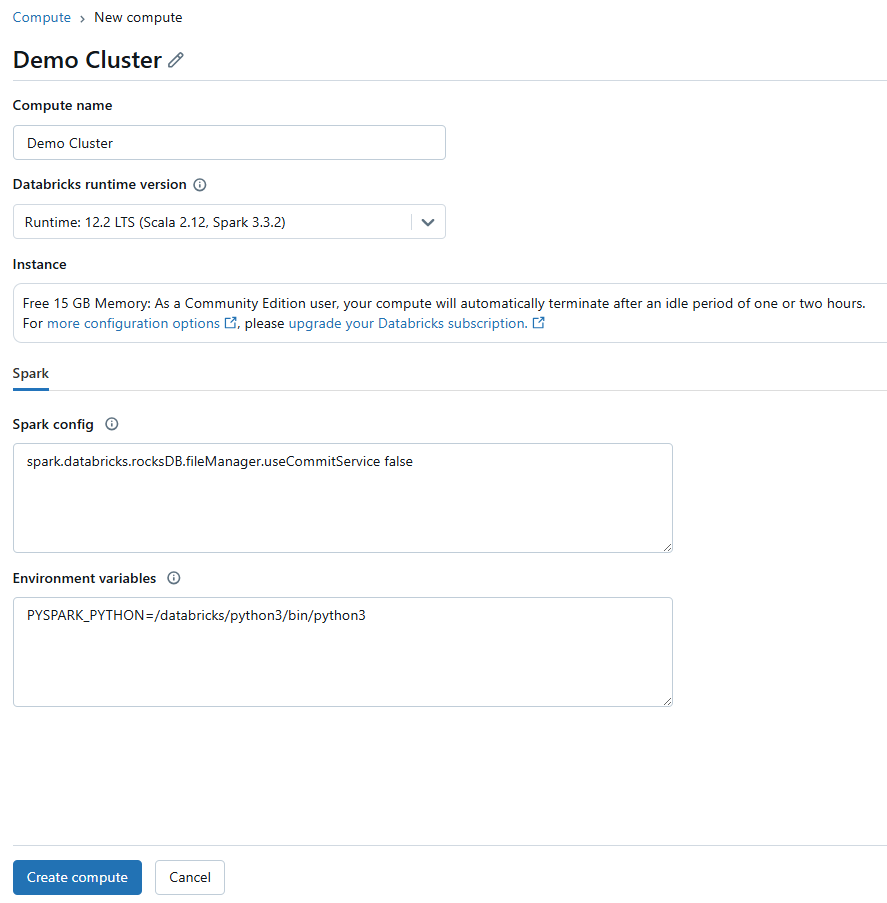

Before you can run any code or interact with Databricks DBFS in a notebook, you need an active Databricks compute cluster. To do so, go to the "Compute" section on the left sidebar. If you don't have a cluster or need a new one, click "Create Compute".

Then, configure the cluster settings according to your needs.

Start the cluster if it's not already running.

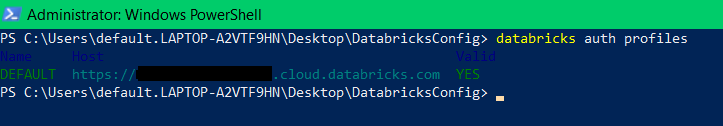

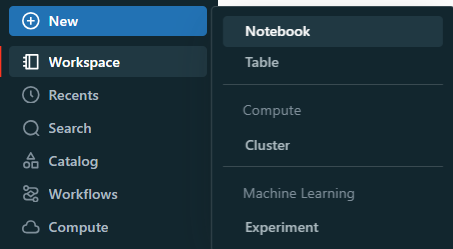

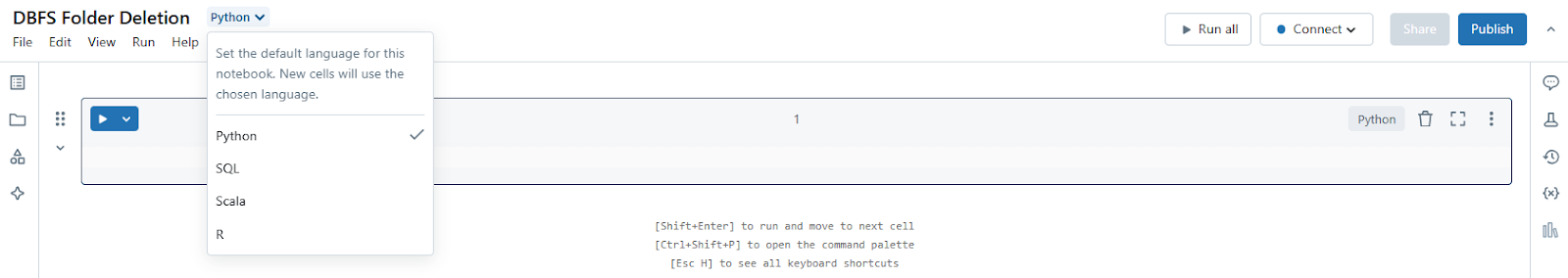

Step 4—Open a New Databricks Notebook

Head over to your workspace, click "+ New" then "Notebook".

Choose a language (Python, Scala, R, or SQL). For this example, we'll use Python. Give your notebook a name that reflects its purpose, like "DBFS Folder Deletion".

Step 5—Attach Databricks Notebook to Cluster

At the top of your newly created notebook, there's an option to "Connect" a cluster.

Select the cluster you configured or created in Step 3.

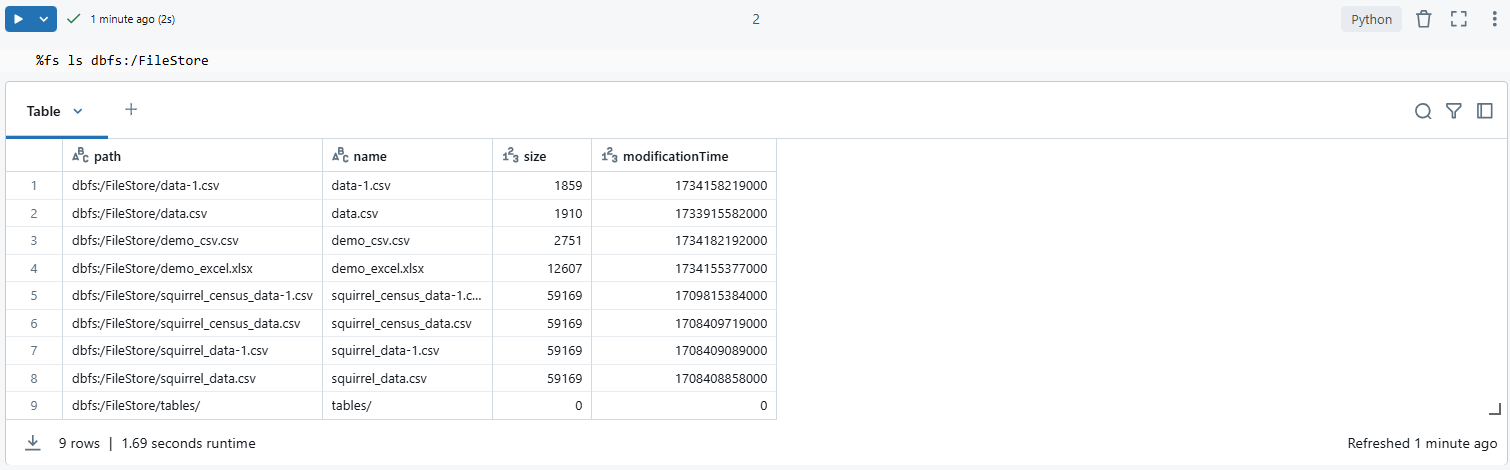

Step 6—List File and Folder From DBFS using %fs Magic Command

Databricks provides the %fs magic command for file system operations.

In a new cell, type:

%fs ls dbfs:/

This command lists all files and directories at the root of your Databricks DBFS. Replace dbfs:/ with a specific path to look at a different directory, for example:

%fs ls dbfs:/FileStore

Or use the equivalent dbutils call:

dbutils.fs.ls("dbfs:/<folder-path>")

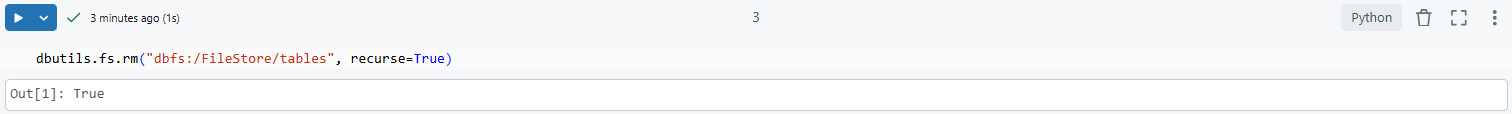

Step 7—Delete Folder Using dbutils command

To delete the folder, call the dbutils.fs.rm function in another cell:

dbutils.fs.rm("dbfs:/<folder-path>", recurse=True)

Replace dbfs:/<folder-path> with the actual path to your folder. recurse=True ensures that all contents within the folder are deleted as well. This is critical, since Databricks DBFS doesn't allow deleting non-empty directories without this flag.

You can use the same technique to delete a specific file from a folder. Simply specify the file path, including the filename, and execute the command. Here's an example:

dbutils.fs.rm("dbfs:/<folder-path>/filename.csv")

This command removes the specified file from the Databricks File System (DBFS).
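If you run deletions regularly, you may want to check that the folder exists before removing it. The sketch below shows one way to do that; the function name `delete_dbfs_folder` is hypothetical, and it takes the file system object as a parameter so that, in a notebook, you would pass `dbutils.fs`. It assumes that a failed listing means the path is missing or not accessible.

```python
def delete_dbfs_folder(fs, folder_path):
    """Delete folder_path recursively if it can be listed; return True if removed.

    `fs` is expected to behave like `dbutils.fs` (it needs ls and rm methods).
    """
    try:
        entries = fs.ls(folder_path)
    except Exception as error:
        # Assumption: a failed listing means the path is missing or inaccessible
        print(f"Skipping {folder_path}: {error}")
        return False
    print(f"Deleting {folder_path} ({len(entries)} top-level entries)")
    # recurse=True removes the folder together with everything inside it
    return bool(fs.rm(folder_path, recurse=True))
```

In a notebook cell, the call would look like `delete_dbfs_folder(dbutils.fs, "dbfs:/<folder-path>")`.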

Step 8—Verify Deleted Folder from DBFS

To confirm the folder has been deleted, list the parent directory again:

%fs ls dbfs:/<folder-path>

Here, <folder-path> should point to the parent directory. If the folder was successfully deleted, it will no longer appear in the output.

Deleting a folder from DBFS using a Databricks Notebook is super handy if you're already working in a Databricks Notebook environment or want to tie this task into a bigger workflow. This way, you get instant feedback in the Databricks Notebook interface, which is really helpful for troubleshooting or just confirming everything worked as planned.

Let's move on to the next technique: a programmatic way to delete a folder from DBFS using the Databricks REST API.

🔮 Technique 3—Delete Folder From DBFS Using Databricks REST API

Planning to use the Databricks REST API to delete folders? This technique might come in handy. With the Databricks REST API, you can programmatically manage DBFS operations, which is especially useful for automating workflows or integrating with other tools. Here's how you can go about it:

Step 1—Configure Databricks Cluster

Although this step is not strictly necessary for the API operations, having a configured cluster gives you a place to test or run additional operations if needed:

Log into your Databricks Workspace.

Navigate to "Compute" and check whether you have a running cluster or create one if you haven't yet. The cluster itself isn't directly used for these API calls, but it's good for context or further operations.

Step 2—Generate a Personal Access Token for API authentication

The process for this step is the same as the approach outlined in Technique 1, starting from Step 2 of that technique. Here’s a quick recap:

Navigate to "User Settings" in your Databricks Workspace by selecting your profile in the upper-right corner. Under the "Access Token" section, located within "Developer Settings", create a new token. Add a descriptive comment to indicate its purpose, set an expiration date that suits your needs, and then click Generate to create the token.

Step 3—Identify Full Folder Path in Databricks DBFS

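Before you start, know exactly which folder you want to delete. You can use the Databricks UI or the CLI and notebook methods covered earlier to find or confirm the path. Note that the CLI and notebook commands address paths with the dbfs:/ prefix, while the REST API requests in this section pass the absolute path starting with /, as in the examples that follow.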

Step 4—Use REST API to List Folder Contents

First, you'll want to list the contents of the folder to ensure you're targeting the correct path:

Use curl, Postman, Hoppscotch, or any other HTTP client to make an API request:

curl -X GET -H "Authorization: Bearer PERSONAL_ACCESS_TOKEN" \
  -H "Content-Type: application/json" \
  -d '{"path": "/<folder-path>"}' \
  https://<databricks-instance>/api/2.0/dbfs/list

Replace <folder-path> with the actual folder path, PERSONAL_ACCESS_TOKEN with the token you generated earlier, and <databricks-instance> with your Databricks Workspace URL.
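The same list call can be made from a script. Below is a minimal sketch using only Python's standard library; the function names are hypothetical, and it passes the path as a query parameter on the GET request rather than as a JSON body. It also assumes an empty folder may come back without a "files" key.

```python
import json
import urllib.parse
import urllib.request


def build_list_request(instance, token, folder_path):
    """Build a GET request for /api/2.0/dbfs/list with the path as a query parameter."""
    query = urllib.parse.urlencode({"path": folder_path})
    url = f"https://{instance}/api/2.0/dbfs/list?{query}"
    return urllib.request.Request(
        url, headers={"Authorization": f"Bearer {token}"}, method="GET"
    )


def list_folder(instance, token, folder_path):
    """Send the request and return the entries found under folder_path."""
    request = build_list_request(instance, token, folder_path)
    with urllib.request.urlopen(request) as response:
        payload = json.load(response)
    # Assumption: an empty folder may be returned without a "files" key
    return payload.get("files", [])
```

Each returned entry is a dictionary that includes the entry's `path` and an `is_dir` flag, which is useful when you need to tell files and subfolders apart.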

Step 5—Delete Files Inside the Folder First Using REST API

By default, the /api/2.0/dbfs/delete endpoint rejects a non-empty directory, so one option is to delete each file inside it first. (Alternatively, adding "recursive": true to the request body deletes the folder and its contents in a single call.) To delete the files one by one, iterate over each file in the folder and send a POST request to the /api/2.0/dbfs/delete endpoint:

curl -X POST -H "Authorization: Bearer PERSONAL_ACCESS_TOKEN" \
  -H "Content-Type: application/json" \
  -d '{"path": "/<folder-path>/filename.csv"}' \
  https://<databricks-instance>/api/2.0/dbfs/delete

Replace /<folder-path>/filename.csv with the full path of the file. Remember, you'll need to make a separate request for each file.
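The per-file iteration can be sketched as a small recursive walk. In this example the listing and deleting steps are passed in as functions, so the traversal logic stays independent of any HTTP client; `delete_contents`, `list_entries`, and `delete_path` are hypothetical names, and entries are assumed to be dictionaries with "path" and "is_dir" keys, as in the list response.

```python
def delete_contents(list_entries, delete_path, folder_path):
    """Delete everything under folder_path, deepest entries first.

    list_entries(path) -> list of dicts with "path" and "is_dir" keys
    delete_path(path)  -> issues one delete request for that path
    Returns the deleted paths in the order they were removed.
    """
    deleted = []
    for entry in list_entries(folder_path):
        if entry["is_dir"]:
            # Empty a subfolder before removing the subfolder itself
            deleted += delete_contents(list_entries, delete_path, entry["path"])
        delete_path(entry["path"])
        deleted.append(entry["path"])
    return deleted
```

The function leaves `folder_path` itself in place, so the final delete of the now-empty folder remains a separate, explicit step.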

Step 6—Delete the Empty Folder Using REST API

Once all files are deleted, remove the empty folder. Send a POST request to the /api/2.0/dbfs/delete endpoint with the folder path.

curl -X POST -H "Authorization: Bearer PERSONAL_ACCESS_TOKEN" \
  -H "Content-Type: application/json" \
  -d '{"path": "/<folder-path>"}' \
  https://<databricks-instance>/api/2.0/dbfs/delete
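For scripted use, the delete request can be built the same way in Python. This is a minimal sketch with a hypothetical function name; it includes the endpoint's `recursive` field, which defaults to false here to match the empty-folder delete above.

```python
import json
import urllib.request


def build_delete_request(instance, token, path, recursive=False):
    """Build a POST request for /api/2.0/dbfs/delete."""
    # With recursive=False the call only succeeds on a file or an empty folder
    body = json.dumps({"path": path, "recursive": recursive}).encode("utf-8")
    return urllib.request.Request(
        f"https://{instance}/api/2.0/dbfs/delete",
        data=body,
        headers={
            "Authorization": f"Bearer {token}",
            "Content-Type": "application/json",
        },
        method="POST",
    )
```

Passing the result to `urllib.request.urlopen` sends the request. Setting `recursive=True` asks the API to remove the folder together with its contents, so use it with the same care as `rm -r`.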

Step 7—Verify Deleted Folder from DBFS

Finally, to confirm the deletion, attempt to list the folder's contents again. If the folder has been successfully deleted, the list operation will return an error indicating that the path does not exist.

curl -X GET -H "Authorization: Bearer PERSONAL_ACCESS_TOKEN" \
  -H "Content-Type: application/json" \
  -d '{"path": "/<folder-path>"}' \
  https://<databricks-instance>/api/2.0/dbfs/list
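In a script, this check comes down to inspecting the error body of the list call. The sketch below uses a hypothetical helper, `folder_is_gone`, and assumes the error response is JSON with an `error_code` field set to `RESOURCE_DOES_NOT_EXIST` when the path is missing; any other failure is treated as inconclusive.

```python
import json


def folder_is_gone(status_code, response_body):
    """Return True only when a list response says the path does not exist."""
    if status_code == 200:
        # A successful listing means the folder is still there
        return False
    try:
        error = json.loads(response_body)
    except (TypeError, ValueError):
        # Not JSON, so we cannot tell why the call failed
        return False
    return isinstance(error, dict) and error.get("error_code") == "RESOURCE_DOES_NOT_EXIST"
```

Treating permission errors and server errors as "not confirmed" avoids reporting a successful deletion when the request simply failed for another reason.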

This Databricks REST API method is more verbose when each file is handled individually, but it lends itself well to automation. It's particularly useful if you're scripting operations that need to interact with DBFS from outside the Databricks environment. Remember, the API calls are direct and permanent, so always double-check the paths and contents before proceeding.

If you follow these steps thoroughly, you can programmatically manage and delete folders from DBFS using the Databricks REST API, integrating these operations seamlessly into your data workflows.

For more detailed info, refer to the official Databricks DBFS API documentation.

🔮 Technique 4—Delete Folder From DBFS Using Databricks UI

Finally, here's the easiest way to delete a folder from DBFS: directly through the Databricks user interface. This method is straightforward, requiring no command-line or API interactions, just a few clicks.

Step 1—Log in to Databricks Account

Start by opening your browser and logging into your Databricks account.

Step 2—Navigate to Databricks Workspace

Once logged in, you'll be in your main workspace area. Here's where all your notebooks, jobs, and data reside.

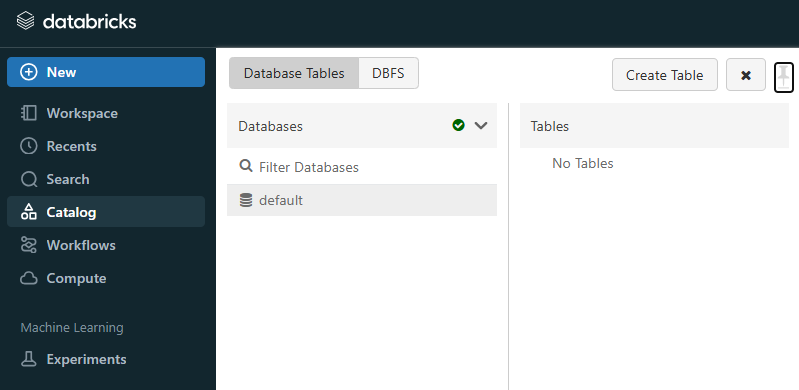

Step 3—Open Catalog Section

In the sidebar, click Catalog. This is where you manage DBFS alongside other data sources.

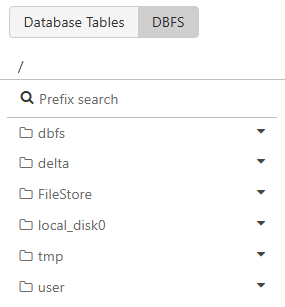

Step 4—Navigate to DBFS Tab

Within the Catalog section, select the DBFS tab to view the Databricks File System. This brings you to the file explorer-like interface for DBFS.

Step 5—Select Folder to Delete from DBFS

Browse through the directory structure to locate the folder you wish to delete. You can navigate through folders by clicking on them. Once you've found the right folder, click on it to select it.

Step 6—Delete Folder via UI options

With the folder selected, you have two primary ways to initiate deletion. Right-click the folder to open a context menu and select "Delete" or a similar option. Alternatively, look for a delete button or icon (usually a trash can) near the folder listing.

Note: A confirmation dialog will appear. This is your last chance to check whether you're deleting the correct folder. Confirm the action to proceed.

Step 7—Refresh to Verify Deletion

After confirming deletion, the folder should disappear from the list. To be absolutely sure, click the refresh icon or press F5 on your keyboard to update the view. If the folder is no longer there, your deletion was successful.

This technique is handy if you prefer graphical interfaces or are doing ad-hoc file management tasks. It's less about scripting or command-line precision and more about straightforward file operations.

Note that this action, like all deletions, is immediate and permanent in DBFS unless you've set up some form of backup. Always double-check the folder you're about to delete to avoid accidental data loss.


Conclusion

And that's a wrap! DBFS, or Databricks File System, is an essential component of the Databricks platform, offering a unified view to manage data across various storage systems. As your data volume in DBFS grows, maintaining an organized file structure becomes essential: deleting unnecessary files or folders frees up storage space, reduces clutter, and keeps your workspace efficient.

In this article, we've explored 4 different techniques for deleting folders from DBFS:

- Technique 1—Delete Folder from DBFS Using Databricks CLI

- Technique 2—Delete Folder from DBFS Using Databricks Notebook

- Technique 3—Delete Folder from DBFS Using Databricks REST API

- Technique 4—Delete Folder from DBFS Using Databricks UI


FAQs

What types of files can be stored in DBFS?

Databricks DBFS can store virtually any type of file. This includes structured data formats like Parquet, Avro, CSV, JSON, and ORC, as well as unstructured data like text files, images, audio, video, and binary files. Databricks DBFS essentially acts as a filesystem layer over cloud object storage.

Is DBFS suitable for production workloads?

Yes, Databricks DBFS can be used for production workloads. It integrates with the Databricks platform and is optimized for working with Apache Spark, supporting data processing tasks from ETL to machine learning. That said, Databricks recommends against storing production data in the DBFS root and points to Unity Catalog volumes for governed access to files.

How does DBFS handle data security?

Security in Databricks DBFS is managed through the underlying cloud storage's security features like IAM roles in AWS, Azure AD in Azure, or GCP's IAM for Google Cloud Storage. Databricks adds another layer with workspace-level security, including access control lists (ACLs) and role-based access control (RBAC) to manage permissions on DBFS paths.

Can I access DBFS files using external tools?

Yes, you can access Databricks DBFS files using external tools by mounting cloud object storage or using the Databricks CLI and REST API. Databricks recommends using Unity Catalog volumes to configure access to non-tabular data files stored in cloud object storage.

What happens to my data in DBFS if I terminate my cluster?

Your data in Databricks DBFS remains intact if you terminate your cluster. DBFS data is stored in the cloud storage associated with your Databricks Workspace, not on the cluster itself. Thus, cluster termination does not affect your data's persistence in DBFS.

Where are Databricks DBFS files stored?

Databricks DBFS files are stored in the cloud storage solutions linked to your Databricks Workspace. This could be Amazon S3, Azure Blob Storage, or Google Cloud Storage, depending on which cloud platform your Databricks environment is hosted on.

Can I recover a deleted folder from DBFS?

No, once you delete a folder or file from DBFS, it's permanently removed unless you have implemented external backup solutions or if your cloud storage provider offers versioning or soft delete features that you've enabled.

Can I delete files from DBFS without using the CLI or API?

Yes, you can delete files directly from the Databricks UI under the “Data” or “Catalog” section where DBFS is accessible. Simply navigate to the file or folder, select it, and choose the delete option.

What if the folder is too large to delete using the CLI?

If your folder is very large, using the CLI might hit time or resource limits. In this case, consider breaking the operation into smaller parts, for example by using a Databricks Notebook to script the deletion in batches.
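One way to batch a large deletion from a notebook is to remove the folder's children in groups and report progress along the way. This is a rough sketch with a hypothetical function name; `fs` is expected to behave like `dbutils.fs`, and the batch size of 100 is an arbitrary assumption, not a recommended value.

```python
def delete_in_batches(fs, folder_path, batch_size=100):
    """Delete the children of folder_path in groups, then the folder itself."""
    # Each entry returned by fs.ls is expected to expose a .path attribute
    children = [entry.path for entry in fs.ls(folder_path)]
    for start in range(0, len(children), batch_size):
        batch = children[start:start + batch_size]
        for child in batch:
            fs.rm(child, recurse=True)
        print(f"Deleted {start + len(batch)} of {len(children)} entries")
    # Remove the now-empty parent folder last
    fs.rm(folder_path, recurse=True)
    return len(children)
```

In a notebook, you would call it as `delete_in_batches(dbutils.fs, "dbfs:/<folder-path>")`. If the run is interrupted, calling it again simply continues with whatever entries remain.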

Can I delete folders using the Databricks REST API without deleting the contents first?

Yes. The /api/2.0/dbfs/delete endpoint accepts a "recursive" field; when it is set to true, the folder and its contents are deleted in one request. Without it, the call fails on a non-empty directory, so you would have to delete the contents first.

Are there any permissions required to delete a folder from DBFS?

Yes, you need write permissions on the folder or its parent directory to delete it. In Databricks, this is typically managed through workspace permissions or through the cloud storage's access policies if you're interacting with Databricks DBFS directly via cloud storage.