Wowza! 💥 Databricks Data and AI Summit 2024 blew the roof off with an energy-packed opening, leaving everyone buzzing with absolute excitement.

But the real fireworks came when the big curtain was pulled back on some seriously awesome tech announcements.

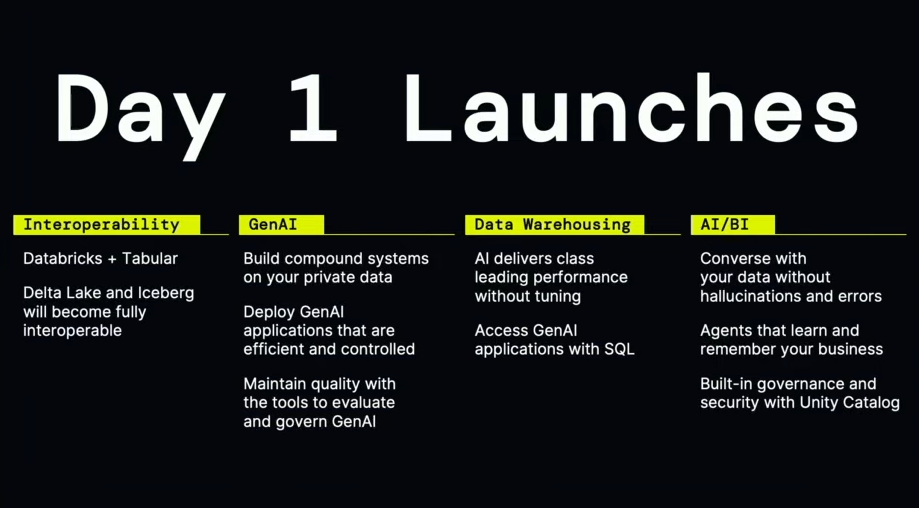

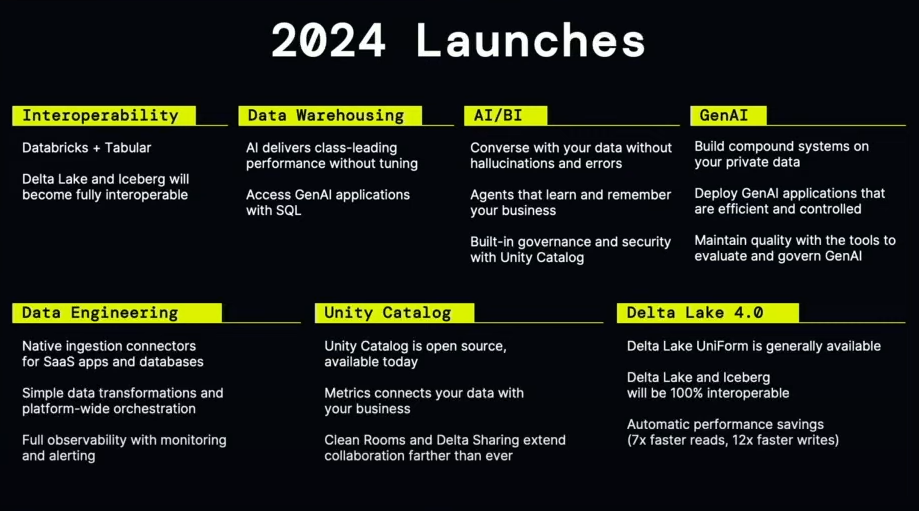

Databricks announced tons of jaw-dropping updates that are sure to turn heads. They open sourced Unity Catalog. Also, they officially announced the acquisition of Tabular to enhance data interoperability.

On the Gen AI front, they've rolled out a huge suite of updates, including significant enhancements to Databricks Mosaic AI. They also announced the biggest Delta release yet, alongside a complete shift to serverless architecture and native NVIDIA integration.

Plus, numerous new tools and features have been released, making this an exciting time for Databricks users.

In case you missed out or just want a quick rundown, we've got the scoop on all the major Databricks announcements and updates straight from the source. In this piece, we'll dive into the details of what was unveiled and give you insights into what to expect in the coming months.

Overall Announcement Summary—Databricks Data and AI Summit:

Are you in a bit of a rush? No worries! Here's a lightning-fast summary of the overall announcements:

📅Day 1 of Databricks Data and AI Summit

🤝 Databricks Acquisitions

- Acquisition of Tabular: Databricks acquired Tabular, a company founded by the original creators of Apache Iceberg.

🔓 Unity Catalog OSS

- Databricks Unity Catalog Open Sourced: Databricks Unity Catalog, which offers comprehensive governance and security for data, ML, and AI models, is now fully open source.

☁️ Full Transition to Serverless Compute

- Transition to 100% Serverless: Starting July 1st, every part of Databricks, from notebooks to Spark clusters, will be available in a serverless form.

🧠 Generative AI—Mosaic AI Enhancements

🛠️ Model Training and Fine-Tuning

- Mosaic AI Model Training and Fine Tuning: Managed API for fine-tuning foundation models and pre-training new models using GPUs.

- Databricks Mosaic AI Vector Search: High-performance search with customer-managed encryption keys and hybrid search capabilities.

🤖 AI Development Tools

- Databricks Mosaic AI Agent Framework: Facilitates the creation of AI systems with proprietary data.

- Databricks Mosaic AI Model Serving: Real-time serving of AI models, including foundation model APIs.

🧰 Databricks Mosaic AI Tool Catalog and Function-Calling

- Databricks Mosaic AI Tool Catalog: Enterprise registry for creating and sharing AI functions.

- Function-Calling in Model Serving: Extends model-serving capabilities to execute specific functions within models.

📊 AI Evaluation Tools

- Databricks Mosaic AI Agent Evaluation: Framework for defining quality metrics and evaluating AI system performance.

- MLflow 2.14: Introduces MLflow Tracing, which records each step of model and agent inference.

🔐 AI Governance

- Databricks Mosaic AI Gateway: Centralized interface for managing and governing AI models.

- Databricks Mosaic AI Guardrails: Safety filters to prevent unsafe responses and ensure data compliance.

- system.ai Catalog: A curated list of state-of-the-art open-source models managed within Databricks Unity Catalog.

🔗 Databricks AI Integration

- Integration with Shutterstock ImageAI: Collaboration with Shutterstock for a text-to-image model trained on Shutterstock's repository.

💻 Native NVIDIA Integration

- Native support for NVIDIA GPU acceleration: Databricks announced an expanded collaboration with NVIDIA to optimize data and AI workloads by bringing NVIDIA CUDA accelerated computing to the core of Databricks’ Data Intelligence Platform.

- DBRX model: Databricks’ open source model DBRX is now available as an NVIDIA Inference Microservice.

🚀 Advanced Databricks Workload Optimization and Performance

- Predictive I/O: Machine learning-powered feature for faster and cost-effective point lookups.

- Liquid Clustering: AI-driven data management technique replacing traditional table partitioning.

- Databricks AI Functions: Allows leveraging AI within SQL queries for tasks like sentiment analysis, classification, and text generation.

📈 Business Intelligence Enhancements

- AI/BI Dashboards: AI-first approach to business intelligence with a no-code, drag-and-drop interface.

- AI/BI Genie: Conversational AI tool for asking business questions in natural language and receiving visualizations and queries.

📅Day 2 of Databricks Data and AI Summit

📦 Upgraded and Enhanced Data Formats

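- Delta Lake 4.0: The biggest Delta release yet, with new features such as Delta Connect, the Open Variant type, type widening, identity columns, and Liquid Clustering.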

🏠 Lakehouse Advancements

- Lakehouse Federation (General availability): Query federation platform enabling cross-source queries without data migration, managed by Unity Catalog.

- Lakehouse Monitoring (General availability): Comprehensive monitoring solution for data quality, statistical properties, and ML model performance.

🤝 Data Sharing and Collaboration

- Databricks Clean Rooms: Facilitates secure, private computations between organizations.

- Delta Sharing: Enables seamless and secure sharing of tables and other data assets across different platforms.

🏗️ Advanced Data Engineering Tools

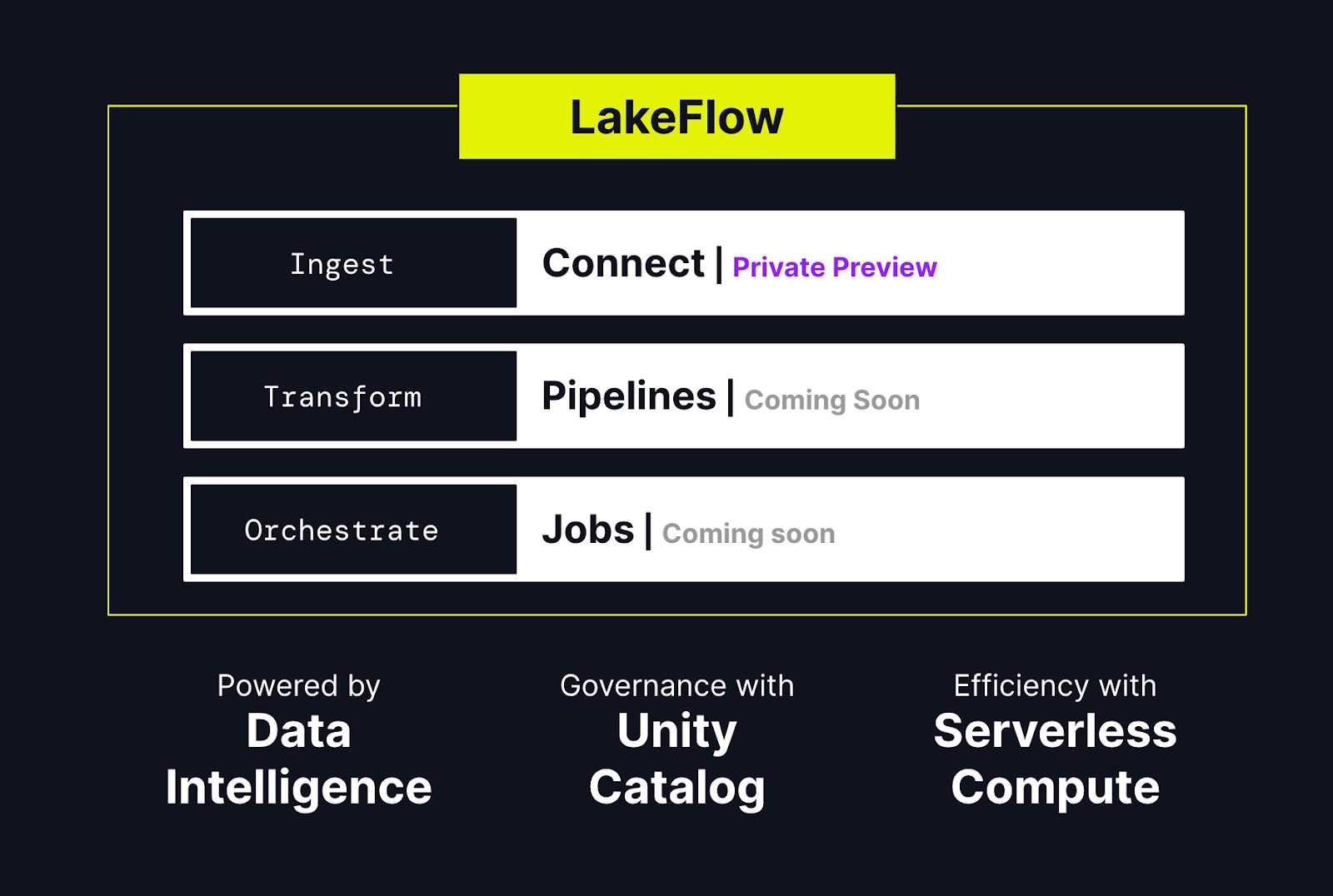

- Databricks LakeFlow: Comprehensive solution for building and operating production data pipelines.

🆕 Other Notable Updates

- Fully Refreshed Databricks Notebook UI

- Function Calling (Public Preview): Lets models served through Foundation Model APIs request calls to functions that the application defines.

- Unified Login (General Availability): Allows a single SSO configuration for all Databricks accounts and workspaces.

- Budgets to Monitor Account Spending (Public Preview): Enables account administrators to create and customize budgets for improved cost monitoring and management.

Now that you have a comprehensive overview of the releases from the Databricks Data and AI Summit, let's dive into the details. We'll start from the beginning of the session to explore what was unveiled during this summit.

When Databricks CEO Ali Ghodsi took the stage, he entered with a bang, energizing the crowd with his enthusiasm and characteristic charisma. But the party didn't last long, as Ali swiftly changed gears and got straight down to business.

Following a series of impressive stats about the event, Ali set the tone by reiterating Databricks' core mission: “to democratize data and AI”.

Ali unveiled his three-pronged vision for a democratized data future. As AI becomes increasingly crucial, security and privacy concerns are rising, and data estates are growing more fragmented. The challenges ahead seem extremely daunting and complex.

Ali presented a solution: “The Data Intelligence Platform”, which aims to unlock the potential of data and AI across entire organizations.

Now, let's dive in and take a closer look at the top 30 key announcements and some other interesting updates from this year's Databricks Data and AI Summit.


Day 1 of Databricks Data and AI Summit

In this section, we'll uncover the major announcements made on Day 1 of the Databricks Data + AI Summit.

Databricks Acquisitions

🧱 Databricks Acquires Tabular (Databricks ➕ Tabular)

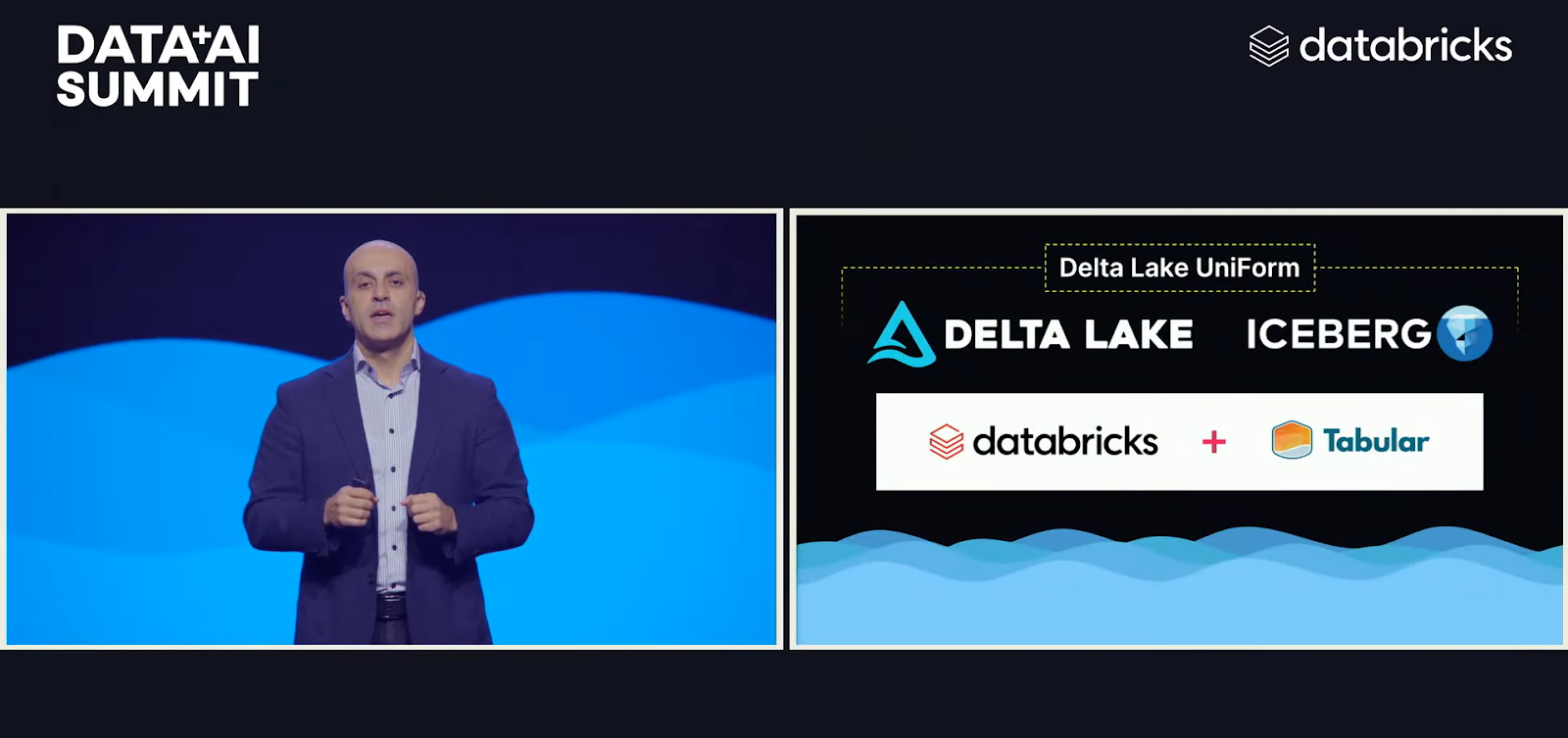

After Ali wrapped up his explanation of the Data Intelligence platform, he dropped a big announcement: Databricks has acquired Tabular, the company founded by the masterminds behind Apache Iceberg, Ryan Blue and Dan Weeks. This acquisition is a power move for Databricks as they aim to solve the ongoing data storage fragmentation puzzle.

Ali's plan is to eliminate the need for organizations to choose between the Delta Lake and Apache Iceberg formats. Databricks hopes that bringing in Tabular's founders will improve Delta Lake UniForm and ensure that the two formats operate together smoothly. This would enable customers to keep their data on cloud data lakes without fear of vendor lock-in. Databricks sees a future in which these formats are indistinguishable, making data storage as simple as connecting a USB drive.

🧱 Databricks Unity Catalog Fully Open Sourced

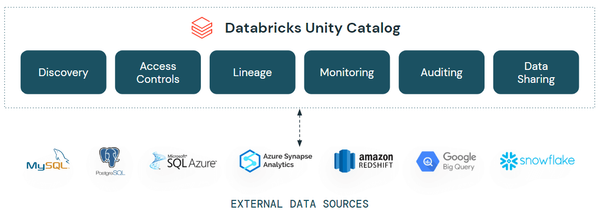

After covering the Databricks and Tabular announcements, Ali addressed the critical challenges of governance and security. He pointed to Unity Catalog, which Databricks introduced a few years ago and which has since become central to the platform. It provides governance for tables, unstructured data, machine learning, and AI models, covering access control, security, discovery, lineage, auditing, and data quality monitoring.

Then Ali made a surprising announcement: Databricks is open sourcing Unity Catalog. Everyone was taken aback by this announcement, which was possibly the most important of the event. Databricks' decision to open source Unity Catalog signals the company's commitment to a more collaborative and secure data environment in which users can manage their data with strong security and governance. Check out this GitHub repository.

Full Transition to Serverless Compute

🧱 Serverless Compute — General Availability

Ali made another groundbreaking announcement: starting July 1st, Databricks is going 100% serverless. Previously, only a few components of Databricks were serverless, but now everything, from Databricks notebooks to Spark clusters and workflows, will be available in a serverless form. This monumental shift has been in the works for over two years, involving hundreds of engineers dedicated to reimagining Databricks for the serverless era.

Ali explained that the decision to go fully serverless wasn't straightforward. Initially, the company's co-founders pushed for a simple lift-and-shift approach, but the engineers advocated for a complete redesign, and in the end the engineers were right. According to Ali, the redesign ensures instantaneous startup times, eliminates idle clusters, and optimizes cost efficiency by charging only for actual usage. He added that owning all the machines has allowed Databricks to implement advanced disaster recovery, cost control, and enhanced security measures, making the serverless experience robust and secure as well as seamless.

Generative AI—Mosaic AI Enhancements

The announcements Ali made were just the beginning—the real showstopper was undoubtedly Mosaic AI.

Last year, Ali revealed that Databricks had acquired the hot LLM company MosaicML for $1.3 billion. But things took an even bigger turn when VP of Engineering Patrick Wendell hit the stage to announce the complete integration of Mosaic AI's capabilities. He detailed which features were available right now and what users could expect in the coming weeks.

To explain how the platform could be used to build production-ready AI systems and applications, Patrick discussed several key capabilities currently in preview mode. Here's a summary of the topics he covered:

Model Training and Fine-Tuning

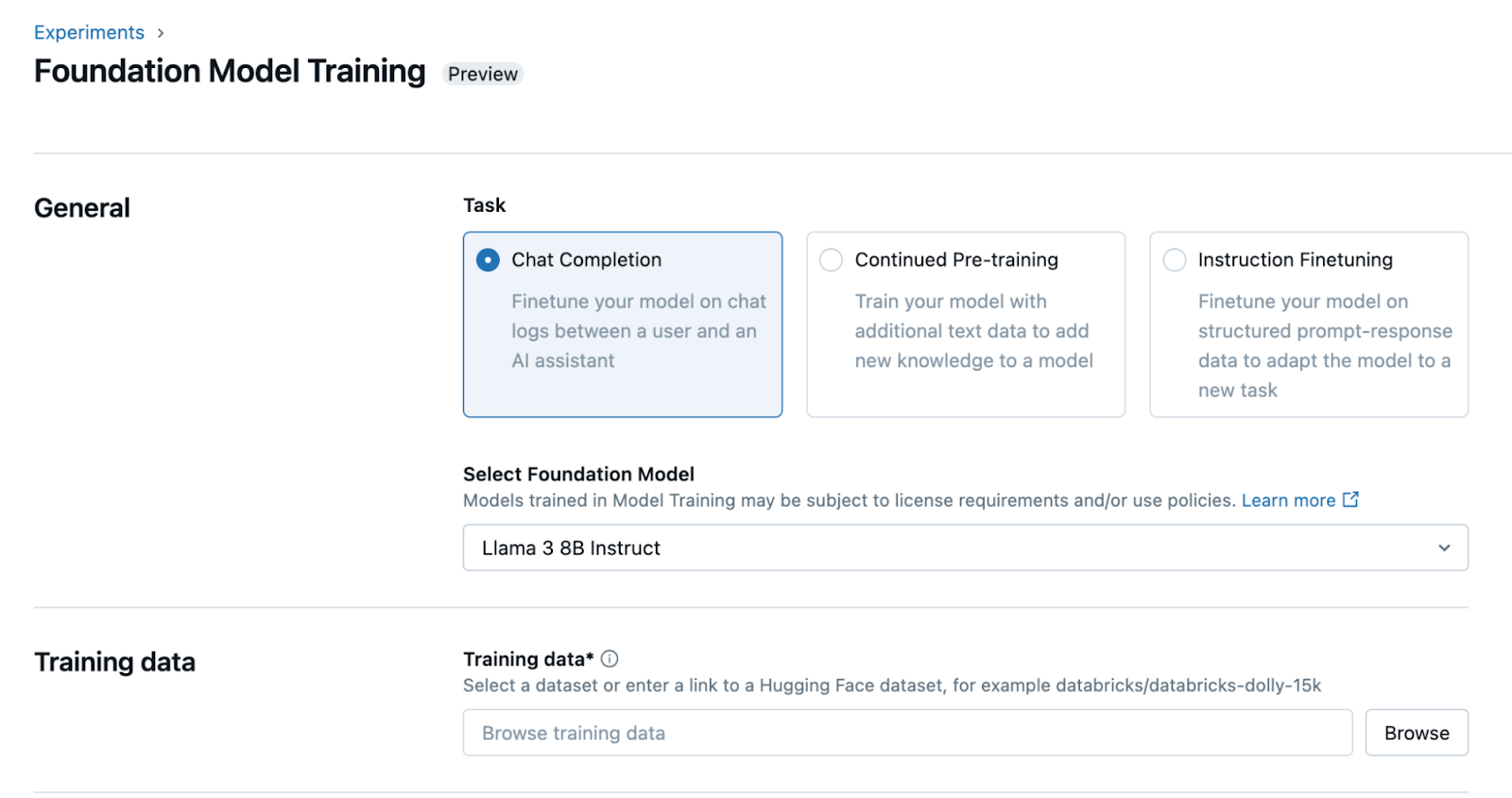

🧱 Mosaic AI Model Training and Fine Tuning

A managed API that simplifies the process of fine-tuning foundation models or pre-training new models on vast datasets using a large number of GPUs. It abstracts the infrastructure complexities and empowers users to own their models and data, enabling iterative improvements in model quality.

🧱 Mosaic AI Vector Search

A vector database integrated into the Databricks Data Intelligence Platform, leveraging its governance and productivity tools. A vector database is optimized for storing and retrieving embeddings—mathematical representations of the semantic content of data, typically from text or image sources. These embeddings are generated by large language models and are crucial for many GenAI applications, enabling efficient similarity searches for documents or images.
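To make the idea of similarity search over embeddings concrete, here is a minimal sketch in plain Python. It is not the Mosaic AI Vector Search API: the document names and the tiny three-dimensional vectors are made up for illustration, and a real index would use an approximate nearest-neighbor structure rather than scanning every vector.

```python
import math

def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

def top_k(query, index, k=2):
    """Return the k document ids whose embeddings are most similar to the query."""
    scored = [(cosine_similarity(query, vec), doc_id) for doc_id, vec in index.items()]
    scored.sort(reverse=True)  # highest similarity first
    return [doc_id for _, doc_id in scored[:k]]

# Hypothetical 3-dimensional embeddings; real models emit hundreds of dimensions.
index = {
    "invoice_faq": [0.9, 0.1, 0.0],
    "billing_guide": [0.8, 0.2, 0.1],
    "hiking_trails": [0.0, 0.1, 0.9],
}

query_embedding = [1.0, 0.0, 0.0]
print(top_k(query_embedding, index))
```

The two billing-related documents rank ahead of the unrelated one because their vectors point in nearly the same direction as the query, which is the property a vector database exploits at scale.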

AI Development Tools

🧱 Mosaic AI Agent Framework

A development framework designed to help developers build and deploy high-quality generative AI applications using retrieval-augmented generation (RAG), with output that is consistently measured and evaluated to be accurate, safe, and governed. It is managed securely within Databricks Unity Catalog.

🧱 Mosaic AI Model Serving

A service that enables real-time serving (deploying, governing, querying and monitoring) of AI models (fine-tuned or pre-deployed), agents, and retrieval-augmented generation (RAG) applications. It also includes Foundation Model APIs, available for both pay-per-token and provisioned throughput usage in production environments.

Mosaic AI Tool Catalog and Function-Calling

🧱 Mosaic AI Tool Catalog

An enterprise registry that allows organizations to create, store, and share a collection of common functions used in AI applications. These functions can include SQL functions, Python functions, model endpoints, remote functions, or retrievers. By centralizing these tools, the Tool Catalog facilitates their reuse across different projects and teams within the organization, promoting consistency and efficiency in AI development.

🧱 Enhanced Function-Calling in Model Serving

A feature that extends the capabilities of Mosaic AI Model Serving by allowing it to natively support the invocation of functions within models. This enables customers to use popular open-source models, such as Llama 3-70B, as reasoning engines for their AI agents. Function-calling allows models to execute specific functions or operations as part of their inference process, enhancing the flexibility and functionality of AI applications.
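For readers new to function calling, the sketch below shows the application side of the loop: describing a tool, then executing the call a model asks for. It assumes the OpenAI-style tool schema, and the `get_order_status` function, the registry, and the mocked model response are all hypothetical. No serving endpoint is contacted.

```python
import json

def get_order_status(order_id: str) -> dict:
    """Hypothetical business function the model is allowed to call."""
    orders = {"A100": "shipped", "B200": "processing"}
    return {"order_id": order_id, "status": orders.get(order_id, "unknown")}

# Tool description in the JSON-schema style used by OpenAI-compatible chat APIs.
TOOLS = [{
    "type": "function",
    "function": {
        "name": "get_order_status",
        "description": "Look up the shipping status of an order.",
        "parameters": {
            "type": "object",
            "properties": {"order_id": {"type": "string"}},
            "required": ["order_id"],
        },
    },
}]

# Maps tool names the model may request to the Python callables that implement them.
REGISTRY = {"get_order_status": get_order_status}

def execute_tool_call(tool_call: dict) -> str:
    """Run the function named in a model's tool call and return JSON for the next turn."""
    name = tool_call["function"]["name"]
    if name not in REGISTRY:
        raise ValueError(f"Model requested an unknown tool: {name}")
    arguments = json.loads(tool_call["function"]["arguments"])
    return json.dumps(REGISTRY[name](**arguments))

# Stand-in for what a model might return; a real response would come from an endpoint.
mock_tool_call = {
    "id": "call_1",
    "type": "function",
    "function": {"name": "get_order_status", "arguments": '{"order_id": "A100"}'},
}
print(execute_tool_call(mock_tool_call))
```

In a real agent, `TOOLS` would be sent along with the chat request, and the string returned by `execute_tool_call` would be passed back to the model as a tool message so it can compose the final answer.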

AI Evaluation Tools

🧱 Mosaic AI Agent Evaluation

A framework that enables developers to quickly and reliably assess the quality, latency, and cost of generative AI applications throughout the LLMOps life cycle. It integrates evaluation metrics and data into MLflow Runs, supporting both qualitative and quantitative assessments of AI performance across development, staging, and production phases. It also supports gathering human feedback through a review app and employs LLM judges, which may use third-party services like Azure OpenAI Service, to assist in evaluating applications.

🧱 MLflow 2.14 with MLflow Tracing

MLflow is a framework for evaluating AI systems and tracking parameters throughout the model lifecycle. The 2.14 version introduces MLflow Tracing, which records each step of model and agent inference, aiding in debugging and performance evaluation. It integrates with Databricks MLflow Experiments, Notebooks, and Inference Tables.
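To illustrate what "recording each step of inference" means, here is a conceptual sketch of a tracing decorator written with only the standard library. It is not MLflow's implementation or API (MLflow ships its own `mlflow.trace` decorator). The `traced` helper and the three toy pipeline steps are hypothetical.

```python
import functools
import time

TRACE = []  # collected spans, in the order the steps finish

def traced(func):
    """Record name, inputs, output, and duration for each call (illustrative only)."""
    @functools.wraps(func)
    def wrapper(*args, **kwargs):
        start = time.perf_counter()
        result = func(*args, **kwargs)
        TRACE.append({
            "step": func.__name__,
            "inputs": {"args": args, "kwargs": kwargs},
            "output": result,
            "seconds": time.perf_counter() - start,
        })
        return result
    return wrapper

@traced
def retrieve(question):
    # Hypothetical retrieval step returning canned context.
    return ["Delta Lake 4.0 adds the Variant type."]

@traced
def generate(question, context):
    # Hypothetical generation step; a real agent would call a model here.
    return f"Answer based on {len(context)} document(s)."

@traced
def answer(question):
    return generate(question, retrieve(question))

answer("What is new in Delta Lake 4.0?")
for span in TRACE:
    print(span["step"], "->", span["output"])
```

Having inputs, outputs, and timings for every step is what makes it possible to see where an agent went wrong or where latency accumulates.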

AI Governance

🧱 Mosaic AI Gateway for centralized AI governance

A unified interface for managing, governing, evaluating, and switching models. It includes rate limiting, permissions, and credential management for model APIs, as well as usage tracking and inference table capture to understand rate limits, implement chargebacks, and audit for data leakage.

🧱 Mosaic AI Guardrails

This feature provides safety filters at the endpoint or request level to prevent unsafe responses and detect sensitive data (PII), ensuring data security and compliance.
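As a rough illustration of what a sensitive-data filter does, the sketch below detects and redacts two kinds of PII with regular expressions. This is an assumption-laden toy, not how Mosaic AI Guardrails is implemented: the patterns, category names, and sample text are invented here, and production filters rely on far more than regexes.

```python
import re

# Deliberately simple patterns; production PII detection needs far more than regexes.
PII_PATTERNS = {
    "EMAIL": re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"),
    "US_SSN": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
}

def find_pii(text):
    """Return the sorted list of PII categories detected in the text."""
    return sorted(label for label, pattern in PII_PATTERNS.items() if pattern.search(text))

def redact(text):
    """Replace each detected PII value with a bracketed category tag."""
    for label, pattern in PII_PATTERNS.items():
        text = pattern.sub(f"[{label}]", text)
    return text

response = "Contact jane.doe@example.com, SSN 123-45-6789."
print(find_pii(response))
print(redact(response))
```

A filter like this could run on a request before it reaches the model, on the response before it reaches the user, or both, which is the endpoint-level versus request-level distinction described above.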

🧱 system.ai Catalog

A curated list of state-of-the-art open-source models managed within Unity Catalog. These models can be easily deployed using Model Serving Foundation Model APIs or fine-tuned with Model Training. The catalog is accessible on the Mosaic AI Homepage.

While many of the tools mentioned during the presentation were either already publicly available or in the final stages of development, the Mosaic AI Tool Catalog was still in private preview and not yet accessible to the general public.

🧱 Integration with Shutterstock ImageAI

Following the Mosaic AI announcements, there was a brief session introducing Shutterstock ImageAI, a cutting-edge text-to-image model. This model, trained exclusively on Shutterstock’s world-class image repository using Mosaic AI Model Training, generates customized, high-fidelity images tailored to specific user needs.

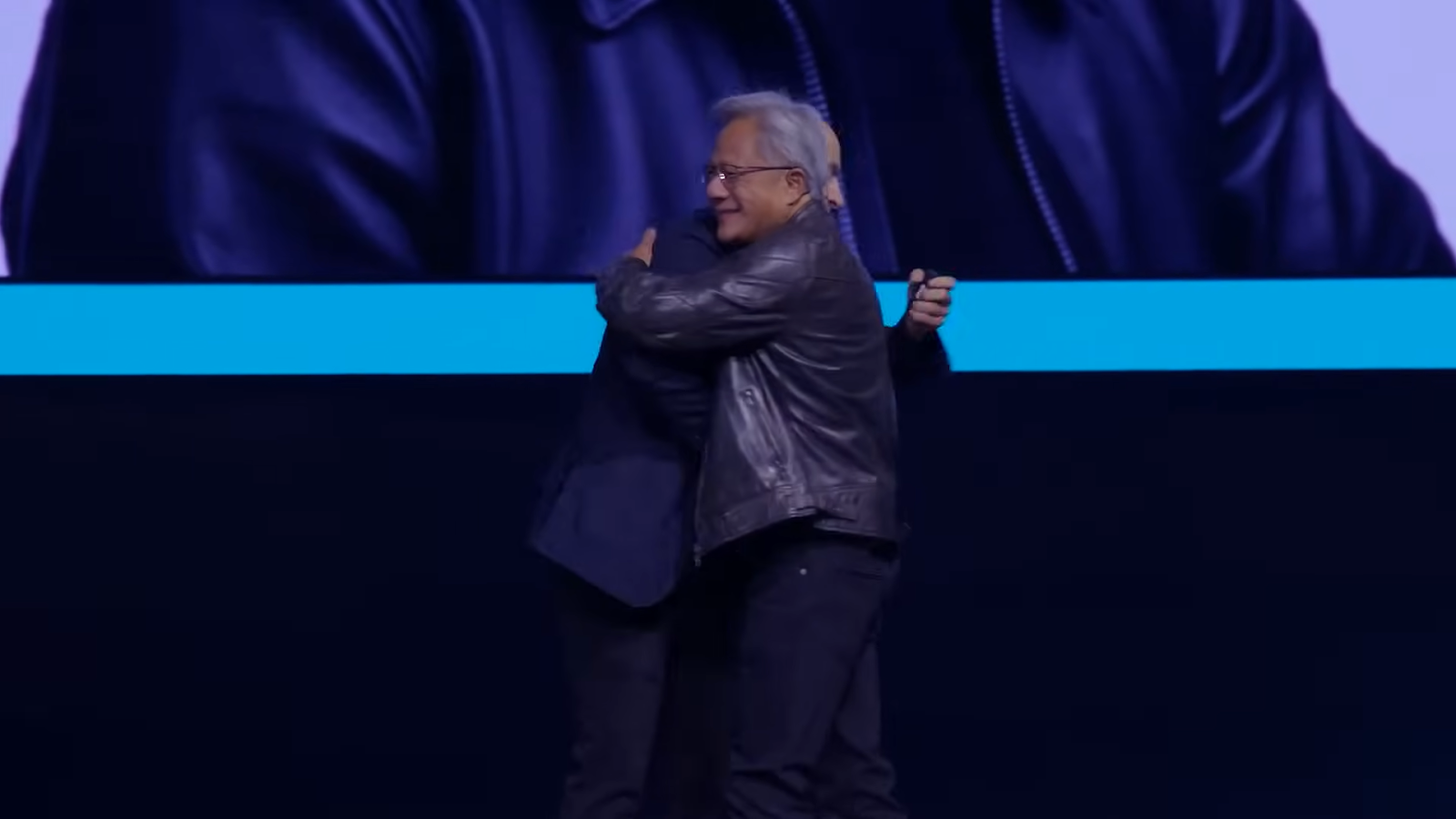

Native NVIDIA Integration

At the end of the announcements and demo sessions, Jensen Huang, CEO of NVIDIA, made a surprise appearance in his iconic black leather jacket, adding even more excitement to the event.

During this session, there was extensive discussion about the native support for NVIDIA-accelerated computing within Databricks. The collaboration helps to leverage NVIDIA's full stack to enhance Databricks' Data Intelligence Platform.

Here are some key updates from the announcement:

🧱 Native support for NVIDIA GPU acceleration

Ali announced that Databricks now natively supports NVIDIA GPU acceleration. This integration involves NVIDIA’s full stack, including the H100 Tensor Core GPUs, which are optimized for large language models (LLMs). This enhancement aims to accelerate generative AI applications. Databricks’ Mosaic AI, utilizing these GPUs, provides an efficient platform for customizing LLMs. Also, the partnership includes NVIDIA's TensorRT-LLM software, ensuring scalable, high-performance model deployment while remaining cost-effective.

🧱 Databricks DBRX available as an NVIDIA NIM

Another significant announcement was that Databricks’ open source model, DBRX, is now available as an NVIDIA Inference Microservice (NIM). These microservices provide fully optimized, pre-built containers, simplifying the integration of generative AI models into enterprise applications. DBRX, built on Databricks and trained using NVIDIA DGX Cloud, can be customized with enterprise data to create high-quality, organization-specific models.

Advanced Databricks Workload Optimization and Performance Updates

Right after the session with Jensen, Reynold Xin, co-founder and Chief Architect, took the stage to share advanced workload optimization and performance updates on Databricks SQL.

Xin first dived into the concept of the Databricks Lakehouse, highlighting how it merges the best features of data warehouses and data lakes. He then highlighted three fundamental areas of improvement: core data warehousing capabilities, out-of-the-box performance, and ease of use.

He then emphasized these key updates:

🧱 Predictive I/O — General Availability

Predictive I/O is a machine learning-powered feature designed to make point lookups faster and more cost-effective. It leverages Databricks' extensive experience in building large AI/ML systems to enhance the Lakehouse without the need for additional indexes or expensive background services. Predictive I/O offers the benefits of traditional indexing and optimization services without the associated complexity and maintenance costs. This feature is enabled by default in Databricks SQL Pro and Serverless at no extra cost.

🧱 Liquid Clustering — General Availability

Liquid Clustering is an innovative data management technique that replaces traditional table partitioning and ZORDER, eliminating the need for fine-tuning data layouts to achieve optimal query performance. This AI-driven feature significantly simplifies data layout decisions and allows clustering keys to be redefined without data rewrites. Liquid Clustering enables data layouts to evolve with analytic needs over time, providing flexibility that traditional partitioning methods on Delta could not.

🧱 Databricks AI Functions — Public Preview

Another big announcement was Databricks AI Functions, which is now in public preview. It allows users to leverage AI directly within SQL queries. These functions utilize state-of-the-art generative AI models via the Databricks Foundation Model APIs to perform tasks such as sentiment analysis, classification, extraction, grammar correction, generation, masking, similarity analysis, summarization, translation, and querying.

Here is a list of the key Databricks AI Functions:

- ai_analyze_sentiment: Analyzes sentiment in text data.

- ai_classify: Classifies data based on predefined categories.

- ai_extract: Extracts relevant information from text.

- ai_fix_grammar: Corrects grammar in text data.

- ai_gen: Generates text based on given prompts.

- ai_mask: Masks sensitive information in text.

- ai_similarity: Computes similarity between data points.

- ai_summarize: Summarizes text data.

- ai_translate: Translates text between languages.

- ai_query: Queries machine learning models and large language models using Databricks Model Serving.

Also, there is a vector_search function that allows users to perform searches on a Mosaic AI Vector Search index using SQL, enhancing the ability to find and query relevant data efficiently.

These AI functions are supported on Databricks Runtime 15.0 and above, while the ai_query function is supported on Databricks Runtime 14.2 and above.
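Here is a small sketch of how two of these functions might appear in a query. The Python helper only composes the SQL text. The table name, column name, and labels are hypothetical, and actually running the statement requires a Databricks environment (for example via `spark.sql(query)` in a notebook).

```python
def sql_string(value: str) -> str:
    """Quote a Python string as a SQL string literal, doubling embedded quotes."""
    return "'" + value.replace("'", "''") + "'"

def build_review_query(table: str, text_col: str, labels: list) -> str:
    """Compose a query that scores sentiment and assigns one of the given labels.

    The table and column names are inserted as-is, so they must come from trusted code.
    """
    label_array = ", ".join(sql_string(label) for label in labels)
    return (
        f"SELECT {text_col},\n"
        f"       ai_analyze_sentiment({text_col}) AS sentiment,\n"
        f"       ai_classify({text_col}, ARRAY({label_array})) AS topic\n"
        f"FROM {table}"
    )

# Hypothetical table, column, and label names.
query = build_review_query(
    "main.demo.reviews", "review_text", ["billing", "shipping", "quality"]
)
print(query)
```

The appeal is that each row's text is sent to a hosted model from inside an ordinary SELECT, so no separate inference pipeline is needed for simple enrichment tasks.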

Databricks Business Intelligence Enhancements

Reynold Xin handed over the stage to Ken Wong, Senior Director of Product Management, to discuss the challenges with dashboards and Databricks BI reports today.

He then went ahead and dropped two key product announcements:

🧱 Databricks AI/BI Dashboards

An AI-first approach to business intelligence designed to simplify and enhance the dashboard experience. These dashboards offer a no-code, drag-and-drop interface, along with features such as scheduling, exporting, and cross-filtering. Deeply integrated with Unity Catalog and built into Databricks SQL, this solution aims to provide the quickest and simplest way to build and share dashboards without the need to manage separate services.

🧱 Databricks AI/BI Genie

A conversational AI tool that allows users to ask business questions in natural language and receive answers in the form of visualizations and queries. Genie leverages an ensemble of specialized AI agents and multiple LLMs to understand and learn the unique data and semantics of a business. Integrated with Unity Catalog metadata, execution query history, and related assets such as notebooks, dashboards, and queries, Genie seeks user clarification when necessary and remembers these clarifications to continuously improve its responses.

With these key announcements, the first day of the Databricks Data and AI Summit concluded.

Day 2 of Databricks Data and AI Summit

Day 1 was incredible with all those exciting announcements, but Day 2 somehow managed to be even better! Ali kicked things off by recapping the best parts of Day 1, which got everyone pumped for what was to come. The day was jam-packed with an amazing lineup of speakers and topics.

After Ali's intro, Professor Yejin Choi took the stage to talk about small language models (SLMs). She broke down how they work and shared some insights into what makes them so effective!

Then came one of the best sessions: Ali joined forces with Ryan Blue, the mastermind behind Apache Iceberg. They dove into all the latest updates on Iceberg, which was fascinating to hear straight from the source.

Upgraded and Enhanced Data Formats

Right after the session between Ali and Ryan concluded, Shant Hovsepian took the stage and dropped a bombshell announcement: the release of Delta Lake 4.0.

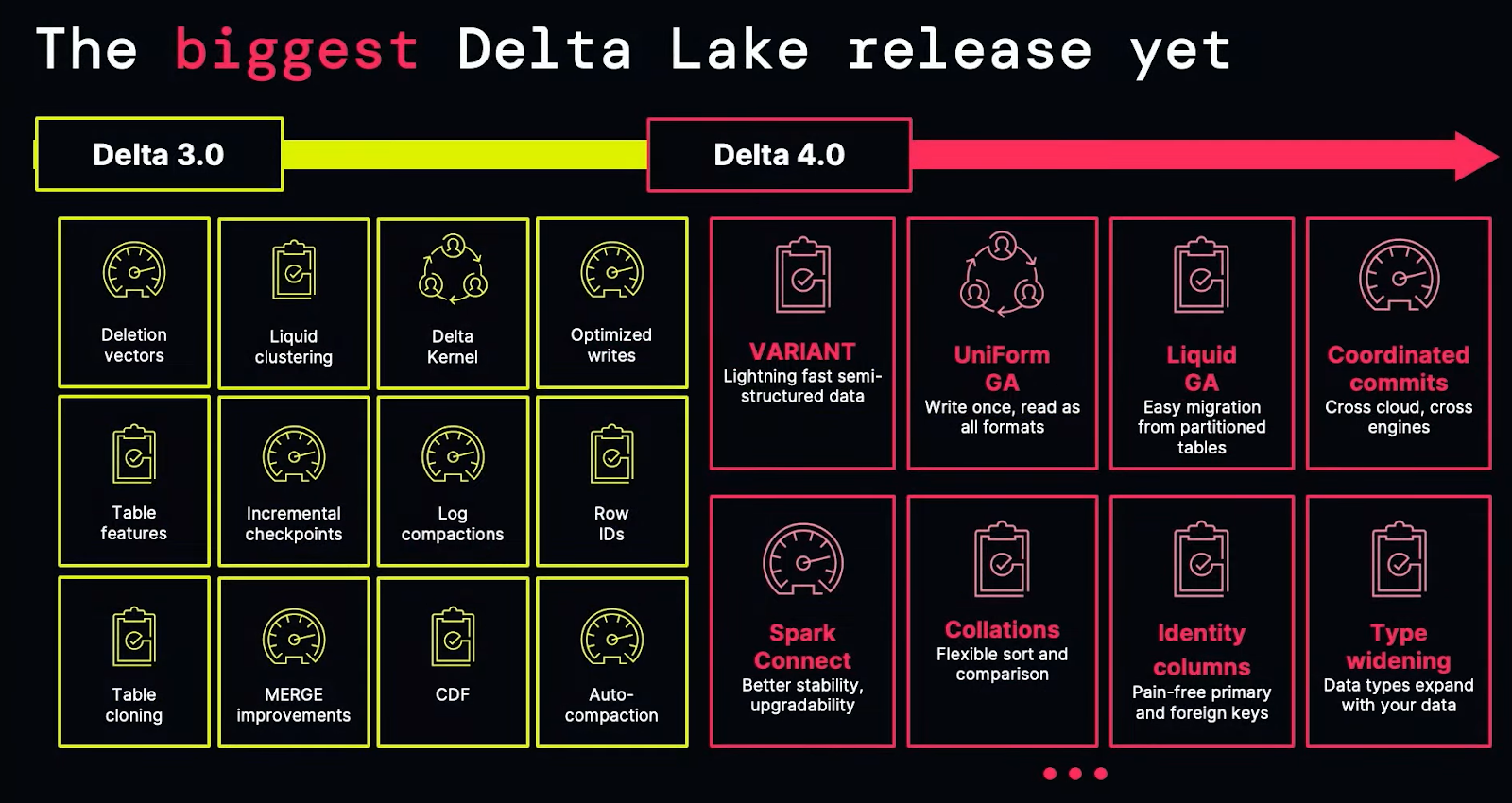

🧱 Delta Lake 4.0

Delta Lake 4.0 is the biggest update in Delta's history, packed with new features and functionality designed to make data management easier and more efficient.

Here's a breakdown of the key features and updates in this release:

🧱 Delta Connect

Delta Connect adds Spark Connect support to Delta Lake for Apache Spark. Spark Connect is a new initiative that adds a decoupled client-server architecture, allowing remote connectivity to Spark from anywhere. With Delta Connect, all Delta Lake operations work in an application running as a client connected to the Spark server.

🧱 Open Variant type

The Open Variant data type is a new type designed to handle semi-structured data more efficiently. It allows flexible, high-performance storage of JSON data without the compromises of traditional methods.

- Flexibility and Performance: Unlike storing everything as strings (which is flexible but slow) or using concrete types (which is fast but inflexible), the Open Variant Data Type provides a sweet spot. It offers the flexibility of JSON with the performance of structured data, being up to 8 times faster than storing JSON as strings.

- Non-Proprietary and Open Source: This data type is fully open and available in Databricks Runtime 15.3. All code for the variant data type is already checked into Apache Spark, making it an open standard that other data engines can adopt, ensuring a non-proprietary way of reliably storing semi-structured data.

🧱 Convenience features

Lakehouses need to adapt to changing data and types. Delta Lake is flexible and can accommodate data types that expand over time, with convenience features that make it easier to work with your data.

🧱 Type widening

The type widening feature allows changing the type of columns in a Delta table to a wider type. This enables manual type changes using the ALTER TABLE ALTER COLUMN command and automatic type migration with schema evolution in INSERT and MERGE INTO commands.
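The sketch below shows what a manual widening change could look like. It is a simplified illustration: the `WIDENING` map covers only a subset of integer and floating-point paths (check the Delta Lake documentation for the full list in your version), the table and column names are hypothetical, and the helper only builds the SQL text. Type widening also has to be enabled on the table first, through the `delta.enableTypeWidening` table property.

```python
# Simplified subset of widening paths; the Delta Lake docs list the full set.
WIDENING = {
    "TINYINT": {"SMALLINT", "INT", "BIGINT"},
    "SMALLINT": {"INT", "BIGINT"},
    "INT": {"BIGINT"},
    "FLOAT": {"DOUBLE"},
}

def widen_column_sql(table, column, current_type, new_type):
    """Return the ALTER statement for a widening change, or raise if it is not one."""
    current, new = current_type.upper(), new_type.upper()
    if new not in WIDENING.get(current, set()):
        raise ValueError(f"{current} -> {new} is not a widening change in this sketch")
    return f"ALTER TABLE {table} ALTER COLUMN {column} TYPE {new}"

# Hypothetical table and column names.
print(widen_column_sql("main.demo.events", "event_count", "int", "bigint"))
```

Going the other way, for example from BIGINT to INT, is rejected because existing values might not fit in the narrower type.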

🧱 Identity Columns

Identity columns automatically generate a unique ID for each new row of a table. They are a long-standing component of data warehousing workloads, usually serving as primary and foreign keys for modeling data. Delta Lake 4.0 supports identity columns that assign unique, auto-incrementing ID numbers to each new row, which dramatically simplifies data modeling and avoids the need for manual, brittle workarounds.

🧱 Delta Lake UniForm

Delta Lake Universal Format, or UniForm, has been in heavy use since it was first announced in Delta Lake 3.0. The Delta 3.2 release of UniForm includes support for Apache Hudi. Now, users can write data once with UniForm across all formats.

🧱 Liquid Clustering

Replaces table partitioning and ZORDER to simplify data layout decisions and optimize query performance. It provides flexibility to redefine clustering keys without rewriting existing data, allowing data layout to evolve alongside analytic needs over time.

Lakehouse Advancements

After Shant's session, Matei Zaharia, CTO and co-founder of Databricks, took the stage at the Databricks Data and AI Summit 2024 to deliver a series of pivotal announcements related to Databricks Unity Catalog. He highlighted substantial advancements in the Lakehouse architecture and shared a list of key updates:

🧱 Lakehouse Federation — General Availability

Matei announced the general availability of Lakehouse Federation, a key feature of Unity Catalog that allows organizations to connect to and manage external data sources from within Databricks. Supported sources include MySQL, PostgreSQL, Amazon Redshift, Snowflake, Azure SQL Database, Google BigQuery, and more. Lakehouse Federation applies consistent governance practices and extends advanced security features, such as row- and column-level access controls, to external data sources.

🧱 Lakehouse Monitoring — General Availability

Another major announcement was the general availability of Lakehouse Monitoring. Databricks Lakehouse Monitoring lets you monitor the statistical properties and quality of the data in all of the tables in your account. You can also use it to track the performance of machine-learning models and model-serving endpoints by monitoring inference tables that contain model inputs and predictions.

Data Sharing and Collaboration

🧱 Databricks Clean Rooms

Matei also introduced Databricks Clean Rooms, which will soon be available for public preview. Clean Rooms facilitate secure, private computations between organizations, supporting a wide range of computations, including SQL, machine learning, Python, and R. Built on Delta Sharing and integrated with Lakehouse Federation, Databricks Clean Rooms enable cross-cloud and cross-platform collaboration, allowing organizations to perform complex data operations while maintaining data privacy and security.

🧱 Delta Sharing

Delta Sharing, a cornerstone of Databricks' open collaboration ecosystem, has been expanded to support data sharing from any data source. Delta Sharing enables seamless and secure sharing of tables and other data assets across different platforms. Adoption of this open protocol continues to grow rapidly, facilitating efficient data collaboration and exchange across diverse systems.

At the end of the session, right in front of everyone, Matei open sourced Databricks Unity Catalog by changing the repo from private to public. It was a monumental moment.

Check out this GitHub repository.

Advanced Data Engineering Tools

Finally, after a few walkthrough demos, Bilal Aslam took the stage to close out the keynote and announced:

🧱 Databricks LakeFlow

A comprehensive solution designed to streamline the building and operation of production data pipelines. LakeFlow integrates several critical functionalities into a single, cohesive platform, enhancing efficiency and scalability.

Here are some key features of Databricks LakeFlow:

1) Native Connectors: Databricks LakeFlow includes scalable connectors for databases like MySQL, PostgreSQL, SQL Server, and Oracle, and enterprise applications like Salesforce, Microsoft Dynamics, NetSuite, Workday, ServiceNow, and Google Analytics. These connectors enable seamless data ingestion from various sources into Databricks.

2) Data Transformation: Users can transform data in batch and streaming modes using SQL and Python. This flexibility supports robust data processing workflows for real-time analytics and large-scale batch processing.

3) Real-Time Mode for Apache Spark: Databricks LakeFlow introduces Real-Time Mode for Apache Spark, allowing stream processing with lower latencies than micro-batch processing, which is crucial for real-time applications.

4) Orchestration and Monitoring: The platform offers comprehensive orchestration and monitoring capabilities. Users can deploy workflows to production using CI/CD pipelines, ensuring reliable operations.

5) Serverless Compute and Unified Governance: Databricks LakeFlow, native to the Databricks Data Intelligence Platform, provides serverless compute and unified governance with Unity Catalog, ensuring scalable and secure data processing.

With these announcements, Day 2 (the final day) of the Databricks Data and AI Summit came to a close.

But these were just the major highlights—Databricks also rolled out several other notable updates. Let's dive into the key updates released in June.

Other Notable Updates:

🧱 Fully Refreshed Databricks Notebook UI — General Availability

The new Databricks Notebooks UI offers developers a streamlined, data-focused authoring experience. Key features include:

- Modern UX: An updated user interface enhances the coding experience and improves notebook organization.

- New Results Table: Allows no-code data exploration with search and filtering capabilities directly on result outputs.

- Improved Markdown Editor: As of June 5, 2024, the Markdown editor includes a live preview of Markdown cells and a toolbar for common elements like headers, lists, and links, simplifying the process of communicating ideas without needing to remember syntax.

🧱 Function calling — Public Preview

Function calling is now in public preview. This feature is available using Foundation Model APIs with pay-per-token models: DBRX Instruct and Meta-Llama-3-70B-Instruct. It lets a model respond with a structured request to call a function that the application defines, so the model can be connected to external tools and data.

🧱 Unified login — General Availability

Unified login is generally available, enabling the management of a single SSO configuration for Databricks accounts and workspaces. Admins can enable unified login for all or selected workspaces using the account-level SSO configuration. Once it is enabled, all users, including account and workspace admins, must sign in using SSO. Unified login is enabled by default for accounts created after June 21, 2023.

🧱 Budgets to monitor account spending — Public Preview

Account administrators can now create budgets to track spending within their Databricks account. This feature, in public preview, includes customizable filters to monitor spending based on workspace and custom tags, allowing for more precise cost monitoring and management.


Conclusion

And that's a wrap! Databricks Data and AI Summit 2024 kicked off with electrifying energy, unveiling a series of groundbreaking announcements. Highlights included the open-sourcing of Unity Catalog, the acquisition of Tabular to enhance data interoperability, major updates to Mosaic AI, the largest Delta release yet, a shift to serverless architecture, and native NVIDIA integration. These updates, along with a suite of new tools and features, promise an exciting future for Databricks users. You can check out the official Databricks page, release notes, and documentation to learn more about these releases and what's coming up next.