The AI and ML landscape has changed a lot over the past few years, with AI-powered assistants and large language models (LLMs) gaining widespread adoption. These advancements have streamlined workflows across various industries, enabling users to automate routine tasks, generate and debug code, and extract valuable insights from data, all through natural language interactions. In line with this trend, Databricks introduced the Databricks Assistant, which debuted in July 2023 and is now generally available to all users. What sets this AI assistant apart is its context awareness, which comes from tight integration with the Databricks Lakehouse platform. Users can leverage it to write Python and SQL code, troubleshoot code issues, and obtain personalized data-driven insights through conversations in natural language.

In this article, we'll cover everything you need to know about Databricks Assistant and its key features. We'll start with its roots in DatabricksIQ and cover the basics, then move on to setup and expert tips to get the most out of it.

What is Databricks Assistant?

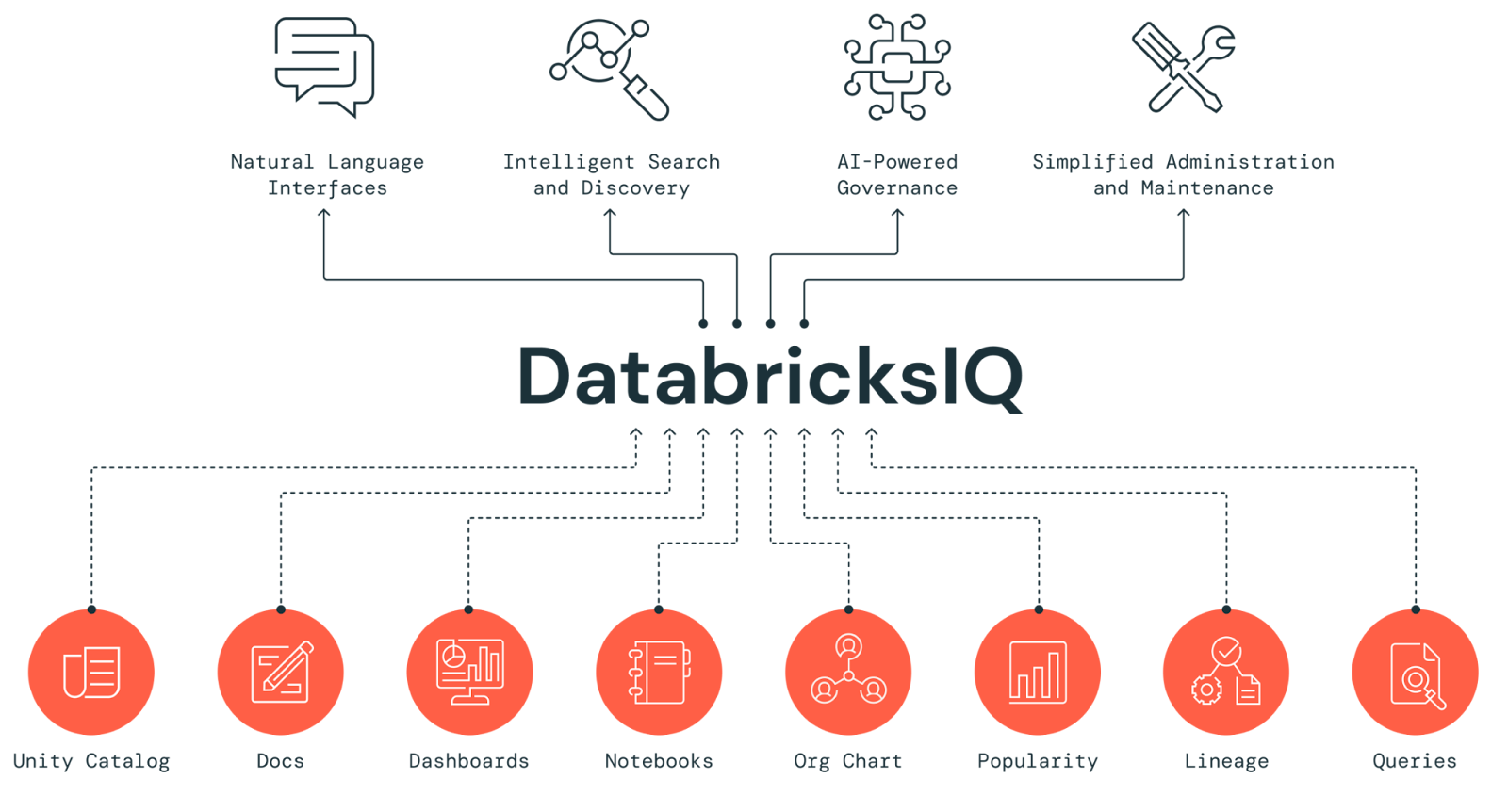

Before we dive into what Databricks Assistant is, let’s first understand DatabricksIQ, the engine at its core. DatabricksIQ is a Data Intelligence Engine that uses AI to power all parts of the Databricks Data Intelligence Platform. It utilizes metadata from your entire Databricks environment, such as Unity Catalog, dashboards, notebooks, and data pipelines, to create highly specialized AI models. These models learn your data, usage patterns, and business-specific terminology, delivering optimized performance and governance.

Databricks Assistant, built on the foundation of DatabricksIQ, uses these insights to deliver contextually aware and personalized responses. It assists users by generating queries, suggesting visualizations, and addressing code challenges, all while remaining tightly integrated into the Databricks workspace—ensuring responses are not only accurate but personalized to your unique needs.

Databricks Assistant is a context-aware AI tool integrated within Databricks Notebooks, Databricks SQL Editor, and File Editor. It enables users to interact with data through a conversational interface, enhancing productivity within the Databricks environment. Users can describe tasks in natural language, and the Databricks Assistant generates corresponding SQL or Python code, explains complex snippets, and suggests automatic fixes for errors. The Assistant leverages Databricks Unity Catalog metadata to understand tables, columns, descriptions, and popular data assets across the organization, allowing it to provide personalized and contextually relevant solutions.

Want to see Databricks Assistant in action? Check out the video below for a full demo.

Databricks Assistant Demo

Features of Databricks Assistant:

Databricks Assistant offers a range of features that can help streamline your workflows and get more done.

1) Natural Language Processing (NLP) Capabilities

Databricks Assistant uses advanced Natural Language Processing (NLP) techniques to make sense of user queries written in plain English. This feature allows users to interact with the assistant using natural language, making it easier to perform complex tasks without a ton of technical know-how. Whether you need to generate code, perform data transformations, or get some in-depth data insights, the Databricks Assistant figures out what you're trying to do and responds accordingly.

Prompt Example:

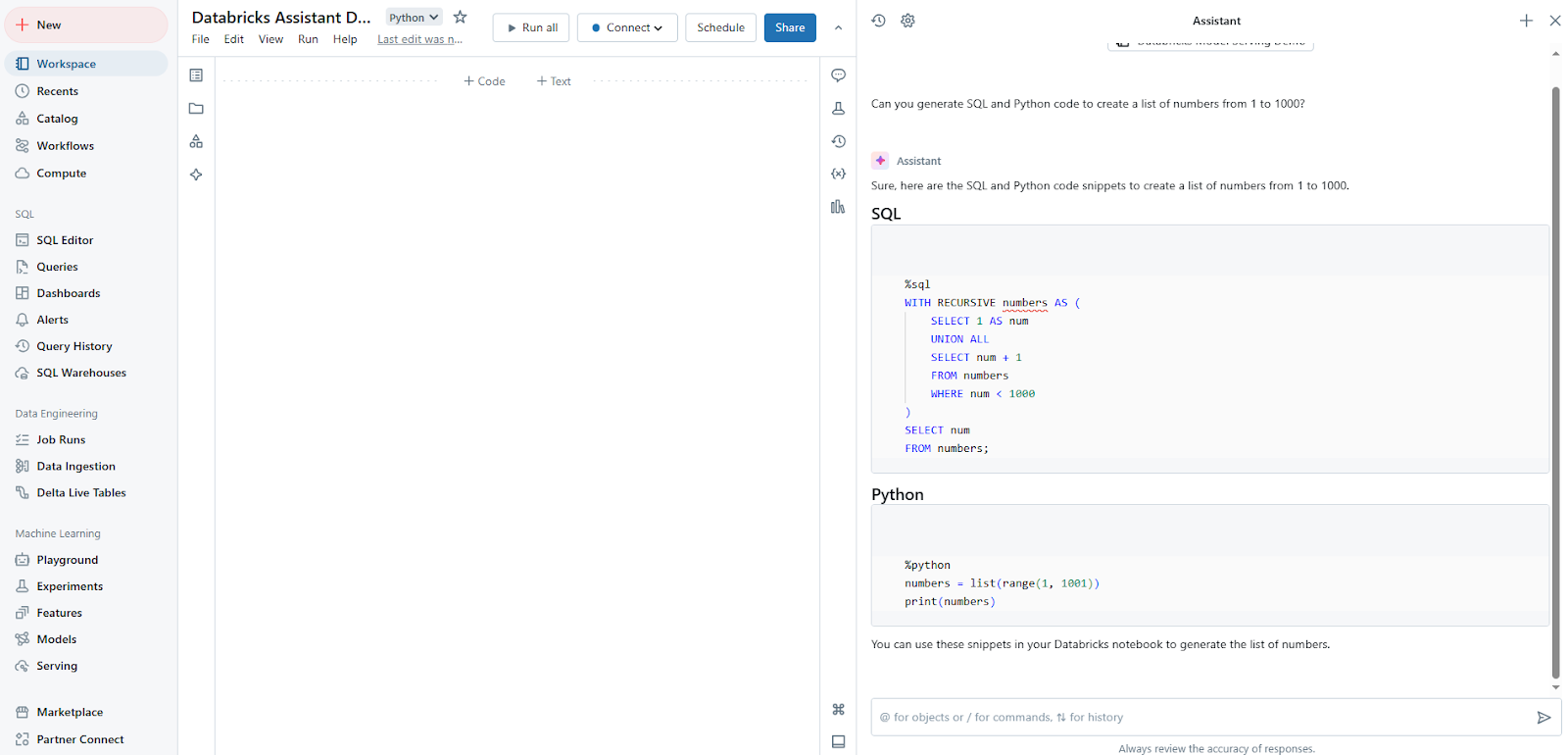

Can you generate SQL and Python code to create a list of numbers from 1 to 1000?

2) SQL and Python Code Generation

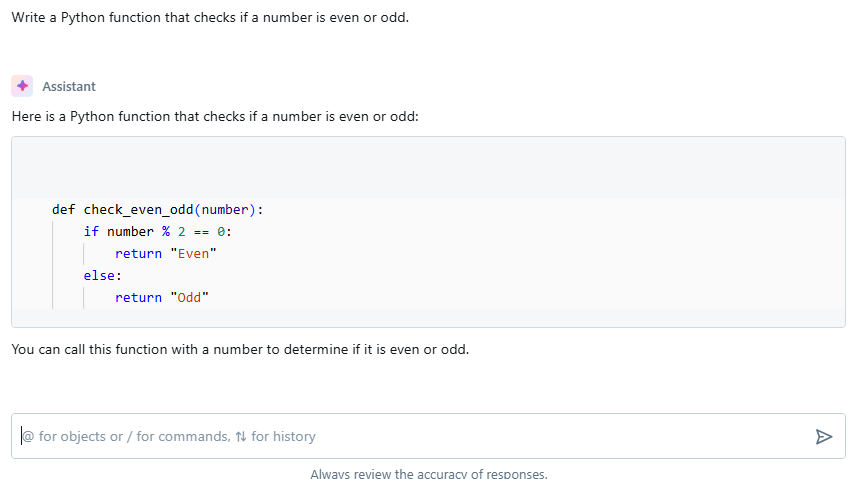

One of the most powerful features of Databricks Assistant is its ability to generate SQL queries and Python code based on user-provided descriptions. Users can describe what they want to achieve, and the assistant will produce the corresponding code, saving significant development time. On top of that, it can help you accelerate projects by writing boilerplate code or providing initial code for you to start with. You can then run the code itself, copy it, or add it into a new cell for further development.

Prompt Example:

Write a Python function that checks if a number is even or odd.

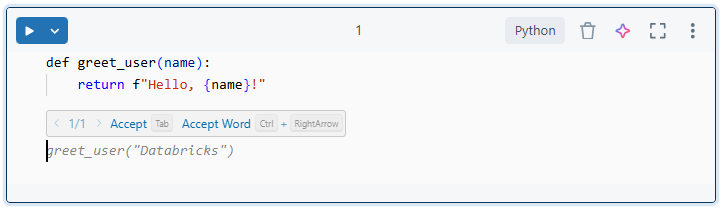
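For reference, a function of this kind can be very short. The sketch below is our own minimal version, not the Assistant's verbatim output, and the name `is_even_or_odd` is a hypothetical choice for illustration.

```python
def is_even_or_odd(number: int) -> str:
    """Return "even" if number is divisible by 2, otherwise "odd"."""
    return "even" if number % 2 == 0 else "odd"


print(is_even_or_odd(10))  # even
print(is_even_or_odd(7))   # odd
```

Whatever the Assistant returns, it is worth running it against a couple of known inputs like these before adding it to your notebook.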

3) Autocomplete and Code Suggestions

Databricks Assistant enhances productivity by providing real-time code suggestions and autocompletion. This feature supports both SQL and Python, reducing typing effort and helping prevent syntax errors. Pressing Ctrl + Shift + Space (Windows), Option + Shift + Space (Mac), or Ctrl + I triggers autocomplete suggestions based on the context of your code.

Prompt Example:

Type the first part of a Python function, then press Ctrl + Shift + Space (Windows), Option + Shift + Space (Mac), or Ctrl + I to get suggestions.

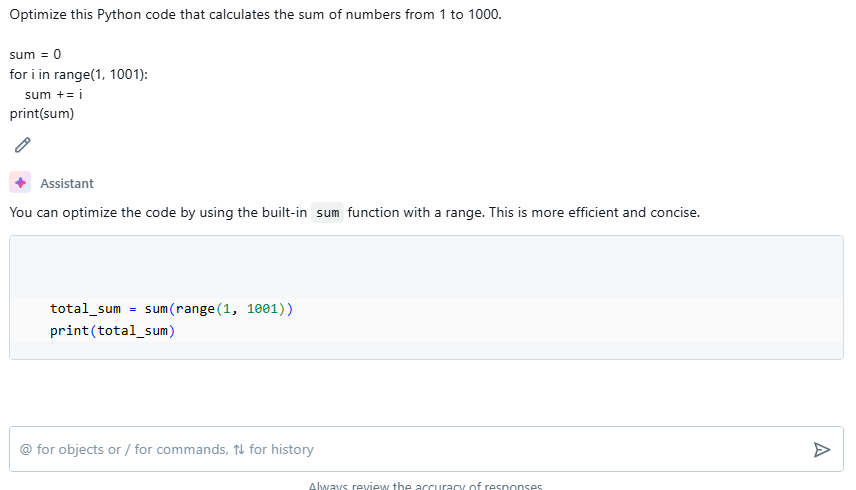

4) Code Optimization and Refactoring

Databricks Assistant can analyze existing code and suggest optimizations or refactoring opportunities. This feature helps improve code performance and maintainability without requiring extensive manual review.

Prompt Example:

Optimize this Python code that calculates the sum of numbers from 1 to 1000.

    sum = 0
    for i in range(1, 1001):
        sum += i
    print(sum)

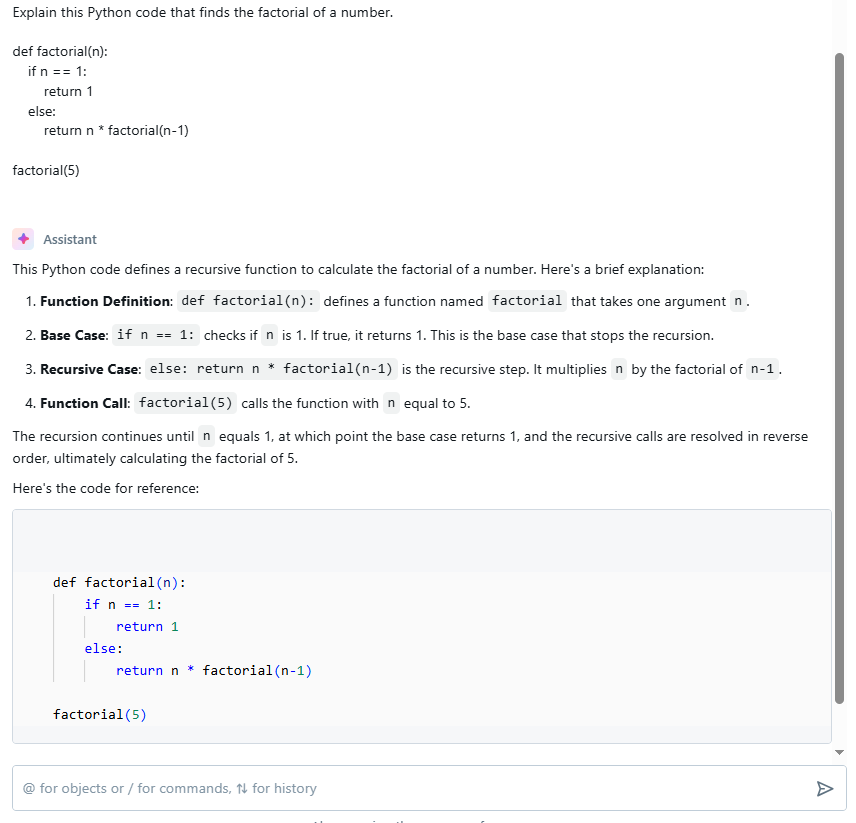
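As a rough idea of the kind of optimization you might expect for this prompt, here are two common alternatives to the explicit loop. This is a sketch under our own assumptions rather than the Assistant's exact answer, and the helper name `sum_to` is hypothetical.

```python
def sum_to(n: int) -> int:
    """Sum of the integers 1..n using the closed form n * (n + 1) / 2."""
    return n * (n + 1) // 2


# Option 1: let the built-in sum() iterate over the range, no manual loop.
total = sum(range(1, 1001))
print(total)  # 500500

# Option 2: skip iteration entirely with the closed-form formula.
print(sum_to(1000))  # 500500
```

Note that the original snippet assigns to a variable called `sum`, which shadows Python's built-in function of the same name; renaming the variable is a readability fix worth asking for as well.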

5) Code Explanation

For complex code snippets or SQL queries, the Databricks Assistant can provide clear, concise explanations in natural language. This feature is particularly valuable when you inherit or review code from a project you are unfamiliar with.

Prompt Example:

Explain this Python code that finds the factorial of a number.

    def factorial(n):
        if n == 1:
            return 1
        else:
            return n * factorial(n-1)

    factorial(5)

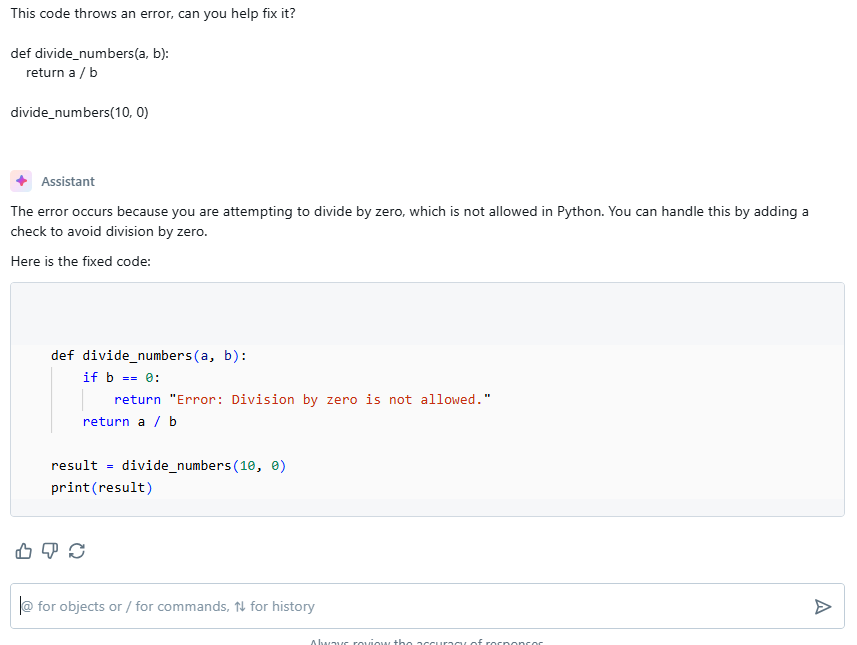
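One detail a good explanation might surface is that the snippet in the prompt only stops at `n == 1`, so calling it with 0 or a negative number keeps recursing until Python raises a `RecursionError`. The variant below is our own hedged sketch of how that edge case could be handled; it is not taken from the Assistant's response.

```python
def factorial(n: int) -> int:
    """Recursive factorial with a base case that also covers n = 0."""
    if n < 0:
        raise ValueError("factorial is not defined for negative numbers")
    if n <= 1:
        # Base case: 0! and 1! are both 1, so the recursion stops here.
        return 1
    # Recursive case: n! = n * (n - 1)!
    return n * factorial(n - 1)


print(factorial(5))  # 120, i.e. 5 * 4 * 3 * 2 * 1
```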

6) Code Error Diagnosis and Fixing

When errors occur, the Databricks Assistant can identify the issue and suggest fixes. It explains the problem in plain language and provides code snippets with proposed solutions.

Prompt Example:

This code throws an error, can you help fix it?

    def divide_numbers(a, b):
        return a / b

    divide_numbers(10, 0)
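The call above fails with a `ZeroDivisionError`. One plausible fix, shown here as a minimal sketch rather than the Assistant's actual suggestion, is to catch the exception and return `None`; whether returning `None`, raising a clearer error, or validating `b` up front is the right choice depends on your use case.

```python
from typing import Optional


def divide_numbers(a: float, b: float) -> Optional[float]:
    """Divide a by b, returning None instead of raising when b is zero."""
    try:
        return a / b
    except ZeroDivisionError:
        print("Error: cannot divide by zero.")
        return None


print(divide_numbers(10, 2))  # 5.0
print(divide_numbers(10, 0))  # prints the error message, then None
```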


Step-by-Step Guide to Enable Databricks Assistant

Enabling Databricks Assistant is a simple process that requires little configuration. Here's a step-by-step guide to help you get started:

Step 1—Navigate and Login to Databricks Portal

First, open the Databricks portal in your web browser and log in with your credentials.

Step 2—Create Databricks Workspace

If you haven't already, create a new Databricks Workspace where you'll be using the Databricks Assistant.

Check out this article for a step-by-step guide to setting up a Databricks Workspace.

Step 3—Launch the Databricks Workspace

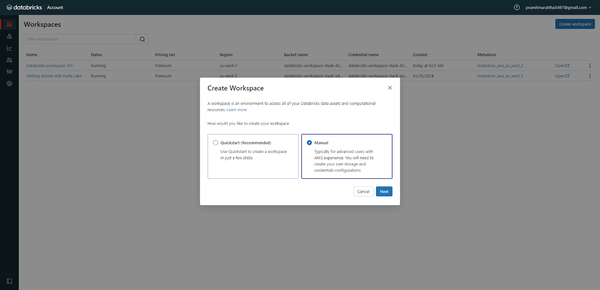

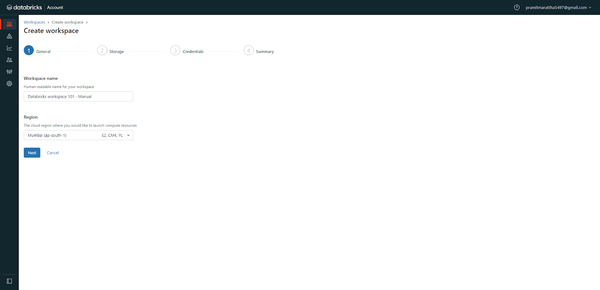

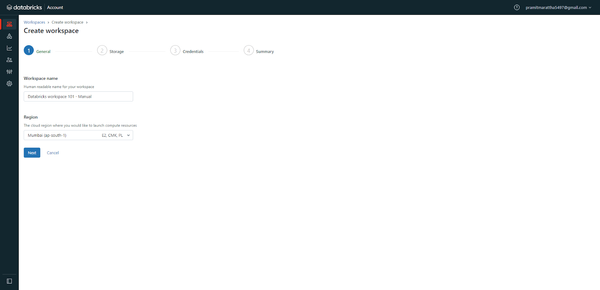

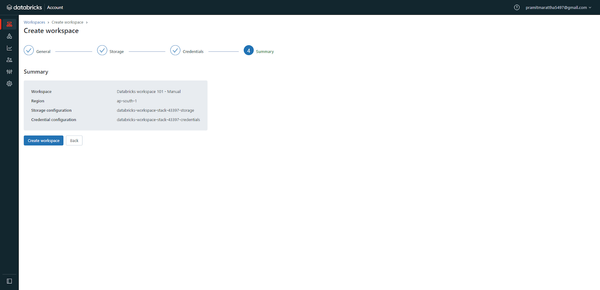

Once created, launch your Databricks workspace to access the Databricks environment. If you still need to create one, open the Databricks console and click on the "Create Workspace" button. This will take you to a page where you can choose between the Quickstart and Manual options. Select the "Manual" option and click "Next" to proceed.

Enter Databricks Workspace name and select the AWS region.

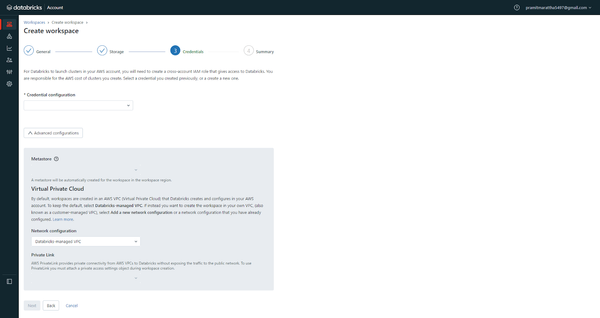

Choose a storage configuration, like an existing S3 bucket, or create a new one.

Configure credentials for database access, if any. Select/create VPCs, subnets, and security groups as needed. Customize configurations for encryption, private endpoints, etc.

Review all configs entered in the previous step, and then click "Create Workspace" to start the provisioning process.

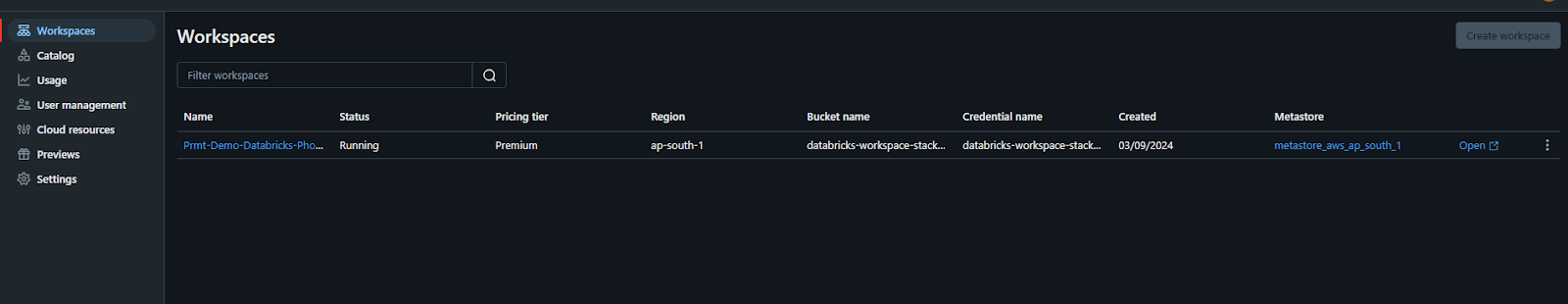

After submitting, check the workspace status on the Databricks Workspaces page in the console.

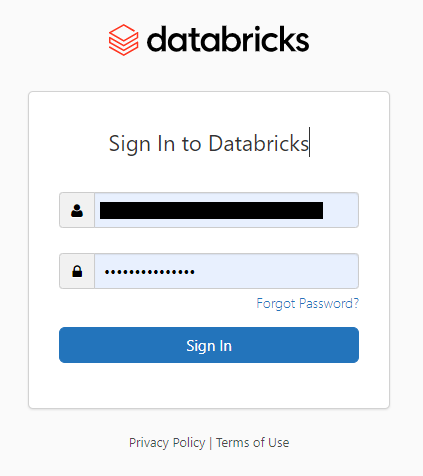

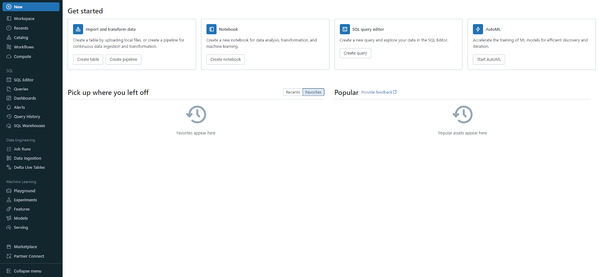

Once the stack is created, you can open your workspace and log in to it using your Databricks account email and password.

So there you have it! You've successfully created a Databricks Workspace.

Step 4—Enable Databricks Unity Catalog (Optional)

While not required for basic assistant functionality, enabling Databricks Unity Catalog can enhance the Databricks Assistant's capabilities by providing it with more context about your data environment.

If you need to manage multiple workspaces under one umbrella, enabling Databricks Unity Catalog is particularly beneficial.

Check out this article for a step-by-step guide to enable your workspace for the Databricks Unity Catalog.

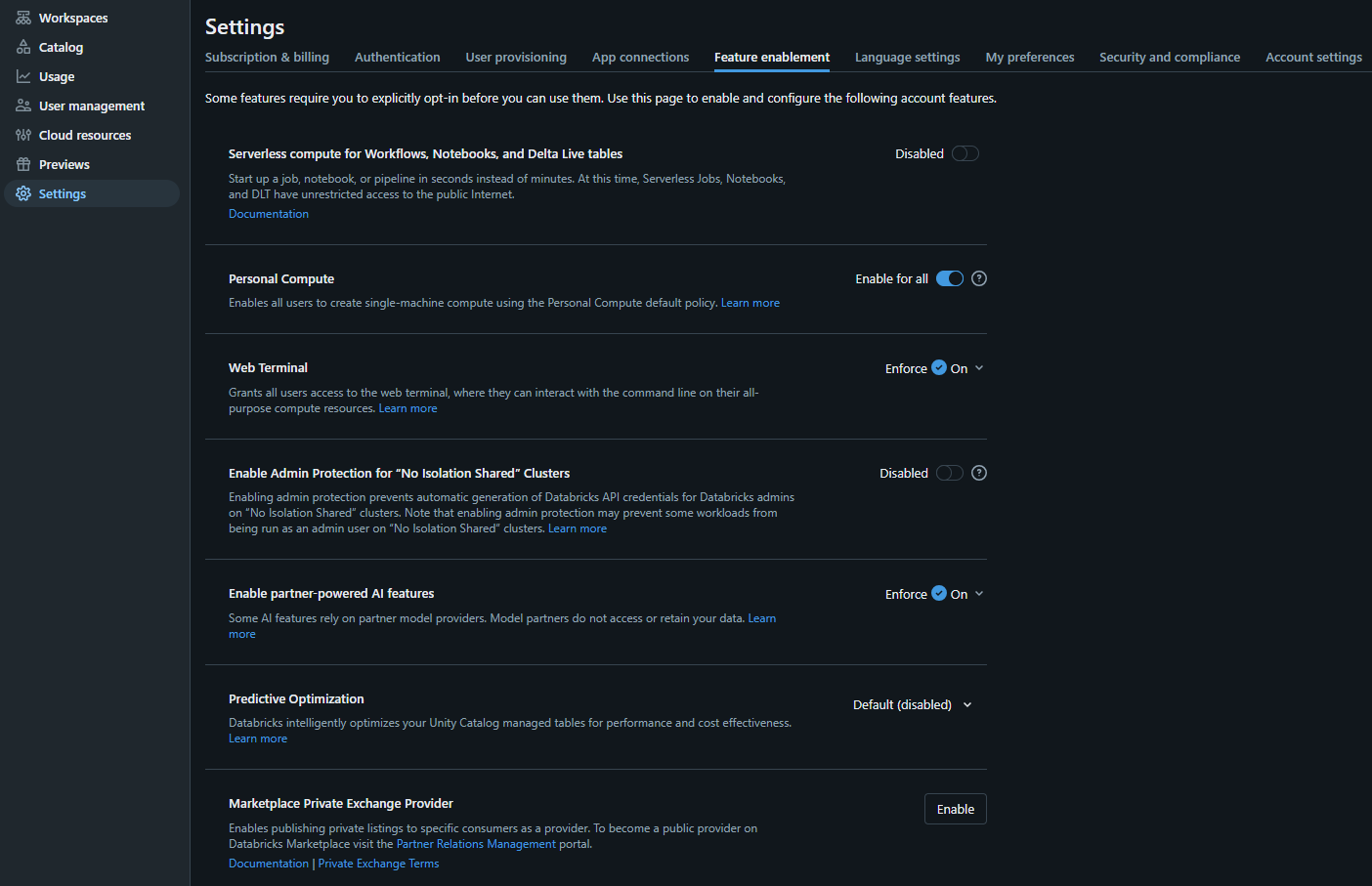

Step 5—Navigate to the Admin Portal

Access the admin portal through the workspace settings.

Step 6—Click on Settings

In the admin portal, locate and click on the Settings option.

Step 7—Search for "Feature enablement" Tab

Find the tab named “Feature enablement”.

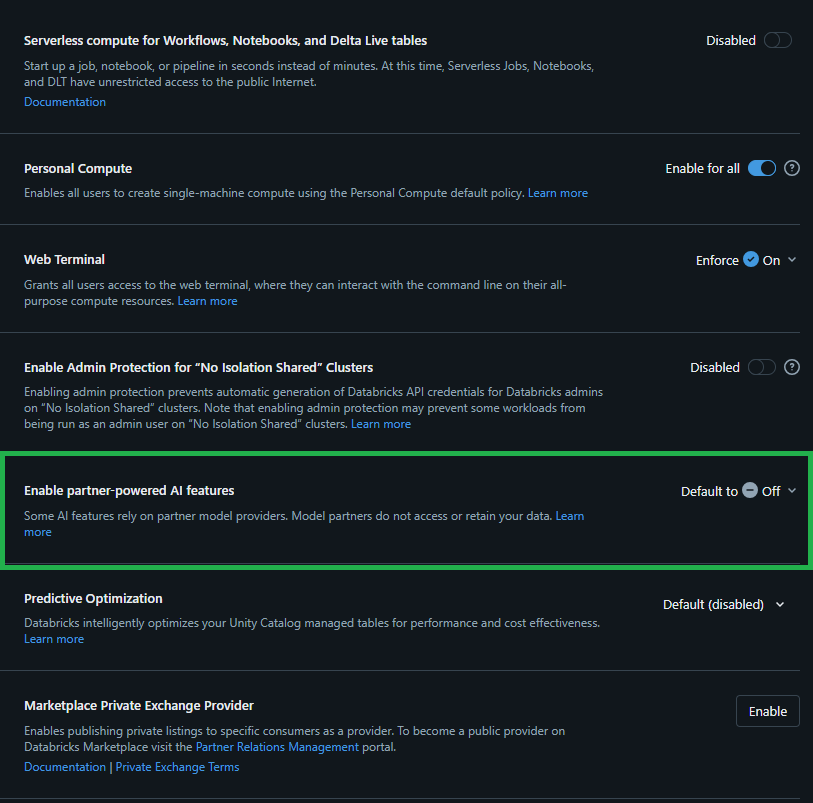

Step 8—Look for "Partner-powered AI features"

Within the “Feature enablement” section, find the “Partner-powered AI features” setting.

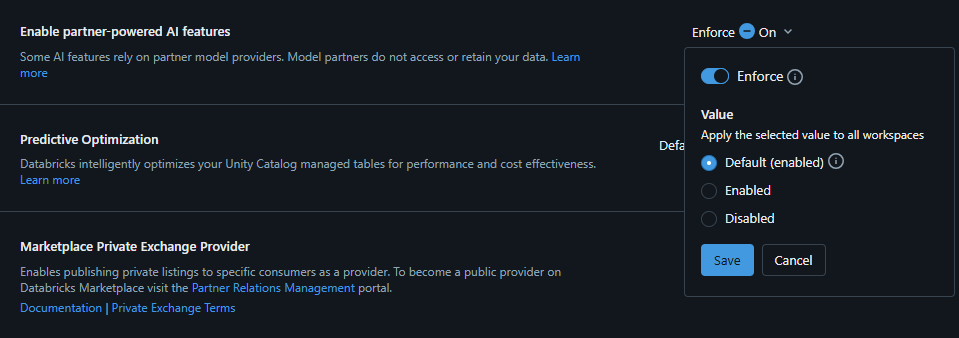

Step 9—Toggle to Enable Databricks Assistant

Finally, click the dropdown button, toggle the Enforce switch, and then select Default (enable) to activate Databricks Assistant for your workspace.

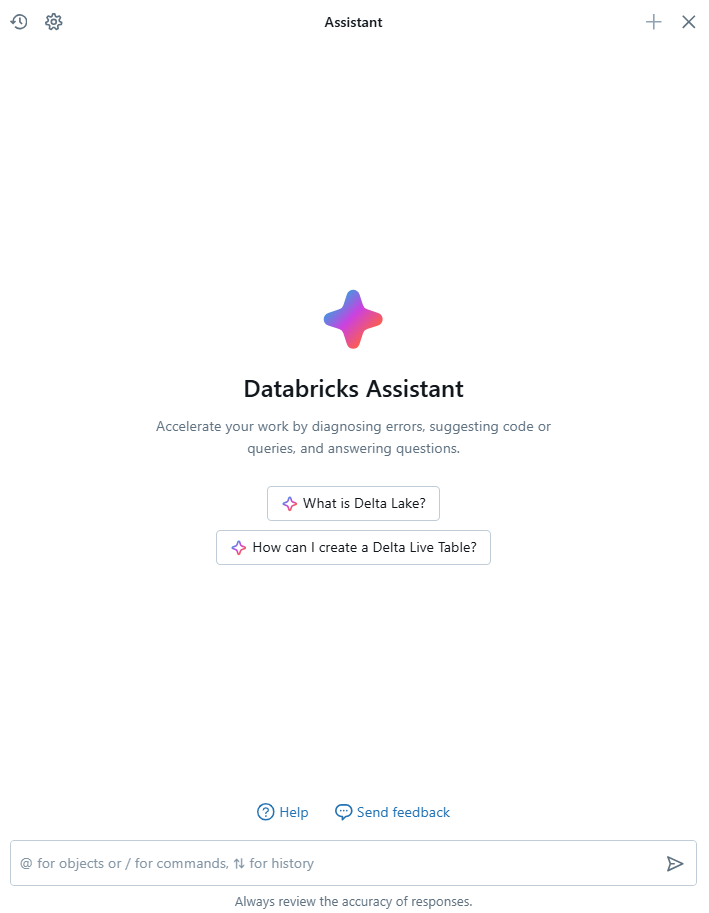

Once you have enabled it, open the Assistant pane by clicking the Assistant icon in the left sidebar of your Databricks Notebook or Databricks SQL Editor, or by pressing Ctrl + Alt + A.

Start typing your questions and converse normally in the text box at the bottom of the Assistant pane, then press Enter. Databricks Assistant will display the answer right away.

How Databricks Assistant Integrates with the Databricks Ecosystem?

Databricks Assistant is deeply integrated into various components of the Databricks environment. Its integration is seamless and multifaceted, designed to assist users in various tasks, from coding to data management. Below are the key aspects of how Databricks Assistant integrates with the broader Databricks environment.

1) Contextual Interaction

Databricks Assistant operates across different components of the Databricks environment. It provides seamless assistance for tasks such as code generation, query building, and data visualization.

a) Notebook Integration

In Databricks notebooks, users can invoke Databricks Assistant via the Assistant pane or directly in code cells. By pressing Cmd + I (macOS) or Ctrl + I (Windows), users can ask questions in natural language. For example, you can ask the assistant to "Generate a simple line plot using matplotlib".

Databricks Assistant generates:

b) SQL Editor Integration

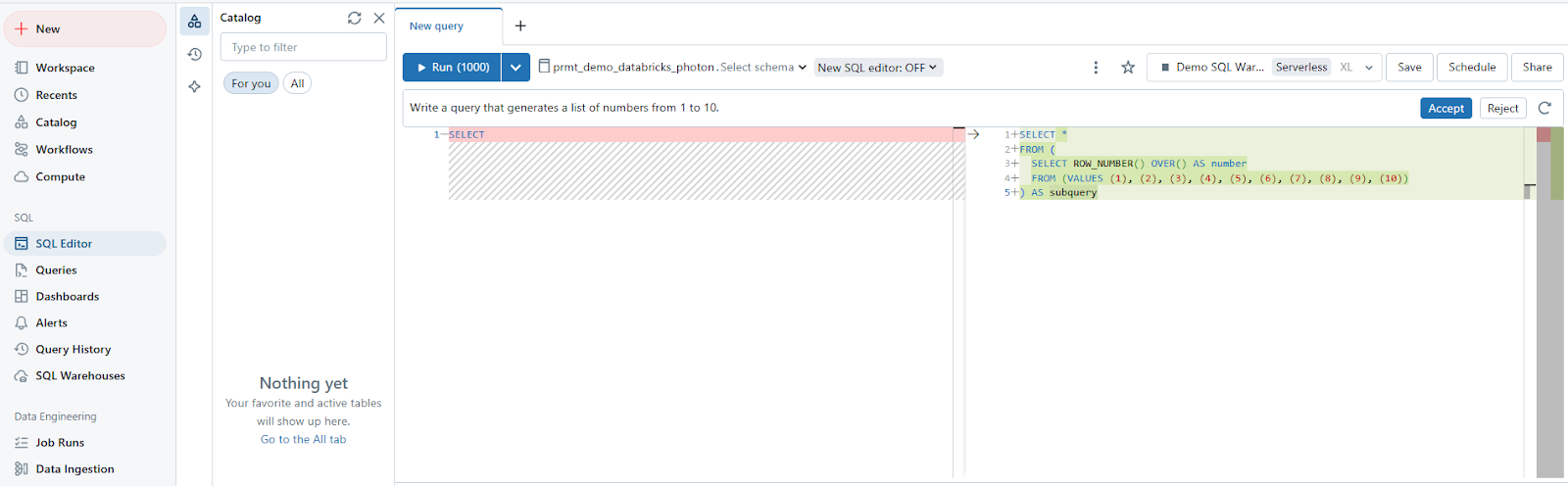

Databricks Assistant helps with SQL query generation by translating natural language into SQL code within the Databricks SQL editor itself. It can handle complex queries, such as filtering and aggregation, and can use Databricks Unity Catalog metadata (such as tables and columns) to improve accuracy. For example, you can ask Databricks Assistant to generate a query that produces a list of numbers from 1 to 10.

Databricks Assistant generates:

Databricks Assistant leverages contextual information from the user's workspace, such as:

- Code and Queries: It understands the code or queries currently being worked on, providing relevant suggestions or corrections.

- Table and Column Metadata: By accessing Databricks Unity Catalog metadata, it can generate accurate SQL queries based on existing tables and columns within the user's environment.

- User History: The Assistant utilizes previous interactions to refine its responses, making it more personalized and effective over time.

2) Debugging and Error Resolution

Databricks Assistant has a feature called Quick Fix, which automatically corrects common errors like syntax mistakes, unresolved columns, and type conversions. It streamlines the debugging process for both Python and SQL errors. Users can invoke the assistant for error explanations and solutions using commands like /fix, improving overall productivity.

3) Integration Across Databricks Features

Beyond coding assistance, the Databricks Assistant integrates with several other features:

a) Dashboard Integration

Databricks Assistant helps when creating visualizations in Databricks dashboards. After selecting a dataset and adding a visualization widget, users can prompt the Assistant to generate specific charts. It allows for fast chart creation by understanding user descriptions and generating accurate visualizations based on the dataset.

b) Documentation Access

Databricks Assistant is integrated with the platform's documentation, allowing users to quickly access relevant material and solutions without leaving their workspace. It helps users find functionalities, troubleshoot problems, and learn more about available features.

4) Databricks Unity Catalog Integration

When Databricks Unity Catalog is enabled, Databricks Assistant becomes more powerful, as it can access metadata such as table and column descriptions, relationships, and data lineage. This allows the Assistant to generate more precise queries, tailored to the specific data models in the user’s environment.

What is the pricing for Databricks Assistant?

Databricks Assistant is available at no additional cost. Users are not charged for the Assistant itself; they pay only for the compute resources they use while running their workloads, such as notebooks, queries, or jobs. This compute usage is billed in Databricks Units (DBUs), which represent the processing power consumed per hour, depending on the workload complexity and infrastructure chosen (e.g., AWS, Azure, or Google Cloud).

Note: There are, however, fair usage limits in place to prevent abuse, though most users are not significantly impacted by these limits.
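To make the DBU-based billing described above more concrete, here is a back-of-the-envelope sketch. The function name and all three input numbers are hypothetical placeholders, not real Databricks rates, and the estimate ignores any separate cloud infrastructure charges; check the pricing page for your cloud and workload type for actual values.

```python
def estimate_compute_cost(dbus_per_hour: float, hours: float, price_per_dbu: float) -> float:
    """Estimated cost = DBUs consumed per hour x hours run x price per DBU."""
    return dbus_per_hour * hours * price_per_dbu


# Hypothetical numbers for illustration only (not real Databricks rates).
cost = estimate_compute_cost(dbus_per_hour=4.0, hours=2.5, price_per_dbu=0.50)
print(f"Estimated compute cost: ${cost:.2f}")  # Estimated compute cost: $5.00
```

The point of the arithmetic is that using the Assistant adds nothing to this figure; only the compute that runs the resulting notebooks, queries, or jobs does.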

9 Effective Techniques to Get the Most Out of Databricks Assistant

1) Be Very Clear with Your Prompts

The quality of responses from Databricks Assistant is highly dependent on the clarity of your input. When asking the Assistant to generate code or explain queries, make sure to provide explicit details, especially when dealing with complex datasets or multiple tables. Clear, specific prompts yield better results. So, instead of asking "show me sales data", try "generate a query to show total sales by region for Q1 2024, sorted in descending order".

Bad prompt: "Show some customer data"

Good prompt: "Create a query to display customer names, their total purchases, and average order value for customers who have made at least 300 purchases in the last 30 days"

2) Test Code Snippets Directly in the Assistant

Use Databricks Assistant panel as a scratchpad to iteratively develop and test code before adding it to your notebook. This allows for rapid iteration and feedback loops, ensuring that the code suggestions are not only syntactically correct but also optimized for your specific environment.

3) Use Table Context for Accurate Responses

Before asking questions about data, use the "Find Tables" action to help the assistant understand which tables are relevant.

For example:

User: "Find tables related to customer orders"

Assistant: Found the following relevant tables:

1. ….

2. ….

3. ….

User: "Now, show me the average order value by customer segment"

4) Iteratively Test Code in the Databricks Assistant Panel

The Databricks Assistant panel maintains conversation history, allowing you to refine and improve code gradually. You can adjust parameters, test partial queries, or modify transformations in the Assistant panel itself, which gives you rapid iteration and immediate feedback, especially when developing complex pipelines or debugging multi-step processes. As code is generated or modified, you can execute and refine it without switching contexts, streamlining your development cycle.

5) Use Slash Commands for Quick Prompts

Slash commands are shortcuts to predefined actions, giving you a fast way to trigger common tasks in Databricks Assistant. Here is the list of available slash commands.

| Prompt | Functionality |

|---|---|

| / | Displays a list of common commands |

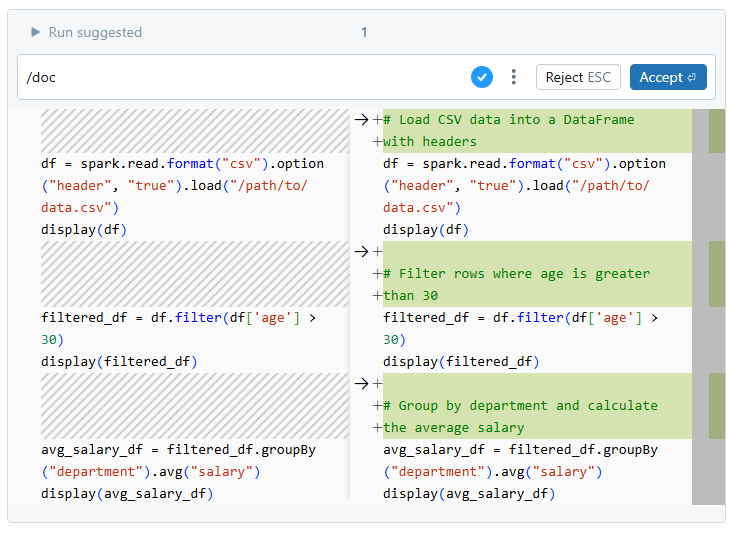

| /doc | Comments the code in a diff view |

| /explain | Provides explanations for the code in a cell |

| /fix | Suggests fixes for any code errors in a diff view |

| /findTables | Searches for relevant tables using Databricks Unity Catalog metadata |

| /findQueries | Searches for relevant queries based on Databricks Unity Catalog metadata |

| /prettify | Formats code for improved readability |

| /rename | Suggests new names for notebook cells and other elements |

| /settings | Allows adjustments to notebook settings directly from Assistant |

For example, typing /explain before a block of code will trigger the Assistant to break down and explain the logic, while /prettify formats code for improved readability.

6) Optimize Workflow with Notebook Cell Actions

Cell Actions enable users to interact with Databricks Assistant and generate code directly within notebooks, without needing the chat window. These actions include shortcuts for quickly accessing common tasks, such as documenting, debugging, and explaining code.

For instance, if you want to add a comment to your code, you can use a Cell Action command like /doc, or you can request the Assistant to generate a comment by typing a prompt, such as “write a comment for this code”.

7) Provide Examples of Your Row-Level Data Values

Since the assistant doesn't have access to actual data values, providing examples helps it generate more accurate code.

Example:

“Write a SQL query to calculate the average salary for employees from the 'Employee' table. The table contains columns like EmployeeID, EmployeeName, Salary, and Department. For example, in one row, EmployeeID = 101, EmployeeName = 'Elon Musk', Salary = 100000, and Department = 'Engineering'.”

As you can see, in this case, by including actual row-level data values (such as EmployeeID = 101 and Salary = 100000), the Assistant can generate more accurate SQL queries or transformations tailored to your dataset structure.

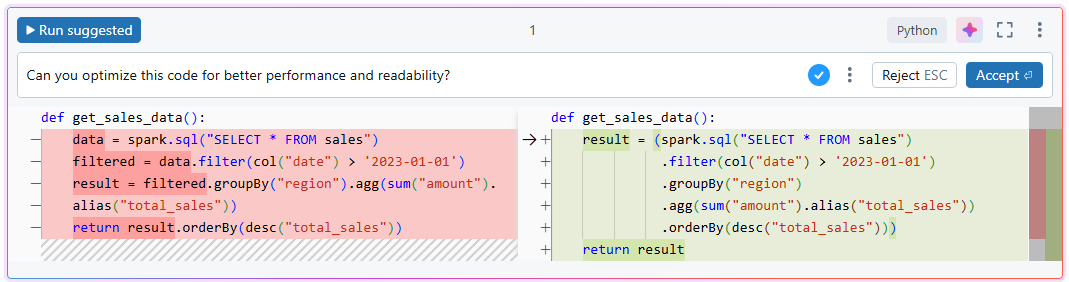
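To show what the query requested in that prompt boils down to, here is a small self-contained sketch. It uses Python's built-in `sqlite3` module as a stand-in for a Databricks table, which is purely an assumption for local illustration, and the two extra employee rows are made up so the average has more than one input.

```python
import sqlite3

# In-memory SQLite database standing in for a Databricks table (assumption).
conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE Employee ("
    "EmployeeID INTEGER, EmployeeName TEXT, Salary REAL, Department TEXT)"
)
conn.executemany(
    "INSERT INTO Employee VALUES (?, ?, ?, ?)",
    [
        (101, "Elon Musk", 100000, "Engineering"),          # row from the prompt
        (102, "Example Employee A", 80000, "Engineering"),  # hypothetical row
        (103, "Example Employee B", 60000, "Sales"),        # hypothetical row
    ],
)

# The query the prompt asks for: average salary across all employees.
average_salary = conn.execute("SELECT AVG(Salary) FROM Employee").fetchone()[0]
print(average_salary)  # 80000.0
conn.close()
```

Because the sample row tells the Assistant that Salary is numeric and Department is a text label, it has what it needs to pick the right aggregate and, if asked, the right grouping column.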

8) Refactor and Optimize Code

Use Databricks Assistant to improve existing code. You can ask it to make your code more efficient or more readable, or to follow specific best practices.

For example:

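    # Original code
    # Column helpers used below; note that this `sum` is the Spark aggregate, not Python's built-in
    from pyspark.sql.functions import col, desc, sum

    def get_sales_data():
        # `spark` is the SparkSession that Databricks notebooks provide
        data = spark.sql("SELECT * FROM sales")
        filtered = data.filter(col("date") > '2023-01-01')
        result = filtered.groupBy("region").agg(sum("amount").alias("total_sales"))
        return result.orderBy(desc("total_sales"))

Ask Databricks Assistant to optimize:

User: "Can you optimize this code for better performance and readability?"

Optimized code suggested by Databricks Assistant: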

9) Generate Visualizations In Databricks Dashboard

Databricks Assistant can help streamline the creation of visualizations in your Databricks dashboards. By providing prompts, you can generate charts directly from your dataset.

Steps to Use Databricks Assistant for Visualizations in Databricks Dashboard:

Step 1—Open Dashboards

Click the Dashboards icon to view the list of available dashboards.

Step 2—Select a Dashboard

Choose a dashboard title to start editing.

Step 3—Verify Dataset

Go to the Data tab to confirm the dataset being used in the dashboard. For example, a dataset might be defined by a SQL query on the samples catalog. At least one dataset must be present for chart generation. If multiple datasets are available, Databricks Assistant will select the most relevant one based on your input.

Step 4—Switch to Canvas

Return to the Canvas tab to begin working on your dashboard.

Step 5—Add Visualization Widget

Click the "Add Visualization" icon and place the widget on the canvas.

Step 6—Enter a Prompt

Type a description of the chart you want in the widget’s prompt box, then press Enter. The Databricks Assistant will generate a chart based on your description. You can accept or reject it, or revise the prompt to generate a new chart. If needed, use the "Regenerate" button to retry the chart generation.

Step 7—Editing Databricks Dashboard Visualizations

If the generated chart isn’t satisfactory, modify your input prompt to refine the chart.


Conclusion

Databricks Assistant is a big leap forward in AI-powered coding assistance, built specifically for the Databricks ecosystem. It uses DatabricksIQ to provide smart, context-aware support, which can significantly speed up your work as a data professional. To get the most out of it, you need to know what it can do and how to use it effectively. Put the tips and techniques in this article to the test and see how they work with different kinds of queries. With a bit of practice, this assistant can become a daily go-to tool. As AI and LLM tech keeps improving, Databricks Assistant is likely to get even more advanced.

In this article, we have covered:

- What is Databricks Assistant?

- Step-by-Step Guide to Enable Databricks Assistant

- How Databricks Assistant Integrates with the Databricks Ecosystem?

- What is the pricing for Databricks Assistant?

- 9 Effective Techniques to Get the Most Out of Databricks Assistant

… and more!

FAQs

What is the Databricks Assistant?

Databricks Assistant is a context-aware AI tool designed to assist users in creating notebooks, queries, and dashboards within the Databricks platform.

How much does Databricks Assistant cost?

All current Databricks Assistant capabilities are available at no additional cost for all customers. Users only pay for the compute resources they use to run their notebooks, queries, and jobs.

What data is being sent to models by Databricks Assistant?

Databricks Assistant sends code, metadata, and context from your current notebook cell or SQL Editor tab, table and column names/descriptions, previous questions, and favorite tables but adheres strictly to privacy protocols outlined by your organization’s policies.

How does Databricks Assistant handle privacy?

Databricks Assistant respects Databricks Unity Catalog permissions and only sends metadata for tables that the user has permission to access. It uses secure third-party services like Azure OpenAI and has opted out of Abuse Monitoring to ensure data privacy.

What languages does Databricks Assistant support?

Databricks Assistant primarily supports Python and SQL, with some capabilities for R and Scala. It's optimized for Databricks-supported programming languages, frameworks, and dialects.

Do I need Unity Catalog to use Databricks Assistant?

No; however, enabling Databricks Unity Catalog enhances functionality regarding metadata management across workspaces.

Does Databricks Assistant respect Unity Catalog permissions when sending metadata to models?

Yes, all data sent to the models respects the user's Databricks Unity Catalog permissions. The assistant will not send metadata relating to tables that the user doesn't have permission to see.

Will Databricks Assistant execute unsafe code?

No, Databricks Assistant does not automatically run code on your behalf. Users are advised to review and test AI-generated code before running it, as AI models can sometimes make mistakes or misunderstand intent.

Can I use Databricks Assistant with tables containing regulated data?

Yes, you can use the assistant with tables that process regulated data (PHI, PCI, IRAP, FedRAMP), but you must comply with specific requirements, such as enabling the compliance security profile and adding the relevant compliance standard as part of the configuration.