Dealing with data-heavy applications? ClickHouse is a name that probably keeps popping up. And for good reason—it's particularly good at fast query processing and high data compression. ClickHouse is a beast of a database. It’s fully open source, uses columnar storage, and is built specifically for OLAP (Online Analytical Processing). Its story starts back at Yandex, the search giant, which began developing it internally around 2008–2009 for its demanding Metrica web analytics platform. They needed something seriously fast. After proving its worth internally, Yandex open sourced ClickHouse in 2016 under the Apache 2.0 license. Since then, it's built a solid reputation for lightning-fast queries (props to it unique techniques like vectorized execution), impressive data compression (columnar storage helps a lot here), and the ability to scale out across many servers to handle massive volumes of information.

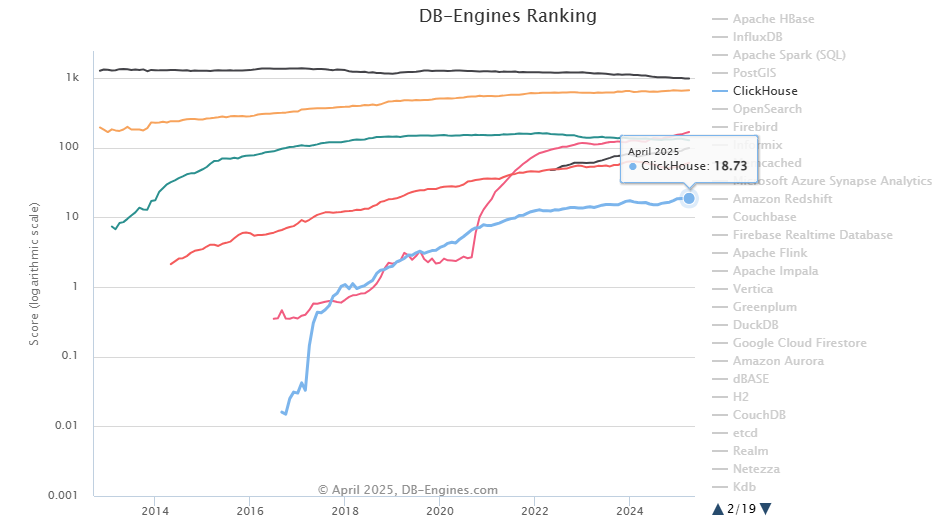

How popular is it? According to DB-Engines, ClickHouse is one of the fastest-growing high-performance, column-oriented SQL database systems for OLAP out there. As you can see in the graph below, ClickHouse is sitting at 31st place and climbing, with a score of 18.73 (as of April 2024).

ClickHouse also pulled in a hefty $250M Series B funding round at a $2B valuation, a clear sign it’s got serious momentum. And it’s not just small startups using it. Big tech names like Yandex (where it all started), Cloudflare, Cisco, Tencent, Lyft, eBay, IBM, Uber, PayPal, and thousands more rely on ClickHouse for demanding analytics needs.

But even with all that going for it, ClickHouse isn’t perfect. It has its quirks and technical limitations. Its laser focus on OLAP speed means it often struggles with transactional (OLTP) workloads. Complex JOIN operations can sometimes be slower compared to other systems. And trying to fetch a single, specific row quickly (a point lookup)? Often painfully inefficient. That’s likely why you’re here—exploring ClickHouse alternatives, looking for a platform that might be a better technical fit, easier to manage, or simply more suited to your specific analytical needs.

In this article, we’ll break down what ClickHouse does best, its core architecture, its strengths, and those key limitations. Then, we’ll compare it to 10 notable ClickHouse alternatives across five categories: cloud data warehouses/platforms, traditional relational databases, real-time analytics or OLAP systems, time-series databases, and embedded analytics tools.

In a hurry? Jump directly to the comparisons for each ClickHouse alternative:

What Is Clickhouse Used For?

ClickHouse is built from the ground up as a distributed columnar database management system optimized for analytical workloads. It can be used to run fast analytical queries on huge datasets, especially when processing real-time logs, monitoring metrics, and analyzing time-series data. Many teams leverage ClickHouse when they need rapid aggregations on continuously incoming data, such as in monitoring systems or real-time dashboards.

How to Get Started with ClickHouse

What You Can Do With ClickHouse?

So, what do you use it for? It shines where you need to process and analyze huge amounts of data without twiddling your thumbs waiting for results. Here’s the quick rundown:

1) Real-Time Analytics — ClickHouse processes high-volume queries in near real-time. You run aggregations quickly over billions of rows, making it suitable for real-time dashboards.

2) Log and Event Data Analysis — ClickHouse handles log data and event streams well. Its design lets you sift through massive amounts of events quickly, which works well for troubleshooting and monitoring.

3) Time-Series Data Processing — While specialized time-series databases exist (some are covered as ClickHouse alternatives later), ClickHouse is very capable here due to its columnar nature and query speed.

4) High Throughput Ingestion — You can ingest hundreds of millions of rows per day. Its columnar storage minimizes the work your queries need to do, allowing you to handle vast amounts of data without lag.

….and many more!

ClickHouse Architecture Breakdown

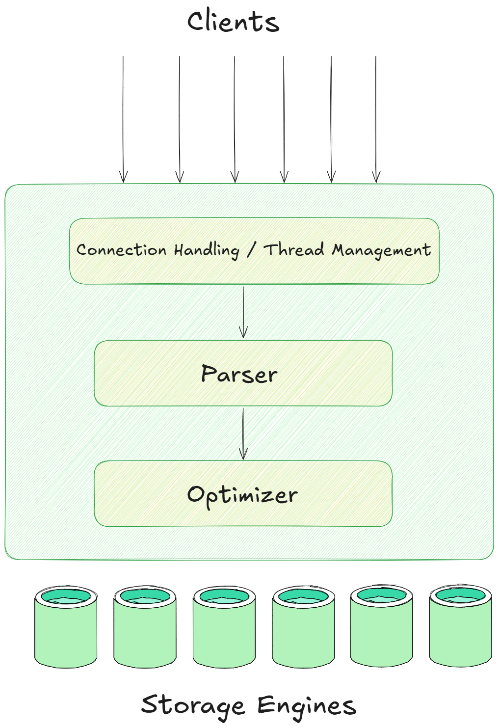

Now, let’s break down ClickHouse’s architecture. ClickHouse splits its architecture into three distinct layers:

- Query Processing Engine

- Storage Layer (Table Engines)

- Integration Layer

Let's break down each layer.

1) ClickHouse Architecture Layer 1—Query Processing Layer

The Query processing layer is where ClickHouse handles your SQL queries. It optimizes and executes them quickly, even with massive datasets. Here’s how it works:

➥ Parsing — Your SQL query is converted into an Abstract Syntax Tree (AST).

➥ Optimization (Logical & Physical) — This is where ClickHouse gets clever.

- Logical — The AST becomes a logical plan. ClickHouse applies rules here like pushing filters down closer to the data source, calculating constant expressions, rearranging conditions for efficiency.

- Physical — The logical plan is then adapted based on how the data is actually stored.

➥ Execution Plan & Scheduling — ClickHouse creates a detailed step-by-step plan (a pipeline of operators) for executing the query. It figures out how to break this plan down to run parts in parallel across available CPU cores, and potentially across multiple servers if your data is sharded.

➥ Execution — The query is executed using a vectorized engine across multiple threads and nodes, leveraging SIMD instructions where possible.

Core Components & Techniques Making It Fast:

➥ Vectorized Execution

ClickHouse processes data in batches (vectors) of column values rather than row by row. Due to this vectorized execution, it significantly reduces overhead and improves CPU cache utilization. For computationally intensive operations, ClickHouse can use LLVM to compile optimized native machine code at runtime, which gets cached for potential reuse.

➥ Multi-Level Parallelism

Parallelism in ClickHouse operates at three levels:

- Data Elements (SIMD) — Uses Single Instruction, Multiple Data instructions to perform the same operation on multiple data points simultaneously within a single CPU cycle.

- Data Chunks (Multi-Core) — Processes different data chunks across multiple CPU cores, breaking the query plan into independent execution lanes.

- Table Shards (Multi-Node) — Orchestrates work across multiple ClickHouse servers for sharded tables, pushing work to nodes holding data shards.

➥ Advanced Query Optimizations

ClickHouse uses several optimization techniques:

- Query Optimization — Applies techniques like constant folding, filter pushdown, and common subexpression elimination.

- Data Pruning — Uses primary key and skipping indices to avoid reading unnecessary data.

- Hash Table Optimizations — Selects from over 30 specialized hash table implementations based on key types and cardinality.

- Join Execution — Supports multiple join algorithms including hash joins, sort-merge joins, and index joins.

➥ Handling Multiple Queries at Once

ClickHouse needs to juggle multiple users and queries. It manages this through:

- Concurrency Control — Dynamically adjusts how many threads a single query gets based on system load and limits you can configure.

- Memory Limits — Tracks memory usage per query, user, and server. If a query gets too greedy (e.g: for a huge aggregation), it can spill intermediate data to disk and use external algorithms instead of crashing the server.

- I/O Scheduling — Allows throttling disk access based on configured bandwidth limits or priorities.

2) ClickHouse Architecture Layer 2—Data Storage Layer

The storage layer forms the foundation of ClickHouse's performance. It revolves around table engines that determine how data is physically stored and accessed. When you CREATE TABLE, you choose an engine, and that choice dictates everything about how data lives:

- Physical storage format and location

- Indexing methods

- Replication and sharding capabilities

- Query performance characteristics

The key players here are the MergeTree* family of engines.

The MergeTree* Family Engine

If you're doing serious analytics in ClickHouse, you're almost certainly using a MergeTree variant (MergeTree, ReplacingMergeTree, AggregatingMergeTree, ReplicatedMergeTree, etc.). These engines are inspired by Log-Structured Merge Trees (LSM Trees) but have their own distinct implementation.

How is Data Stored?

When you insert data, ClickHouse writes it into immutable "parts" (directories containing column files). Each part contains a chunk of rows sorted according to the table's PRIMARY KEY.

Note: this primary key defines sort order, not uniqueness like in OLTP databases.

ClickHouse constantly runs background processes to merge smaller parts into larger, more optimized ones. Within each part directory, data for each column is stored in its own separate file.

Data within those column files is compressed (LZ4 by default, ZSTD often better). Because data in a column is typically similar, it compresses very well, saving storage space and often speeding up queries (less data to read from disk).

Column data is logically divided into "granules" (typically 8192 rows). ClickHouse doesn't index every row. Instead, for each part, it stores a small index file (primary.idx) containing the primary key values for the first row of each granule. Since the data within the part is sorted by this key, ClickHouse can quickly scan this small index file (which often fits in memory) to determine which granules might contain the data matching your WHERE clause conditions on the primary key, skipping over potentially massive amounts of irrelevant data blocks.

Getting Data In (Ingestion)

Batching inserts is generally recommended. Each INSERT statement can create a small part, and merging too many tiny parts is inefficient. ClickHouse also supports asynchronous inserts where it buffers rows and writes parts periodically, which is better for high-frequency, small inserts.

Data Skipping

Besides the primary key index, you can define Skipping Indices on other columns or expressions. These are lightweight structures built over blocks of granules:

minmax(Stores the minimum and maximum value for the expression within that block)set(N)(Stores the unique values within the block (up to N values))Bloom_filter(A probabilistic index good for checking existence).

ClickHouse can also use Projections, which are like hidden, pre-aggregated or specially sorted copies of your data. If a query matches a projection's definition, ClickHouse might read from the smaller, faster projection instead of the main table.

Transforming Data During Merges

The background merge process can do more than just combine parts:

- ReplacingMergeTree — During merges, keeps only the latest version of rows that share the same sorting key (based on an optional version column). Handles updates/deduplication.

- AggregatingMergeTree — Combines rows with the same sorting key by merging intermediate states of aggregate functions (e.g: summing sums, merging HyperLogLog states for unique counts). Often used with Materialized Views for incremental pre-aggregation.

- TTL (Time-To-Live) — Can automatically delete rows or even move entire old parts to different storage (like S3) based on time expressions during merges.

Handling Updates/Deletes

OLAP systems aren't built for frequent modifications, but ClickHouse offers various ways:

Mutations— These are heavyweight background operations that rewrite entire data parts containing affected rows. They are asynchronous and non-atomic. Use them sparingly.Lightweight Deletes— A faster option that marks rows as deleted using an internal bitmap. Queries automatically filter these marked rows. Physical removal happens later during merges. Faster to execute than mutations but might slightly slow down subsequent reads until merges clean things up.

Replication and Consistency:

- You typically use

Replicated*MergeTreeengines for fault tolerance. - Replication uses a multi-master approach coordinated via ClickHouse Keeper (a built-in Raft implementation, similar to ZooKeeper).

- Replicas fetch missing parts from each other and apply operations logged in Keeper to reach eventual consistency.

- ClickHouse provides snapshot isolation for reads (queries see a consistent view of data parts that existed when the query started). It's not fully ACID compliant in the traditional OLTP sense, particularly regarding durability guarantees on individual writes (it relies on the OS buffer cache by default, so a sudden power loss could lose the most recent unflushed data).

Other Table Engines:

While MergeTree is dominant, but others also do exist:

Sharding and Replication Layer (Built on Table Engines)

Sharding— Large tables are often split across multiple nodes (shards). Each shard typically runs a local ReplicatedMergeTree table. Queries targeting the logical table use the Distributed table engine, which acts as a proxy, scattering the query to the shards and gathering results.Replication— Using ReplicatedMergeTree within each shard guarantees data is copied across nodes for high availability.

3) ClickHouse Architecture Layer 3—Integration Layer

ClickHouse isn’t just about performance; it’s also about integration. The integration layer connects ClickHouse to external systems, enabling seamless data exchange.

So, how ClickHouse handles data integration? ClickHouse uses a pull-based approach, connecting directly to remote data sources to retrieve data. It supports over 50 integration table functions and engines, which includes:

- Relational Databases (MySQL, PostgreSQL, SQLite)

- Object Storage (AWS S3, Google Cloud Storage (GCS), Azure Blob Storage)

- Streaming Systems (Kafka)

- NoSQL/Other Systems (Hive, MongoDB, Redis)

- Data Lakes (Iceberg, Hudi, DeltaLake)

- Standard protocols like ODBC - via the ODBC table function.

On top of that, ClickHouse can read and write numerous formats like CSV, JSON, Parquet , Avro , ORC, Arrow, Protobuf .

The ClickHouse Query Lifecycle (Simplified):

To understand how these layers interact, let's follow a query through the system:

- Client sends SQL query.

- Server parses, analyzes, and optimizes the query.

- Plan identifies target parts/granules using indexes.

- Executor requests needed columns/ranges from storage.

- Data read, decompressed, processed via vectorized pipeline (parallelized).

- Results aggregated/merged (potentially shuffling data between nodes).

- Final result sent to client.

Check out the article to learn more in-depth about ClickHouse Architecture.

So, What Is Clickhouse Best For?

ClickHouse is best for real-time analytics on large datasets. Here are the features that make ClickHouse stand out:

➥ Columnar Storage. ClickHouse reads only the columns your query needs, cutting disk I/O and speeding up scans.

➥ MergeTree Engine Family. ClickHouse provides powerful table engines which isn't just one engine, but a family (MergeTree, ReplicatedMergeTree, AggregatingMergeTree, .....) that can handle high ingest rates and support features like deduplication and incremental aggregation.

➥ Vectorized Query Execution. ClickHouse processes data not one value at a time, but in batches (arrays or "vectors") of column values.

➥ Parallel & Distributed Processing. You can run ClickHouse on a single server or scale it out across a cluster. It splits queries across CPU cores and nodes, processing data in parallel.

➥ Data Compression. ClickHouse supports advanced compression algorithms like LZ4 and ZSTD. LZ4 for speed; ZSTD for higher compression.

➥ Real-Time Analytics. You can ingest data into ClickHouse in real-time with no locking. It’s designed for high-throughput inserts, so you can analyze fresh data as soon as it lands.

➥ Materialized Views. ClickHouse supports Materialized Views which allow you to pre-compute and store the results of aggregation queries.

➥ Pluggable External Tables and Integrations. ClickHouse can read from and write to many external systems (S3, HDFS, MySQL, PostgreSQL, Kafka, etc.,) and supports various data formats (CSV, JSON, Parquet, Avro, ORC, Arrow, Protobuf).

➥ Scalability (Horizontal). ClickHouse is designed to scale horizontally. You can add more nodes to your cluster as your data grows. Its distributed architecture means you’re not locked into a single box, and you can keep scaling without major headaches.

➥ SQL Support with Extensions. ClickHouse supports a rich SQL dialect with various extensions. You can run complex SQL queries on your data, like joins, aggregations, and window functions. Not only that, ClickHouse also allows you to create user-defined functions to extend its capabilities.

➥ Hardware Efficiency. ClickHouse is generally known for using server resources (CPU, RAM, I/O) efficiently to achieve its performance.

...and so much more!!

These features make ClickHouse a top performer for OLAP, but also define areas where a ClickHouse alternative might be needed.

What Are the Drawbacks of Clickhouse?

Okay, let's talk about the flip side. ClickHouse is fast, sure, but it's definitely not the right tool for every single job. Its strengths come with trade-offs, and ignoring them can lead to some serious headaches down the road.

➥ OLAP Focus (Not for OLTP). ClickHouse is primarily designed for OLAP (Online Analytical Processing), which means it excels at handling complex queries and large datasets. But, it’s not perfect for online transaction processing (OLTP) workloads. If your use case involves a lot of transactional operations, such as frequent updates, inserts, or deletes, ClickHouse might not be the best fit.

➥ Transactional Support? Not Here. ClickHouse doesn’t offer full ACID transactions. You can’t rely on it for workloads that need strict consistency or rollback support. If you’re used to databases like PostgreSQL or MySQL, you’ll miss this. It’s designed for OLAP, not OLTP. So, if your use case needs lots of updates, deletes, or row-level locking, you’ll hit a wall.

➥ Inefficiency for Point Lookups. Sparse indexing means ClickHouse isn’t efficient at fetching single rows by key. It’s blazing fast for scanning and aggregating millions of rows, but if you want to grab one record by ID, you’ll be waiting longer than you’d expect. It’s not built for transactional, row-based workloads.

➥ Joins? Only If You Must. Joins can be a real hassle. ClickHouse can handle them, but performance takes a hit when things get complex, especially with huge tables. You’ll often have to flatten your data—combine related tables into one—before loading it in. This adds pipeline complexity and can slow things down.

➥ Non-Standard SQL Dialect. You’ll find that ClickHouse’s SQL dialect is missing some features. No window functions (at least in earlier versions), and its join implementation is different from what you might expect if you’re coming from MySQL or PostgreSQL. Migrating existing queries can be a hassle.

➥ Cluster Management Can Be a Headache. Running ClickHouse at scale means managing clusters, replication, and sharding yourself. Cluster expansion often requires heavy, manual data rebalancing. If your team isn’t experienced with distributed systems, this can be a real challenge.

➥ Cloud Integration? Not Plug-and-Play. ClickHouse can be a bit tricky to work with when it comes to cloud-native tools and managed services. Integrating with ETL, data visualization, or reporting tools can take more work than with other databases that have broader ecosystem support.

These limitations aren't deal-breakers, but they're a trade-off since ClickHouse is built for specific use cases. Knowing these limitations will help you decide if it's the right fit or if one of the ClickHouse alternatives might better suit your needs.

10 True ClickHouse Alternatives: Which One Wins?

Okay, you see the trade-offs. Now, let's look at the ClickHouse alternatives.

ClickHouse is powerful, but the analytics landscape is broad. Let's explore alternatives, grouped by their primary function. Here we have categorize these alternatives to make comparisons clearer:

Let's dig in!

TL;DR:

If you don't want to read the entire post, here's a high-level summary of all ClickHouse alternatives based on several key factors.| 🔮 | Architecture | Primary Use Case | Storage Model | Deployment Model | Compute Scaling Model | Storage Scaling Model | Real-Time Ingestion Strength | SQL Dialect / Compatibility | JOIN Performance | ACID Compliance | OLTP Suitability | Open Source Core? | Cost Model(s) |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| ClickHouse | Columnar OLAP (Vectorized, MergeTree) | Real-time OLAP, Logs, Metrics, Events | Columnar (MergeTree) | Self-managed / Cloud Managed | Horizontal (Shards/Replicas) | Coupled / Independent | High | Extended SQL (Analytics Focus) | Good (Improving) | No (Atomic Operations) | No | Yes (Apache 2.0) | Infra/Ops (OSS), Usage (Cloud) |

| Snowflake | Cloud DW (MPP, Shared Data) | General BI/DW, Data Sharing, Elastic Workloads | Columnar (Micro-partitions) | Cloud Managed (SaaS) | Horizontal (Elastic Warehouses) | Independent (Object Store) | Medium | High (Standard SQL) | Strong (MPP) | Yes | No | No | Usage (Compute Credits + Storage) |

| Databricks | Lakehouse Platform (Spark/Photon) | Unified ETL, Streaming, BI, ML/AI | Columnar (Delta Lake/Parquet) | Cloud Managed (PaaS) | Horizontal (Spark Clusters) | Independent (Object Store) | High | High (Standard SQL + Spark APIs) | Strong (Spark SQL) | Yes (via Delta Lake) | No | Yes (Spark, Delta, etc.) | Usage (Compute DBUs + Storage) |

| Google BigQuery | Cloud DW (Serverless MPP, Dremel) | General BI/DW, Serverless Analytics, ML | Columnar (Capacitor) | Cloud Managed (Serverless) | Horizontal (Auto Slots) | Independent (Object Store) | High | High (Standard SQL) | Strong (MPP) | Yes | No | No | Usage (Scan/Slots + Storage) |

| Amazon Redshift | Cloud DW (MPP, RA3/Serverless Focus) | General BI/DW (AWS Focused) | Columnar | Cloud Managed (PaaS) | Horizontal (Nodes/RPUs) | Coupled / Independent | Medium | High (PostgreSQL-like) | Strong (MPP, Tuned) | Yes | No | No | Usage (Nodes/RPUs + Storage) |

| MySQL | RDBMS (Row-Store, InnoDB) | OLTP, Application Backend | Row (InnoDB B+Tree) | Self-managed / Cloud Managed | Vertical, Read Replicas | Coupled (Node Storage) | Low (for Analytics) | High (Standard SQL) | Strong (Relational) | Yes (InnoDB) | Yes | Yes (GPL) | Infra/Ops (OSS), Instance (Cloud) |

| PostgreSQL | ORDBMS (Row-Store, MVCC) | OLTP, Complex Apps, GIS, JSON | Row (Heap Tables, B+Tree) | Self-managed / Cloud Managed | Vertical, Read Replicas | Coupled (Node Storage) | Low (for Analytics) | High (Standard SQL, Rich Types) | Strong (Relational) | Yes | Yes | Yes (PostgreSQL License) | Infra/Ops (OSS), Instance (Cloud) |

| Apache Druid | Real-time OLAP (Microservices, External Deps) | Low-Latency Dashboards, Events, Metrics | Columnar (Segments, Bitmaps) | Self-managed / Cloud Managed | Horizontal (Node Types) | Independent (Deep Store) | High | Medium (Druid SQL, Improving) | Limited (Lookups Best) | No (Immutable Segments) | No | Yes (Apache 2.0) | Infra/Ops (OSS), Usage (Cloud) |

| Apache Pinot | Real-time OLAP (Microservices, External Deps) | User-Facing Analytics (Lowest Latency), Upserts | Columnar (Segments, Pluggable Indices) | Self-managed / Cloud Managed | Horizontal (Node Types) | Independent (Deep Store) | High | Medium (Pinot SQL, Improving) | Limited (Lookups Best) | No (Segment Immutability / Upserts) | No | Yes (Apache 2.0) | Infra/Ops (OSS), Usage (Cloud) |

| TimescaleDB | Time-Series DB (Postgres Extension) | Time-Series + Relational Analytics | Row (+ Columnar Compression) | Self-managed / Cloud Managed | Vertical (Postgres) + Multi-node | Coupled (Node Storage) | High | High (Standard PostgreSQL SQL) | Strong (Relational) | Yes | Yes (Postgres Core) | Yes (Apache 2.0 Core) | Infra/Ops (OSS), Usage (Cloud) |

| DuckDB | In-Process OLAP Library | Local/Embedded Analytics, File Querying | Columnar | Embedded Library | Single-Node (Vertical) | Single File / In-Memory | N/A | High (PostgreSQL-like) | Good (Single Node) | Yes (In-Process) | N/A | Yes (MIT License) | Library (Free) |

☁️ ClickHouse Alternatives: Category 1—ClickHouse vs Cloud Data Warehouses / Platforms

These platforms are cloud-native, often feature separation of storage and compute, and provide managed services. They excel at diverse analytical workloads but differ significantly in their underlying design, performance characteristics, and cost models compared to ClickHouse.

ClickHouse Alternative 1—Snowflake

Snowflake is a widely adopted cloud-native data platform, delivered as a fully managed SaaS (Software-as-a-Service). It aims to provide a single service for data warehousing, data lakes, data engineering, and data science workloads, running transparently on AWS, Microsoft Azure, or Google Cloud Platform infrastructure.

What is Snowflake? - ClickHouse Alternatives - ClickHouse vs Snowflake

Snowflake's Architecture

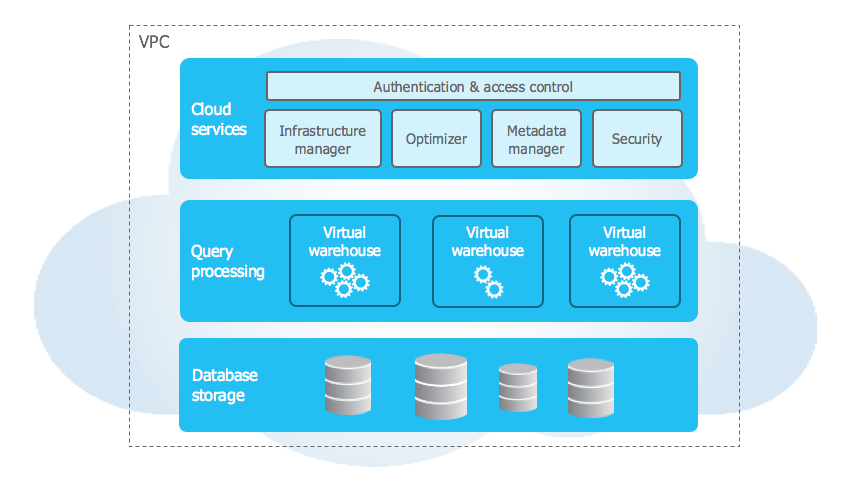

Snowflake architecture is built on three main layers that work together but can scale independently. Snowflake architecture is different from older systems where storage and compute were tied together. It runs completely on public cloud infrastructure (like AWS, Azure, or GCP).

➥ Storage Layer

Snowflake’s storage layer leverages the underlying cloud provider's durable object storage (Amazon S3, Azure Blob Storage, Google Cloud Storage). When you load data, Snowflake reorganizes it into its internal optimized, compressed, columnar format. Data is stored in immutable files known as micro-partitions (typically 50-500MB uncompressed size, but stored compressed). Snowflake automatically manages all aspects of this storage: file organization, compression, encryption, and metadata management. Crucially, Snowflake collects rich metadata about the data within each micro-partition. This metadata is used extensively by the optimizer for data pruning, allowing queries to skip reading micro-partitions that cannot contain relevant data, significantly reducing scan times.

➥ Query Processing (Compute) Layer

Compute power in Snowflake is provided by Virtual Warehouses (VWs). These are independent MPP (Massively Parallel Processing) compute clusters (using underlying cloud instances like EC2 or Azure VMs) that you provision and manage via SQL commands or the UI. Each VW consists of multiple nodes with CPU, memory, and local SSD caching (for intermediate query results and temporary data). When you face a spike, you can scale up a warehouse or add multiple clusters for the same workload to handle concurrency—no manual sharding or capacity planning required.

➥ Cloud Services Layer

The cloud services layer acts as the "brain" of Snowflake, coordinating the entire platform. It's a collection of highly available, distributed services responsible for:

- Authentication and Access Control

- Infrastructure Management

- Metadata Management (catalog, micro-partition statistics)

- Query Parsing, Optimization, and Compilation

- Transaction Management

- Security Enforcement

- Client Connectors (JDBC, ODBC, ....)

This layer runs independently and scales automatically behind the scenes.

This three-layer architecture with decoupled storage and compute is fundamental to Snowflake's elasticity, concurrency handling, and managed service approach.

Snowflake's Key Features

Check out the article below for a comprehensive overview of Snowflake's extensive capabilities, including its architectural foundations, security measures, and key features.

Pros and Cons of Snowflake:

Snowflake Pros:

- You pay separately for storage and compute. Scale your virtual warehouses up or down without touching data size—you only spin extra compute when you need it.

- It's a fully managed SaaS. No OS patches, no manual hardware configurations in a data center. You log in and start loading data.

- Zero‑copy cloning. Snap a copy of terabytes in seconds without doubling storage.

- Time travel and fail‑safe. Go back to data snapshots for up to 90 days.

- Automatic scaling and concurrency. Multi‑cluster warehouses spin up when you hit queuing, so your queries don’t block each other.

- Micro‑partitioning under the hood. Snowflake breaks data into small chunks and prunes non‑relevant ones automatically.

- Rich ecosystem and Snowpark. Build data pipelines in Python, Java or Scala right where your data sits.

- Strong security and compliance. End‑to‑end encryption, role‑based access and certifications like HIPAA and PCI‑DSS keep your data locked down.

- Data sharing and marketplace. Share live data with partners or use third‑party data sets.

Snowflake Cons:

- Cost surprises happen. If you forget to auto‑suspend warehouses or don’t track credit usage, bills can spike faster than you expect.

- Limited infrastructure control. You can’t tweak the OS or network stack; you have to play by Snowflake’s rules.

- Proprietary features and SQL extensions. Porting to another platform means you might need to rewrite your queries and UDFs.

- Data ingestion overhead. Continuous loads via Snowpipe avoid batch windows but add per‑file charges that can add up on lots of small files.

- Query caching can mask true performance. You might think a slow query is fast when you’re really hitting the cache, not raw compute.

- Metadata bloat. With heavy DDL or lots of micro‑partitions, your metadata store can grow, adding slight latency.

- Cross‑cloud replication limits. You can’t replicate freely between AWS, Azure and GCP in one go—you have to pick one per account.

Want to take Chaos Genius for a spin?

It takes less than 5 minutes.

Is Clickhouse Better Than Snowflake?

Alright, let's break down the ClickHouse vs Snowflake comparison. They are both highly capable analytical databases optimized for different goals and operational models. ClickHouse often excels in raw processing speed for specific OLAP tasks, while Snowflake offers a broader, more managed, and elastic cloud data platform experience.

ClickHouse vs Snowflake—Architecture & Deployment Model

The architectural differences are fundamental to their operation, scalability, and cost characteristics.

| 🔮 | Snowflake | ClickHouse |

|---|---|---|

| Core Design | Cloud-native Data Warehouse Platform (SaaS) | Open Source Columnar Analytical Database |

| Deployment | Fully managed SaaS on AWS, Azure, GCP | Flexible: Self-managed (on-prem, cloud VMs), Kubernetes, Managed Service (ClickHouse Cloud, others) |

| Storage/Compute | Decoupled: Centralized object storage, separate compute (Virtual Warehouses). | Traditionally Coupled (Shared-Nothing): Nodes have own storage/compute. Decoupled Option: Can use object storage (S3, GCS, Azure Blob) via MergeTree settings (esp. in ClickHouse Cloud or newer self-managed). |

| Scaling Model | Shared-Data: Compute scales independently, accessing shared storage. | Shared-Nothing (default): Scale by adding nodes (compute+storage). Shared-Disk (with object storage): Compute can scale somewhat independently of storage. |

| Storage Format | Proprietary columnar format (Micro-partitions). | Open columnar format (MergeTree engine family); Can read/write Parquet, ORC, etc., directly or via table functions. |

| Data Model | Relational; Strong semi-structured support (VARIANT, OBJECT, ARRAY). |

Relational; Strong complex type support (Nested, Tuple, Map, Array, JSON object). |

| Workload Isolation | High: Via separate Virtual Warehouses. | Lower (Self-hosted default): Shared resources on a cluster. Isolation often requires separate clusters or careful configuration. Higher (ClickHouse Cloud): Service-based isolation. |

Snowflake's architecture inherently enforces separation, leading to easier elasticity and managed scaling. ClickHouse offers flexibility (coupled or decoupled, self-managed or cloud) but requires more architectural decisions and potentially more effort (especially self-hosted) to achieve similar separation and isolation.

ClickHouse vs Snowflake—Performance & Query Execution

| 🔮 | Snowflake | ClickHouse |

|---|---|---|

| Query Speed (OLAP) | Fast for general DW tasks; Highly dependent on warehouse size, caching, and pruning. Seconds to minutes typical. | Extremely Fast: Optimized for aggregations, scans, time-series. Often sub-second/millisecond latency for well-tuned queries. Significantly faster for specific analytical patterns. |

| Execution Engine | Traditional query execution pipeline; vectorized processing elements. | Vectorized Query Execution: Processes data in batches (vectors) for high CPU efficiency. |

| Indexing & Pruning | Relies heavily on Micro-partition Metadata Pruning. Optional: Clustering Keys (sort data), Search Optimization Service (point lookups, paid). | Sparse Primary Index: Sorts data, enables efficient range scans/pruning. Data Skipping Indices: (minmax, set, bloom filter, etc.) prune granule blocks. Materialized Views. |

| Query Optimization | Sophisticated cost-based optimizer; multi-layered caching (metadata, results, local disk). | Rule-based and basic cost-based elements; relies heavily on engine design (MergeTree), vectorized execution, indices, and Materialized Views. User hints/settings influential. |

| Real-time Ingest | Near-real-time via Snowpipe (micro-batching, latency seconds to minutes). Batch COPY INTO for bulk loads. |

High-Throughput Writes: Designed for streaming inserts (though internal batching occurs). Can achieve very low ingestion latency (sub-second possible). |

| Updates/Deletes | Background re-write of affected micro-partitions (metadata pointers updated). Asynchronous. | ALTER TABLE ... UPDATE/DELETE implemented as background mutations (rewriting parts). Optimized for append-heavy, less frequent mutations. Synchronous option exists but impacts performance. |

| Compression | Automatic, efficient columnar compression. | Highly effective and tunable columnar compression (LZ4, ZSTD default, Delta, Gorilla, etc.). Often achieves higher compression ratios. |

ClickHouse is purpose-built for speed on analytical aggregations and scans, leveraging vectorized execution and efficient indexing for lower latency on specific OLAP queries. Snowflake offers strong, generally applicable performance across broader DW workloads, heavily optimized via metadata pruning and caching, with less manual tuning usually required.

ClickHouse vs Snowflake—Scalability & Concurrency

How easily can you handle more data or more users?

Both scale to petabytes, but via different mechanisms:

| 🔮 | Snowflake | ClickHouse |

|---|---|---|

| Compute Scaling | Easy & Fast: Vertical resize (change warehouse size) near-instant. Horizontal via Multi-cluster Warehouses (automatic or manual). | Self-hosted: Manual (add nodes, configure shards/replicas, rebalance data). ClickHouse Cloud: Simplified compute scaling (vertical/horizontal options available). |

| Storage Scaling | Automatic & Transparent: Leverages underlying cloud object storage. | Self-hosted: Manual (add disks/nodes). Object Storage: Scales with cloud provider limits. ClickHouse Cloud: Managed, typically leveraging object storage. |

| Scaling Architecture | Shared-Data: Compute nodes access central storage pool. | Shared-Nothing (default): Data partitioned/replicated across nodes. Shared-Disk (Object Storage): Compute nodes access shared object storage. |

| Scaling Complexity | Low: Managed via UI/SQL; decoupling simplifies operations. | High (Self-hosted): Requires careful planning, configuration (sharding keys, replication), and operational effort (rebalancing). Medium (ClickHouse Cloud): Simplified by managed service. |

| Query Concurrency | Scalable via Multi-cluster Warehouses: Each cluster handles a number of queries (default 8, adjustable). Add clusters for more concurrency. | High by Design: Nodes can handle many concurrent queries, limited by CPU/Memory/IO. Very high aggregate concurrency possible across a cluster. ClickHouse Cloud services are optimized for high concurrency. |

Snowflake provides a simpler, more elastic, and largely automated scaling experience, especially for compute and concurrency, due to its managed nature and decoupled architecture. ClickHouse (especially self-hosted) offers immense scaling potential but demands significantly more expertise for planning, configuration, and management.

ClickHouse vs Snowflake—Cost Model

| 🔮 | Snowflake | ClickHouse |

|---|---|---|

| Core Pricing | Usage-based: Compute (credits/second, warehouse size-dependent), Storage (TB/month), Serverless Features (Snowpipe, etc.). | Self-hosted: Free Open Source (pay infrastructure/operational costs). ClickHouse Cloud: Usage-based (vCPU, RAM, Storage, Data Transfer, specific features). |

| Compute Cost | Billed per second (60s min) for active warehouses. Cost determined by size (XS, S,...). Can be paused (auto-suspend). | Self-hosted: Cost of hardware/VMs. ClickHouse Cloud: Billed based on compute resource usage time/size. Often lower cost per query/unit of performance for OLAP tasks. |

| Storage Cost | Per TB/month on cloud object storage (includes Snowflake overhead/optimization). | Self-hosted: Cost of disks/object storage. ClickHouse Cloud: Per GB/month, often competitive due to potentially higher compression ratios. |

| Extra Costs | Data transfer, Snowpipe (per file/notification), Search Optimization Service, Materialized Views (compute for refresh), Fail-safe storage. | Self-hosted: Operational overhead. ClickHouse Cloud: Data transfer (egress), backups, potentially specific features depending on provider plan. |

| Cost Efficiency | Good for bursty/variable workloads due to auto-suspend. Can become expensive for sustained, high-compute tasks if not managed well. | Often More Cost-Effective for compute-intensive, sustained analytical workloads due to raw performance efficiency (less compute needed for same task). OSS offers lowest TCO if expertise exists. |

ClickHouse frequently offers better price-performance for high-throughput, low-latency analytical workloads, particularly when sustained compute is required. Snowflake's model provides flexibility and can be cost-effective for intermittent or unpredictable workloads, but requires vigilant cost management to prevent run-away compute expenses.

ClickHouse vs Snowflake—SQL Dialect, Ecosystem & Usability

| 🔮 | Snowflake | ClickHouse |

|---|---|---|

| SQL Compliance | High ANSI SQL compliance; familiar dialect for users from traditional RDBMS/DW backgrounds. | Powerful SQL dialect, but includes many unique functions and non-standard syntax extensions tailored for analytics. Learning curve can be steeper. |

| Semi-structured | Excellent native support (VARIANT, OBJECT, ARRAY) with specialized functions/operators for querying. |

Robust support (JSON, Map, Tuple, Array, Nested) with many dedicated functions, though querying can feel less integrated than VARIANT. |

| Ecosystem | Mature, extensive ecosystem; native connectors for most BI tools (Tableau, PowerBI), ETL/ELT tools, data catalogs. Snowpark for Python/Java/Scala. | Growing ecosystem; good JDBC/ODBC support, numerous third-party clients/integrations. Python clients popular. Official BI integrations improving. |

| Mutability | Updates/Deletes handled via background micro-partition rewrites. Suited for batch modifications. | ALTER mutations are background operations optimized for infrequent, bulk changes. Not ideal for transactional (OLTP) updates. |

| Community & Support | Enterprise support model from Snowflake Inc. Active user community forums. | Strong open source community. Commercial support available from ClickHouse Inc. and other vendors (Altinity). |

| Useability | High ease-of-use due to managed service, familiar SQL, UI, and extensive documentation. | Steeper learning curve, especially for self-hosted (setup, tuning, cluster management). ClickHouse Cloud significantly improves usability. |

Snowflake generally offers a smoother learning curve, broader out-of-the-box tooling integration, and more standard SQL experience, appealing to a wider range of users. ClickHouse provides immense power and flexibility with its SQL extensions and data types but may require more specialized knowledge for optimal use and integration.

ClickHouse vs Snowflake—When Would You Pick One Over the Other?

Choose Snowflake if:

- You need a versatile, general-purpose cloud data platform for diverse analytical needs (BI, ELT, reporting, data science, data sharing).

- Ease of use, minimal operational overhead, and a fully managed SaaS experience are of utmost importance.

- Elasticity and independent scaling of compute and storage are critical requirements.

- You require robust workload isolation for different teams or applications.

- Your user base includes mixed technical/non-technical users relying heavily on standard BI tools.

- Workloads are variable or bursty, benefiting significantly from per-second billing and auto-suspension.

- Native secure data sharing capabilities are important for collaboration.

- You prefer a highly ANSI SQL-compliant environment.

- You heavily utilize semi-structured data and need flexible querying capabilities (

VARIANT).

Choose ClickHouse if:

- Your primary driver is extreme query speed and low latency (sub-second) for OLAP, especially real-time dashboards and user-facing analytics.

- You are processing massive datasets (terabytes/petabytes) requiring rapid aggregations, filtering, and time-series analysis.

- Cost-efficiency at scale for high-performance, sustained analytical workloads is a major factor.

- You have high-throughput, append-heavy data streams (e.g: event logs, IoT data, metrics, observability).

- You value open source flexibility, deep customization potential (self-hosting), or need a managed service hyper-focused on analytical speed (ClickHouse Cloud).

- Very high query concurrency against raw data is essential.

- You have the technical expertise (or choose ClickHouse Cloud) to manage/tune its configuration for optimal performance.

So, wrapping up the ClickHouse vs Snowflake comparison: Snowflake offers a convenient, elastic, feature-rich, and broadly applicable managed cloud data platform perfect for general data warehousing, BI, and diverse user bases. ClickHouse provides superior performance and often better cost-efficiency for specific, demanding OLAP tasks, particularly in real-time scenarios, appealing strongly to use cases prioritizing speed, efficiency, and (optionally) open source control.

Continue reading...

ClickHouse Alternative 2—Databricks

Databricks is a Unified Data Analytics Platform, often referred to as a Lakehouse Platform. Founded in 2013 by the original creators of Apache Spark, Delta Lake and MlFlow, it aims to merge the benefits of data lakes (cost-effectiveness, flexibility for diverse data, scalability) with the performance, reliability, and governance features traditionally associated with data warehouses. It provides an integrated environment for data engineering, data science, machine learning (including Generative AI), and SQL analytics, available as a managed service on AWS, Azure, and GCP.

What is Databricks? - ClickHouse Alternatives - ClickHouse Competitors

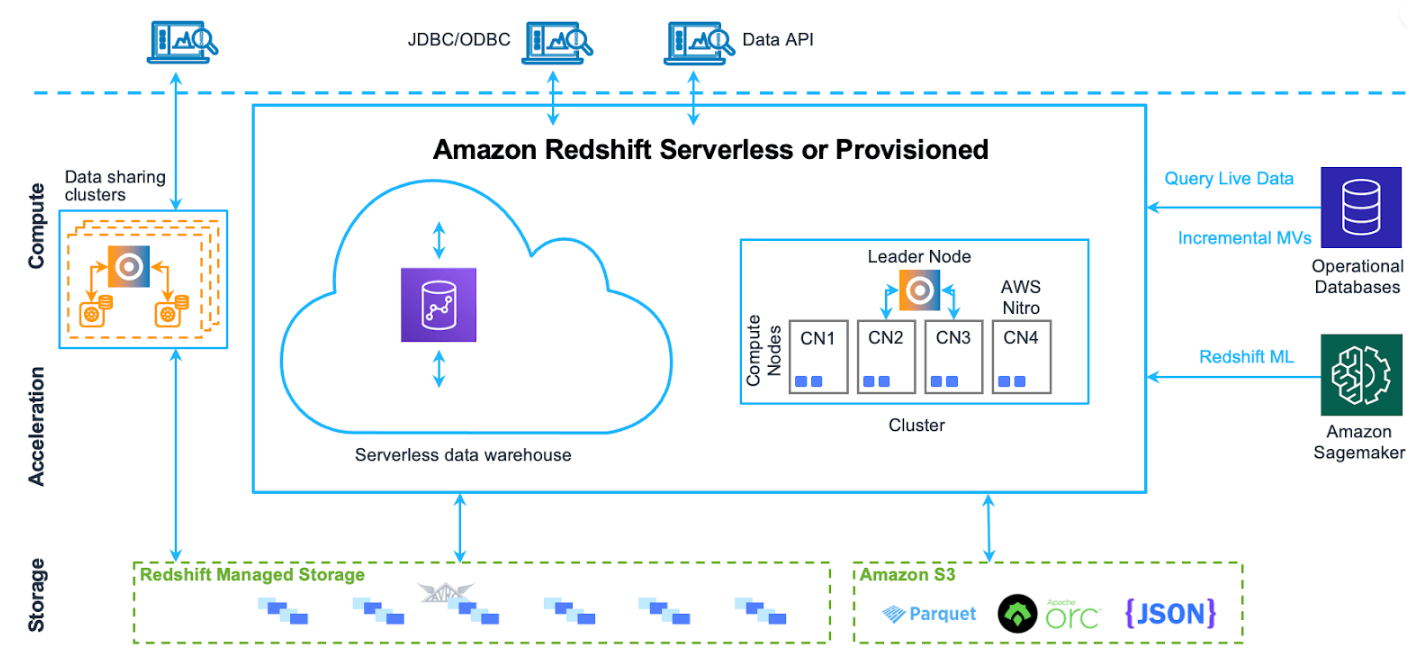

Databricks Architecture—The Lakehouse Foundation

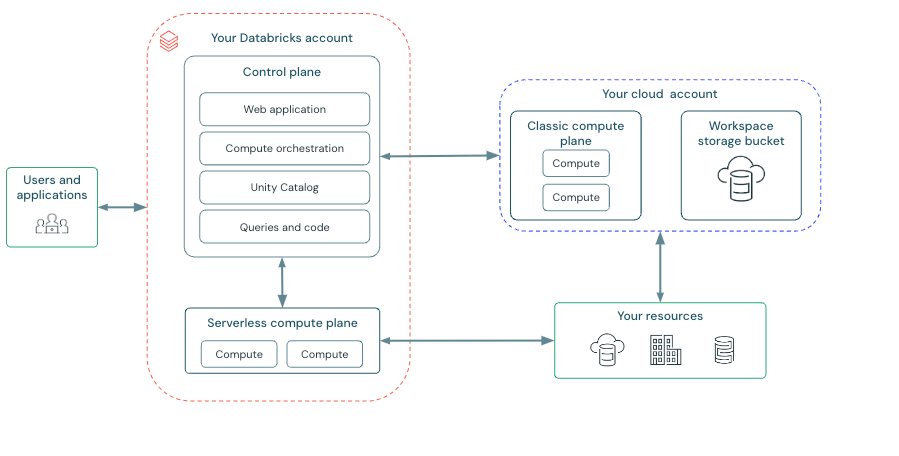

The Databricks platform architecture is logically divided into a Control Plane and a Compute Plane, built upon the customer's cloud infrastructure and storage.

➥ Control Plane

The Control Plane is basically Databricks' command center. It runs in Databricks' own cloud account. It handles backend services like the web UI, notebook and job configurations, cluster management orchestration, REST APIs, and often metadata management, especially when using Unity Catalog. User code and business data are not processed or stored here.

➥ Compute Plane

The Compute Plane is where data processing actually occurs using Databricks compute resources (clusters). There are two deployment models:

- Classic Compute Plane — Compute resources (like VMs for Spark clusters or Databricks SQL warehouses) are provisioned and run directly within your cloud account. You have visibility into these resources in your cloud console.

- Serverless Compute Plane — Compute resources run within a secure environment in Databricks' cloud account within your region. Databricks manages the instance types, scaling, patching, and optimization, offering a more hands-off experience similar to other serverless offerings.

Regardless of the model, compute is fundamentally separated from storage.

Check out the article below to learn more in-depth about the capabilities and architecture behind Databricks.

Databricks Key Features

Databricks provides a wide array of features integrated into its platform:

1) Unified Data Platform — Databricks provides a single interface for notebooks (supporting SQL, Python, Scala, R), job orchestration (Workflows), SQL querying (Databricks SQL), dashboarding, ML experimentation, and governance.

2) Optimized Apache Spark — Databricks Runtime (DBR) offers a managed, optimized version of Apache Spark with significant performance enhancements and caching mechanisms.

3) Delta Lake Integration — Databricks has native support for Delta Lake features (ACID, time travel, schema management, DML) for building reliable data lakes.

4) Databricks SQL (DSQL) — Databricks provides a dedicated SQL analytics experience with optimized SQL Warehouses (including Serverless options), query editor, dashboards, and alerts.

5) Photon Engine — Databricks comes with Photon Engine built in, which is a high-performance, C++ based vectorized execution engine accelerating SQL and DataFrame workloads.

6) MLflow for Machine Learning Lifecycle — MLflow is integrated within Databricks for tracking experiments, managing models, and automating deployments. You can track parameters, metrics, and artifacts, and even plug in with tools like OpenAI or Hugging Face for generative AI use cases.

7) Lakehouse Federation — You can query data across multiple sources, including Oracle and Hive Metastore, without moving it.

8) Collaboration Tools — Databricks provides:

- Interactive Notebooks

- Real-Time Notebook Sharing

- Version Control

9) Unity Catalog — Databricks is integrated with Unity Catalog which is centralized governance and access control for all your data assets, with REST API support for automation.

10) Delta Live Tables — Databricks comes with DLT integrated which automates and tracks your ETL pipelines with improved query tracking and maintenance features.

11) AutoML and LLM Fine-Tuning — Databricks has built-in AutoML for model selection and hyperparameter tuning, plus support for fine-tuning large language models (LLMs).

… and so much more.

Pros and Cons of Databricks:

Databricks Pros:

- Databricks handles massive data volumes and supports batch and streaming jobs.

- Databricks supports multiple languages, you can use Python, SQL, Scala, and R in the same notebook.

- Databricks supports real-time editing in notebooks which lets your team work together.

- Databricks can scale resources up or down based on workload.

- Mature ML/AI capabilities. Best-in-class integration for the end-to-end machine learning lifecycle.

- Databricks supports Delta Lake which adds ACID transactions, time travel, and schema enforcement to your data lake.

- Works with AWS, Azure, Google Cloud, and connects to popular BI (Business Intelligence) tools and data sources.

- Governance. Databricks Unity Catalog provides robust, centralized governance capabilities.

- Databricks provides extensive docs and a big community. You’ll find answers—eventually.

Databricks Cons:

- The breadth of features and underlying concepts (Spark, Delta Lake) can present a steeper learning curve than specialized tools. Configuration tuning can be very complex.

- Pricing is based on Databricks Units (DBUs) which vary by compute type, instance size, and cloud provider. Requires careful monitoring and optimization to control costs, especially for inefficient jobs or idle clusters.

- Photon is there to boosts performance... BUT optimal speed can still depend on cluster configuration, data layout (partitioning, Z-Ordering in Delta Lake), and query patterns. Not always as fast as specialized OLAP engines like ClickHouse for specific query types.

- Databricks Notebooks cap results at 10,000 rows or 2 MB. Analyzing big outputs? You’ll need to chunk or export your data.

- Large or messy notebooks become hard to manage. Searching for code snippets or outputs can get frustrating.

- Databricks is a managed service which restricts your access to underlying infrastructure. Fine-tuning hardware or networking isn’t really an option.

- Git integration is limited or clunky.

- Dependency on cloud providers. Outages or issues with your cloud provider (AWS, Azure, GCP) will impact Databricks availability and performance.

Save up to 50% on your Databricks spend in a few minutes!

Alright, now let's break down the ClickHouse vs Databricks comparison.

ClickHouse vs Databricks: What's Best for You?

We'll dig into the technical stuff, but at a high level: ClickHouse prioritizes raw query speed for OLAP tasks, while Databricks focuses on integrating the entire data lifecycle (engineering, analytics, ML) on a flexible "Lakehouse" foundation.

ClickHouse vs Databricks—Architecture & Core Focus

| 🔮 | Databricks | ClickHouse |

|---|---|---|

| Core Engine | Apache Spark (distributed processing) enhanced by Photon (C++ vectorized execution engine). | Custom C++ vectorized execution engine optimized specifically for analytical (OLAP) queries. |

| Primary Focus | Unified Platform: ETL/ELT, Streaming, SQL Analytics (BI), Data Science, ML/AI on a Lakehouse foundation. | High-performance OLAP Database: Real-time analytics, interactive dashboards, log/event analysis. |

| Storage | Decoupled: Uses Delta Lake format (over Parquet) on user's cloud object storage (S3, ADLS, GCS). | Flexible: Traditionally coupled (shared-nothing nodes with local storage). Increasingly uses decoupled object storage (esp. ClickHouse Cloud or self-managed config). Stores data in proprietary columnar format (MergeTree family). |

| Data Model | Relational schema via Delta Lake; Handles structured, semi-structured, and unstructured data effectively. | Primarily Columnar Relational DBMS. Strong support for structured and semi-structured (JSON, Map, Array, Nested). |

| Deployment | Managed cloud service on AWS, Azure, GCP. | Flexible: Self-managed (OSS), Kubernetes, Managed Service (ClickHouse Cloud, others). |

| Architecture | Lakehouse: Blends data lake flexibility (open formats, object storage) with DW reliability/performance. | Distributed Columnar Database: Optimized for fast analytical reads and aggregations. |

Databricks is architected as a comprehensive platform leveraging Spark and Delta Lake for diverse data workloads with inherent compute-storage separation. ClickHouse is a purpose-built analytical engine prioritizing query speed, offering more deployment flexibility but a narrower focus.

ClickHouse vs Databricks—Performance Profile

| 🔮 | Databricks | ClickHouse |

|---|---|---|

| Query Performance (OLAP) | Fast: Databricks SQL with Photon engine delivers competitive analytical query performance, leveraging Delta Lake optimizations (stats, Z-Ordering). | Extremely Fast: Often benchmarks faster for raw OLAP query speed (scans, aggregations, GROUP BY) due to specialized engine and storage format. Excels at low latency. |

| Query Performance (ETL/ML) | Excellent: Leverages the full power of distributed Spark for complex transformations, large-scale data processing, and ML model training. | Not Designed For This: Typically used as a source/sink for external ETL/ML tools, not for executing complex pipelines or training models internally. |

| Real-time Ingestion | Good: Spark Structured Streaming provides robust near-real-time ingestion into Delta Lake tables. Latency typically in seconds/minutes. | Excellent: Optimized for high-throughput, low-latency data ingestion (often sub-second possible). Handles millions of inserts per second. |

| Joins | Generally Strong: Spark's distributed join strategies handle large joins well. Databricks SQL optimizes joins further. | Improving: Performance varies. Best with denormalized schemas or small dimension table lookups. Large distributed joins can be less efficient than Spark/MPP DWs. |

| Concurrency | Good: Scales via clusters. Databricks SQL Warehouses are designed for high-concurrency BI workloads. Autoscaling helps manage load. | Very High: Designed for high query concurrency per node, often suitable for user-facing applications hitting the database directly. |

| Optimization Techniques | Delta Lake statistics, partition pruning, Z-Ordering/Liquid Clustering, Photon vectorized execution, Spark Adaptive Query Execution (AQE). | Vectorized execution, sparse primary index, data skipping indices, MergeTree storage engine optimizations, efficient compression, Materialized Views (pre-aggregation). |

| Update/Delete Performance | Efficient: Delta Lake's MERGE, UPDATE, DELETE operations leverage transaction log and data skipping efficiently. |

Less Efficient: ALTER TABLE ... UPDATE/DELETE performs background mutations (rewriting data parts). Optimized for append-heavy workloads; frequent updates are costly. |

ClickHouse typically offers lower latency and higher throughput for pure OLAP queries. Databricks provides strong, broad performance across SQL, ETL, and ML, with robust support for data mutability via Delta Lake.

ClickHouse vs Databricks—Scalability & Management

| 🔮 | Databricks | ClickHouse |

|---|---|---|

| Compute Scaling | Easy & Automated: Autoscaling Spark clusters and SQL Warehouses (Classic/Serverless). Serverless offers near-instant scaling. | Self-hosted: Manual horizontal scaling (add nodes/shards, rebalance). ClickHouse Cloud: Managed scaling options available (vertical/horizontal). |

| Storage Scaling | Independent & Automatic: Scales seamlessly with cloud object storage capacity. | Self-hosted: Scales with node storage capacity. Object Storage: Scales with cloud provider limits. ClickHouse Cloud: Managed, uses object storage. |

| Architecture | Decoupled Storage & Compute: Inherent in the Lakehouse design using object storage. | Flexible: Can be Coupled (OSS default) or Decoupled (ClickHouse Cloud / Object Storage config). |

| Management | Fully Managed Platform: Databricks handles infrastructure, patching, Spark/Photon optimization. Unity Catalog for governance. | Self-hosted: Significant operational overhead (setup, tuning, upgrades, scaling). ClickHouse Cloud: Managed service reduces operational burden. |

Databricks offers a more automated, elastic, and managed scaling experience due to its cloud-native, decoupled architecture. ClickHouse provides high scalability potential but requires more manual effort and expertise when self-hosted.

ClickHouse vs Databricks—Feature Ecosystem & Transactions

| 🔮 | Databricks | ClickHouse |

|---|---|---|

| SQL Support | Supports ANSI SQL via Databricks SQL endpoint. Also native Spark SQL dialect. Multi-language Notebooks (Python, Scala, R, SQL). | Extended SQL dialect with powerful analytical functions; some non-standard syntax/behavior. Primarily SQL interface. |

| ML/AI Integration | Core Strength: Deeply integrated with MLflow, libraries (scikit-learn, TensorFlow, PyTorch), AutoML features, LLM support. Model serving capabilities. | Limited Built-in: Primarily serves as a fast data source/sink for external ML frameworks and tools. Some basic ML functions exist. |

| Transactions | ACID Transactions: Provided by Delta Lake for data reliability and consistency on object storage. | Limited: No traditional ACID transactions. Mutations are atomic but eventual consistency applies to replicas. Designed for analytical immutability patterns. |

| Data Formats | Primary: Delta Lake (on Parquet). Excellent support for reading/writing Parquet, ORC, CSV, JSON, Avro, etc. Handles unstructured data well. | Primary: MergeTree family. Can read/write Parquet, ORC, CSV, JSON via functions/engines but optimized for internal format. Limited unstructured data support. |

| Governance | Strong: Centralized via Unity Catalog (access control, lineage, audit, discovery, sharing). | Basic role-based access control (RBAC). Governance relies more on external tools or manual processes. |

| Ecosystem | Broad partner ecosystem, extensive connectors via Spark, strong BI tool integration (esp. via Databricks SQL). | Growing ecosystem, especially strong in observability/monitoring (Grafana, Prometheus). Standard JDBC/ODBC drivers. |

Databricks provides a much broader feature set, particularly excelling in multi-language support, deeply integrated ML/AI capabilities, ACID transactions via Delta Lake, and unified governance. ClickHouse focuses intently on maximizing analytical query performance with a powerful, specialized SQL dialect.

ClickHouse vs Databricks—When Would You Pick One Over the Other?

Okay, decision time. Here's a quick rundown:

Choose Databricks if:

- You require a unified platform spanning data engineering (ETL/ELT), SQL analytics, streaming, data science, and machine learning.

- Workloads involve significant data transformations, complex pipeline dependencies, or ML model training integrated with your data storage.

- Robust, integrated ML/AI capabilities (MLflow, libraries, deployment) are a core requirement.

- You are building a Lakehouse architecture leveraging open formats like Delta Lake on cloud object storage.

- A fully managed cloud service abstracting infrastructure and providing automated features (scaling, optimization) is preferred.

- Multi-language support (Python, Scala, R alongside SQL) is essential for your teams.

- ACID transactions on your analytical data are necessary for reliability and consistency.

- Centralized data governance (access control, lineage, auditing) across diverse assets is critical.

Choose ClickHouse if:

- Your absolute top priority is extreme low-latency (sub-second) OLAP query performance, especially for real-time dashboards, analytics APIs, or user-facing features.

- You are analyzing massive volumes of append-heavy data like time-series, events, logs, or metrics where fast aggregations are key.

- Cost-efficiency for high-throughput analytical compute is a major decision factor.

- Very high data ingestion rates (millions of rows/sec) with low latency are required.

- You need a specialized analytical database and plan to handle complex ETL or ML tasks using separate, dedicated systems.

- High query concurrency against the analytical store is a fundamental need.

- You prioritize performance per hardware unit and have the expertise for self-hosting (or choose ClickHouse Cloud for a managed option).

So, wrapping up the ClickHouse vs Databricks comparison: Both are powerful, but they solve different core problems. Databricks provides breadth and integration across the data lifecycle on an open lakehouse foundation. ClickHouse provides unparalleled depth and speed for specific, demanding OLAP query scenarios, excelling where raw analytical performance is the most critical factor. They are both powerful ClickHouse alternatives in different contexts, serving distinct primary needs.

Continue reading...

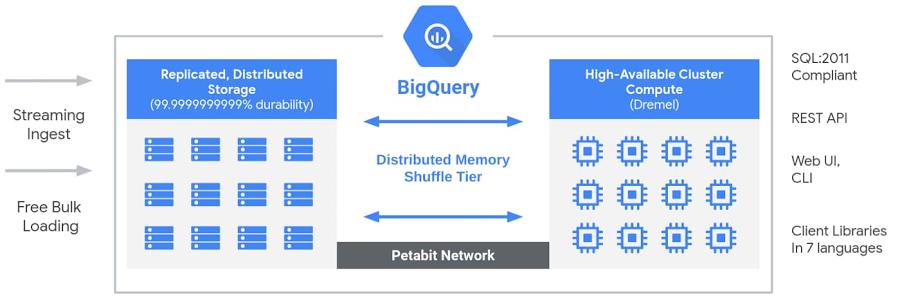

ClickHouse Alternative 3—Google BigQuery

Google BigQuery is Google Cloud's fully managed, serverless, petabyte-scale data warehouse. It distinguishes itself through its serverless architecture, abstracting infrastructure management and allowing users to focus on querying data using standard SQL. It's designed for large-scale analytics and integrates deeply within the Google Cloud Platform (GCP).

Google BigQuery in a minute - ClickHouse Alternatives - ClickHouse Competitors

Google BigQuery's Architecture: Serverless Power

Google BigQuery achieves its scale and serverless nature by leveraging several core Google infrastructure technologies, fundamentally separating storage and compute:

➥ Storage Layer (Colossus + Capacitor)

- Data resides physically within Colossus, Google's global-scale distributed filesystem.

- BigQuery stores table data using Capacitor, its proprietary columnar storage format, optimized for reading semi-structured data efficiently. Capacitor organizes data by column, applies advanced compression, encrypts data at rest, and physically layouts data to maximize parallelism for Dremel.

- Storage is fully managed by Google, scaling transparently and independently of compute. Users interact with logical tables; physical sharding and replication are handled automatically.

➥ Compute Layer (Dremel Engine)

- Query execution is powered by Dremel, Google's large-scale distributed SQL query engine, architected as a Massively Parallel Processing (MPP) system.

- Dremel utilizes a multi-level serving tree: Incoming SQL queries hit a root server, which parses the query, reads metadata, and dispatches query stages to intermediate "mixer" nodes and finally to thousands of leaf nodes or "slots".

- Slots are the fundamental units of computation, representing an abstracted bundle of CPU, RAM, and network resources dynamically allocated for a query. Thousands of slots can operate in parallel, each scanning a portion of the data directly from the Capacitor storage layer.

➥ Execution Coordination & Data Shuffling (Borg & Jupiter)

- Borg, Google's cluster management system, orchestrates the allocation of hardware resources (slots) required by Dremel for each query stage.

- Jupiter, Google's internal petabit-scale network fabric, facilitates extremely fast data shuffling between slots during query execution phases involving large-scale JOINs, aggregations, or window functions.

This architecture allows BigQuery to dynamically provision potentially massive amounts of compute resources tailored to each query's complexity, executing it rapidly without requiring users to perform manual capacity planning or cluster management (especially in the on-demand model).

Core Strength of Google BigQuery

Google BigQuery offers a range of capabilities built upon its serverless architecture:

1) Serverless Operation — No infrastructure (servers, clusters) for users to provision, manage, or tune. Google handles all underlying operations.

2) Automatic Scaling & High Availability — Compute resources (slots) scale automatically based on query complexity and data size (on-demand) or provisioned capacity. Storage scales seamlessly. High availability and durability are built-in.

3) Separation of Storage and Compute — Google BigQuery allows independent scaling and pricing for storage and compute resources.

4) Standard SQL Support — Google BigQuery is compliant with the ANSI:2011 SQL standard, providing a familiar interface for analysts.

5) Google BigQuery ML (BQML) — Enables users to train, evaluate, and predict with machine learning models (regression, classification, clustering, time series forecasting, matrix factorization, DNNs via TensorFlow integration) directly within BigQuery using SQL syntax, minimizing data movement.

6) Google BigQuery Storage APIs:

- Storage Write API — High-throughput gRPC-based API for efficient streaming ingestion (preferred over older

tabledata.insertAll) and batch loading, offering exactly-once semantics within streams. - Storage Read API — High-throughput gRPC-based API allowing direct, parallelized data access from BigQuery storage into applications or frameworks like Spark, TensorFlow, or Pandas, often faster and cheaper than exporting data.

7) Google BigQuery BI (Business Intelligence) Engine — An in-memory analysis service that caches frequently accessed data used by BI (Business Intelligence) tools (Looker, Looker Studio, Tableau, etc.), accelerating dashboard performance and providing sub-second query latency for interactive exploration.

8) Geospatial Analytics (GIS) — Native support for geography data types (points, lines, polygons based on WGS84 spheroid) and standard GIS functions.

9) Federated Queries — Ability to query data residing in external sources (Google Cloud Storage files, Cloud SQL, Spanner, Cloud Bigtable) directly without loading it into Google BigQuery’s managed storage.

10) Flexible Pricing Models — Google BigQuery offers various pricing models:

- On-Demand — Pay per terabyte (TB) of data processed by queries. Includes a monthly free processing tier. Best for variable or unpredictable workloads.

- Capacity Pricing (Slots) — Purchase dedicated processing capacity (slots) via Flex Slots (minimum 60-second commitment) or monthly/annual commitments. Available in Standard, Enterprise, and Enterprise Plus editions, offering varying features, performance levels, and pricing for predictable costs on sustained workloads. Storage is billed separately based on data volume (active vs long-term).

11) Security & Governance — Google BigQuery easily integrates with GCP IAM for authentication/authorization; supports column-level and row-level security, data masking, VPC Service Controls, customer-managed encryption keys (CMEK), detailed audit logging via Cloud Logging, and integration with Data Loss Prevention (DLP) API.

12) Data Management & Optimization — Google BigQuery supports table partitioning (by time-unit, integer range, or ingestion time) and table clustering (by specified columns) to optimize query performance and cost by pruning data scanned based on query predicates.

…and so much more!

Pros and Cons of Google BigQuery:

Google BigQuery Pros:

- Google BigQuery runs on a serverless model with no infrastructure to manage.

- Google BigQuery scales instantly to petabyte‑size data warehouses without cluster planning.

- Google BigQuery executes SQL queries over terabytes of data in seconds using Dremel‑based columnar storage.

- Google BigQuery supports the standard SQL dialect (plus legacy SQL) so analysts don’t face a steep learning curve.

- Google BigQuery includes BigQuery ML for training and using machine learning models with simple SQL commands.

- Google BigQuery offers federated queries to run statements on external databases like Cloud SQL and Spanner.

- Google BigQuery integrates natively with Google Cloud services such as Storage, Pub/Sub, and Dataflow.

- Google BigQuery provides flexible pricing with on‑demand and flat‑rate options to match different workload patterns.

- Google BigQuery encrypts data at rest and in transit and enables fine‑grained access via IAM policies.

- Google BigQuery auto‑backs up data and maintains high availability through built‑in replication.

Google BigQuery Cons:

- Google BigQuery charges per TB scanned in on‑demand mode, which can lead to unpredictable query costs.

- Choosing the right edition, number of slots, and commitment type requires careful analysis to be cost-effective.

- Users have less direct control over query execution compared to systems like ClickHouse. Optimization relies mainly on SQL patterns, partitioning, and clustering.

- Google BigQuery can exhibit higher initial latency (seconds) for simple or small queries compared to low-latency optimized engines, making it less ideal for real-time interactive applications needing millisecond responses.

- DML limitations. Subject to quotas on Data Manipulation Language (UPDATE, DELETE, INSERT) frequency and volume; not designed for high-frequency transactional workloads (OLTP).

- Exporting large datasets out of GCP can incur significant network egress charges.

- Scheduled queries can only run at 15‑minute minimum intervals, which restricts near‑real‑time workflows.

- Query performance can vary due to slot contention or cold‑start delays.

- BigQuery is exclusively available on Google Cloud Platform.

Let's get into the ClickHouse vs BigQuery showdown. Both are major players that can handle massive data loads, but they take different approaches and excel in different spots.

ClickHouse vs BigQuery: What's Best for You?

Choosing between them really hinges on what you need to do, how fast you need it, and what your setup looks like.

Clickhouse vs BigQuery—Architecture & Management

| 🔮 | Google BigQuery | ClickHouse |

|---|---|---|

| Core Design | Fully managed, serverless cloud data warehouse. | Open source columnar analytical database (DBMS). |

| Deployment Model | Exclusively on Google Cloud Platform (GCP) as a managed service. | Flexible: Self-hosted (on-prem, any cloud), Kubernetes, Managed Service (ClickHouse Cloud on GCP/AWS/Azure, others). |

| Storage/Compute | Fully Decoupled: Storage (Colossus/Capacitor) and Compute (Dremel/Slots) scale and operate independently. | Flexible: Coupled (OSS default, shared-nothing) or Decoupled (ClickHouse Cloud / Object Storage config). |

| Management | Zero-Ops: Fully managed by Google. Users manage data and queries, not infrastructure. | High (Self-hosted): Requires significant setup, tuning, scaling, upgrades. Low (ClickHouse Cloud): Managed service option. |

| Resource Model | Abstracted: Compute measured in Slots (dynamic resource units). Users don't manage VMs/nodes directly. | Direct: Resources map directly to hardware (CPU, RAM, Disk - OSS) or specific service tiers (ClickHouse Cloud). |

| Storage Format | Proprietary columnar format (Capacitor) on Colossus filesystem. | Proprietary columnar format (MergeTree engine family) on local disk or cloud object storage. Known for high compression. |

BigQuery offers extreme operational simplicity via its serverless, GCP-native model. ClickHouse provides deployment flexibility (multi-cloud, on-prem, managed) and more direct control over resources and configuration, especially when self-hosted.

Clickhouse vs BigQuery—Performance Profile

| 🔮 | Google BigQuery | ClickHouse |

|---|---|---|

| Query Speed (OLAP) | Good to Fast: Excels at large-scale scans/joins/aggregations due to massive parallelism. Latency typically seconds to tens of seconds. Slower for small/simple queries. | Extremely Fast: Optimized for low latency (often sub-second) on analytical queries, especially scans, aggregations, time-series analysis. High throughput. |

| Real-time Ingestion | Good: High throughput via Storage Write API (gRPC streaming). End-to-end latency typically seconds. | Excellent: Very high throughput batch inserts. Kafka engine & integrations support near-real-time. Lower ingestion latency often achievable. |

| Tuning & Optimization | Limited User Control: Relies on Google's auto-optimization. Users influence via schema (partitioning/clustering) and SQL patterns (declarative optimization). | Extensive Tuning: Fine-grained control via primary/skipping indexes, Materialized Views, engine settings, hardware config (imperative tuning). Requires expertise. |

| Performance Consistency | Variable (On-Demand): Can fluctuate due to shared resources. More Predictable (Capacity): Dedicated slots offer steadier performance. |

Generally Predictable: Latency is more consistent when cluster is adequately resourced and tuned for the workload. |

| Complex Queries (Joins) | Handles very large, complex joins well due to distributed shuffle network (Jupiter) and massive parallelism. | Performance varies; large distributed joins can be less efficient than BigQuery/Spark. Best with denormalization or smaller dimension joins. |

ClickHouse typically delivers significantly lower latency for targeted OLAP queries and offers more tuning levers. BigQuery provides effortless scaling for extremely large or complex ad-hoc queries and handles massive joins robustly, albeit often with higher baseline latency.

Clickhouse vs BigQuery—Scalability & Concurrency

| 🔮 | Google BigQuery | ClickHouse |

|---|---|---|

| Scalability Approach | Serverless & Automatic: Managed entirely by Google, scales transparently based on demand or capacity. | Horizontal: Scales by adding nodes/shards. Requires manual configuration/rebalancing (OSS) or managed service features (ClickHouse Cloud). |

| Compute Scaling | Automatic: Scales via dynamic slot allocation (on-demand) or provisioned slot capacity (Editions). | Manual/Managed: Add compute nodes/VMs (OSS) or adjust managed service tiers (ClickHouse Cloud). |

| Storage Scaling | Automatic & Independent: Managed by Google on Colossus. | Manual/Managed: Add disk/nodes (OSS coupled) or relies on object storage scaling (decoupled). |

| Concurrency | High: Default quotas allow many concurrent queries (e.g: 100+ interactive). Managed via slot availability. | Very High: Designed for high concurrency; limits depend on node resources/cluster size/configuration. Often supports thousands per service. |

BigQuery offers unparalleled ease of scaling. ClickHouse provides high scalability potential but requires more planning and active management if self-hosted.

Clickhouse vs BigQuery—Data Handling, SQL, & Ecosystem

| 🔮 | Google BigQuery | ClickHouse |

|---|---|---|

| SQL Dialect | Standard SQL (ANSI:2011 compliant). Familiar for most analysts. | Extended SQL dialect with powerful analytical functions, array/map manipulation, and some non-standard syntax optimized for performance. |

| Updates/Deletes | Standard SQL UPDATE/DELETE/MERGE DML statements. Subject to quotas and not optimized for high-frequency (OLTP) use. |

Limited via asynchronous ALTER TABLE... UPDATE/DELETE mutations. Optimized for append-heavy workloads; mutations are background rewrite operations. |

| Materialized Views | Supported, with automatic refresh and smart tuning options. | Supported, powerful mechanism often used for incremental aggregation and query acceleration. Requires manual definition. |

| Ecosystem | Deep GCP Integration: Native connectors to Dataflow, Pub/Sub, Dataproc, AI Platform, Cloud Functions, Looker/Looker Studio. Federated Queries. | Strong Open Source & Observability: Integrates well with Kafka, Prometheus, Grafana, Vector DBs. Reads many formats/sources (S3, GCS, HDFS, JDBC/ODBC). Growing connectors. |

BigQuery provides standard SQL and seamless integration within the GCP ecosystem. ClickHouse offers a more specialized, powerful SQL dialect for analytics and strong integrations within open source and observability stacks. BigQuery has better support for standard DML, while ClickHouse focuses on append-heavy patterns.

Clickhouse vs BigQuery—Cost Framework

| 🔮 | Google BigQuery | ClickHouse |

|---|---|---|

| Model | Dual: On-demand (per TB scanned) or Capacity (per slot-hour/committed, via Editions). Storage billed separately (active/long-term rates). | Flexible: OSS is free (pay infrastructure/operational costs). Managed ClickHouse Cloud typically usage-based (compute, storage, data scan/transfer). |

| Cost Driver | Data scanned (On-Demand) or Slot reservation/commitment (Capacity). | Hardware/Instance resources (OSS) or Managed service tier/usage metrics (ClickHouse Cloud). |

| Predictability | On-demand can be highly variable. Capacity offers predictable compute costs. | Self-hosted depends on infrastructure stability. Managed Cloud plans often offer better predictability based on chosen tier/commitments. |

| Efficiency | Pay-per-scan incentivizes strict query optimization (partitioning/clustering crucial). Can be cost-effective for sporadic use. | High performance & compression often lead to lower resource needs (compute/storage) for the same analytical task, potentially yielding better price-performance. |

ClickHouse's efficiency often makes it more cost-effective for sustained, high-volume analytical workloads. BigQuery's serverless on-demand model can be cheaper for infrequent or exploratory usage but requires careful optimization or capacity planning to control costs under heavy load.

When Would You Choose BigQuery vs ClickHouse?

Choose Google BigQuery if:

- You are deeply integrated into the Google Cloud Platform (GCP) ecosystem.

- Minimal operational overhead and a fully managed, serverless experience are top priorities.

- Your primary use cases involve large-scale reporting, ad-hoc data exploration, BI dashboards, where multi-second latency is generally acceptable.

- You need effortless, automatic scaling for unpredictable workloads or massive datasets without manual intervention.

- Your team prefers standard SQL and prioritizes ease of use over performance tuning control.

- Seamless integration with specific GCP services like BQML, AI Platform, Dataflow, or Looker is crucial.

- Built-in Geospatial (GIS) capabilities are required.

Choose ClickHouse if:

- Extreme low-latency query performance (sub-second) is essential for your application (e.g: real-time dashboards, analytics APIs, user-facing features).

- You are processing high volumes of time-series, event, log, or telemetry data requiring rapid aggregation and filtering.

- High data ingestion throughput and high query concurrency are critical operational requirements.

- You need fine-grained control over performance tuning, system configuration, and hardware/resource allocation (especially if self-hosting).

- Storage efficiency (due to high compression) and potentially better cost-performance for compute-intensive analytical workloads are major drivers.

- You require advanced analytical SQL functions or specialized data types (Arrays, Maps, LowCardinality) for optimization.